Gentle Introduction to LLMs

Last Updated on June 29, 2024 by Editorial Team

Author(s): Saif Ali Kheraj

Originally published on Towards AI.

The LLM market is expected to grow at a CAGR of 40.7%, reaching USD 6.5 billion by the end of 2024, and rising to USD 140.8 billion by 2033.

Given the size of this market and the demand across industries, it is critical to understand how these models are already being applied. Here are a few examples.

Applications by Industry

Retail and Ecommerce: This market segment can leverage LLMs for customized shopping experiences and recommendations.

Media and Entertainment: This segment is also expected to grow rapidly, with LLMs contributing to personalized content recommendations and innovative storytelling formats.

Supply Chain Management: LLMs can provide predictability and control over supply and demand, with applications in vendor selection, financial data analysis, and supplier performance evaluation.

Healthcare, Customer Service, and Education: LLMs can power question-answering systems that enhance communication and problem-solving. In healthcare, they can also analyze clinical notes.

Traditional RNNs and Pathway to Transformers

Scaling is a major issue for traditional RNNs. Their short-term memory makes long sequences hard to handle, and because they process tokens one at a time, they are difficult to parallelize. For tasks like translation and summarization, traditional RNNs also struggle to align each output word with the relevant input words. This is where the transformer comes in.

Example Sentence:

“John lent the book to Mary because she wanted to read it.”

Transformers use attention weights to determine the context and relationships between words. The model uses the attention mechanism to determine that “John” lent the book.

“Mary” is the recipient who wishes to read the book. The word “book” is central to the context and is associated with both “John” and “Mary.”

“Because” indicates the reason. By assigning attention weights, the model learns how each word relates to the others, regardless of their position. This understanding is critical for correctly interpreting and producing context-appropriate text.

To build intuition, we can make use of attention maps.

An attention map visualizes the attention weights and shows how strongly each word is linked to every other word.
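To make the idea of an attention map concrete, here is a minimal sketch of scaled dot-product attention weights in plain Python. The three-dimensional vectors are made up for illustration, and each vector is used directly as both query and key. A real transformer learns separate query and key projections, so treat this as a toy under those assumptions rather than what a trained model computes.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_map(vectors):
    """Scaled dot-product attention weights, one row per token.

    Assumption: each vector serves as both query and key
    (no learned projections, unlike a real transformer).
    """
    d = len(vectors[0])
    weights = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights.append(softmax(scores))
    return weights

# Hypothetical toy vectors; a trained model would learn these.
tokens = ["John", "book", "Mary", "she"]
toy_vectors = [
    [1.0, 0.0, 0.2],
    [0.0, 1.0, 0.0],
    [0.9, 0.1, 1.0],
    [0.8, 0.0, 1.1],
]

weights = attention_map(toy_vectors)
for token, row in zip(tokens, weights):
    # Each row shows how much this token attends to every token in the list.
    print(token.ljust(5), [round(w, 2) for w in row])
```

Each printed row is one row of the attention map. With these toy vectors, the row for “she” puts more weight on “Mary” than on “John”, which is the kind of link an attention map is meant to reveal.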

Transformer Architecture

Input to the Model: We will input text to the model. For example: I love books.

Tokenized Inputs: We then tokenize the text into [“I”, “love”, “books”].

Input IDs: We then convert the tokens to integers, called input IDs, for example [342, 500, 200].

Embedding: Each token is represented as an embedding. The idea is that these vectors learn to store the meaning and context of individual tokens in the input sequence. This is similar to word2vec.

Positional Encoding: We then add positional encoding so that the model retains the order of the words in the sequence.

Attention Layer: The result is passed to the multi-head attention layer, as illustrated in Figure 5. This layer captures the contextual dependencies between words. Rather than a single attention computation, the layer runs several attention heads in parallel, which is why it is named “multi-headed self-attention.” Each head learns something different.

Feed Forward Neural Network: The attention output is then passed through a feed-forward neural network, and the model ultimately produces logits, one score for every token in the vocabulary.

Decoder: Depending on the specific architecture and task (such as in sequence-to-sequence models), the logits will be passed to a decoder for further processing. The decoder generates the final output sequence based on the encoded information.
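The first steps above (tokenize, map to input IDs, embed, add positional encoding) can be sketched in plain Python. Everything here is a toy under stated assumptions: the whitespace tokenizer, the three-word vocabulary, and the random embedding table are hypothetical stand-ins for what a real model learns, and the sinusoidal positional encoding is one common choice, not necessarily the one a given model uses.

```python
import math
import random

text = "I love books"

# 1. Tokenize: a naive whitespace split (real models use subword tokenizers).
tokens = text.split()

# 2. Input IDs: look up each token in a toy vocabulary (hypothetical IDs).
vocab = {"I": 342, "love": 500, "books": 200}
input_ids = [vocab[t] for t in tokens]

# 3. Embedding: one vector per ID; random here, learned in a real model.
d_model = 8
rng = random.Random(0)
embedding_table = {
    token_id: [rng.uniform(-1, 1) for _ in range(d_model)]
    for token_id in vocab.values()
}
embeddings = [embedding_table[token_id] for token_id in input_ids]

# 4. Positional encoding: sinusoidal scheme (an assumption, see lead-in).
def positional_encoding(position, d_model):
    pe = []
    for i in range(d_model):
        # Dimensions come in (sin, cos) pairs that share one frequency.
        angle = position / (10000 ** (2 * (i // 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

# The attention layer receives embedding + positional encoding per token.
model_input = [
    [e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
    for pos, emb in enumerate(embeddings)
]

print(tokens, input_ids, len(model_input), len(model_input[0]))
```

The result is one vector of length d_model per token, which is what the attention layer consumes.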

Different Architectures within Transformers with Applications:

The table above depicts three model types that can be leveraged for a variety of use cases.

Let us now turn our attention towards Prompt Engineering.

Prompt Engineering

Prompt: A prompt consists of an instruction followed by context, which the model uses to produce an output.

Here we have three types of inference: zero-shot, one-shot, and few-shot (two or more examples).

This all comes under in-context learning, which refers to a model's ability to perform tasks by using examples provided in the input prompt, without further training or finetuning. For instance, in zero-shot inference, we just ask the model to classify the sentiment without providing an example. In one-shot inference, we provide one example and ask the model to produce the output for a second review. In summary, the model uses these examples to understand the task and applies this understanding to new inputs within the same prompt.
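As a rough illustration of how zero-shot and one-shot prompts differ, the sketch below assembles both for the sentiment task. The function name build_prompt, the instruction wording, and the sample reviews are all hypothetical. The only point is that the examples live inside the prompt text itself.

```python
def build_prompt(review, examples=()):
    """Build a sentiment-classification prompt with zero or more worked examples."""
    parts = ["Classify the sentiment of this review."]
    for example_review, label in examples:
        # Each worked example shows the model the expected input/output format.
        parts.append(f"Review: {example_review}\nSentiment: {label}")
    # The final review is left unlabeled for the model to complete.
    parts.append(f"Review: {review}\nSentiment:")
    return "\n\n".join(parts)

# Zero-shot: instruction plus the review, no examples.
zero_shot = build_prompt("The plot was dull and slow.")

# One-shot: the same request, preceded by one labeled example.
one_shot = build_prompt(
    "The plot was dull and slow.",
    examples=[("I loved this movie!", "Positive")],
)

print(one_shot)
```

Passing more (review, label) pairs in examples gives a few-shot prompt with no other changes.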

Smaller models can benefit from one-shot or two-shot inference, while larger models often perform well with no examples. However, if the model still performs poorly after being given five or six examples, we should try finetuning it instead.

Generative Configs — Inference Parameters

Each model exposes a set of configuration parameters that can influence the model’s output during inference. Note that these are different from the training parameters, which are learned during training. Instead, these configuration parameters are set at inference time and give you control over things like the maximum number of tokens in the completion and how creative the output is.

The Hugging Face documentation at https://huggingface.co/docs/transformers/en/main_classes/text_generation lists several text-generation parameters that are vital to understand.

1. Max New Tokens: This is the maximum number of tokens that the model will generate. It caps how much text the model produces.

2. Greedy vs. Random Sampling: As we already know, the model generates a probability distribution over the entire vocabulary. In the greedy approach, we always pick the word with the highest probability as the next word. However, this can produce repeated words and lacks diversity. To increase diversity, we can sample randomly from the probability distribution, so that any word can be selected in proportion to its probability. The output may then be too random or too creative. To limit this, we can use the top k and top p parameters.

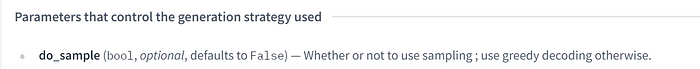

We will set do_sample to True if we want to use random sampling.

3. Top K: The tokens are sorted by probability, and the next token is sampled only from the k most probable ones.

4. Top P: The tokens are sorted by their probability scores in descending order. Then the cumulative probability is computed, starting from the highest-probability token and moving down, until it reaches a predefined threshold p. The next token is then sampled from this set.

5. Temperature: The temperature is a hyperparameter that controls the randomness of the predictions by scaling the logits before applying softmax. In simple terms, it affects how much the model is willing to take risks when generating text.

- A temperature of 1.0 makes the model use the probabilities directly from the logits (no scaling).

- A lower temperature makes the model's output more predictable and repetitive. It concentrates probability on the most likely tokens, reducing randomness.

- At high temperatures, the model’s output is more varied and creative. Less probable tokens become more likely to be selected, increasing randomness.
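The parameters above can be combined into one small sampling routine. The sketch below is a simplified, standalone illustration in plain Python. The function sample_next_token and the toy logits are hypothetical, and the argument names only mirror the Hugging Face parameter names (do_sample, temperature, top_k, top_p). It is not the library's implementation, and details such as renormalizing probabilities between the top k and top p steps are omitted.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None,
                      do_sample=True, rng=random):
    """Pick the next token from a {token: logit} dict (temperature must be > 0)."""
    if not do_sample:
        # Greedy decoding: always take the highest-scoring token.
        return max(logits, key=logits.get)

    # Temperature: divide the logits before applying softmax.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    m = max(scaled.values())
    exps = {tok: math.exp(s - m) for tok, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)

    # Top k: keep only the k most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top p: keep the smallest prefix whose cumulative probability reaches top_p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in ranked:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = kept

    # Sample among the surviving tokens, weighted by their probabilities.
    tokens = [tok for tok, _ in ranked]
    probs = [p for _, p in ranked]
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical logits for four candidate next tokens.
toy_logits = {"cake": 2.0, "donut": 1.0, "banana": 0.5, "apple": -1.0}

print(sample_next_token(toy_logits, do_sample=False))             # greedy
print(sample_next_token(toy_logits, top_k=2))                     # top k sampling
print(sample_next_token(toy_logits, top_p=0.9, temperature=0.7))  # top p + temperature
```

With do_sample=False the call always returns the highest-scoring token, while the sampled calls can return different tokens from run to run unless a seeded rng is passed in.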

Conclusion

These concepts matter a great deal in the later stages of LLM finetuning. The initial stage involves using an existing model and experimenting with different prompting strategies (zero-shot, one-shot, and few-shot) and inference parameters.

References:

Large Language Model Market: Growth to USD 140.8 Billion by 2033. Projected CAGR of 40.7%. www.linkedin.com

Generation. Hugging Face Transformers documentation. huggingface.co

Generative AI with Large Language Models. www.coursera.org


