Learn Prompting 101: Prompt Engineering Course & Challenges

Last Updated on November 12, 2023 by Editorial Team

Introduction

The capabilities and accessibility of large language models (LLMs) are advancing rapidly, leading to widespread adoption and increasing human-AI interaction. Reuters reported research recently estimating that OpenAI’s ChatGPT already reached 100 million monthly users in January, just two months after its launch! This raises the importance of the question; how do we talk to models such as ChatGPT and how do we get the most out of them? This is prompt engineering. While we expect the meaning and methods to evolve, we think it could become a key skill and might even become a common standalone job title as AI, Machine Learning, and LLMs become increasingly integrated into everyday tasks.

This article will explore what prompt engineering is, its importance & challenges, and provide an in-depth review of the Learn Prompting course designed for the practical application of prompting for learners of all levels (minimal knowledge of machine learning is expected!).

Learn Prompting is an open-source, interactive course led by @SanderSchulhoff and contributed to by Towards AI and tons of generous contributors. Towards AI is also teaming up with Learn Prompting to launch the HackAPrompt Competition, the first-ever prompt hacking competition. Participants do not need a technical background and will be challenged to hack several progressively more secure prompts. Stay tuned in our Learn AI Discord community or the Learn Prompting’s Discord community for full details and information about prizes and dates!

What is Prompting?

Generative AI models primarily interact with the user through textual input. Users can instruct the model on the task by providing a textual description. What users ask the model to do in a broad sense is a “prompt”. “Prompting” is how humans can talk to artificial intelligence (AI). It is a way to tell an AI agent what we want and how we want it using adapted human language. A prompt engineer will translate your idea from your regular conversational language into clearer and optimized instructions for the AI.

The output generated by AI models varies significantly based on the engineered prompt. The purpose of prompt engineering is to design prompts that elicit the most relevant and desired response from a Large Language Model (LLM). It involves understanding the capabilities of the model and crafting prompts that will effectively utilize them.

For example, in the case of image generation models, such as Stable Diffusion, the prompt is mainly a description of the image you want to generate. And the precision of that prompt will directly impact the quality of the generated image. The better the prompt, the better the output.

Why is Prompting Important?

Prompting serves as the bridge between humans and AI, allowing us to communicate and generate results that align with specific needs. To fully utilize the capabilities of generative AI, it’s essential to know what to ask and how to ask it. Here is why prompting is important:

- By providing a specific prompt, it’s possible to guide the model to generate output that is most relevant and coherent in context.

- Prompting allows users to interpret the generated text in a more meaningful way.

- Prompting is a powerful technique in generative AI that can improve the quality and diversity of the generated text.

- Prompting increases control and interpretability, and reduces potential biases.

- Different models will respond differently to the same prompting, and understanding the specific model can generate precise results with the right prompting.

- Generative models may hallucinate knowledge that is not factual or incorrect. Prompting can guide the model in the right direction by prompting it to cite correct sources.

- Prompting allows for experimentation with diverse types of data and different ways of presenting that data to the language model.

- Prompting enables the determination of what good and bad outcomes should look like by incorporating the goal into the prompt.

- Prompting improves the safety of the model and helps defend against prompt hacking (users sending prompts to produce undesired behaviors from the model).

In the following example, you can observe how prompts impact the output and how generative models respond to different prompts. Here, the DALLE model was instructed to create a low-poly style astronaut, rocket, and computer. This was the first prompt for each image:

- Low poly white and blue rocket shooting to the moon in front of a sparse green meadow

- Low poly white and blue computer sitting in a sparse green meadow

- Low poly white and blue astronaut sitting in a sparse green meadow with low poly mountains in the background

This image was generated by the prompt above:

Although the results are decent, the style just wasn’t consistent. Although, after optimizing the prompts to:

- A low poly world, with a white and blue rocket blasting off from a sparse green meadow with low poly mountains in the background. Highly detailed, isometric, 4K.

- A low poly world, with a glowing blue gemstone magically floating in the middle of the screen above a sparse green meadow with low poly mountains in the background. Highly detailed, isometric, 4K.

- A low poly world, with an astronaut in a white suit and blue visor, is sitting in a sparse green meadow with low poly mountains in the background. Highly detailed, isometric, 4K.

This image was genearted by the prompt above:

These images are more consistent in style, and the main takeaway is that prompting is very iterative and requires a lot of research. Modifying expectations and ideas is important as you continue to experiment with different prompts and models.

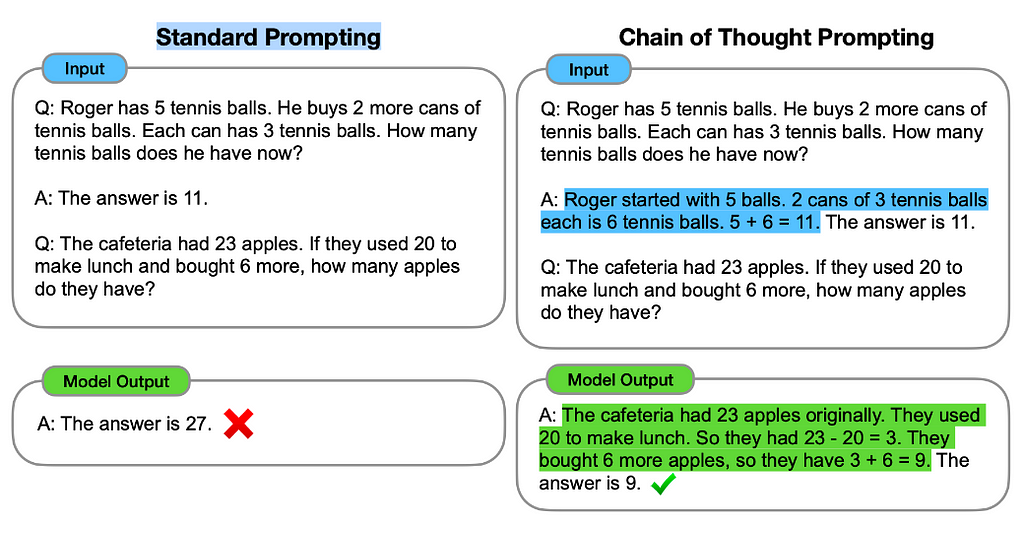

Here is another example (on a text model, specifically it was with ChatGPT) of how prompting can optimize the results and help you generate accurate results.

Challenges and Safety Concerns with Prompting

While prompting enables the efficient utilization of generative AI, its correct usage for optimal output faces various challenges and brings several security challenges to the fore.

Prompting for Large Language Models can present several challenges, such as:

- Achieving the desired results on the first try.

- Finding an appropriate starting point for a prompt.

- Ensuring output has minimal biases.

- Controlling the level of creativity or novelty of the result.

- Understanding and evaluating the reasoning behind the generated responses.

- Wrong interpretation of the intended meaning of the prompt.

- Lack of the right balance between providing enough information in the prompt to guide the model and allowing room for novel or creative responses.

The rise of prompting has led to the discovery of security vulnerabilities, such as:

- Prompt injection, where an attacker can manipulate the prompt to generate malicious or harmful output.

- Leak sensitive information through the generated output.

- Jailbreaking the model, where an attacker could gain unauthorized access to the model’s internal states and parameters.

- Generate fake or misleading information.

- The model’s ability to perpetuate societal biases if not trained on diverse and minimally biased data.

- Generate realistic and convincing text that can be used for malicious or deceitful purposes.

- The model may generate responses that violate laws or regulations.

Learn Prompting: Course Details

As technology advances, the ability to communicate effectively with artificial intelligence (AI) systems has become increasingly important. It is possible to automate a wide range of tasks that currently consume large amounts of time and effort with AI. AI can either complete or provide a solid starting point for all tasks, from writing emails and reports to coding. This resource is designed to provide non-technical learners and advanced engineers with the practicable skills necessary to effectively communicate with generative AI systems.

About the Course

Learn Prompting is an open-source, interactive course with applied prompt engineering techniques and concepts. It is designed for both beginners and experienced professionals looking to expand their skill sets and adapt to emerging AI technologies. The course is frequently updated to include new techniques, ensuring that learners stay current with the latest developments in the field.

Besides real-world applications and examples, the course provides interactive demos to aid hands-on learning. One of the unique features of Learn Prompting is its non-linear structure, which allows learners to dive into the topics that interest them most. These articles are rated by difficulty and labeled for ease of learning, making it easy to find the right level of content. The gradual progression of the material also makes it accessible to those with little to no technical background, enabling them to understand even advanced prompt engineering concepts.

Learn Prompting is the perfect course for anyone looking to gain practical, immediately applicable techniques for their own projects.

Course Highlights

The Learn Prompting course offers a unique learning experience focusing on practical techniques that learners can apply immediately. The course includes:

- In-depth articles on basic concepts and applied prompt engineering (PE)

- Specialized learning chapters for advanced PE techniques

- An overview of applied prompting using generative AI models

- An inclusive, open-source course for non-technical and advanced learners

- A self-paced learning model with interactive applied PE demos

- A non-linear learning model designed to make learning relevant, concise, and enjoyable

- Articles rated by difficulty level for ease of learning

- Real-world examples and additional resources for continuous learning

Learn Prompting: Chapter Summary

Here is a quick summary of each chapter:

1.Basics

It is an introductory lesson for learners unfamiliar with machine learning (ML). It covers basic concepts like artificial intelligence (AI), prompting, key terminologies, instructing AI, and types of prompts.

2. Intermediate

This chapter focuses on the various methods of prompting. It goes into more detail about prompts with different formats and levels of complexity, such as Chain of Thought, Zero-Shot Chain of Thought prompting, and the generated knowledge approach.

3. Applied Prompting

This chapter covers the end-to-end prompt engineering process with interactive demos, practical examples using tools like ChatGPT, and solving discussion questions with generative AI. This chapter allows learners to experiment with these tools, test different prompting approaches, compare generated results, and identify patterns.

4. Advanced Applications

This lesson covers some advanced applications of prompting that can tackle complex reasoning tasks by searching for information on the internet or other external sources.

5. Reliability

This chapter covers techniques for making completions more reliable and implementing checks to ensure that outputs are accurate. It explains simple methods for debiasing prompts, such as using various prompts, self-evaluation of language models, and calibration of language models.

6. Image Prompting

This guide explores the basics of image prompting techniques and provides additional external resources for further learning. It delves into fundamental concepts of image prompting, such as style modifiers, quality boosters, and prompting methods like repetition.

7. Prompt Hacking

This chapter covers concepts like prompt injection and prompt leaking and examines potential measures to prevent such leaks. It highlights the importance of understanding these concepts to ensure the security and privacy of the data generated by language models.

8. Prompting IDEs

This chapter provides a comprehensive list of various prompt engineering tools, such as GPT-3 Playground, Dyno, Dream Studio, etc. It delves deeper into the features and functions of each tool, giving learners an understanding of the capabilities and limitations of each.

9. Resources

The course offers comprehensive educational resources for further learning, including links to articles, blogs, practical examples, and tasks of prompt engineering, relevant experts to follow, and a platform to contribute to the course and ask questions.

How to Navigate

The Learn Prompting course offers a non-linear learning model to make learning practical, relevant, and fun. You can read the chapters in any order and delve into the topics that interest you the most.

If you are a complete novice, start with the Basics section. You can start with the Intermediate section if you have an ML background.

Articles are rated by difficulty level and are labeled:

? Very easy: no programming required

? Easy: simple programming required, but no domain expertise needed

? Medium: programming required, and some domain expertise is needed to implement the techniques discussed (like computing log probability)

? Hard: programming required, and robust domain expertise is needed to implement the techniques discussed (like reinforcement learning approaches)

Please note: Even though domain expertise is helpful for medium and hard articles, you will still be able to understand it.

The future of the course

We plan on keeping this course up to date with Sander and all collaborators (maybe you?). More specifically, we want to keep adding relevant sections for new prompting techniques but also for new models, such as ChatGPT, DALLE, etc., sharing the best tips and practices for using those powerful new models. Still, all of this would not be possible without YOUR help…

Contributing to the course

The idea of learning thrives on growing with each other as a community. We welcome contributions and encourage individuals to share their knowledge through this platform.

Contribute to the course here.

Along with contributions, your feedback is also vital to the success of this course. If you have questions or suggestions, please reach out to Learn Prompting. You can create an issue here, email, or connect with us on our Discord community server.

Find the complete course here.

HackAPrompt Competition: A step towards better prompt safety

Recent advancements in large language models (LLMs) have enabled easy interaction with AI through prompts. However, this has also led to the emergence of security vulnerabilities, such as prompt hacking, prompt injection, leaking, and jailbreaking.

Human creativity generally outsmarted efforts to mitigate prompt hacking. To address this issue, we are helping Learn Prompting to organize the first-ever prompt hacking competition. Participants will be challenged to hack several progressively secure prompts. Prompt engineering is a non-technical pursuit, meaning that diversified people can practice it, from English teachers to AI scientists. This competition aims to motivate users to try hacking a set of prompts in order to gather a comprehensive, open-source dataset for safety research. We expect to collect diverse sets of creative human attacks that can be used in AI safety research.

Stay tuned in our Learn AI Discord community or the Learn Prompting’s Discord community for full details and information about prizes and dates!

Conclusion

Prompt engineering is becoming an increasingly important skill for individuals across all fields. The Learn Prompting course emphasizes practicality and immediate application. One of the exciting aspects of this course is that we are learning about it collectively, and we can only fully understand the techniques through exploration.

As we continue to experiment with generative AI, we must prioritize safety to ensure that the benefits of AI are accessible to everyone. The HackAPrompt competition is one step towards improving prompt safety, as it aims to create a large, open-source dataset of adversarial inputs for AI safety research.

To stay informed about the latest developments in AI, consider subscribing to the Towards AI newsletter and joining our Learn AI Discord community or Learn Prompting’s Discord community.

Learn Prompting 101: Prompt Engineering Course & Challenges was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.