15 Leading Cloud Providers for GPU-Powered LLM Fine-Tuning and Training

Last Updated on February 20, 2024 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

Demand for building products with large language models (LLMs) has surged since the launch of ChatGPT, driving massive growth in the compute needed to train and run models (inference). Nvidia GPUs, particularly the A100 and H100, dominate the market, but AMD has also expanded its GPU offering, and companies like Google have built custom AI chips in-house (TPUs). Nvidia’s data center revenue (predominantly sales of GPUs for LLM use cases) grew 279% year over year in the third quarter of 2023, to $14.5 billion!

Most AI chips have been bought by leading AI labs and tech companies, such as Microsoft (for OpenAI models), Google, and Meta, to train and run their own models. However, many GPUs have also been bought to be rented out through cloud services.

You will rarely need to train your own LLM from scratch on your own data. More often, it makes sense to fine-tune an open-source LLM and deploy it on your own infrastructure, which can offer more flexibility and cost savings than LLM APIs (even those that offer fine-tuning services).
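To make the cost argument concrete, here is a minimal break-even sketch. Every number in it is a hypothetical assumption rather than a real quote, and the helper names are illustrative: a rented GPU has a roughly fixed monthly cost, while an API charges per token, so self-hosting only pays off above some monthly token volume.

```python
# Hypothetical break-even sketch: every price below is an illustrative
# assumption, not a quote from any provider. It ignores throughput limits,
# idle time, storage, and engineering effort.

HOURS_PER_MONTH = 730.0  # about 365 * 24 / 12


def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Cost of sending all traffic to a pay-per-token LLM API."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def monthly_self_hosted_cost(gpu_hourly_usd: float, num_gpus: int,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Cost of keeping rented GPUs running all month, regardless of traffic."""
    return gpu_hourly_usd * num_gpus * hours


def break_even_tokens(gpu_hourly_usd: float, num_gpus: int,
                      usd_per_million_tokens: float,
                      hours: float = HOURS_PER_MONTH) -> float:
    """Monthly token volume at which both options cost the same."""
    fixed_cost = monthly_self_hosted_cost(gpu_hourly_usd, num_gpus, hours)
    return fixed_cost / usd_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Assumed: one GPU at $2.00/hour vs. an API at $10 per million tokens.
    tokens = break_even_tokens(gpu_hourly_usd=2.0, num_gpus=1,
                               usd_per_million_tokens=10.0)
    print(f"Break-even: about {tokens / 1e6:.0f}M tokens per month")
```

With these assumed prices the break-even point is about 146M tokens per month. Above that volume (and only if the GPU can actually serve the traffic), the fixed GPU cost works out cheaper per token; below it, the API is cheaper.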

This guide will provide an overview of the top 15 cloud platforms that facilitate access to GPUs for AI training, fine-tuning, and inference of large language models.

1. Lambda Labs

Lambda Labs was among the first cloud service providers to offer NVIDIA H100 Tensor Core GPUs, known for their performance and energy efficiency, on demand in a public cloud. Lambda’s collaboration with Voltron Data also provides accessible AI computing, with a focus on availability and competitive pricing.

Lambda’s other offerings include:

- NVIDIA GH200 Grace Hopper™ Superchip-powered clusters with 576 GB of coherent memory.

- Weights & Biases integration to accelerate model development for teams.

Lambda’s cloud services simplify complex AI tasks such as training sophisticated models or processing large datasets, and its high-memory clusters suit large-scale LLM training projects with substantial memory requirements.
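As a rough illustration of why memory matters, the sketch below applies a common rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes per parameter (bf16 weights and gradients, plus fp32 master weights and two Adam moments), versus about 2 bytes per parameter for bf16 weights alone. These byte counts are assumptions, not Lambda specifications, and the helper names are hypothetical. Activations and framework overhead are ignored, and the 576 GB figure is coherent memory shared between the Grace CPU and the Hopper GPU, so treating it as one pool is optimistic.

```python
# Rule-of-thumb memory estimate for LLM training (assumptions, not vendor specs).
# Ignores activations, temporary buffers, and framework overhead, so real
# capacity is lower than these numbers suggest.

BYTES_PER_PARAM = {
    # bf16 weights only, e.g. inference or a frozen base model
    "bf16_weights": 2,
    # full fine-tuning with mixed-precision Adam: bf16 weights (2) +
    # bf16 gradients (2) + fp32 master weights (4) + Adam moments m and v (4 + 4)
    "full_finetune_adam": 16,
}


def memory_needed_gb(params_billions: float, bytes_per_param: int) -> float:
    """Memory (decimal GB) to hold the model states for a given parameter count."""
    # 1e9 params * bytes_per_param bytes / 1e9 bytes per GB
    return params_billions * bytes_per_param


def max_params_billions(memory_gb: float, bytes_per_param: int) -> float:
    """Largest model (billions of parameters) whose states fit in memory_gb."""
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    full = BYTES_PER_PARAM["full_finetune_adam"]
    print(f"7B full fine-tune needs ~{memory_needed_gb(7, full):.0f} GB")
    print(f"576 GB holds states for ~{max_params_billions(576, full):.0f}B params")
```

Under these assumptions, full fine-tuning of a 7B model needs about 112 GB for model states alone, and 576 GB caps out at roughly 36B parameters before activations are counted.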

2. Microsoft Azure

Microsoft Azure provides a suite of tools, resources, guides, and a selection of products, including Windows and Linux virtual machines.

Azure enables:

- Easy setup and content safety.

- A remote desktop experience, accessible from any location for convenience and security.

- Speech and language services, including speech-to-text, text-to-speech, speech translation, natural language understanding, and machine translation.

Microsoft Azure uses AI to analyze visual content and speed up information extraction from documents. It also applies AI to content safety and aims to simplify operations and management from cloud to edge.

3. Google Cloud

Google Cloud’s AI solutions offer a range of AI-powered tools for streamlining complex tasks and improving efficiency. The platform’s customization options aim to make advanced AI capabilities accessible to businesses of all sizes. Additionally, Vertex AI streamlines the training of high-quality, custom machine-learning models with relatively little effort or machine-learning expertise.

Google Cloud’s other features include:

- Pre-configured AI tools for tasks such as document summarization and image processing.

- A platform to experiment with sample prompts and create customized prompts.

- Tools to adapt foundation models, including LLMs, to specific needs.

- Over 80 models in the Vertex AI Model Garden, including PaLM 2 and open-source models such as Stable Diffusion, BERT, and T5.

4. AWS SageMaker

AWS SageMaker provides the resources needed for LLM training, balancing performance and ease of use. Its Training Jobs feature simplifies the training process and supports advanced methods such as supervised fine-tuning.

AWS also offers customers early access to new customization features, including fine-tuning models on their own proprietary data. Amazon Bedrock, a platform that simplifies the integration and deployment of AI models, facilitates this customization.

5. Paperspace

The Paperspace platform helps manage complex infrastructure by providing an efficient abstraction layer for accelerated computing.

Paperspace’s key features:

- Enables access to pre-configured templates for complex projects.

- Provides a user-friendly interface for training and deploying AI models.

- Removes costly and time-consuming distractions tied to infrastructure upkeep.

- Facilitates efficient remote work for building and sharing projects.

- Offers low-latency desktop streaming software.

The key strength of Paperspace is its user-friendliness, particularly in today’s increasingly remote and distributed work environments, where seamless access to resources is essential.

6. NVIDIA DGX

The NVIDIA DGX platform integrates cloud-based services with on-premises data centers. It combines hardware and software to meet the demands of businesses of all sizes. Additionally, the platform includes optimized frameworks and accelerated data science software libraries to deliver faster results and a quicker return on investment (ROI) for AI projects.

The DGX Cloud is a multi-node AI-training-as-a-service solution tailor-made for enterprise AI workloads. It provides the scalability and flexibility to handle complex, large-scale AI projects.

One of the key features of the NVIDIA DGX platform is its repository of pretrained models. These models significantly reduce the time and resources required to get projects off the ground. The DGX infrastructure also offers reference architectures for AI infrastructure. These architectures are blueprints for building efficient, reliable, and scalable AI systems in the cloud or on-premises.

7. Jarvis Labs

Jarvis Labs offers a one-click GPU cloud platform tailored for AI and machine learning professionals. Its variety of GPUs provides enough computational power for projects ranging from complex neural network training to high-performance AI applications, and its ready-to-use environments save the time otherwise spent on manual installation and configuration.

Jarvis Labs’ offerings include:

- A wide selection of GPUs, including the A100, RTX A6000, RTX 5000, and RTX 6000.

- Pre-installed frameworks such as PyTorch, TensorFlow, and fastai.

- Fast deployment in your preferred Python environment.
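On a platform with pre-installed frameworks, a sensible first step after launching an instance is to confirm that PyTorch can see the GPUs you are paying for. The sketch below assumes PyTorch is the installed framework; `describe_gpus` is a hypothetical helper name, and it uses only standard `torch.cuda` calls. It falls back gracefully when PyTorch or CUDA is missing, so it also runs on a CPU-only machine.

```python
def describe_gpus() -> dict:
    """Report which GPUs PyTorch can see; returns a plain dict so it works anywhere."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    if not torch.cuda.is_available():
        # PyTorch is installed, but no CUDA device (or driver) is visible.
        return {"torch_installed": True, "cuda_available": False, "devices": []}

    devices = []
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        devices.append({
            "index": i,
            "name": props.name,
            # total_memory is in bytes; convert to decimal GB
            "memory_gb": round(props.total_memory / 1e9, 1),
        })
    return {"torch_installed": True, "cuda_available": True, "devices": devices}


if __name__ == "__main__":
    print(describe_gpus())
```

If `cuda_available` comes back `False` on a GPU instance, the driver or CUDA setup is the first thing to check before starting a long training run.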

8. IBM Cloud

The IBM Cloud platform is built with a focus on sustainability, accessibility, and technological innovation. It provides a range of tools and environments for problem-solving and development.

A key feature of IBM Cloud is its ability to deploy pre-configured, customizable security and compliance controls. The platform also emphasizes accessibility: users can easily create a free account and access over 40 always-free products.

In line with its sustainability commitment, IBM Cloud offers the IBM Cloud Carbon Calculator. It monitors carbon emissions and provides emissions data for workloads according to their geographical locations. The Carbon Calculator is helpful for businesses aiming to meet their sustainability goals and adhere to reporting requirements.

9. OCI (Oracle Cloud)

Oracle Cloud Infrastructure (OCI) offers a broad set of services. One example is MySQL HeatWave, a fully managed database service with an in-memory query accelerator that combines transactions, analytics, and machine learning in a single MySQL database. It provides real-time, secure analytics without the complexity, latency, and cost typically associated with ETL duplication.

Oracle Cloud’s offerings include:

- Deployment solutions for disconnected and intermittently connected operations.

- Consistent pricing worldwide, with a lower price for outbound bandwidth and superior price-performance ratios for computing and storage.

- Provision for low latency, high performance, data locality, and security.

- Free account with no time limits on over 20 services, including the Autonomous Database and Arm Compute.

- Several tutorials, hands-on labs, workshops, and events to get started.

Oracle Cloud Infrastructure is designed with the community in mind. It supports developers, administrators, analysts, customers, and partners in maximizing their use of the cloud. Additionally, new users can benefit from $300 in free credits to experiment with additional services.

10. CoreWeave

CoreWeave is the first Elite Cloud Solutions Provider for Compute in the NVIDIA Partner Network. It offers GPUs at massive scale and flexible infrastructure tailored for large-scale, GPU-accelerated workloads.

CoreWeave’s features include:

- Broad computing options, from A100s to A40s, for key areas like AI, machine learning, and visual effects.

- A Kubernetes-native cloud service that CoreWeave claims is 35x faster and 80% less expensive than traditional cloud alternatives.

- Fully managed Kubernetes, delivering bare-metal performance without infrastructure overhead.

- NVIDIA GPU-accelerated and CPU-only virtual servers.

- Distributed and fault-tolerant storage with triple replication, ensuring data integrity and availability.

- Cloud computing efficiency and cost-effectiveness.
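On a Kubernetes-native cloud, GPU workloads are typically submitted as Kubernetes objects. The sketch below builds a minimal Pod manifest that requests GPUs through the `nvidia.com/gpu` extended resource exposed by NVIDIA’s device plugin. It assumes the cluster runs that plugin, uses a hypothetical image name and helper name (`gpu_pod_manifest`), and leaves out provider-specific node selectors or GPU-type labels, which vary by provider.

```python
import json


def gpu_pod_manifest(name: str, image: str, num_gpus: int) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests NVIDIA GPUs.

    Assumes the cluster runs NVIDIA's device plugin, which exposes GPUs as the
    extended resource "nvidia.com/gpu". Provider-specific scheduling details
    (node selectors, GPU-type labels) are deliberately left out.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # List the GPUs visible inside the container, then exit
                    # (assumes nvidia-smi is available in the container).
                    "command": ["nvidia-smi"],
                    # Extended resources such as GPUs are set under limits;
                    # Kubernetes uses the same value for the request.
                    "resources": {"limits": {"nvidia.com/gpu": num_gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # "registry.example.com/trainer:latest" is a hypothetical image name.
    manifest = gpu_pod_manifest("gpu-smoke-test", "registry.example.com/trainer:latest", 1)
    print(json.dumps(manifest, indent=2))
```

Kubernetes accepts JSON manifests as well as YAML, so the printed output can be saved to a file and applied with `kubectl apply -f`.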

11. Tencent Cloud

Tencent Cloud offers a suite of GPU-powered computing instances for workloads such as deep learning training and inference. The platform provides a fast, stable, and elastic environment for developers and researchers who need access to powerful GPUs.

Tencent Cloud offers various GPUs, including the NVIDIA A10, Tesla T4, Tesla P4, Tesla P40, Tesla V100, and Intel SG1. These GPUs are available in multiple instance types designed for specific workloads.

Tencent Cloud’s GPU instances are competitively priced at $1.72/hour, although the specific price depends on the type of GPU instance and the resources required.
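With hourly pricing, a job’s bill is roughly rate × billed hours × number of instances. The sketch below uses the $1.72/hour figure quoted above; the 30.5-hour runtime, the two instances, the helper name `estimate_run_cost`, and the assumption that usage is billed in whole-hour increments are all illustrative, since billing granularity varies by provider and instance type.

```python
import math


def estimate_run_cost(hourly_rate: float, runtime_hours: float,
                      num_instances: int = 1,
                      billing_increment_hours: float = 1.0) -> float:
    """Estimate a job's bill, rounding runtime up to the billing increment.

    The billing increment is an assumption; check the provider's actual terms.
    """
    increments = math.ceil(runtime_hours / billing_increment_hours)
    billed_hours = increments * billing_increment_hours
    return round(hourly_rate * billed_hours * num_instances, 2)


if __name__ == "__main__":
    # Hypothetical job: 30.5 hours on two instances at $1.72/hour each.
    cost = estimate_run_cost(1.72, 30.5, num_instances=2)
    print(f"Estimated cost: ${cost:.2f}")  # 31 billed hours x 2 x $1.72
```

Under these assumptions the job costs about $106.64; shorter billing increments would shave off the partially used final hour.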

12. Vast AI

Vast AI is a GPU rental marketplace where hosts list their own GPU hardware for rent. This marketplace model lets users compare offers and find the best deal for their computing requirements.

Vast AI’s features include:

- On-demand instances for users to run tasks for as long as needed at a fixed price.

- Use of Ubuntu-based systems to ensure a stable and familiar environment.

- Flexibility to scale up or down based on the project’s demands.
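Finding a deal on a marketplace boils down to filtering offers by your requirements and sorting by price. The sketch below uses made-up offers: the hosts, GPUs, prices, and the `Offer`/`cheapest_offer` names are hypothetical, and it does not use Vast AI’s own API or CLI.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Offer:
    host_id: str         # hypothetical host identifier
    gpu_name: str
    num_gpus: int
    gpu_mem_gb: float    # memory per GPU
    usd_per_hour: float  # price for the whole machine


def cheapest_offer(offers: Sequence[Offer], min_gpus: int,
                   min_gpu_mem_gb: float) -> Optional[Offer]:
    """Return the lowest-priced offer meeting the requirements, or None."""
    eligible = [o for o in offers
                if o.num_gpus >= min_gpus and o.gpu_mem_gb >= min_gpu_mem_gb]
    return min(eligible, key=lambda o: o.usd_per_hour, default=None)


if __name__ == "__main__":
    # Made-up listings for illustration only.
    offers = [
        Offer("host-a", "RTX 4090", 1, 24, 0.45),
        Offer("host-b", "A100", 1, 80, 1.60),
        Offer("host-c", "A100", 2, 40, 2.10),
        Offer("host-d", "RTX 3090", 2, 24, 0.55),
    ]
    print(cheapest_offer(offers, min_gpus=1, min_gpu_mem_gb=40))  # host-b
```

A real search would also weigh factors such as host reliability and network speed; ranking by price alone is a simplification.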

13. Latitude.sh

Latitude.sh provides a suite of high-performance services, from bare metal servers to cloud acceleration and customizable infrastructure to accelerate business growth.

This cloud platform balances performance and security with single-tenant Metal servers equipped with SSD and NVMe disks. It also provides GPU-intensive instances that users can customize for their specific needs.

Their network infrastructure includes features like 20 TB bandwidth per server and robust DDoS protection, ensuring secure internet traffic management.

14. Seeweb

Seeweb’s GPU Cloud Server integrates with existing public cloud services. It handles complex calculations and modeling, with easy driver installation and 1 Gbps bandwidth.
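To put 1 Gbps in context, the sketch below estimates how long it takes to move a model checkpoint over the link. The 140 GB example (roughly a 70-billion-parameter model stored at 2 bytes per parameter), the 80% link-efficiency factor, and the helper name are assumptions; real throughput depends on protocol overhead and the remote end.

```python
def transfer_time_minutes(size_gb: float, link_gbps: float,
                          efficiency: float = 1.0) -> float:
    """Minutes to move size_gb gigabytes over a link_gbps link.

    Uses decimal units (1 GB = 8e9 bits, 1 Gbps = 1e9 bits/s). `efficiency`
    is an assumed fraction of line rate actually achieved.
    """
    bits = size_gb * 8e9
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 60


if __name__ == "__main__":
    # Assumed checkpoint: ~70B parameters x 2 bytes each = ~140 GB.
    print(f"Ideal:  {transfer_time_minutes(140, 1.0):.1f} min")       # line rate
    print(f"At 80%: {transfer_time_minutes(140, 1.0, 0.8):.1f} min")  # assumed overhead
```

At full line rate the transfer takes about 18.7 minutes; at the assumed 80% efficiency, about 23.3 minutes.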

Seeweb offers usage-based billing, with pricing starting at €0.380 per hour. The best Cloud Server GPU plan depends on your requirements; for those just starting out, the CS GPU 1 plan is a good option, offering a good balance of price and performance.

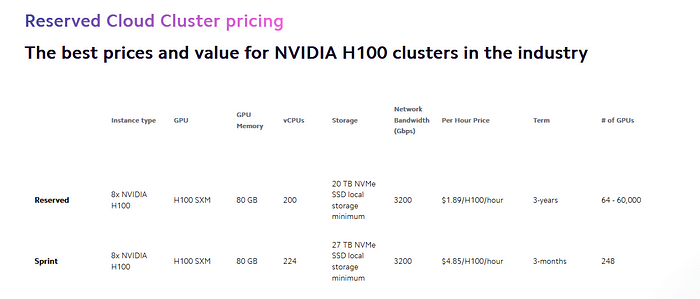

15. FluidStack

FluidStack is a scalable, cost-effective GPU cloud platform that provides access to a network of GPUs in data centers worldwide. Through a simple interface, it offers instant access to over 47,000 servers with Tier 4 uptime and security.

The platform claims costs 3–5x lower than those of hyperscalers, plus free egress. It also lets users train, fine-tune, and deploy large language models (LLMs) on up to 50,000 high-performance GPUs from a single platform.

Conclusion

The LLM landscape has changed notably thanks to advances in cloud-based fine-tuning. Platforms like Azure OpenAI Service, Lambda Cloud Clusters, and others are at the forefront of this shift, offering powerful, scalable cloud solutions for fine-tuning LLMs. These services give businesses and developers the infrastructure and tools to customize and improve LLMs efficiently and cost-effectively.

These cloud platforms are not just facilitating the fine-tuning process; they are enabling a new era of AI and machine learning, where language models become more accurate, efficient, and tailored to specific needs. The ease of access to high-powered computing resources and the ability to manage large datasets effectively in the cloud are key drivers in this evolution.

The advancement of cloud computing is reshaping the digital landscape, with platforms like CoreWeave, Oracle Cloud Infrastructure, and IBM Cloud leading the way. These providers offer more than basic cloud services; they act as catalysts for innovation and efficiency. CoreWeave specializes in GPU-accelerated workloads, with a strong emphasis on performance and cost savings. Oracle Cloud Infrastructure excels in versatility, offering services that range from managed databases to AI and machine learning tools. IBM Cloud focuses on digital transformation, pairing robust security with sustainability tools such as its AI-driven Carbon Calculator.


Published via Towards AI