Building Intuition on the Concepts behind LLMs like ChatGPT — Part 1- Neural Networks, Transformers, Pretraining, and Fine Tuning

Last Updated on August 28, 2023 by Editorial Team

Author(s): Stephen Bonifacio

Originally published on Towards AI.

https://twitter.com/Stepanogil/status/1617907019692019714?s=20

I’m sure I’m not the only one, but if it wasn’t too apparent from my tweet back in January, my mind was completely blown away when I first encountered ChatGPT. The experience was unlike any other I’ve had with any ‘chatbot’. It seemed to understand user intentions, responding to queries and comments so naturally in a way only a human can. If I was told that there was another person on the other side of that conversation, I wouldn’t have doubted it for a second.

After the initial freakout, I started reading about anything I could get my hands on about this mysterious tech. I began experimenting with the ChatGPT API when it came out in March — creating chatbots and writing blogs about the experience — (one of which got boosted on Medium which I’m still over the moon about). This was not enough, of course, I needed to know more about what was happening on the other side of that API call and began going deep into the rabbit hole of language models, deep learning, and transformers, etc.

The most famous of Sir Arthur C. Clarke’s three laws on predicting future scientific and technological advancements states:

“Any sufficiently advanced technology is indistinguishable from magic.”

This blog post is an attempt to demystify concepts behind large language models (LLM) by uncovering the “magician’s trick”, if you will, in an accessible manner. Whether you’re one of those actively paying attention to AI trends, exploring the bourgeoning AI engineering space and needs grounding on the basics, or just trying to satisfy a piqued curiosity — the hope is you come away from reading this article with a little bit more information on the ingenious concepts that made ChatGPT possible.

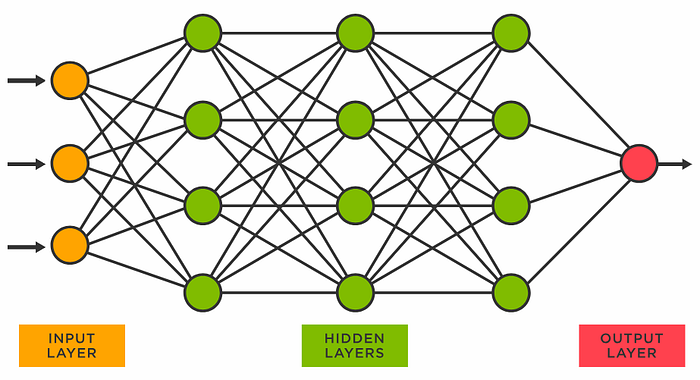

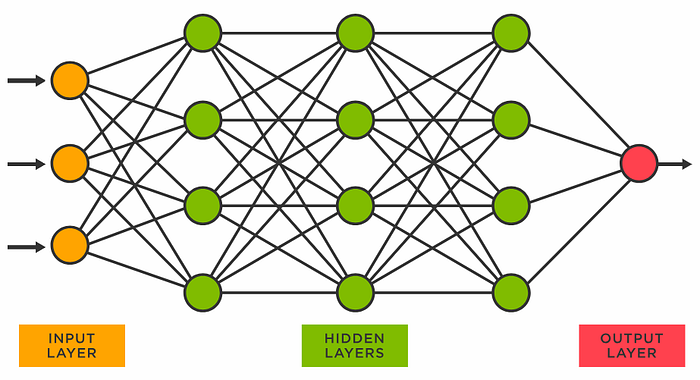

Neural Networks

LLMs like ChatGPT are trained on huge amounts of publicly accessible text data from the internet using artificial neural networks. Artificial neural networks are machine learning algorithms that are designed to mimic in an abstract way, our brain’s structure and learning process. They are made up of layers of interconnected nodes or “neurons,” and through repeated training iterations on massive amounts of text data, the network learns the patterns in the texts and the nuances of the language — enough to generate coherent words, sentences, or whole documents by itself.

The artificial neural network is the main feature of a subset of machine learning called deep learning. It is very important in the field of AI due to its ability to capture intricate patterns and dependencies in data and generalize from these patterns to make predictions on new, unseen data. In the context of language modeling, this means predicting what word should come next given a sequence of a preceding word or words. Compared to conventional machine learning algorithms like linear regression, neural networks are able to represent and model non-linear relationships between different features present in large amounts of data through the use of nonlinear mathematical functions (the activation function) in the neurons of the network’s hidden layers.

Neural networks have produced consumer tech that you’ve probably interacted with (and they are not necessarily exclusive to language tasks) such as unlocking your phone using facial recognition, the augmented reality feature in your Pokémon game, or the show suggestions in Netflix home screens.

Andrej Karpathy even argues that it can be a new and better way of writing software:

For example, instead of hand coding the logic of a program (— if condition A is met, do x; if condition A is not met, do y) the neural network instead learns through examples from the training data that if it encounters ‘condition A’ in production it should do x. These conditions/logic are not defined by its creators, rather the neural network adjusts itself (by tweaking its billions or even trillions of parameters — the weights and biases) to conform to this desired behavior.

Nobody knows what each individual weight and bias does specifically or how a single weight contributes to a specific change in the behavior of the artificial neural network. These parameters are changed en masse as a unit during training via gradient updates. (discussed in more detail later.)

This is why you’ll often hear machine learning models trained on neural networks as ‘black boxes’. Their inputs and outputs can be observed, but the internal workings or how it does what it does is not easily understood. This is also the reason for discoveries of ‘emergent’ capabilities. As an LLM gets bigger and bigger (measured by its number of parameters), it starts coming out of training with unanticipated abilities. For example, GPT-2 was discovered to be good at language translation, GPT-3 was an excellent few-shot learner, and GPT-4 has shown sparks of artificial general intelligence or AGI. None of these were explicitly defined as a training goal — its main objective was to predict the next word in a sequence.

Emergent behaviors are not unique to large neural networks. As a system gets bigger and more complex, the interaction between its individual components can lead to unexpected behaviors that cannot be fully explained by analyzing the properties of each individual component in isolation— a single ant is stupid, but a colony of ants can build very complex tunnel networks and wage war against other colonies. This phenomenon has been documented in systems like social insects (ants and bees), crowd behavior, and other biological ecosystems.

Pretraining Foundation Models

The first step in creating something like ChatGPT is pretraining a base model or a foundation model. The goal of this step is to create a machine learning model that is able to autonomously generate a coherent word structure or generate human-like text (phrase, sentence, paragraph) by generating words in sequence based on its prediction of what word should come next given the preceding words. It’s called pretraining as the output of this step — the base model is still a raw product that has limited practical applications and is usually only of interest to researchers. Base models are ‘trained’ further via the fine-tuning stages for specific tasks with a real-world utility like text translation, summarization, classification, etc.

At the start of pretraining, the parameters of the neural network are set with random numerical values. The words in the massive internet text data are converted into numerical representations in the form of tokens (as an integer) and embeddings (as vectors)- before being fed to the neural network.

Tokens and embeddings will be discussed in detail in the next part of this series but for now, think of a token as the unique ID of a word in the model’s vocabulary and the embedding as the meaning of that word.

The model is given a word or words and is asked to predict the next word based on those preceding word/s. Then, it is tested on unseen data and evaluated on the accuracy of its predictions based on the ‘ground truth’ next word from a hidden dataset previously unseen by the model.

In machine learning, the dataset is usually divided into the training dataset and the test dataset. The training dataset is used to train the model and the test dataset (the unseen data) is used to evaluate the model’s predictions. The test set is also used to prevent ‘overfitting’ — where the model performs exceptionally well on the training data but poorly on new, unseen data.

Consider the example sentence in the training dataset: “I have to go to the store”. This sentence might be used as follows:

- The model is given “I have” and is expected to predict “to”.

- Then it’s given “I have to” and is expected to predict “go”.

- Then “I have to go” and is expected to predict “to”.

- Finally, “I have to go to the” and is expected to predict “store”.

Going through the whole corpus of the training dataset like this, the model will be able to learn which words tend to appear after different sets of words. It learns the dependencies between “I” and “have”, “have” and “to”, and so on.

In the testing step, the process is similar, but the sentences or texts used are the ones that the model has not been trained on. It’s a way to check how well the model generalizes its language understanding to unseen data.

Let’s consider the unseen sentence from the test set: “She needs to head to the ___” Even though this exact sentence was not part of the training dataset, the model can use its understanding of similar contexts it encountered to make an educated prediction. For example, it has been seen in the training sentence “I have to go to the store” that the phrase “to go to the” or “to head to the” is often followed by a location or a destination. Based on this, the model might predict “market”, “store”, “office”, or other similar words, as they are common destinations in this kind of context. So, while the model was trained on “I have to go to the store” and variations of this text with similar meaning, it’s able to generalize from that to understand that “She needs to head to the…” is likely to be followed by a similar type of word, even though this exact sentence was not part of its training data.

How Models ‘Learn’

At the start of pretraining, the model would usually output nonsensical sequences of words when asked to make a prediction as it hasn’t ‘learned’ anything yet. In our example sentence earlier, it might generate the word ‘apple’ instead of the ground truth next word — ‘store’. Since LLMs are probabilistic, ‘wrong’ in this context means the model is assigning a higher probability (to be selected) to the word ‘apple’ compared to the expected word — ‘store’.

The ultimate goal is to have the model output ‘store’ every time it’s asked to predict the next word that comes next after the sequence “She needs to head to the ___”.

The difference in the actual and the expected or ground truth next word is calculated using a ‘loss function’ where the greater the difference, the higher the ‘loss’ value. The loss is a single number that ‘averages’ the loss or error of all the predictions you asked the model to make. Through several iterations of these steps, the aim is to minimize the value of this ‘loss’ through processes called backpropagation and gradient descent optimization. The model ‘learns’ or improves its prediction ability through these steps.

You’re probably wondering how you can ‘calculate the difference between two words’ to arrive at a loss value. Do note that what goes through the neural network are not actual texts (words, sentences) but numerical representations of these texts — their tokens and embeddings. The number representations of a word sequence are processed through the layers of the network where the output is a probability distribution over the vocabulary to determine what word comes next. An untrained model might assign a higher probability to the token id of the word ‘apple’ (say 0.8) compared to the token id of the ground truth next word — ‘store’ (at 0.3). The neural network will not encounter a single word or letter of any text. It works exclusively with numbers — basically a calculator with extra steps. U+1F605

Through backpropagation, the degree of the error of the model (the loss value) is propagated backward through the neural network. It computes the derivative to the output of each individual weight and bias i.e. how sensitive the output is to changes in each specific parameter.

For my people who didn’t take on differential calculus in school (such as myself), think of the model parameters (weights/biases) as adjustable knobs. These knobs are arbitrary — in the sense that you can’t tell in what specific way it governs the prediction ability of the model. The knobs, which can be rotated clockwise or counterclockwise have different effects on the behavior of the output. Knob A might increase the loss 3x when turned clockwise, knob B reduces the loss by 1/8 when turned counterclockwise (and so on). All these knobs are checked (all billions of them) and to get information on how sensitive the output is to adjustments of each knob — this numerical value is their derivative with respect to the output. Calculating these derivatives is called backpropagation. The output of backpropagation is a vector (a list of numbers) whose elements or dimensions consist of the parameters’ individual derivatives. This vector is the gradient of the error with respect to the existing parameter values (or the current learnings) of the neural network.

A vector has two properties: length or magnitude and direction. The gradient vector contains information on the direction in which the error or loss is increasing. The magnitude of the vector signifies the steepness or rate of increase.

Think of the gradient vector as the map of a foggy hill you’re descending from — gradient descent optimization is using the information about direction and steepness from the gradient vector to reach the bottom of the hill (the minimum loss value) as efficiently as possible by navigating to the path with the greatest downward incline (the opposite direction of the gradient vector). This involves iteratively adjusting the values of the weights and biases of the network (by subtracting small values to it i.e. the learning rate) en masse to reach this optimal state.

After these steps, the hope is during the next training iteration, when the model is again asked to predict the next word for “She needs to head to the…” it should assign a higher probability to the word ‘store’. This process is repeated several times until there is no significant change to the loss value meaning the model’s learning has stabilized or has reached convergence.

So the TL;DR on how neural networks learn to communicate in English (and other languages) is — math in serious amount. Like oodles. It boils down to reducing the value of a single number (the loss value) generated from complex computations within the neural network — where, as this number gets smaller, the more ‘fluent’ or ‘coherent’ the language model becomes. The millions or billions of mathematical operations applied between matrices and vectors in the inner layers of the network somehow coalesce into a geometric model of the language.

To help with intuition, we’ve anthropomorphized the model by using words like ‘understand’, ‘seen’, and ‘learn’, but in truth, it has no capacity to do any of these things. It’s just an algorithm that outputs the next best token of a sequence based on a probability distribution given a sampling method.

The Transformer

The Transformer is the breakthrough in natural language processing (NLP) research that gave us ChatGPT. It is a type of neural network architecture that utilizes a unique self-attention mechanism. It’s famously discussed in the paper ‘Attention is All You Need’ that came out in 2017. Almost all state-of-the-art LLMs (like BERT, GPT-1) that came out after this paper, were built on or using ideas from the transformer. It’s hard to overstate the importance of this paper due to its impact on deep learning. It’s now finding its way to vision tasks making it truly multi-modal and demonstrating its flexibility to handle other types of data. It also started the ‘…is all you need’ memetic trend that even the Towards AI editorial team is unable to resist. U+1F602

Prior to transformers, neural networks used in NLP that produced SOTA models, relied on architectures that utilize sequential data processing e.g. recurrent neural networks or RNNs — this means that during training, each word or token is processed by the network one after the other in sequence. Note that the order of words is important to preserve the context/meaning of a sequence — ‘the cat ate the mouse’ and ‘the mouse ate the cat’ are two sentences with two different meanings even though they are made up of the exact same words/tokens (albeit in a different order).

One of the key innovations of the transformer is doing away with recurrence or sequential token processing. Instead of processing tokens sequentially, it encodes the position information of each word (i.e. in which order a word appears in the sequence being processed) into its embedding before being inputted in the network’s inner layers.

More importantly, transformers solved the issue of long-term dependencies that neural nets like RNNs struggled with. Given a long enough sequence of words (e.g. a very long paragraph), RNNs will ‘forget’ the context of the word it processed earlier in the sequence — this is called the vanishing gradient problem. RNNs store information on the relevance of words in a sequence up to that point in what’s called the hidden state at each sequential or time step. As it processes a long sequence, gradients corresponding to earlier time steps can become very small during backpropagation. This makes it challenging for the RNN to learn from the early parts of the sequence and can lead to the ‘loss’ of information about words processed earlier. This is problematic for a next-word prediction model, especially if those ‘forgotten’ words are important to the context of the sequence currently being generated

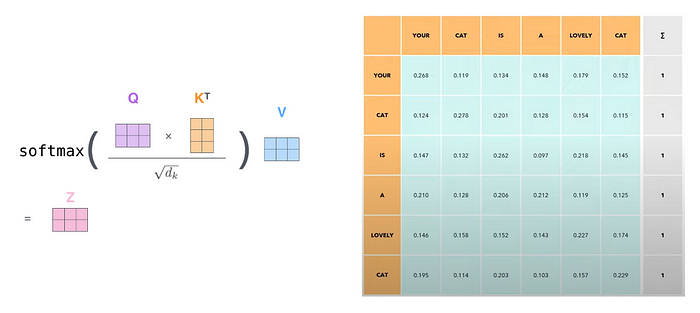

The transformer solves this limitation through the ‘self-attention’ mechanism. Same with positional encoding, each word, through its embedding is encoded with information on the degree or how much it should ‘attend to’ the rest of the words in the sequence — no matter the length of the sequence or the relative distance of the attended word in the sequence. This encoding is done simultaneously for all words in the sequence allowing the transformer to preserve the context of any sequence.

The degree how which one word should attend to other words is a ‘learned’ trait stored in the model weights and encoded in the word embeddings via matrix multiplications. These ‘learnings’ get adjusted during each training iteration as the model learns more about the relationships between words in the training data.

The final output of the self-attention layer (the Z matrix in our illustration above) is a matrix of the word embeddings that is encoded with information on the position of each word in the sequence (from the position encoding step) and how much each word should attend to all other words in the sequence.

It is then fed to a traditional neural network like the one discussed earlier in the article (called the feed-forward neural network). These steps (attention + feed-forward — which makes up a transformer block) are repeated multiple times for each hidden layer of the transformer — 96 times for GPT3, for example. The transformation in each layer adds additional information to the ‘knowledge’ of the model on how to best predict the next word in the sequence.

According to the LLM scaling laws published by OpenAI, to train better models, increasing the number of parameters is 3x more important than increasing the size of the training data. (Note: DeepMind has since published a paper with a differing view.) This translates to a significant increase in computational requirements, as handling a larger number of parameters demands more complex calculations. Parallelization, which is the process of dividing a single task into multiple sub-tasks that can be processed simultaneously across multiple compute resources, becomes essential in dealing with this problem.

Parallelization is difficult to achieve with RNNs given their sequential nature. This is not an issue for transformers as it computes relationships between all elements in a sequence simultaneously, rather than sequentially. It also means that they work well with GPUs or video cards. Graphics rendering requires a large number of simple calculations happening concurrently. The numerous, small, and efficient processing cores that a GPU has, which are designed for simultaneous operations, make it a good fit for tasks such as matrix and vector operations that are central to deep learning.

AI going ‘mainstream’ and the mad scramble to build larger and better models is a boon to GPU manufacturers. NVIDIA- specifically — whose stock price has grown 200% YTD as of this writing, has made them the highest-performing stock this year and pushed their market cap to USD 1 trillion. They join megacaps like Apple, Google, Microsoft, and Amazon in this exclusive club.

The Transformer is a decidedly complex topic and the explanation above wholesale left out important concepts in order to be more digestible to a broader audience. If you want to know more, I found these gentle yet significantly more fleshed-out introductions to the topic: Jay Allamar’s illustrated transformer, Lili Jiang’s potion analogy, or if you want something more advanced — Karpathy’s nanoGPT that babbles in Shakepear-ish.

Fine-tuning ‘chat’ models like ChatGPT

The output of pretrainings are base models or foundation models. Examples of recently released text-generation foundation models are GPT-4, Bard, LLaMa 1 & 2, and Claude 1 & 2. Since base models already have extensive knowledge of the language from pretraining (the structure of sentences, relationships between words, etc.), you can leverage this knowledge to further train the model to do specific tasks — translation, summarization, or conversational assistants like ChatGPT. The underlying idea is that the model’s general language understanding gained from pretraining can be used for a wide range of downstream tasks. This idea is called transfer learning.

If you ask or prompt a base model a question, it will probably reply with another question. Remember that it’s trained to complete a word sequence by predicting the word that should come next given the previous words in the sequence. Example:

However, we can get a base model to answer questions by ‘tricking’ it into thinking that it’s trying to complete a sequence:

Using this idea, the model goes through another round of training using different sets of prompt/completion pairs in a question-and-answer format. Instead of ‘learning English’ from random texts found on the internet by predicting what words come next after a set of words, the model ‘learns’ that to complete a prompt in a ‘question’ form, the completion should be in an ‘answer’ form. This is the supervised fine-tuning (SFT) stage.

Pretraining utilizes a type of machine learning called self-supervised learning where the model trains itself by creating the ‘label’ or ground truth word it’s trying to predict from the training data itself. Here is our example from earlier:

- The model is given “I have” and is expected to predict “to”.

- Then it’s given “I have to” and is expected to predict “go”.

The labels or target words ‘to’ and ‘go’ are created by the model as it goes through the corpus. Note that the target/ground truth words are important as this is the basis of the loss value — i.e. how good the current model’s prediction is versus the target- and the subsequent gradient updates.

Compared to the pretraining phase, the training data preparation in the fine-tuning stages can be labor intensive. It requires human labelers and reviewers that will do careful annotation of the ‘labels’ or the target completions. However, since the model has already learned general features of the language, it can quickly adapt to the language task it’s being fine-tuned for, even with the limited availability of task-specific training data. This is one of the benefits of transfer learning and the motivation behind pretraining. According to Karpathy, 99 percent of the compute power and training time and most of the data to train an LLM are utilized during the pretraining phase and only a fraction is used during the fine-tuning stages.

Fine-tuning uses the same gradient update method outlined earlier but this time it’s learning from a list of human-curated question/answer pairs that teaches it how to structure its completions i.e. ‘what to say and how to say it’.

It goes through other fine-tuning stages like reward modeling and reinforcement learning from human feedback (RLHF) to train the model to output completions that cater more to human preference. In this stage, the human labelers score the model’s completions on attributes like truthfulness, helpfulness, harmlessness, and toxicity. The human-preferred completions get reinforced into the training i.e. it will have a higher probability to appear in completions of the fine-tuned version of the model.

The output of these fine-tuning steps is the ‘assistant’ or ‘chat’ models like ChatGPT. These are the ‘retail’ versions of these foundation models and are what you interact with when you go to the ChatGPT website. The GPT-3 base model (davinci) can be accessed via an API. The GPT-4 base model has not been released as an API as of this writing and is unlikely to be released by OpenAI, given their recent statements about competition and LLM safety. These fine-tuning steps are generally the same for all available commercial and open-source fine-tuned models.

End of Part 1

Note: Part 2 will talk about Embeddings which predates the LLM explosion but is equally as fascinating — how embedding models are trained, and how a sentence or document-level embeddings (used in RAG systems) are generated from word embeddings. We will also be discussing about Tokens and why it’s needed. We have implied in this post that token = word to simplify things, but a real-world token can be an individual character or letter, a subword, a whole word, a series of words, or all of these types in a single model vocabulary!

If I got anything wrong, I’m happy to be corrected in the comments! 🙂

Resources/References:

3blue1brown. What is a neural network?

Geeks for Geeks. Artificial Neural Networks and its Applications

Jay Alammar. The Illustrated Transformer

Luis Serrano. What Are Transformer Models and How Do They Work?

Andrej Karpathy. The State of GPT

Andrej Karpathy. Let’s build GPT: from scratch, in code, spelled out.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI