Why Should Adam Optimizer Not Be the Default Learning Algorithm?

Last Updated on August 23, 2022 by Editorial Team

Author(s): Harjot Kaur

Originally published on Towards AI.

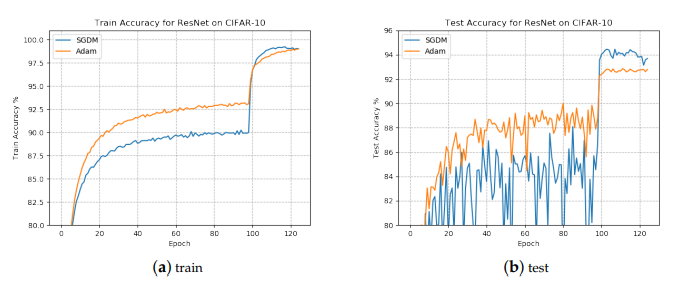

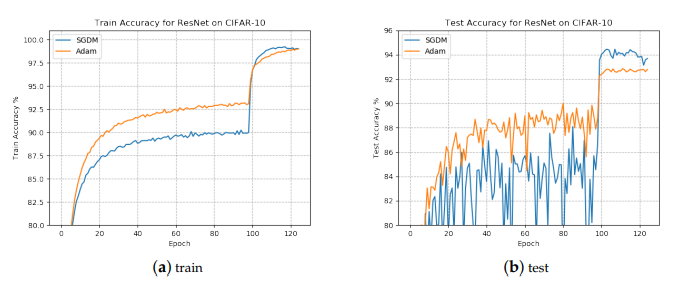

A growing share of deep learning practitioners train their models with adaptive gradient methods because these methods train quickly. Adam, in particular, has become the default optimizer in many deep learning frameworks. Despite better training outcomes, Adam and other adaptive optimization methods are often reported to generalize worse than stochastic gradient descent (SGD): they tend to perform well on the training data but are outperformed by SGD on the test data.

Several recent empirical studies have examined the marginal value of adaptive gradient methods such as Adam. Let’s go through their findings.

Adam may converge faster but generalize poorly!

To fully understand this statement, it helps to briefly review the pros and cons of two popular optimization algorithms, Adam and SGD.

Gradient descent (vanilla) is the most common method used to optimize deep learning networks. The basic method dates back to Cauchy (1847), and its stochastic variant to the 1950s. The technique computes the gradient of the objective function with respect to each model parameter, moves the parameters a small step in the direction that lowers the error, and keeps iterating until the objective function converges to a minimum. To learn the math and mechanics of gradient descent, you can read:

Math behind the Gradient Descent Algorithm
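The update loop described above can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: the function name `gradient_descent`, the two-parameter quadratic objective, and the learning rate are all assumptions chosen for the example.

```python
def gradient_descent(grad, x0, lr=0.1, steps=200):
    """Plain (full-batch) gradient descent on a list of parameters."""
    x = list(x0)
    for _ in range(steps):
        g = grad(x)
        # Move every parameter a small step against its gradient component.
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical objective: f(x, y) = (x - 3)^2 + (y + 1)^2, minimum at (3, -1).
def quadratic_grad(params):
    x, y = params
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]


minimum = gradient_descent(quadratic_grad, x0=[0.0, 0.0])
print(minimum)
```

On this toy objective the iterates approach the point (3, -1); on a real network the gradient would come from backpropagation over the full training set.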

SGD is a variant of gradient descent. Instead of computing the gradient on the whole dataset, which is redundant and inefficient, SGD computes it at each step on a small, randomly selected subset of the data examples (a mini-batch). With a sufficiently low learning rate, SGD reaches performance comparable to regular gradient descent.
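To make the mini-batch idea concrete, here is a small sketch that fits a one-dimensional linear model with SGD. Everything specific in it is an assumption made for illustration: the function name `sgd_linear_fit`, the noise-free data generated from y = 2x + 1, the batch size, and the learning rate.

```python
import random


def sgd_linear_fit(xs, ys, lr=0.05, batch_size=4, epochs=500, seed=0):
    """Fit y = w * x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new random order of examples every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient estimated from the mini-batch only, not the full dataset.
            errors = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            grad_w = sum(2.0 * e * x for e, x in errors) / len(batch)
            grad_b = sum(2.0 * e for e, _ in errors) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Hypothetical noise-free data from y = 2x + 1.
xs = [i / 10.0 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
print(w, b)
```

Each update touches only four examples, yet the parameters still drift toward the slope and intercept that generated the data.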

Adam computes individual adaptive learning rates for different parameters from estimates of the first and second moments of the gradients. It combines the advantages of the Adaptive Gradient Algorithm (AdaGrad) and Root Mean Square Propagation (RMSProp). RMSProp adapts each parameter’s learning rate using a running average of the squared gradients (the second moment, or uncentered variance). Adam keeps this scaling and additionally uses a running average of the gradients themselves (the first moment, or mean). To learn more about Adam, read “Adam — latest trends in deep learning optimization”.

To summarize, Adam tends to converge rapidly, often to a “sharp” minimum, whereas SGD converges more slowly but tends to settle in a “flat” minimum and to perform better on the test data.
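The moment estimates mentioned above translate into a short update rule. The sketch below follows the commonly cited form of the Adam update (exponential moving averages of the gradient and of its square, each with bias correction). The function name `adam_minimize`, the toy objective, and the learning rate of 0.1 are assumptions for this example; the values of `beta1`, `beta2`, and `eps` are the usual defaults.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimal Adam loop over a list of parameters."""
    x = list(x0)
    m = [0.0] * len(x)  # first-moment estimate (running mean of gradients)
    v = [0.0] * len(x)  # second-moment estimate (running mean of squared gradients)
    for t in range(1, steps + 1):
        g = grad(x)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1.0 - beta1) * gi
            v[i] = beta2 * v[i] + (1.0 - beta2) * gi * gi
            # Bias correction compensates for m and v starting at zero.
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            # Each parameter gets its own step size, lr / (sqrt(v_hat) + eps).
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Hypothetical objective with unequal curvature: f(x, y) = (x - 3)^2 + 10 * (y + 1)^2.
def scaled_quadratic_grad(params):
    x, y = params
    return [2.0 * (x - 3.0), 20.0 * (y + 1.0)]


adam_result = adam_minimize(scaled_quadratic_grad, x0=[0.0, 0.0])
print(adam_result)
```

Because the step is divided by the square root of `v_hat`, the steep `y` direction and the shallow `x` direction end up with differently scaled updates, which is the per-parameter adaptation the paragraph describes.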

Why should Adam not be the default algorithm?

The article “A Bounded Scheduling Method for Adaptive Gradient Methods”, published in September 2019, investigates the factors that lead to Adam’s poor performance when training complex neural networks. It summarizes the key factors behind Adam’s weak empirical generalization as follows:

- The non-uniform scaling of the gradients leads to the poor generalization performance of adaptive gradient methods. SGD scales the gradients uniformly, and its low training error tends to carry over to the test data.

- The exponential moving average used in Adam cannot make the learning rate decrease monotonically, which can cause Adam to fail to converge to an optimal solution and leads to poor generalization performance.

- The learning rate learned by Adam may, in some circumstances, be too small for effective convergence, so that it fails to find the right path and converges to a suboptimal point.

- Adam may aggressively increase the learning rate, which is detrimental to the overall performance of the algorithm.
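The points about the learning rate can be illustrated by tracking only the per-parameter scaling factor that Adam applies, lr / (sqrt(v_hat) + eps), for a single parameter. This is a sketch under stated assumptions, not an experiment from the cited article: the helper name `effective_step_sizes` and the synthetic gradient stream are made up for the example, and the first-moment term is deliberately left out.

```python
import math


def effective_step_sizes(grads, lr=0.001, beta2=0.999, eps=1e-8):
    """Per-step scaling factor lr / (sqrt(v_hat) + eps) for one parameter."""
    v = 0.0
    sizes = []
    for t, g in enumerate(grads, start=1):
        v = beta2 * v + (1.0 - beta2) * g * g  # moving average of squared gradients
        v_hat = v / (1.0 - beta2 ** t)         # bias-corrected second moment
        sizes.append(lr / (math.sqrt(v_hat) + eps))
    return sizes


# Hypothetical gradient stream: large gradients, a long quiet stretch, then a spike.
grads = [1.0] * 50 + [0.01] * 2000 + [1.0] * 50
sizes = effective_step_sizes(grads)

after_large = sizes[49]    # end of the initial large-gradient phase
after_quiet = sizes[2049]  # end of the quiet stretch
after_spike = sizes[-1]    # end of the final spike
print(after_large, after_quiet, after_spike)
```

In this toy stream the scaling factor grows several times over during the quiet stretch and then drops again after the spike, so it is neither monotonically decreasing nor bounded from above by the base learning rate.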

The story so far…

Despite converging faster, adaptive gradient algorithms usually generalize worse than SGD. Specifically, adaptive gradient algorithms often make faster progress in the training phase, but their performance quickly plateaus on test data. In contrast, SGD usually improves model performance slowly but can achieve higher test performance. One empirical explanation for this generalization gap is that adaptive gradient algorithms tend to converge to sharp minima, whose local basins have large curvature and which usually generalize poorly, while SGD prefers flat minima and thus generalizes better.

Remember, this doesn’t negate the contribution of adaptive gradient methods to learning the parameters of a neural network. Rather, it warrants experimentation with SGD and other non-adaptive gradient methods. With this piece, I’ve tried to make the case for including non-adaptive gradient methods in your ML experiments. Blindly setting Adam as the default algorithm may not be the best approach.

If you’ve read this far, thank you for your patience. I hope this piece has served as a primer and that you are taking away something of value. Let me know your thoughts.
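A one-dimensional toy example can make the sharp-versus-flat intuition concrete. The sketch below is only an illustration of that intuition, under loud assumptions: the two quadratic losses, the helper name `curvature`, and the idea of modeling a train/test mismatch as a small shift in the parameter are all hypothetical choices for this example.

```python
def curvature(f, x, h=1e-3):
    """Central finite-difference estimate of the second derivative of f at x."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


# Hypothetical losses that share the same minimum (value 0 at x = 0).
def sharp_loss(x):
    return 50.0 * x * x  # narrow basin, large curvature


def flat_loss(x):
    return 0.5 * x * x   # wide basin, small curvature


sharp_curvature = curvature(sharp_loss, 0.0)
flat_curvature = curvature(flat_loss, 0.0)

# Assumption: a small parameter shift stands in for a train/test mismatch.
shift = 0.1
sharp_penalty = sharp_loss(shift) - sharp_loss(0.0)
flat_penalty = flat_loss(shift) - flat_loss(0.0)
print(sharp_curvature, flat_curvature, sharp_penalty, flat_penalty)
```

Both minima look equally good at the exact optimum, but the same small shift costs about a hundred times more loss in the sharp basin than in the flat one, which is the usual argument for why flat minima are expected to generalize better.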

References:

a) A Bounded Scheduling Method for Adaptive Gradient Methods

b) The Marginal Value of Adaptive Gradient Methods in Machine Learning

c) Improving Generalization Performance by Switching from Adam to SGD

d) Towards Theoretically Understanding Why SGD Generalizes Better Than ADAM in Deep Learning

