Arcadia: Put your LLMs to Work — Part I: Setup

Last Updated on April 3, 2024 by Editorial Team

Author(s): Tim Cvetko

Originally published on Towards AI.

Part I of the 4-Part DocuSeries on Building Arcadia: the End-to-End Platform for ML Model API Billing

I call it the Anti-HuggingFace. It’s bold, capitalist, and presumptuous, and I always wanted to build it.

Back when I was conducting research for a biotech company, my brother and I ran into a difficult challenge.

“How can we let another company use our proprietary ML models without giving them access to the models themselves?”

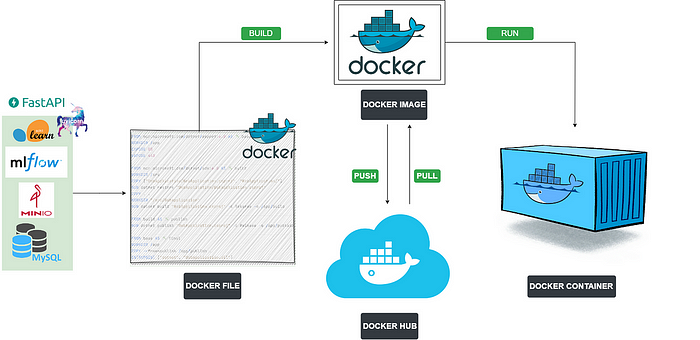

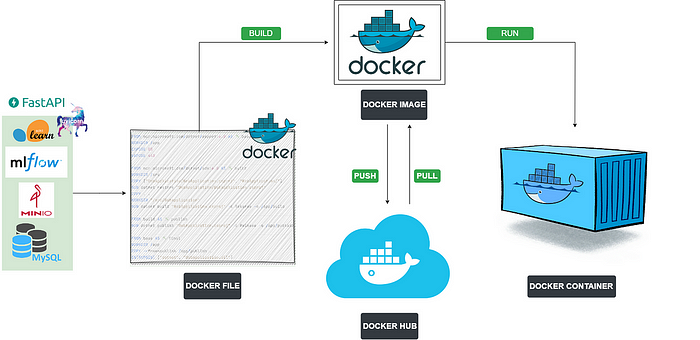

The answer is: you don’t. You containerize the ML model and expose an external endpoint for API inference, so customers get predictions without ever accessing the model itself.

Typical System Design for Model Inference

API Billing: How can we ensure our users pay per API call without heavy implementation overhead?

Ensuring hosting, scalability, and single-call SDK access.
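Here is a minimal sketch of the containerize-and-expose idea. It assumes a Python service built only on the standard library's `http.server`. The `predict` function, its toy weights, the `/predict` route, and port 8080 are hypothetical placeholders, not Arcadia's actual code. Clients send features and receive only a score, so the model never leaves the server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    # Hypothetical stand-in for a proprietary model; these weights stay server-side.
    weights = [0.4, -0.2, 0.1]
    return sum(w * x for w, x in zip(weights, features))


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only one (hypothetical) route is exposed: POST /predict
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            score = predict(payload["features"])
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing "features", or non-numeric inputs
            self.send_error(400, "Expected JSON with a 'features' list")
            return
        body = json.dumps({"score": score}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for this sketch


def make_server(host="0.0.0.0", port=8080):
    # 0.0.0.0 makes the endpoint reachable from outside the container.
    return HTTPServer((host, port), InferenceHandler)
```

Inside a container, the entrypoint would call `make_server().serve_forever()` and publish port 8080. A production deployment would more likely run behind a dedicated WSGI/ASGI server, but the contract stays the same: inputs go in, predictions come out, and the weights stay private.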

That’s why I created Arcadia, an end-to-end platform for uploading your ML models for inference and charging for their API usage.
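At its core, pay-per-call billing is metering. Each request is authenticated, successful inferences are counted per customer, and the count is multiplied by a price. The in-memory `UsageMeter` below is a hypothetical sketch of that idea, not Arcadia's billing engine. The API keys and the per-call price are made up, and a real system would persist the counts rather than keep them in memory.

```python
import functools
from collections import Counter
from decimal import Decimal


class UsageMeter:
    """Hypothetical in-memory meter: counts billable inference calls per API key."""

    def __init__(self, price_per_call, valid_keys):
        self.price_per_call = Decimal(price_per_call)  # Decimal avoids float rounding on money
        self.valid_keys = set(valid_keys)
        self.calls = Counter()

    def metered(self, model_fn):
        # Wrap any model function so each successful call is charged to the caller's key.
        @functools.wraps(model_fn)
        def wrapper(api_key, *args, **kwargs):
            if api_key not in self.valid_keys:
                raise PermissionError("unknown API key")
            result = model_fn(*args, **kwargs)
            self.calls[api_key] += 1  # charge only after inference succeeded
            return result
        return wrapper

    def invoice(self, api_key):
        # Amount owed so far: number of calls times the per-call price.
        return self.calls[api_key] * self.price_per_call


# Example usage with a toy "model" and a made-up price of 0.002 per call
meter = UsageMeter("0.002", ["key-a", "key-b"])
double = meter.metered(lambda x: 2 * x)
double("key-a", 3)
double("key-a", 4)
print(meter.invoice("key-a"))  # prints 0.004
```

Wrapping the model function keeps the billing logic in one place, so each uploaded model does not need its own accounting code.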

Watch the first Arcadia demo, and sign up for the waitlist here.

Here’s THE thing: I built Arcadia out of my own necessity, and to help ML teams facing the same problem. I did what you are not supposed to do: I built it first. And I don’t care if they come. It solved my problem.

Throughout this 4-Part Series, I’m going to take you on a journey of experimentation, frustration, and ultimately the creation of Arcadia — the platform for exposing your existing ML/LLMs to the world with zero… Read the full blog for free on Medium.

Note: Content contains the views of the contributing authors and not Towards AI.