Vector Databases 101: A Beginner’s Guide to Vector Search and Indexing

Last Updated on February 20, 2025 by Editorial Team

Author(s): Afaque Umer

Originally published on Towards AI.


Introduction

Alright, folks! Ever wondered how search engines find that one perfect cat meme out of a billion images? Or how Netflix somehow knows you’ll love that obscure sci-fi thriller? The secret sauce behind all of this is vector search and vector databases, helping power similarity-based recommendations and retrieval!

With AI and Large Language Models (LLMs) taking over the world (hopefully not like Skynet 🤖), we need smarter ways to store and retrieve high-dimensional data. Traditional databases? They tap out: they’re built to match exact values, not to find the “closest” items among millions of high-dimensional vectors. That’s where vector databases step in, designed specifically for similarity searches, recommendation engines, and AI-powered applications.

In this short blog, we’re diving deep into vector databases: what they are, how they work, and, most importantly, how to use them like a pro. 🧑‍💻 By the end, you’ll not only understand the theory but also get hands-on experience. Here’s what we’ll cover: 👇

✅ What vector databases are 🤔

✅ How they work ⚙️

✅ Key components 🔑

✅ Indexing techniques 📌

✅ Popular databases 🌍

✅ Real-world use cases 🚀

✅ Hands-on implementation 💻

Buckle up, because this is going to be a ride! 🎢 But hey, fair warning — some theoretical bits ahead! 😴📖 Stick with me, though, because once we power through, you’ll be flexing your vector database skills like a champ. 😎🔥

Section 1: What is a Vector Database? (And Why Should You Care?)

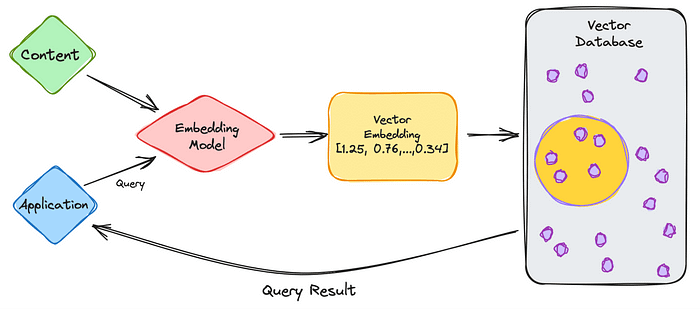

A vector database is a specialized database designed to efficiently store, index, and query high-dimensional vectors — numerical representations of unstructured data such as text, images, and audio. These vectors are typically generated by machine learning models and enable fast similarity searches that power AI-driven applications like recommendation engines, image recognition, and natural language processing.

How is it Different from Traditional Databases?

Traditional search relies on discrete tokens like keywords, tags, or metadata to retrieve exact matches. For instance, searching for “laptop” in a traditional database would return results containing the word “laptop” but miss related terms like “notebook” or “ultrabook.”

In contrast, vector search represents data as dense vectors in a continuous mathematical space, capturing deeper relationships between concepts. This enables similarity-based retrieval, so a vector search for “laptop” might also return results for “MacBook” or “gaming PC” based on contextual similarity rather than exact wording. Together, a vector’s dimensions encode latent features learned by the model, helping AI-driven applications understand meaning beyond keywords.
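To make the contrast concrete, here is a toy sketch in Python. The three-number “embeddings” are made up for illustration (a real embedding model produces hundreds of learned dimensions), and `keyword_search` and `vector_search` are hypothetical helper names, not a library API.

```python
import math

# Hypothetical 3-dimensional "embeddings", hand-picked for illustration only.
docs = {
    "laptop": [0.90, 0.80, 0.10],
    "notebook": [0.85, 0.75, 0.20],
    "ultrabook": [0.80, 0.90, 0.15],
    "banana": [0.05, 0.10, 0.95],
}


def keyword_search(query, titles):
    """Exact token match: return only titles that literally contain the query word."""
    return [t for t in titles if query in t.split()]


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_search(query_vec, corpus, k=3):
    """Rank every document by cosine similarity to the query vector."""
    ranked = sorted(corpus, key=lambda name: cosine(query_vec, corpus[name]), reverse=True)
    return ranked[:k]


print(keyword_search("laptop", docs))       # ['laptop']
print(vector_search(docs["laptop"], docs))  # ['laptop', 'notebook', 'ultrabook']
```

Keyword matching finds only the literal “laptop”, while the similarity ranking also surfaces “notebook” and “ultrabook” because their vectors point in nearly the same direction.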

Section 2: How Do Vector Databases Work?

Imagine you’re at a music store looking for songs similar to your favorite jazz track. A traditional database would only find exact matches: songs with the same title or artist. But a vector database works like an expert DJ who understands the vibe of the music. If you ask for “smooth jazz,” it might also suggest tracks from blues or lo-fi genres that have a similar feel, even if they don’t explicitly mention jazz in their metadata.

Just like that DJ, vector databases analyze the deeper relationships within data, enabling smart and relevant recommendations.

Vector databases are designed to store, index, and query high-dimensional vectors, which are numerical representations of data like text, images, or audio. These vectors are typically generated by machine learning models (e.g., Word2Vec, BERT, ResNet) and capture the semantic meaning or features of the data.

But here’s the catch: scanning millions of vectors one by one (a brute-force k-Nearest Neighbors, or KNN, search) is painfully slow. Instead, vector databases rely on Approximate Nearest Neighbors (ANN) techniques, which trade a small, tunable amount of accuracy (an occasional true neighbor is missed) for massive speed improvements. ANN methods (like HNSW, IVF, and LSH) organize vectors into graphs, clusters, or hash buckets, so instead of blindly searching everything, they can retrieve the most relevant vectors, often in milliseconds.
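Here is what that brute-force baseline looks like as a minimal NumPy sketch, assuming random vectors as stand-ins for real embeddings; `brute_force_knn` is a hypothetical helper. Every query touches all N stored vectors, which is exactly the cost ANN indexes try to avoid.

```python
import numpy as np


def brute_force_knn(database, query, k=5):
    """Exact k-NN: compare the query with every stored vector (O(N * d) per query)."""
    dists = np.sum((database - query) ** 2, axis=1)  # squared L2 distance to all N rows
    idx = np.argpartition(dists, k)[:k]               # the k smallest, in arbitrary order
    idx = idx[np.argsort(dists[idx])]                 # sort just those k, nearest first
    return idx, dists[idx]


rng = np.random.default_rng(0)
database = rng.standard_normal((50_000, 128)).astype(np.float32)  # stand-in embeddings
query = database[42] + 0.01 * rng.standard_normal(128).astype(np.float32)
ids, dists = brute_force_knn(database, query, k=5)
print(ids[0])  # 42: the vector the query was derived from
```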

In short, vector databases don’t just store data; they make finding meaning inside that data lightning-fast. 🚀

Okay, let’s break this pipeline into steps:

1️⃣ Vectorization: Turning Songs into Numbers

🎼 What Happens? Each song is analyzed for its characteristics: tempo, mood, instruments, and even lyrics. An embedding model converts these features into a high-dimensional vector, a numerical representation of the song.

🔹 Example: A chill acoustic track might be transformed into a vector like [0.12, -0.87, 0.45, …], capturing its melody, rhythm, and feel.

💡 Why? This lets the system understand music in a way that allows for similarity comparisons, just like how humans recognize familiar vibes in different songs.

2️⃣ Storing Vectors: Organizing the Music Library

📀 What Happens? These song vectors are stored in a database, often with metadata like title, artist, genre, and release date for additional context.

🔹 Example: The vector for an indie folk song might be stored with { "title": "Sunset Drive", "artist": "IndieSoul", "mood": "relaxing" }.

💡 Why? Efficient storage ensures fast and scalable song recommendations and retrieval.

3️⃣ Indexing: Making Search Fast & Efficient

🗂 What Happens? Just like organizing a playlist, the database groups similar songs together using indexing algorithms (HNSW, IVF, Annoy).

🔹 Example: Songs with similar moods and tempos are clustered together, making it faster to find related music.

💡 Why? Without indexing, the system would have to compare every song — which would be painfully slow as the library grows!

4️⃣ Querying: Finding Similar Songs

🔍 What Happens? When you ask for a recommendation, the app translates your song choice into a vector and searches for the most similar ones.

🔹 Example: Searching for a lo-fi jazz track might return similar chill beats, soft piano instrumentals, or even acoustic covers, even if they aren’t tagged under “lo-fi jazz.”

💡 Why? This enables personalized song recommendations beyond exact genre labels.

5️⃣ Distance Metrics: Measuring Musical Similarity

📏 What Happens? The database compares your song vector to others using similarity metrics to find the best matches.

📌 Common Metrics:

✔ Cosine Similarity → Measures how “aligned” two songs are in terms of mood & melody.

✔ Euclidean Distance → Measures how “far apart” two songs are in the musical space.

✔ Dot Product → Combines how aligned two songs are with the length (magnitude) of their vectors; for normalized vectors, it equals cosine similarity.

🔹 Example: If the angle between two song vectors is small (cosine similarity close to 1), the songs likely share a similar melodic structure and feel.

💡 Why? This helps return musically relevant tracks instead of just relying on artist or genre.
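A small NumPy sketch of the three metrics, using two made-up song vectors (the numbers are illustrative, not output from a real model). It also checks a handy identity: once vectors are L2-normalized, the dot product equals cosine similarity and the squared Euclidean distance equals 2 − 2·cosine, so all three rank neighbors the same way.

```python
import numpy as np

# Two hypothetical song vectors (illustrative numbers, not real model output).
a = np.array([0.12, -0.87, 0.45])
b = np.array([0.10, -0.80, 0.50])

cosine = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))  # 1.0 = same direction
euclidean = np.linalg.norm(a - b)                          # 0.0 = identical vectors
dot = a @ b                                                # depends on angle AND length
print(f"cosine={cosine:.3f} euclidean={euclidean:.3f} dot={dot:.3f}")

# For unit-length vectors the three metrics rank neighbors identically:
a_n, b_n = a / np.linalg.norm(a), b / np.linalg.norm(b)
assert np.isclose(a_n @ b_n, cosine)                                # dot == cosine
assert np.isclose(np.linalg.norm(a_n - b_n) ** 2, 2 - 2 * cosine)  # ||a-b||^2 == 2 - 2cos
```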

6️⃣ Retrieval: Serving You the Best Recommendations

What Happens?

Once the database identifies the top-k most similar vectors, it retrieves them along with relevant metadata. These results aren’t just random — they are ranked by similarity scores, ensuring the most relevant items appear first.

Example:

Imagine you’re searching for “chill acoustic music” in a streaming app. Instead of just returning exact matches, the database finds songs that have similar vibes based on their vector representations. Your query might return:

Vector ID: 123, Score: 0.92

Metadata: {"title": "Soft Sunset", "artist": "Indie Waves", "genre": "acoustic"}

Vector ID: 456, Score: 0.89

Metadata: {"title": "Morning Breeze", "artist": "Calm Strings", "genre": "folk"}
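A minimal sketch of top-k retrieval with scores and metadata, assuming cosine similarity and a brute-force scan. The vectors, the third song (“Bass Drop”), and the `top_k` helper are hypothetical, not any database’s API; a real vector database typically does the same kind of ranking, but over an ANN index instead of a full scan.

```python
import numpy as np

# Hypothetical catalog: one vector per song plus its metadata.
ids = [123, 456, 789]
vectors = np.array([
    [0.90, 0.10, 0.00],
    [0.80, 0.30, 0.10],
    [0.00, 0.20, 0.95],
])
metadata = [
    {"title": "Soft Sunset", "artist": "Indie Waves", "genre": "acoustic"},
    {"title": "Morning Breeze", "artist": "Calm Strings", "genre": "folk"},
    {"title": "Bass Drop", "artist": "Club Nights", "genre": "edm"},
]


def top_k(query, k=2):
    """Score every song by cosine similarity and return the best k, highest first."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    scores = unit @ (query / np.linalg.norm(query))
    order = np.argsort(-scores)[:k]
    return [{"id": ids[i], "score": round(float(scores[i]), 2), "metadata": metadata[i]}
            for i in order]


for hit in top_k(np.array([1.0, 0.15, 0.0])):  # hypothetical "chill acoustic" query vector
    print(hit)
```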

Filtering for Relevance 🔍

Before returning the results, an optional filtering step ensures the results align with specific criteria. This can be applied before or after similarity search:

✅ Pre-filtering: Restrict search to a specific genre (e.g., only acoustic songs).

✅ Post-filtering: Exclude tracks you’ve already played or those marked as explicit.
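The difference between the two filtering modes can be sketched in plain Python. The catalog, its fields (`genre`, `explicit`), and the helper names are hypothetical; the point is that pre-filtering shrinks the candidate set before ranking, while post-filtering ranks first and can leave you with fewer than k results.

```python
import math

# Hypothetical catalog with metadata fields used for filtering.
songs = [
    {"id": 1, "genre": "acoustic", "explicit": False, "vec": [0.90, 0.10]},
    {"id": 2, "genre": "folk", "explicit": False, "vec": [0.85, 0.20]},
    {"id": 3, "genre": "acoustic", "explicit": True, "vec": [0.80, 0.30]},
    {"id": 4, "genre": "acoustic", "explicit": False, "vec": [0.20, 0.90]},
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def rank(query, candidates, k):
    """Brute-force similarity ranking over the given candidates."""
    return sorted(candidates, key=lambda s: cosine(query, s["vec"]), reverse=True)[:k]


def pre_filter_search(query, k, genre):
    # Filter FIRST, then rank: returns up to k songs that all match the filter.
    return rank(query, [s for s in songs if s["genre"] == genre], k)


def post_filter_search(query, k, played_ids):
    # Rank FIRST, then drop played/explicit hits: may return fewer than k.
    hits = rank(query, songs, k)
    return [s for s in hits if s["id"] not in played_ids and not s["explicit"]]


query = [1.0, 0.0]
print([s["id"] for s in pre_filter_search(query, k=2, genre="acoustic")])  # [1, 3]
print([s["id"] for s in post_filter_search(query, k=3, played_ids={1})])  # [2]
```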

Why?

This refined retrieval process allows applications to:

🎵 Recommend personalized playlists in music apps.

📸 Find similar images in a photo search engine.

🛍️ Suggest related products in e-commerce.

By combining vector search with filtering, the database returns results that are both similar to the query and relevant to the user, making the search experience smarter and more intuitive! 🚀

That’s how vector databases revolutionize search and recommendations — whether for music, images, or text! 🚀

Section 3: Indexing Techniques: Making Queries Faster ⚡

Alrighty folks, now we get to the main engine that makes vector searches fast and scalable — Indexing! 🏎️

Without proper indexing, searching through millions (or billions) of vectors would be like manually skimming through an ocean of data — painfully slow and computationally expensive. Indexing structures data in a way that allows for efficient retrieval, making vector searches significantly faster.

When we step back and look at indexing techniques from a higher-level perspective, all these methods can be grouped into four main categories of indexing algorithms:

- Tree-Based Algorithms

- Graph-Based Algorithms

- Hash-Based Algorithms

- Quantization-Based Algorithms

All vector indexing techniques generally fall into one of these four categories. However, modern methods often combine multiple techniques — for example, IVF-PQ blends quantization and clustering, while HNSW merges graph-based and hierarchical approaches for better efficiency.

The key is to balance speed, accuracy, and memory based on your specific use case. 🚀 While there are many indexing methods, some of the most commonly used ones include:

Flat Index (Brute Force)

IVF (Inverted File Index)

HNSW (Hierarchical Navigable Small World)

ANNOY (Approximate Nearest Neighbors Oh Yeah)

LSH (Locality-Sensitive Hashing)

PQ (Product Quantization)

Faiss Index Variants (e.g., IVF-PQ, IVF-HNSW, OPQ)

We can dive deeper into the full range of indexing methods in another blog, but for now, let’s focus on the most widely used techniques.

HNSW (Hierarchical Navigable Small World) — Graph-Based Magic ✨

🛠️ How It Works:

HNSW is a graph-based indexing technique that builds a multi-layered graph to store high-dimensional vectors. The key idea is to create a small-world network, where vectors are connected based on their similarity, allowing for fast nearest-neighbor searches.

🔥 Why It’s Awesome:

- Instead of searching through all vectors, it navigates the graph by jumping from one node to another, reducing search time.

- Uses hierarchical layers, where the top layers provide a broad search scope, and lower layers refine the results.

- Highly accurate and faster than tree-based or brute-force approaches in high-dimensional spaces.

⚖️ Trade-offs:

✔ Fast search times 🔥

✔ High accuracy 🎯

❌ Memory-intensive due to graph structure 🧠

📌 When to Use It?

- When speed and accuracy are critical.

- When dealing with high-dimensional data (e.g., embeddings from large neural networks).

- When you can afford higher memory usage for faster retrieval.

IVF (Inverted File Index) — Divide & Conquer 🏗️

🛠️ How It Works:

IVF (Inverted File Index) is a cluster-based technique that divides the entire vector space into smaller clusters (or cells). When querying, the system first identifies the most relevant clusters and then performs a localized search within them.

🔥 Why It’s Awesome:

- Instead of scanning millions of vectors, it narrows down the search to only a few relevant clusters.

- Faster than brute-force search, especially for large-scale datasets.

- Works well when combined with other methods like PQ (Product Quantization) to improve efficiency.

⚖️ Trade-offs:

✔ Significantly faster than brute force 🚀

✔ More memory-efficient than graph-based methods 📦

❌ Can lose accuracy if too few clusters are searched ❗

❌ Cluster quality affects retrieval performance

📌 When to Use It?

- When handling large-scale datasets where brute-force search is impractical.

- When memory constraints are an issue.

- When exact accuracy isn’t crucial, but speed is.

Flat Index (Brute Force) — The No-Frills Workhorse 🔍

🛠️ How It Works:

The simplest indexing technique: store all vectors as they are and perform an exhaustive search across the entire dataset. This means comparing every single vector against the query vector to find the nearest neighbors.

🔥 Why It’s Awesome:

- Perfect accuracy — it always finds the exact nearest neighbors since it scans everything.

- No additional indexing structures needed, so it’s easy to implement.

- Works well for small datasets where search speed isn’t a major concern.

⚖️ Trade-offs:

✔ 100% accuracy 🎯

✔ Minimal setup required 🛠️

❌ Very slow for large datasets 🐌

❌ Computationally expensive ⚡

📌 When to Use It?

- When you have small datasets and don’t need fancy indexing.

- When accuracy is the top priority and speed isn’t a concern.

- When comparing against other indexing methods to measure performance trade-offs.

Which One Should You Choose? 🤔

It depends on your use case:

✅ Need fast + accurate search? → HNSW

✅ Handling massive datasets with speed constraints? → IVF

✅ Want perfect accuracy and don’t care about speed? → Flat Index

For large-scale applications, hybrid approaches (e.g., IVF-PQ, HNSW with quantization) are often used to balance speed, accuracy, and memory efficiency.

💡 We’ll cover more indexing techniques in future blogs, but for now, these are the heavy hitters. That wraps up our indexing section!

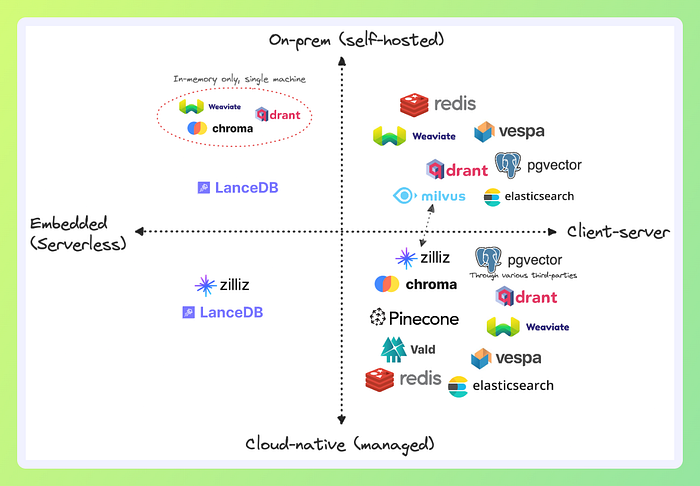

Section 4: Popular Vector Databases (The Heavyweights 🎖️)

A top-tier vector database isn’t just about storing vectors — it needs to handle large-scale, high-dimensional data efficiently while ensuring fast and accurate retrieval. The best vector databases are designed to scale, integrate seamlessly with machine learning workflows, and provide robust search capabilities. Here are the key features that make a vector database stand out:

- Scalability & Adaptability: Handles millions to billions of vectors efficiently, scaling across multiple nodes while optimizing query and insertion rates.

- High-Performance Indexing & Search: Utilizes optimized Approximate Nearest Neighbor (ANN) search techniques like HNSW, IVF, or PQ for lightning-fast retrieval.

- Multi-Tenancy & Data Privacy: Ensures data isolation between users while allowing controlled data sharing when required.

- Comprehensive API & SDK Support: Offers integration with various programming languages (Python, Java, Go, etc.) to fit into diverse application ecosystems.

- Efficient Storage & Memory Management: Uses compression, quantization, and optimized indexing to reduce memory usage while keeping accuracy loss small.

- Hybrid Search Support: Allows combining vector search with traditional keyword or metadata filtering for more refined results.

- Streaming & Real-Time Updates: Supports dynamic updates without requiring complete re-indexing, enabling real-time data ingestion.

- Fault Tolerance & High Availability: Ensures reliability with replication, backup strategies, and a distributed architecture.

- User-Friendly Interfaces & Visualization: Provides intuitive dashboards or tools for monitoring, querying, and managing vector data.

Top Vector Databases

There are several vector databases available, each optimized for different use cases. Here are some of the most popular ones:

Pinecone — A fully managed, high-performance vector database designed for real-time applications and AI-powered search.

FAISS (Facebook AI Similarity Search) — An open-source library optimized for efficient similarity search and clustering of dense vectors.

Milvus — A scalable, cloud-native open-source vector database that supports hybrid search and real-time indexing.

Weaviate — A powerful vector database with built-in semantic search and machine learning capabilities.

Annoy (Approximate Nearest Neighbors Oh Yeah) — A lightweight and memory-efficient C++/Python library for fast nearest neighbor search.

Vespa — A scalable search engine that combines vector search with structured data filtering for hybrid search use cases.

Redis — A high-speed in-memory database that supports vector search through its RediSearch module (part of Redis Stack).

These databases cater to different needs, from real-time AI applications to large-scale retrieval tasks. Choosing the right one depends on factors like scalability, latency, memory efficiency, and integration with your existing stack. 🚀

How to Choose the Right Vector Database?

When selecting a vector database, consider the following factors:

- Scalability: Can it handle your dataset size and query volume?

- Performance: Does it meet your latency and throughput requirements?

- Ease of Use: Is it easy to integrate with your existing stack?

- Features: Does it support hybrid search, filtering, or other advanced features?

- Cost: Is it open-source, or does it require a paid subscription?

Section 5: Real-World Use Cases: Where Vector DBs Shine 🔥

- Semantic Search: Finds relevant documents, images, or videos based on meaning rather than exact keywords (e.g., AI-powered search engines).

- Recommendation Systems: Suggests similar products, songs, or movies based on user behavior and vector embeddings (e.g., e-commerce, streaming platforms).

- Fraud Detection & Anomaly Detection: Identifies unusual patterns in transactions or network traffic to prevent fraud and cybersecurity threats.

- Image & Video Search: Enables reverse image search and content-based retrieval by comparing feature vectors of images and videos.

- Chatbots & Conversational AI: Enhances chatbot responses by retrieving semantically similar queries and answers from large knowledge bases.

- Personalized Healthcare & Drug Discovery: Matches medical records, genomic data, or molecular structures to find relevant treatments or drug candidates.

- Code Search & Developer Tools: Helps developers find similar code snippets or resolve errors based on past solutions.

- Multimodal Search: Allows cross-modal search (e.g., finding images based on text descriptions) by aligning vectors across different data types.

Section 6: Hands-on Implementation: Build Your First Vector Search with FAISS

Alright, Alright, Alright — We’re Finally Here!

This is the moment we’ve been waiting for! Enough with the theory: it’s time to walk the talk and see a vector database in action.

We’ll start with a simple example:

- Create a vector database

- Add some random text samples from different domains

- Run queries and observe what vectors it retrieves

For simplicity, we’ll use FAISS, an in-memory vector search library. The process is much the same with a full vector database; the main difference is that you connect to a running server (or spin up a Docker container if you’re going big).

Let’s dive in and see some real magic happen! 🚀

First, let’s import the necessary libraries 🔃

Next, we’ll use a small dataset of sentences grouped into four categories:

Now to make the data easier to work with, we’ll flatten it into a list of sentences and track their categories:
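A minimal sketch of these two steps, using hypothetical sentences in four made-up categories (not the article’s exact data): define the grouped data, then flatten it into parallel `sentences` and `categories` lists so that row i of the later embedding matrix maps back to its text and label.

```python
# Hypothetical sample data: four made-up categories, three sentences each.
data = {
    "Technology": [
        "The new smartphone has a faster processor and a better camera.",
        "Cloud computing lets companies scale their servers on demand.",
        "This laptop has 16 GB of RAM and a long-lasting battery.",
    ],
    "Sports": [
        "The striker scored twice in the championship final.",
        "She finished the marathon in under three hours.",
        "The basketball team won in overtime last night.",
    ],
    "Food": [
        "This pasta recipe uses fresh basil, garlic, and olive oil.",
        "Sourdough bread needs a long, slow fermentation.",
        "The café serves the best chocolate croissants in town.",
    ],
    "Space": [
        "Mars is often called the Red Planet.",
        "The telescope captured images of a distant galaxy.",
        "Astronauts live aboard the International Space Station for months.",
    ],
}

# Flatten into parallel lists: row i of the embedding matrix will map back to
# sentences[i] and categories[i].
sentences, categories = [], []
for category, texts in data.items():
    for text in texts:
        sentences.append(text)
        categories.append(category)

print(len(sentences), categories[:3])
```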

Next, we’ll convert the sentences into embeddings (vector representations) using a pre-trained sentence-transformer model, which maps each sentence to a 384-dimensional vector.

Well done! We’ve got our index ready — now it’s time to put it to the test and see if our queries fetch the right results.

Next, we need a function that searches for the most relevant sentences for a given query.

Let’s test the search function with some example queries:

Wow! 🤩 Exactly what we were looking for! The results are in, and our vector search is doing its magic — fetching the most relevant matches with precision. Feels like unlocking a hidden superpower, doesn’t it?

Here’s the complete code for this operation 👇

Final Thoughts 💡

- FAISS provides efficient and fast nearest neighbor searches for large-scale vector data.

- This in-memory approach works well for small-scale use cases. For production, distributed FAISS or a vector database like Pinecone, Weaviate, or Milvus should be used.

- The process remains similar for other vector databases — you just replace FAISS with an actual DB connection.

That’s it! 🚀 Now that you’ve got FAISS up and running, it’s time to explore other vector databases. Try out Pinecone, Weaviate, or Milvus, and see how they compare! 🔍⚡

All right, all right, all right! With that, we come to the end of this blog.

I hope you enjoyed this article! You can follow me, Afaque Umer, for more articles like this.

I’ll keep bringing more machine learning and data science concepts your way and breaking down fancy-sounding terms into simpler ones.

Thanks for reading 🙏 Keep learning 🧠 Keep Sharing 🤝 Stay Awesome 🤘


Published via Towards AI