Email Assistant Powered by GPT-3

Last Updated on January 6, 2023 by Editorial Team

Last Updated on November 29, 2020 by Editorial Team

Author(s): Shubham Saboo

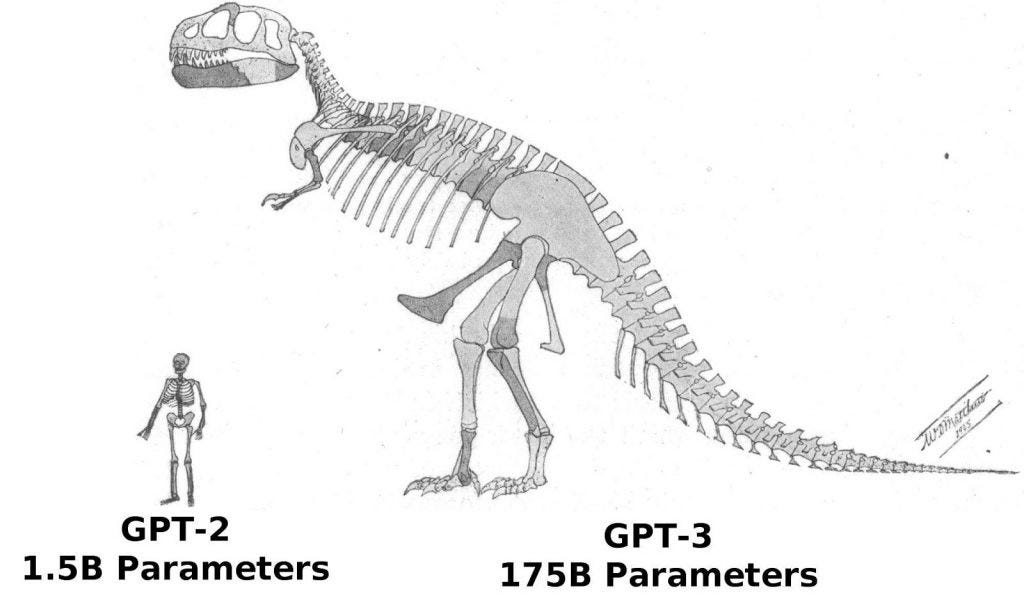

OpenAI recently released GPT-3, the successor to its GPT-2 language model. With 175 billion parameters, it is the largest language model trained so far.

What is GPT-3?

GPT-3 stands for Generative Pre-trained Transformer 3. It is an autoregressive language model that uses deep learning to produce human-like text across a variety of language tasks. It is the third-generation language model in the GPT-n series created by OpenAI [1].

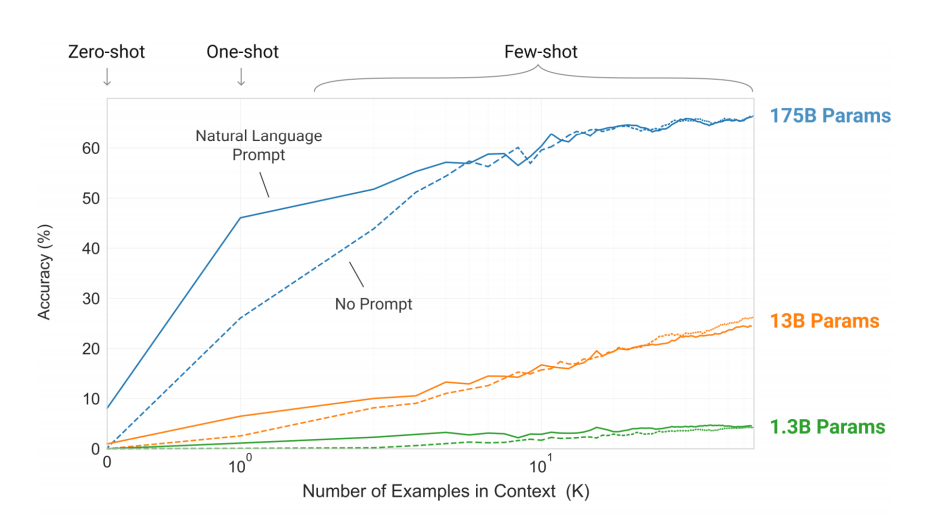

GPT-3 is a scaled-up version of the GPT-2 model architecture: it keeps GPT-2's modified initialization, pre-normalization, and reversible tokenization, and it shows strong performance on many NLP tasks in the zero-shot, one-shot, and few-shot settings.

The graph above shows GPT-3 outperforming the smaller models and achieving substantial gains on almost all of the NLP tasks. It follows the approach of pretraining on a large dataset followed by fine-tuning or priming for a specific task. Today’s AI systems struggle to maintain performance when switching among language tasks, but GPT-3 can switch among different language tasks flexibly and efficiently.

GPT-3 has 175 billion parameters, ten times more than any previous non-sparse language model. It has yielded a fascinating insight: scaling up the training of language models can significantly improve task-agnostic, few-shot performance, making it comparable to or even better than prior state-of-the-art (SOTA) approaches.
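
In the zero-, one-, and few-shot settings the task is specified entirely in the prompt: the settings differ only in how many solved demonstrations precede the new input, and no gradient updates are made to the model. The sketch below illustrates this; the "Input:/Output:" layout and the translation demos are illustrative assumptions, not the exact prompt format used in the GPT-3 paper.

```python
from typing import List, Tuple


def build_prompt(instruction: str, demos: List[Tuple[str, str]], query: str) -> str:
    """Assemble a prompt: task instruction, k solved demonstrations, then the query.

    k = 0 gives a zero-shot prompt, k = 1 a one-shot prompt, k > 1 a few-shot prompt.
    """
    parts = [instruction]
    for source, target in demos:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The last block leaves "Output:" open so the model's completion is the answer.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


def shot_setting(demos: List[Tuple[str, str]]) -> str:
    """Name the setting from the number of demonstrations in the prompt."""
    k = len(demos)
    return "zero-shot" if k == 0 else "one-shot" if k == 1 else "few-shot"


# Hypothetical demonstrations for an English-to-French task.
demos = [("cheese", "fromage"), ("sea otter", "loutre de mer")]
instruction = "Translate English to French."

for k in range(3):
    print(f"--- {shot_setting(demos[:k])} ---")
    print(build_prompt(instruction, demos[:k], "peppermint"))
```

Only the prompt text changes between settings, which is why the same deployed model can be pointed at a new task without retraining.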

Extrapolating the spectacular performance of GPT3 into the future suggests that the answer to life, the universe and everything is just 4.398 trillion parameters. — Geoffrey Hinton [3]

GPT-3 was built with both technical and non-technical audiences in mind: it doesn’t require complex gradient-based fine-tuning or weight updates, and its interface is straightforward and intuitive enough to be used by anyone with few or no prerequisites.

Access to GPT-3

Access to GPT-3 is provided through an API. Because of the model’s size, OpenAI decided not to release the full 175-billion-parameter model. Unlike current AI systems, which are designed for a single use case, GPT-3 is designed to be task-agnostic and provides a general-purpose “text in, text out” interface, giving users the flexibility to try it on virtually any language task.

Once you provide the API with a suitable text prompt, it processes the prompt on OpenAI’s servers and returns a text completion that tries to match the pattern you gave it. Unlike current deep learning systems, which require huge amounts of data to achieve SOTA performance, the API needs only a few examples to be primed for your downstream task [2].
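
The “text in, text out” round trip can be sketched with Python’s standard library alone. This is not the author’s code: it assumes OpenAI’s legacy Completions endpoint (`/v1/completions`), an assumed `gpt-3.5-turbo-instruct` model name (the original GPT-3 engines such as `davinci` have since been retired), and an API key stored in the `OPENAI_API_KEY` environment variable.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumption: legacy Completions endpoint and a stand-in model name, since the
# original GPT-3 engines (e.g. "davinci") are no longer served.
COMPLETIONS_URL = "https://api.openai.com/v1/completions"
DEFAULT_MODEL = "gpt-3.5-turbo-instruct"


def make_completion_request(prompt: str, model: str = DEFAULT_MODEL,
                            max_tokens: int = 200, temperature: float = 0.7,
                            api_key: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, the POST request that carries the prompt ("text in")."""
    key = api_key if api_key is not None else os.environ.get("OPENAI_API_KEY", "")
    body = {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {key}"},
        method="POST",
    )


def completion_text(response_json: dict) -> str:
    """Pull the generated text ("text out") from a Completions response body."""
    return response_json["choices"][0]["text"]


def complete(prompt: str, **kwargs) -> str:
    """Send the request and return the completion; needs network access and a valid key."""
    with urllib.request.urlopen(make_completion_request(prompt, **kwargs)) as resp:
        return completion_text(json.load(resp))
```

Keeping request construction separate from `complete` makes the payload easy to inspect without spending API credits; the official `openai` Python package wraps the same HTTP call.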

The API is designed to be simple and intuitive so that machine learning teams can be more productive. The idea behind releasing GPT-3 as an API was to let data teams focus on machine learning research rather than on distributed-systems problems.

GPT-3 in Action

To see GPT-3 in action, I have created an automated email assistant that can write human-like formal emails from minimal instructions. In the next part of this article, I will walk you through the workflow of the email assistant and the tech stack used to build it.

Tech Stack

- OpenAI GPT-3 API: The OpenAI API connects the application to the servers hosting the GPT-3 model, sending the input and fetching the output. With an API key, we get a simple and intuitive text-prompt interface (text in, text out) that can easily be used to prime the model and get predictions.

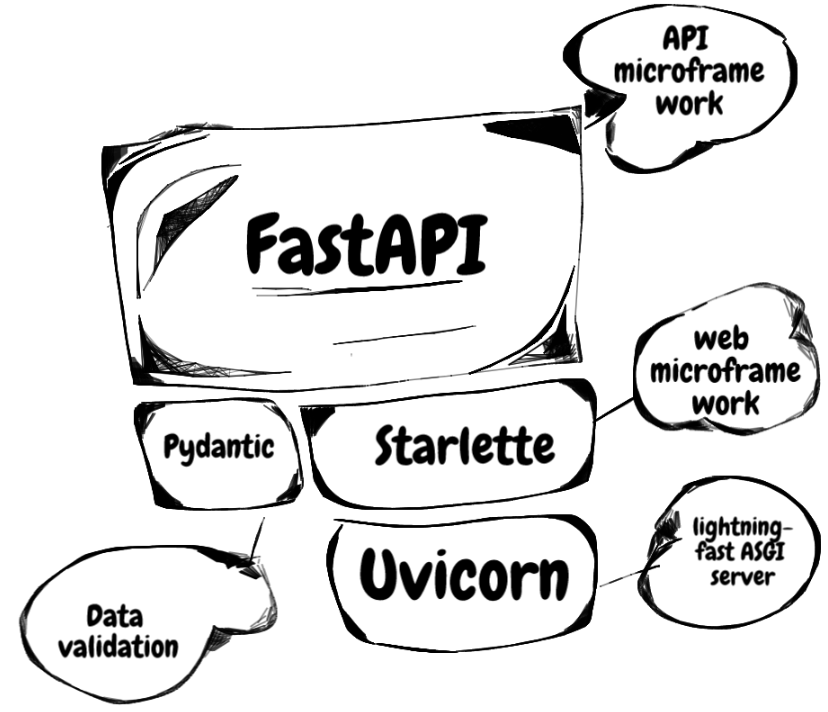

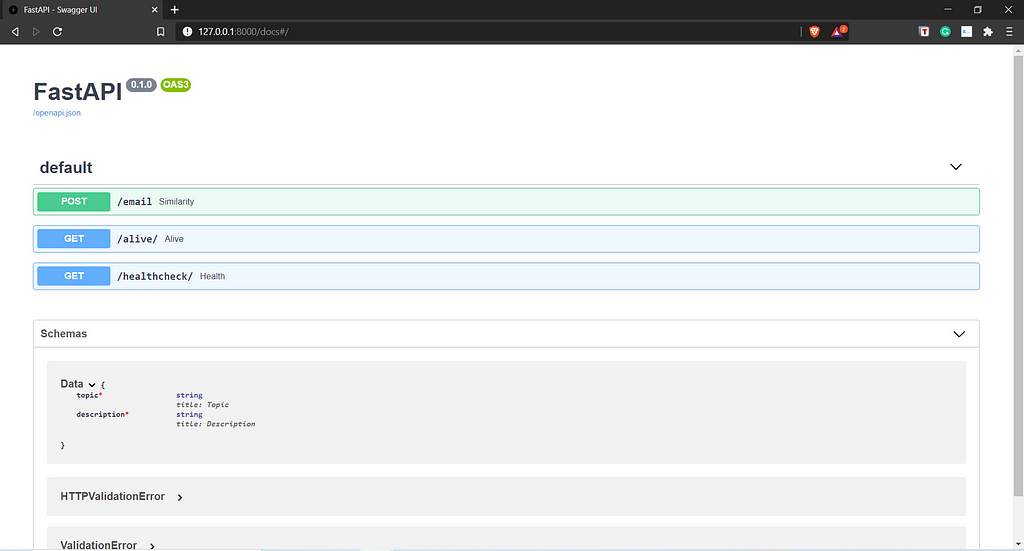

- FastAPI: FastAPI is used as a wrapper around our data application to expose its functionality as RESTful microservices. It is a modern, high-performance web framework for building APIs with Python. It is built on top of Starlette and Pydantic and is typically served with Uvicorn. To learn more about FastAPI, please refer to this article.

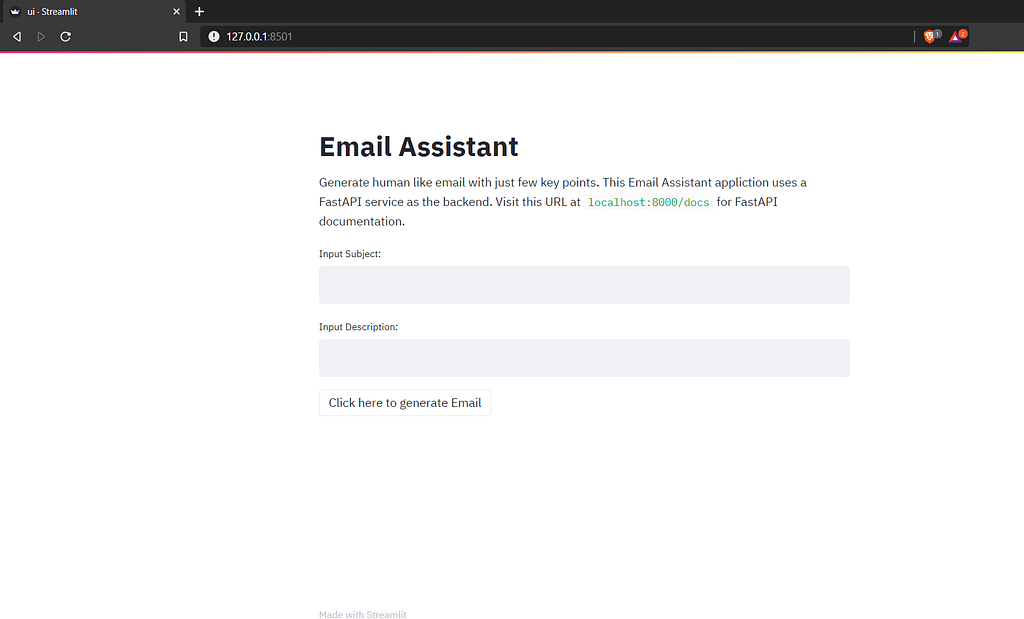

- Streamlit: We use Streamlit in the frontend to provide a simple yet elegant user interface for our application. It is an open-source Python library that can turn data scripts into shareable web applications within minutes. It makes it easy to build custom web applications with minimal effort and no knowledge of front-end frameworks (all in Python). To learn more about Streamlit, head over to this article.

Application Walkthrough

Now I will walk you through the email assistant application step by step:

I built the priming prompt around two main components. Prompt design is the most important step in priming the GPT-3 model to give a favorable response. The two main components of my prompt design are as follows:

- Subject: A one-liner to tell the assistant what the email is about.

- Description: One or two sentences of relevant information describing the main objectives of the email.
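
A minimal sketch of how the Subject and Description fields could be assembled into a few-shot priming prompt. The block layout, the `###` separator, the helper names, and the sample email are all hypothetical illustrations; the author’s actual prompt is not shown in the article.

```python
from typing import List, NamedTuple

SEPARATOR = "\n###\n"  # hypothetical delimiter between prompt blocks


class EmailExample(NamedTuple):
    subject: str      # one-line summary of what the email is about
    description: str  # one or two sentences with the email's main objectives
    email: str        # the finished formal email the model should imitate


def build_email_prompt(examples: List[EmailExample], subject: str, description: str) -> str:
    """Prime the model with solved examples, then leave the new email open."""
    blocks = [
        f"Subject: {ex.subject}\nDescription: {ex.description}\nEmail: {ex.email}"
        for ex in examples
    ]
    # The final block ends at "Email:" so the completion is the new email body.
    blocks.append(f"Subject: {subject}\nDescription: {description}\nEmail:")
    return SEPARATOR.join(blocks)


def extract_email(completion: str) -> str:
    """Keep only the first email if the model runs on into another block."""
    return completion.split(SEPARATOR.strip())[0].strip()


# Hypothetical priming example; a real prompt would typically include a few of these.
examples = [
    EmailExample(
        subject="Meeting reschedule",
        description="Ask the client to move Thursday's project review to Friday at 10 am.",
        email=(
            "Dear Ms. Lee,\n\nDue to a scheduling conflict, would it be possible to move "
            "our project review from Thursday to Friday at 10 am?\n\n"
            "Thank you for your understanding.\n\nBest regards,\nSam"
        ),
    ),
]

print(build_email_prompt(examples, "Leave request",
                         "Request two days of leave next week for a family event."))
```

The same `###` delimiter could also be passed as the request’s `stop` parameter so that the API halts generation at the end of the email; `extract_email` performs the equivalent trimming on the client side.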

Let’s see an example in action to understand the power of GPT-3. In the example below, we generate a few emails from minimal instructions to the email assistant.

Conclusion

GPT-3 will redefine the way we look at technology and communicate with our devices, and it will lower the barrier to accessing advanced technology. GPT-3 can write, create, and converse. It has great potential to create an array of business opportunities and to take a giant leap toward an abundant society. It may act as a pivotal step toward the democratization of Artificial Intelligence.

References

- [1] Wikipedia, “GPT-3”: https://en.wikipedia.org/wiki/GPT-3

- [2] OpenAI, “OpenAI API” (blog post): https://openai.com/blog/openai-api

- [3] Geoffrey Hinton on Twitter: https://twitter.com/geoffreyhinton/status/1270814602931187715

If you would like to learn more or want me to write more on this subject, feel free to reach out.

My social links: LinkedIn | Twitter | GitHub

Email Assistant Powered by GPT-3 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Note: Content contains the views of the contributing authors and not Towards AI.