Working with PyTorch Tensors

Last Updated on January 6, 2023 by Editorial Team

Author(s): Bala Priya C

Some useful tensor manipulation operations

As we know, PyTorch is a popular open-source ML framework and optimized tensor library developed by researchers at Facebook AI, widely used in deep learning and AI research. ✨

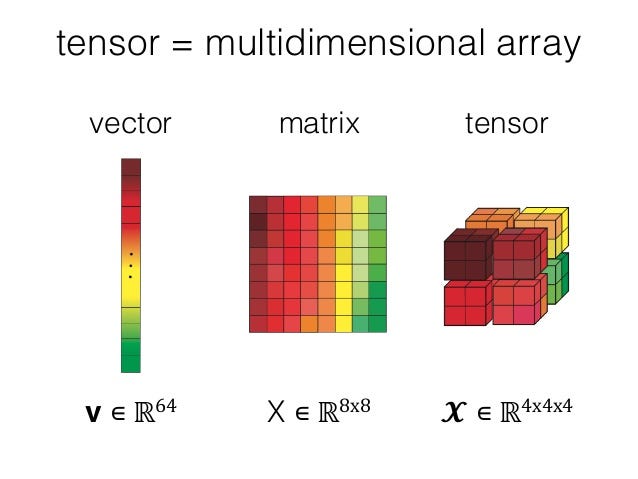

The torch package contains data structures for multi-dimensional tensors (N-dimensional arrays) and defines mathematical operations over them.

In this blog post, we cover some of the useful functions that the torch package provides for tensor manipulation. For each one, we look at working examples and at an example where the function doesn't work as expected.

OUTLINE

TORCH.CAT - Concatenates the given sequence of tensors along the given dimension

TORCH.UNBIND - Removes a tensor dimension

TORCH.MOVEDIM - Moves the dimension(s) of input at the position(s) in source to the position(s) in destination

TORCH.SQUEEZE - Returns a tensor with all the dimensions of input of size 1 removed

TORCH.UNSQUEEZE - Returns a new tensor with a dimension of size one inserted at the specified position

TORCH.CAT

torch.cat(tensors, dim=0, *, out=None)

This function concatenates the given sequence of tensors along the given dimension. All tensors must either have the same shape (except in the concatenating dimension) or be empty.

The argument tensors denotes the sequence of tensors to be concatenated.

dim is an optional argument that specifies the dimension along which the tensors are concatenated (default dim=0).

out is an optional keyword argument specifying the output tensor.

Let’s walk through a few examples. 🙂

In this example, since we specified dim=0, the input tensors are concatenated along dimension 0. Each input tensor has shape (2,3), so concatenating along dimension 0 gives an output tensor of shape (4,3).
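A minimal sketch of this example, assuming two arbitrary (2,3) input tensors (the values below are placeholders; only the shapes matter):

```python
import torch

# Two input tensors of shape (2, 3); the values are arbitrary placeholders
ip_tensor_1 = torch.tensor([[1, 2, 3], [4, 5, 6]])
ip_tensor_2 = torch.tensor([[7, 8, 9], [10, 11, 12]])

# Concatenate along dim=0: the rows of ip_tensor_2 go below those of ip_tensor_1
op_tensor = torch.cat((ip_tensor_1, ip_tensor_2), dim=0)

print(op_tensor.shape)  # torch.Size([4, 3])
```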

Well, this time, let’s choose to concatenate along the first dimension (dim=1)

The ip_tensor_1 was of shape (2,3) and the ip_tensor_2 was of shape (2,4). As we chose to concatenate along the first dimension, the output tensor returned is of shape (2,7): the sizes along dim=1 add up (3 + 4), while the size along dim=0 stays 2.
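A short sketch of the dim=1 case, using tensors of ones and zeros as placeholder values with the shapes described above:

```python
import torch

ip_tensor_1 = torch.ones(2, 3)   # shape (2, 3)
ip_tensor_2 = torch.zeros(2, 4)  # shape (2, 4)

# Both tensors have size 2 along dim 0, so concatenating along dim=1 is valid
op_tensor = torch.cat((ip_tensor_1, ip_tensor_2), dim=1)

print(op_tensor.shape)  # torch.Size([2, 7])
```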

Now, let’s see what happens when we try to concatenate the above two input tensors along dim=0.

We see that an error is thrown when we try to concatenate along dim=0. This is because the sizes of the tensors must agree in all dimensions other than the one we’re concatenating along.

Here, ip_tensor_1 has size 3 along dim=1 whereas ip_tensor_2 has size 4 along dim=1 which is why we run into an error.
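The failing case can be reproduced with the same placeholder tensors; PyTorch reports the size mismatch as a RuntimeError:

```python
import torch

ip_tensor_1 = torch.ones(2, 3)
ip_tensor_2 = torch.zeros(2, 4)

try:
    # Along dim=0, the remaining dimension (dim=1) has sizes 3 and 4, which disagree
    torch.cat((ip_tensor_1, ip_tensor_2), dim=0)
    error_raised = False
except RuntimeError as err:
    error_raised = True
    print("RuntimeError:", err)
```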

Therefore, we can use the torch.cat function to concatenate tensors along a valid dimension, provided the tensors have the same size in all other dimensions.

TORCH.UNBIND

torch.unbind(input, dim=0)

This function removes the tensor dimension specified by the argument dim (default dim=0) and returns a tuple of slices of the tensor along that dimension.

The following examples will help us better understand what torch.unbind does.

Here, the ip_tensor is of shape (2,3). As we specified dim=0, applying unbind returns a tuple of two slices of ip_tensor along the zeroth dimension, one per row. Time to look at example 2.
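A minimal sketch of unbinding along dim=0, assuming a (2,3) tensor built with torch.arange for readable values:

```python
import torch

ip_tensor = torch.arange(6).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]

# Unbinding along dim=0 returns one slice per row, as a tuple
slices = torch.unbind(ip_tensor, dim=0)

print(slices)  # (tensor([0, 1, 2]), tensor([3, 4, 5]))
```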

In the above example, we see that when we choose to unbind along dim=1, we get a tuple containing three slices of the input tensor along the first dimension
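The same placeholder tensor unbound along dim=1 gives one slice per column:

```python
import torch

ip_tensor = torch.arange(6).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]

# Unbinding along dim=1 returns three slices, each of shape (2,)
slices = torch.unbind(ip_tensor, dim=1)

print(slices)  # (tensor([0, 3]), tensor([1, 4]), tensor([2, 5]))
```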

In this example, the input tensor is of shape (10,10), and, as expected, when we try to unbind along a dimension that is not valid, we run into an error.

A clear understanding of dimensions, and of the size along a specific dimension, is necessary. Even though our input tensor has 100 elements and has size 10 along each of dimensions 0 and 1, it has no third dimension (index 2); hence, it’s important to pass in a valid dimension to tensor manipulation operations. 🙂
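A sketch of the invalid case, assuming a random (10,10) tensor and dim=2; PyTorch raises an IndexError for an out-of-range dimension:

```python
import torch

ip_tensor = torch.rand(10, 10)  # 100 elements; valid dims are 0 and 1 (or -2 and -1)

try:
    torch.unbind(ip_tensor, dim=2)  # there is no dimension with index 2
    error_raised = False
except IndexError as err:
    error_raised = True
    print("IndexError:", err)
```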

The unbind function can be useful when we would like to examine slices of a tensor along a specified input dimension.

TORCH.MOVEDIM

torch.movedim(input, source, destination)

This function moves the dimensions of input at the positions in source to the positions specified in destination.

source and destination can each be either an int (a single dimension) or a tuple of dimensions to be moved. Other dimensions of input that are not explicitly moved remain in their original order and appear at the positions not specified in destination.

Well, it’s time to put together a few examples.👩🏽💻

In this example, we wanted to move dimension 1 of the input tensor to dimension 2 of the output tensor, and we’ve done just that using the movedim function.

ip_tensor is of shape (4,3,2) whereas op_tensor is of shape (4,2,3); that is, dim 1 of the input tensor has moved to dim 2 of the output tensor.
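A minimal sketch of this example with a random (4,3,2) tensor. For three dimensions, moving dim 1 to position 2 gives the same result as permute(0, 2, 1), which the check below relies on:

```python
import torch

ip_tensor = torch.rand(4, 3, 2)

# Move dimension 1 (size 3) to position 2; dims 0 and 2 keep their relative order
op_tensor = torch.movedim(ip_tensor, 1, 2)

print(ip_tensor.shape)  # torch.Size([4, 3, 2])
print(op_tensor.shape)  # torch.Size([4, 2, 3])
```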

In this example, we wanted to move dimensions 1 and 0 in the input tensor to dimensions 2 and 1 in the output tensor. And we see that this change has been reflected by checking the shape of the respective tensors.
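A sketch of moving two dimensions at once, assuming the same (4,3,2) input shape as before (the input shape for this example is an assumption). Source dim 1 lands at position 2, source dim 0 at position 1, and the unmoved dim 2 fills the free position 0:

```python
import torch

ip_tensor = torch.rand(4, 3, 2)  # assumed input shape

# Move dims (1, 0) to positions (2, 1); dim 2 fills the remaining position 0
op_tensor = torch.movedim(ip_tensor, (1, 0), (2, 1))

print(op_tensor.shape)  # torch.Size([2, 4, 3])
```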

Now, we head to an example where the function fails to work.

Let’s look at the error message. In this example, we get an error because we’ve repeated dimension 1 in the destination tuple. The entries in the source and destination tuples must all be unique.
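A sketch of the failing call, assuming destination (1, 1) as the repeated-dimension tuple (the exact tuple used originally is an assumption); PyTorch reports this as a RuntimeError:

```python
import torch

ip_tensor = torch.rand(4, 3, 2)

try:
    # Dimension 1 appears twice in destination, which is not allowed
    torch.movedim(ip_tensor, (1, 0), (1, 1))
    error_raised = False
except RuntimeError as err:
    error_raised = True
    print("RuntimeError:", err)
```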

The movedim function helps us rearrange the dimensions of a tensor into a new shape while retaining the same underlying elements.

TORCH.SQUEEZE

torch.squeeze(input, dim=None, *, out=None)

This operation returns a tensor with all the dimensions of input of size 1 removed. When dim is specified, the squeeze operation is applied only along that dimension.

We had ip_tensor of shape (2,1,3); after the squeeze operation, op_tensor has shape (2,3), because the dimension of size 1 in ip_tensor (dim=1) has been dropped.
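A minimal sketch of squeezing a random (2,1,3) tensor without a dim argument:

```python
import torch

ip_tensor = torch.rand(2, 1, 3)

# With no dim argument, every dimension of size 1 is removed
op_tensor = torch.squeeze(ip_tensor)

print(op_tensor.shape)  # torch.Size([2, 3])
```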

In the above example, we set the dimension argument dim=0. The input tensor ip_tensor has size 2 along dim=0. Since the size along dim=0 is not 1, squeezing has no effect on the tensor, and the output tensor is identical to the input tensor.
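A sketch of squeezing along a dimension whose size is not 1, assuming a (2,1,3) input (any tensor with size 2 along dim 0 behaves the same way):

```python
import torch

ip_tensor = torch.rand(2, 1, 3)  # assumed input shape

# dim 0 has size 2, not 1, so nothing is removed
op_tensor = torch.squeeze(ip_tensor, dim=0)

print(op_tensor.shape)  # torch.Size([2, 1, 3])
```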

In the above example, we set the dimension argument dim=1. As the tensor had size 1 along the first dimension, that dimension was removed in the output tensor, which is of shape (2,3,1).
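A sketch of this case, assuming an input of shape (2,1,3,1), which is consistent with the (2,3,1) output described above. Only dim 1 is squeezed, so the trailing size-1 dimension survives:

```python
import torch

ip_tensor = torch.rand(2, 1, 3, 1)  # assumed input shape

# Only dim 1 is squeezed; the size-1 dimension at index 3 is kept
op_tensor = torch.squeeze(ip_tensor, dim=1)

print(op_tensor.shape)  # torch.Size([2, 3, 1])
```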

In the above example, ip_tensor has shape (2,3), so it is a 2-D tensor with dimensions 0 and 1. As we tried to squeeze along dim=2, which does not exist in the original tensor, we get an IndexError.
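A sketch of the failing squeeze on a random (2,3) tensor:

```python
import torch

ip_tensor = torch.rand(2, 3)  # 2-D: valid dims are 0 and 1

try:
    torch.squeeze(ip_tensor, dim=2)  # dim 2 does not exist
    error_raised = False
except IndexError as err:
    error_raised = True
    print("IndexError:", err)
```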

In essence, the squeeze function removes all dimensions of size 1, or only the one at a specified dimension.

TORCH.UNSQUEEZE

torch.unsqueeze(input, dim)

This function returns a new tensor with a dimension of size one inserted at the specified position.

Here, dim denotes the index at which we want the dimension of size 1 to be inserted. The returned tensor shares the same underlying data with the input tensor.

In this simple example, unsqueeze inserts a singleton dimension at the specified index 0.
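A minimal sketch, assuming a 1-D input of four elements (the input used originally is not specified). It also checks the point above that the result shares data with the input: writing through op_tensor changes ip_tensor.

```python
import torch

ip_tensor = torch.tensor([1, 2, 3, 4])  # assumed input, shape (4,)

# Insert a singleton dimension at index 0
op_tensor = torch.unsqueeze(ip_tensor, 0)
print(op_tensor.shape)  # torch.Size([1, 4])

# The result is a view: modifying it also modifies ip_tensor
op_tensor[0, 0] = 100
print(ip_tensor[0].item())  # 100
```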

In the next example, unsqueeze inserts a singleton dimension at the specified index 2: the input is 2-D (dimensions 0 and 1), and we insert a new dimension of size 1 at dim=2.
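A sketch of this case, assuming a random (2,3) input:

```python
import torch

ip_tensor = torch.rand(2, 3)  # assumed 2-D input

# Insert a singleton dimension after the last existing one
op_tensor = torch.unsqueeze(ip_tensor, 2)

print(op_tensor.shape)  # torch.Size([2, 3, 1])
```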

We get an IndexError, as expected. This is because dim must lie in the range [-input.dim()-1, input.dim()], so it can be at most input.dim(). For our 2-D input, dim can take a maximum value of 2.
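A sketch of the valid range for a 2-D tensor, assuming dim=3 as the out-of-range value (the value used originally is not specified):

```python
import torch

ip_tensor = torch.rand(2, 3)  # input.dim() == 2, so valid dims are -3..2

print(torch.unsqueeze(ip_tensor, 2).shape)   # torch.Size([2, 3, 1])
print(torch.unsqueeze(ip_tensor, -3).shape)  # torch.Size([1, 2, 3])

try:
    torch.unsqueeze(ip_tensor, 3)  # one past the maximum valid index
    error_raised = False
except IndexError as err:
    error_raised = True
    print("IndexError:", err)
```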

Summing up, the unsqueeze function lets us insert a dimension of size 1 at the required index.

In this blog post, we’ve covered a few useful functions that torch provides for manipulating tensors. To learn more about the utilities that the torch package provides, check out the official PyTorch documentation 😊

torch – PyTorch 1.7.0 documentation

Working with PyTorch Tensors was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.