Stable Face-Mask Detection Using Adapted Eye Cascader

Last Updated on July 4, 2024 by Editorial Team

Author(s): Jan Werth

Originally published on Towards AI.

Table of content

- Introduction

- Prerequisite

- Eye Detection via Cascaders

– Cascaders

– Scalefactor and Min_Neighbour - Find Matching eye-pairs

– Matching eyes step by step with code

– Less than three eyes

– Draw facebox from eyebox - Conclusions

Update

Dear reader, I wrote this article at the beginning of the COVID-19 pandemic in 2022. Unfortunately, I used a licensed image for my analysis, which prevented me from publishing the article. After years, I finally found the owner of this particular image and thankfully gained their permission to use the image for this article. Thanks a lot to NDTV allowing me to use their copyrighted image for this analysis. NDTV provides latest News from India and around the world.

The information in this article might be not as fresh as back in 2022. However, it still explains the details on how to approach a data science problem and guides you through it step by step.

Introduction

Lately, we created a face-mask detection algorithm to run on our embedded hardware, the phyBOARD Pollux. There are many ways to create a facemask detection algorithm, such as…

- using the TensorFlow object detection API trained with and without images of masked faces

- or training a cascade classifier with and without images of masked faces

- or mixing a facial detection model with a following mask detection model.

There are reasons when it is not advisable/possible to use a deep neural network, and it is more suitable to use a more lightweight solution such as the openCV cascaders. One reason would be the implementation into an embedded system with limited computation capabilities.

For demonstration purposes, we chose to use the latter method by detecting the face with an openCV cascade classifier and following up with a trained MobileNet mask classifier (not described here). What we noticed was that the classic haarcascade classifiers included frontal_face, frontal_face_alt, etc. Did not work properly when the person would carry a mask. Now, there would be the option to train a model like the MTCNN or a haarcascader to detect faces with masks. However, we decided to use an eye detection haarcascader and optimize it to create the face bounding boxes for further detection.

Prerequisite

The entire code can be found here:

JanderHungrige/Face_via_eye_detection

Permalink Failed to load latest commit information. Here we use an eye-haarcascader to recognize faces. This helps with…

github.com

- Python 3.x

- opencv-python

- matplotlib

- numpy

I recommend Anaconda or Python3 virtualenv to create a virtual environment. Then the libraries can be installed via:

Eye Detection via Cascader

Cascaders

The openCV library has several good working cascade classifies, which are widely used in e.g., facial recognition tasks. The main cascaders can be found here:

opencv/opencv

Open Source Computer Vision Library. Contribute to opencv/opencv development by creating an account on GitHub.

github.com

As mentioned in the introduction, we want to detect faces. However, if a person wears a mask, the classic frontal_face cascader and its derivatives fail. Therefore, we use the haarcascade_eye.xml in this post and adapt it to give us facial bounding boxes. You can also use others like haarcascade_eye_tree_eyeglasses.xml or haarcascade_lefteye_2splits.xml.

As the name suggest, this cascader is optimized to detect eyes.

First, we import our libraries and load the cascader.

Scalefactor and Min_Neighbour

There are two parameters for the cascader, the scalefactor and the min_neighbour.

scalefactor: What the haarcascader is doing, it is sliding a window over an image to find corresponding haar features (e.g., for a face) it was trained on. The size is fixed during training. To allow finding haar features with different sizes (e.g., large face in the foreground), the image is scaled down after each iteration, presenting a different cutout in the sliding window (image pyramid). The scalefactor defines the percentage the image is scaled down between each iteration. The downscaling takes place until the rescaled image size hits the dimensions of the model input dimensions (x or y). For more details, check this out the Viola-Jones algorithm.

min_neighbour: During the sliding windows' analysis, the algorithm finds many false positives. To reduce the false positives, the min_neigbour parameter determines how many times an object needs to be detected between the scaling to count as a true positive. So, setting it to, e.g., 2, overall scaling iterations in the same region, a face has to be detected at least two times to count as a detection of a face.

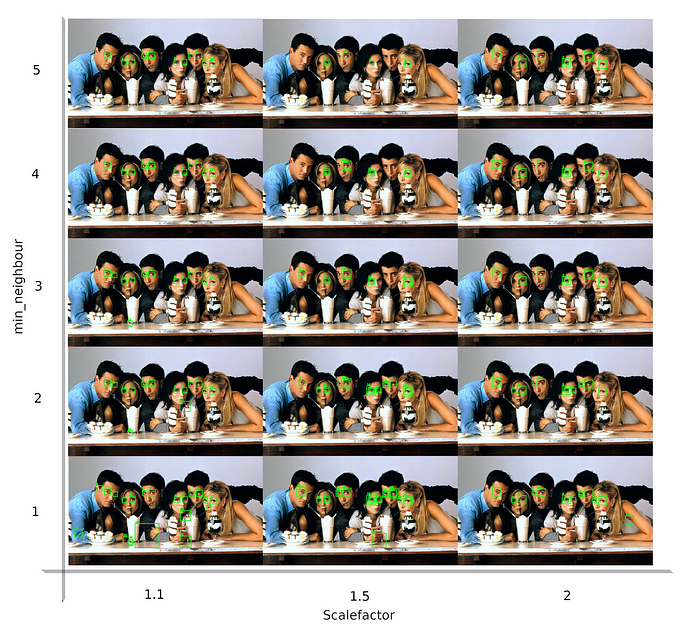

In the image (you probably have to zoom in a bit), you can see that an increase in the min_neighbours variable leads to fewer “false eyes” detections. With the increase in the scale factor, fewer non-eye-objects are detected as an eye. The idea is to find the sweet spot between both parameters. For our task, the scalefactor=1.1 and min_neighbour = 5 seem to work best.

Find Matching eye Pairs

The most difficult part of retrieving the correct bounding boxes of the face from the eyes is to correctly match eye pairs. Therefore, we first want to see in which order eyes are detected.

Let us mark the eyes with the index at which they are detected.

This results in marked and labeled eyes:

You can see, that the cascader first moves from left to right before changing the height. This can result in the index sequence not matching the eye pairs, as seen below.

So, the first idea was to look for similarities in the y-axis, proximity in the x-axis, and similar box size. However, depending on how a person's face is shown in an image, the consideration of only one value would lead to mistakes. Below, we see that, e.g., with a tilted head, the y-value or the box size as match-finders might not function properly.

We found that the best comparison would be to use the Euclidean distance between each entry of the detected eyes. The Euclidean distance calculates the distance between two vectors in an n-dimensional room.

Matching eyes step by step with code

Following, the steps are described to achieve the detection step by step matched to the code below

- Go through all eyes, and put the index into a variable [as in line 5].

- Put the found eye-box into a variable [line 9]. Create the Euclidean distance [line 11] between the found eye and all other ones [line 10], one by one [for loop in line 7]. Also add the indices to the distance values to know from which pair it originates [line 11].

- The if in line 8 prohibits comparison to itself (distance of zero).

- Then sort the distance [line 12].

- The list now contains all combinations of distances. So, we go through the distances [line 14] and copy each entry to a new variable (Dist_check) if the index of one of them has not yet appeared [line 16–20].

- If, for one face, only one eye is detected, we have at least one leftover eye. So we go again through the list and see which indices are missing [line 23]. Those are appended [line 30].

- To create the facebox from the eye box we only want one eye detected. In lines 25–29, we pop out the right eyes to keep only the left ones.

- We draw the boxes for the eyes and leftovers differently, as a slanted face needs different adjusting to get a good face matching box [lines 31–36].

Less than three eyes

If we only detect one eye, it is quite simple… we just have one eye.

If we detect two eyes, it could be one person or two persons. So, we noticed that the distance of the boxes should be below 2 times the width of a box. So far, it works quite well. However, this could certainly be tuned further. Also, if two persons put their heads very close together, it could be mistaken as one person. Somebody should find a clever solution for this issue, but be aware a slanted head to the back can also create a very short distance 🙂

Draw facebox from eyebox

Now, we only have to expand the bounding box to get the full face from the eye.

We found that three times the height of an eye-bounding box and 4 times the width to the right seems to work pretty well. Maybe this can also be optimized. However, with the changing distance of a face to the camera, all the measures change accordingly. Just try yourselves.

In addition, a single eye belonging to a slanted head needs a slightly smaller box. Therefore, we adjusted the drawing function a bit. Now, we check for the variable rest (for leftovers). If the rest is not empty, we draw the slightly thinner bounding box (line 8).

Conclusions

This method works generally well. Sure, a few points can be optimized, and most likely, you will have to tune a few parameters, such as the distance multiplier to fit to your purpose. Nevertheless, this is an easy and lightweight solution that does not require intense retraining or deep neural network tuning.

Yes, this all can be shortened drastically if you use a left or right-eye cascader. However, we noticed that detecting both eyes and then reducing it manually works slightly better, as sometimes the single eye is not detected (check out Chandler's left eye from his view).

I hope this helps someone. Good luck, and keep safe.

Yours Jan

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Logo:

Logo:  Areas Served:

Areas Served: