Different Types of Memory

Last Updated on July 25, 2023 by Editorial Team

Author(s): Sai Teja Gangapuram

Originally published on Towards AI.

LangChain DeepDive: Memory | The Key to Intelligent Conversations

Explore LangChain's memory types and how they affect AI conversations, with an example that shows the difference memory makes.

This is Part 2 of the LangChain DeepDive series, and it covers Memory. Memory is particularly important in applications where remembering previous interactions is crucial, such as chatbots.

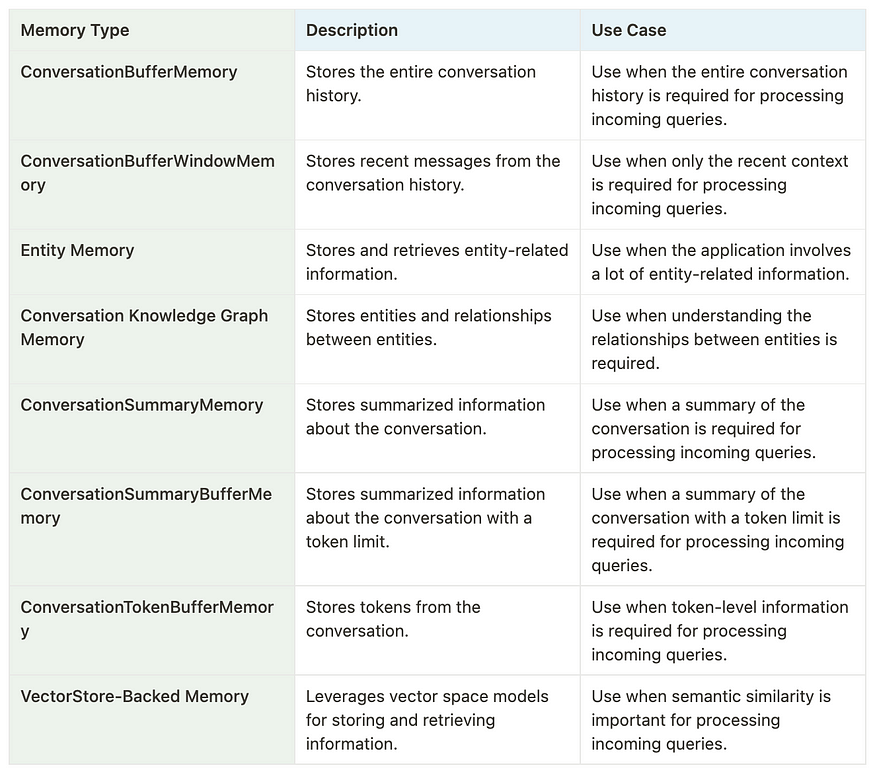

LangChain provides memory components in two forms: helper utilities for managing and manipulating previous chat messages and easy ways to incorporate these utilities into chains. The memory types provided by LangChain include:

- ConversationBufferMemory

- ConversationBufferWindowMemory

- Entity Memory

- Conversation Knowledge Graph Memory

- ConversationSummaryMemory

- ConversationSummaryBufferMemory

- ConversationTokenBufferMemory

- VectorStore-Backed Memory

The memory types in LangChain are not mutually exclusive; they cover different needs and can complement each other. For instance, ConversationBufferMemory and ConversationBufferWindowMemory both manage the raw flow of the conversation, while Entity Memory and Conversation Knowledge Graph Memory handle the storage and retrieval of entity-related information.

Let's look at each of them in detail.

- Buffer Memory: Buffer Memory in LangChain is a simple memory buffer that stores the history of the conversation. It has a `buffer` property that returns the list of messages in the chat memory, and its `load_memory_variables` function returns the history buffer. This type of memory is useful for storing and retrieving the immediate history of a conversation.

- Buffer Window Memory: Buffer Window Memory is a variant of Buffer Memory. It also stores the conversation history, but with a twist: it has a property `k` that determines the number of previous interactions to keep. The `buffer` property returns the last `k*2` messages from the chat memory, since each interaction consists of one human message and one AI message. This type of memory is useful when you want to limit the history to a certain number of previous interactions.

- Entity Memory: Entity Memory in LangChain is a more complex type of memory. It not only stores the conversation history but also extracts and summarizes entities from the conversation. It uses a large language model (LLM) to predict and extract the entities, which are then stored in an entity store that can be either in-memory or Redis-backed. This type of memory is useful when you want to extract and store specific information from the conversation.

Each of these memory types has its own use cases and trade-offs. Buffer Memory and Buffer Window Memory are simpler and faster but they only store the conversation history. Entity Memory, on the other hand, is more complex and slower but it provides more functionality by extracting and summarizing entities from the conversation.

As for the data structures and algorithms used, LangChain appears to rely primarily on lists and dictionaries to store the memory. The algorithms are mostly related to text processing and entity extraction, both of which are delegated to the language model.
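To make the difference between the buffer and the windowed buffer concrete, here is a minimal pure-Python sketch. It is not LangChain's implementation: the class names `SimpleBufferMemory` and `SimpleWindowMemory` and the simplified `save_context` signature are hypothetical, chosen only to mirror the `buffer` and `k` ideas described above.

```python
from collections import namedtuple

Message = namedtuple("Message", ["role", "content"])


class SimpleBufferMemory:
    """Keeps every message of the conversation (illustrative, not LangChain's class)."""

    def __init__(self):
        self.messages = []

    def save_context(self, human_text, ai_text):
        # One interaction = one human message followed by one AI message.
        self.messages.append(Message("human", human_text))
        self.messages.append(Message("ai", ai_text))

    @property
    def buffer(self):
        return list(self.messages)


class SimpleWindowMemory(SimpleBufferMemory):
    """Same storage, but only exposes the last k interactions."""

    def __init__(self, k):
        super().__init__()
        self.k = k

    @property
    def buffer(self):
        # k interactions correspond to the last k*2 messages.
        return self.messages[-self.k * 2:] if self.k > 0 else []


window = SimpleWindowMemory(k=1)
window.save_context("Hi, I'm Sam.", "Hello Sam!")
window.save_context("What's my name?", "Your name is Sam.")
window_contents = [m.content for m in window.buffer]
```

With `k=1`, `window_contents` holds only the second exchange, so a model reading this window would no longer see the message in which the user introduced themselves. That is the trade-off of windowing: a bounded prompt size in exchange for forgetting older turns.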

- Conversation Knowledge Graph Memory: Conversation Knowledge Graph Memory is a more sophisticated memory type that uses a knowledge graph to store and retrieve knowledge triples from the conversation. It uses a large language model (LLM) to predict and extract entities and knowledge triples from the conversation. These are then stored in a NetworkxEntityGraph, a graph data structure in LangChain built on the NetworkX library. This memory type is useful when you want to extract, store, and retrieve structured information from the conversation in the form of a knowledge graph.

- ConversationSummaryMemory: ConversationSummaryMemory summarizes the conversation history. It uses a large language model (LLM) to generate a summary of the conversation. The summary is stored in a buffer and is updated every time a new message is added to the conversation. This memory type is useful when you want to maintain a concise summary of the conversation that can be used for reference or to provide context for future interactions.

- ConversationSummaryBufferMemory: ConversationSummaryBufferMemory is similar to the ConversationSummaryMemory but with an added feature of pruning. If the conversation becomes too long (exceeds a specified token limit), the memory prunes the conversation by summarizing the pruned part and adding it to a moving summary buffer. This ensures that the memory does not exceed its capacity while still retaining the essential information from the conversation.

- ConversationTokenBufferMemory: ConversationTokenBufferMemory is a type of memory that stores the conversation history in a buffer. It also has a pruning feature similar to the ConversationSummaryBufferMemory. If the conversation exceeds a specified token limit, the memory prunes the earliest messages until it is within the limit. This memory type is useful when you want to maintain a fixed-size memory of the most recent conversation history.

- VectorStore-Backed Memory: VectorStore-Backed Memory is backed by a VectorStoreRetriever. Pieces of the conversation are stored as documents in a vector store, and the retriever returns the ones most relevant to the current query rather than simply the most recent ones. This memory type is useful when you want to store and retrieve information in the form of vectors, which suits tasks such as semantic search or similarity computation.
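The pruning behaviour of the token buffer and the summary buffer can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: tokens are approximated by whitespace-separated words, and `fake_summarize` is a hypothetical stand-in for the LLM summarization call, not something LangChain provides.

```python
def count_tokens(text):
    # Assumption: whitespace-separated words stand in for real model tokens.
    return len(text.split())


def prune(messages, max_token_limit):
    """Split messages into (pruned, kept) so that kept fits the token limit."""
    kept = list(messages)
    pruned = []
    while kept and sum(count_tokens(m) for m in kept) > max_token_limit:
        pruned.append(kept.pop(0))  # the earliest message goes first
    return pruned, kept


def fake_summarize(previous_summary, pruned):
    # Stand-in for the LLM call: keep the first three words of each pruned message.
    snippets = [" ".join(m.split()[:3]) for m in pruned]
    return " | ".join(([previous_summary] if previous_summary else []) + snippets)


history = [
    "Which footballer took more penalties in total",  # 7 words
    "Wayne Rooney scored 21 spot kicks",              # 6 words
]

# Token-buffer behaviour: pruned messages are simply dropped.
_, token_buffer = prune(history, max_token_limit=8)

# Summary-buffer behaviour: pruned messages are folded into a moving summary.
pruned, summary_buffer = prune(history, max_token_limit=8)
moving_summary = fake_summarize("", pruned)
```

Both variants keep the same recent messages. The difference is what happens to the pruned part: the token buffer forgets it, while the summary buffer retains a compressed trace of it at the cost of an extra model call.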

These memory types provide more advanced functionality compared to the basic memory types. They allow for more sophisticated information retrieval and storage, which can be beneficial in more complex conversational AI scenarios.
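As a rough illustration of what storing knowledge triples means, the sketch below keeps (subject, predicate, object) facts in a plain dictionary. The `TripleStore` class is hypothetical and hand-fed; in the real memory type the triples are extracted by the LLM and held in a NetworkxEntityGraph.

```python
from collections import defaultdict


class TripleStore:
    """Tiny in-memory graph of (subject, predicate, object) triples."""

    def __init__(self):
        # subject -> list of (predicate, object) edges
        self.edges = defaultdict(list)

    def add_triple(self, subject, predicate, obj):
        edge = (predicate, obj)
        if edge not in self.edges[subject]:
            self.edges[subject].append(edge)

    def facts_about(self, subject):
        # Render each outgoing edge as a short sentence for the prompt.
        return [f"{subject} {predicate} {obj}" for predicate, obj in self.edges.get(subject, [])]


graph = TripleStore()
# An LLM would normally extract these triples; here they are written by hand.
graph.add_triple("Sam", "lives in", "Berlin")
graph.add_triple("Sam", "works at", "Acme")
graph.add_triple("Sam", "lives in", "Berlin")  # duplicate is ignored
sam_facts = graph.facts_about("Sam")
```

When a later message mentions "Sam", the stored facts can be looked up by entity and added to the prompt, which is the kind of structured recall a flat message buffer cannot offer.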

Quick comparison for later reference

Comparison with Indexes Module

In LangChain, memory and indexes serve different but complementary roles in managing and accessing data. Here’s a comparison of the two:

Memory

Memory in LangChain refers to the various types of memory modules that store and retrieve information during a conversation.

Memory is crucial for maintaining context over a conversation, answering follow-up questions accurately, and providing a more human-like interaction. It also enables the agent to learn from past interactions and improve its responses over time.

Indexes

Indexes in LangChain refer to ways to structure documents so that Language Models (LLMs) can best interact with them. Indexes are used in a “retrieval” step, which involves taking a user’s query and returning the most relevant documents.

The primary index types supported by LangChain are centred around vector databases. These indexes are used for indexing and retrieving unstructured data (like text documents). For interacting with structured data (SQL tables, etc) or APIs, LangChain provides relevant functionality.

Indexes are crucial for the efficient retrieval of relevant documents based on a user’s query. They enable the agent to sift through large amounts of data and return the most relevant information.
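The retrieval step can be sketched without any vector database. The example below is a toy under explicit assumptions: `embed` uses bag-of-words counts instead of a real embedding model, and `VectorIndexSketch` is a hypothetical class, not a LangChain API. It only shows the ranking idea that vector-backed indexes (and VectorStore-backed memory) rely on.

```python
import math
from collections import Counter


def embed(text):
    # Assumption: a bag-of-words count vector stands in for a real embedding model.
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorIndexSketch:
    def __init__(self):
        self.entries = []  # (text, vector) pairs

    def add(self, text):
        self.entries.append((text, embed(text)))

    def retrieve(self, query, k=1):
        # Rank stored documents by similarity to the query, not by insertion order.
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


index = VectorIndexSketch()
index.add("my favourite sport is football")
index.add("i live in berlin")
index.add("my dog is called rex")
top = index.retrieve("what sport do you like", k=1)
```

Here the query shares a word only with the first document, so that document ranks highest. A real embedding model would also match paraphrases that share no words at all, which is the main reason to use one.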

Comparison

While both memory and indexes are used for storing and retrieving data, they serve different purposes. Memory is used for storing conversation history and context, which is crucial for maintaining a coherent and context-aware conversation. On the other hand, indexes are used for structuring and retrieving documents based on their relevance to a user’s query.

In terms of their implementation, memory types in LangChain can be backed by various databases like Postgres, DynamoDB, Redis, MongoDB, and Cassandra. Indexes, on the other hand, are primarily centred around vector databases.

In summary, memory and indexes in LangChain work together to provide a comprehensive data management and retrieval system that enhances the capabilities of AI agents.

Example: How Memory Improves Chats

Prerequisites:

- GOOGLE_API_KEY: get it from https://console.cloud.google.com/apis/credentials

- GOOGLE_CSE_ID: https://cse.google.com/cse/create/new

- OPENAI_API_KEY: https://platform.openai.com/account/api-keys

Then set them as environment variables:

    import os

    os.environ["GOOGLE_CSE_ID"] = ""
    os.environ["GOOGLE_API_KEY"] = ""
    os.environ["OPENAI_API_KEY"] = ""

Imports

    from langchain.agents import ZeroShotAgent, Tool, AgentExecutor
    from langchain.memory import ConversationBufferMemory
    from langchain.memory.chat_memory import ChatMessageHistory
    from langchain import OpenAI, LLMChain
    from langchain.utilities import GoogleSearchAPIWrapper

GoogleSearchWrapper setup

    search = GoogleSearchAPIWrapper()

    tools = [
        Tool(
            name="Search",
            func=search.run,
            description="useful for when you need to answer questions about current events",
        )
    ]

Prompt Template setup

    prefix = """Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:"""
    # {chat_history} is the slot that the memory fills in on every call.
    suffix = """Begin!

    {chat_history}
    Question: {input}
    {agent_scratchpad}"""

    prompt = ZeroShotAgent.create_prompt(
        tools,
        prefix=prefix,
        suffix=suffix,
        input_variables=["input", "chat_history", "agent_scratchpad"],
    )

The agent with Memory Setup

    # memory_key must match the {chat_history} placeholder in the prompt.
    memory = ConversationBufferMemory(memory_key="chat_history")

    llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
    agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
    agent_chain = AgentExecutor.from_agent_and_tools(
        agent=agent, tools=tools, verbose=True, memory=memory
    )

    agent_chain.run(input="Which footballer took more penalties?")

Output:

> Entering new AgentExecutor chain...

Thought: I need to find out which footballer took more penalties in the Premier League

Action: Search

Action Input: "Premier League penalties taken by footballers"

Observation: Apr 11, 2023 ... When offered as a quiz question, most people will opt for Matt Le Tissier as their guess. Wrong. The accolade for the most penalties taken with ... Oct 17, 2022 ... Scoring a penalty is often the most difficult task in the world for many footballers, with nerves playing a huge factor when lining the ball ... View goals scored by Premier League players for 2022/23 and previous seasons, on the official website of the Premier League. Since the Premier League's formation at the beginning of the 1992–93 season, a total of 34 players have scored 100 or more goals in the competition; ... Six of the 34 players accomplished the feat without scoring a penalty ... Jul 11, 2021 ... Rashford, who plays for Manchester United, noted the racial abuse he received on social media in May after the team lost the Europa League final ... Apr 18, 2003 ... Only three Iraqis dared to take penalties, and Zair was one of them. ... the full tale of the Iraq football team can be told. Apr 6, 2022 ... Here are the top five players with most Premier League penalty ... The Premier League, in its history, has seen some iconic footballers. May 14, 2022 ... The players who have won the most penalties, featuring Salah, ... Premier League top scorers 2022/23: Erling Haaland leads with EPL goal ... Apr 27, 2020 ... Further, we analyzed a large sample of penalty kicks taken in major European leagues (4,708 penalties). We consider this research important, as ... Jul 11, 2019 ... However, there are some ice-cold footballers who have perfected the art of the ... Rooney has scored 21 spot kicks in the Premier League, ...

Thought: I now know the final answer

Final Answer: Wayne Rooney has scored the most penalties in the Premier League with 21.

> Finished chain.

'Wayne Rooney has scored the most penalties in the Premier League with 21.'

The next question refers back to the earlier chat, so the agent needs the history to answer it:

    agent_chain.run(input="What is the success ratio?")

Output:

> Entering new AgentExecutor chain...

Thought: I need to find out the success ratio of Wayne Rooney's penalties.

Action: Search

Action Input: Wayne Rooney penalty success ratio

Observation: Wayne Rooney · Total penalties scored - 48 · Total penalties missed - 13. Wayne Rooney ; Attack. Goals 208. Goals per match 0.42. Headed goals 21. Goals with right foot 124. Goals with left foot 21. Penalties scored 23. Freekicks ... Wayne Rooney · Total penalties scored - 48 · Total penalties missed - 13. Mar 8, 2019 ... In light of Noble's penalty success, talkSPORT.com has looked at the history of the division since it started 1992 ... 6 = Wayne Rooney - 23. May 14, 2011 ... Manchester United were crowned English champions for a record 19th time on Saturday when Wayne Rooney's penalty earned a 1-1 draw at ... Apr 11, 2023 ... The accolade for the most penalties taken with a 100% success rate is held by ... record scorer Wayne Rooney (11) and Teddy Sheringham (10). Rooney has scored just 18 of the 26 spot kicks he has taken in the league which gives him a success rate of 69%. That leaves the striker in the bottom three of ... England Penalty Takers. Player; Total; Scored; Missed; Conversion %. Harry Kane; 22; 18; 4; 81.8%. Frank Lampard; 11; 9; 2; 81.8%. Wayne Rooney; 7; 7 ... Mar 24, 2015 ... Worst penalty success rates (Premier League). 67 %. Michael Owen. 68 %. Teddy Sheringham. 69 %. WAYNE ROONEY. Minimum 20 penalties taken ... Nov 21, 2018 ... United rejoice after Wayne Rooney buries his penalty at Blackburn Rovers. Statistics, courtesy of @OptaJoe, correct as of 16 November 2018.

Thought: I now know the final answer.

Final Answer: Wayne Rooney has a penalty success rate of 69%.

> Finished chain.

'Wayne Rooney has a penalty success rate of 69%.'

The agent without Memory Setup

    prefix = """Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:"""
    # No {chat_history} placeholder here, so earlier turns never reach the model.
    suffix = """Begin!

    Question: {input}
    {agent_scratchpad}"""

    prompt = ZeroShotAgent.create_prompt(
        tools,
        prefix=prefix,
        suffix=suffix,
        input_variables=["input", "agent_scratchpad"],
    )

    llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
    agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
    agent_without_memory = AgentExecutor.from_agent_and_tools(agent=agent, tools=tools, verbose=True)

    agent_without_memory.run("Which footballer took more penalties in Premier League?")

> Entering new AgentExecutor chain...

Thought: I need to compare two players

Action: Search

Action Input: "Premier League penalties taken by footballers"

Observation: Apr 11, 2023 ... When offered as a quiz question, most people will opt for Matt Le Tissier as their guess. Wrong. The accolade for the most penalties taken with ... Oct 17, 2022 ... Scoring a penalty is often the most difficult task in the world for many footballers, with nerves playing a huge factor when lining the ball ... View goals scored by Premier League players for 2022/23 and previous seasons, on the official website of the Premier League. Since the Premier League's formation at the beginning of the 1992–93 season, a total of 34 players have scored 100 or more goals in the competition; ... Six of the 34 players accomplished the feat without scoring a penalty ... Jul 11, 2021 ... Rashford, who plays for Manchester United, noted the racial abuse he received on social media in May after the team lost the Europa League final ... Apr 18, 2003 ... Only three Iraqis dared to take penalties, and Zair was one of them. ... the full tale of the Iraq football team can be told. Apr 6, 2022 ... Here are the top five players with most Premier League penalty ... The Premier League, in its history, has seen some iconic footballers. May 14, 2022 ... The players who have won the most penalties, featuring Salah, ... Premier League top scorers 2022/23: Erling Haaland leads with EPL goal ... Apr 27, 2020 ... Further, we analyzed a large sample of penalty kicks taken in major European leagues (4,708 penalties). We consider this research important, as ... Jul 11, 2019 ... However, there are some ice-cold footballers who have perfected the art of the ... Rooney has scored 21 spot kicks in the Premier League, ...

Thought: I now know the final answer

Final Answer: Wayne Rooney has taken the most penalties in the Premier League with 21.

> Finished chain.

'Wayne Rooney has taken the most penalties in the Premier League with 21.'

    agent_without_memory.run(input="What is the success ratio?")

> Entering new AgentExecutor chain...

Thought: I need to find out what the success ratio is.

Action: Search

Action Input: Success ratio

Observation: The win/loss or success ratio is a trader's number of winning trades relative to the number of losing trades. · In other words, the win/loss ratio tells how many ... 3:Contrast (Minimum) (Level AA). Success Criterion (SC). The visual presentation of text and images of text has a contrast ratio of at least 4.5:1 ... Jul 20, 2021 ... When we run a study with multiple users, we usually report the success (or task-completion) rate: the percentage of users who were able to ... NIH Success Rate Definition (~68KB) · Research Project Grants and Other Mechanisms: Competing applications, awards, success rates, and funding, by Institute/ ... Aug 11, 2020 ... Success Rate measures the rate at which people who come to the community — either as members or visitors — succeed in achieving their purpose ... May 5, 2016 ... The expense ratio is the most proven predictor of future fund returns. We find that it is a dependable predictor when we run the data. That's ... Jul 17, 2020 ... In selected cases, ultra-short implants may represent an alternative to bone augmentation procedures and a long-term predictable solution. Success rate is the fraction or percentage of success among a number of attempts to perform a procedure or task. It may refer to: Call setup success rate ... May 1, 2000 ... The ratio between the length of the 2nd and 4th digit (2D:4D) is ... and reproductive success. evidence for sexually antagonistic genes? Feb 10, 2021 ... To be successful in college, students need to be prepared for college ... In Division I, the Graduation Success Rate tracks the number of ...

Thought: I now know the final answer

Final Answer: The success rate is the fraction or percentage of success among a number of attempts to perform a procedure or task.

> Finished chain.

'The success rate is the fraction or percentage of success among a number of attempts to perform a procedure or task.'

In this example, the agent with memory keeps the context of the conversation. When asked a follow-up question that relies on the previous exchange, it answers correctly because it remembers that the earlier question was about Wayne Rooney's penalties, so it searches for his success ratio rather than for a generic definition of the term. The agent without memory has no such context: it searches for "Success ratio" on its own and returns the generic definition.
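The mechanism behind this difference is simple string interpolation: the stored turns are rendered as text and placed in the `{chat_history}` slot of the prompt. The sketch below reproduces that step in plain Python. The `render_prompt` helper is hypothetical, and the `Human:` / `AI:` labels are an assumption about how the turns are rendered.

```python
suffix_template = """Begin!

{chat_history}
Question: {input}
{agent_scratchpad}"""


def render_prompt(history_turns, question):
    # Flatten the stored turns into the text that fills {chat_history}.
    chat_history = "\n".join(f"Human: {q}\nAI: {a}" for q, a in history_turns)
    return suffix_template.format(
        chat_history=chat_history, input=question, agent_scratchpad=""
    )


turns = [
    (
        "Which footballer took more penalties?",
        "Wayne Rooney has scored the most penalties in the Premier League with 21.",
    )
]
with_memory = render_prompt(turns, "What is the success ratio?")
without_memory = render_prompt([], "What is the success ratio?")
```

Only `with_memory` contains the name Wayne Rooney, so only that prompt gives the model enough information to turn "the success ratio" into a specific search query.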

