Generating Professional Introduction Emails with LlamaIndex

Last Updated on June 6, 2023 by Editorial Team

Author(s): Kelly Hamamoto

Originally published on Towards AI.

Imagine this: It’s 7 P.M., and you are finally freed after a grueling day at work. As you fantasize about diving into the warm embrace of your couch and indulging in a well-deserved Netflix marathon, your phone buzzes. Your friend from college, in the heat of job searching, wants to be introduced to your esteemed colleague. Just what you needed!

You are happy to oblige, but you quickly realize that this seemingly simple task is no ordinary feat. You must navigate through a labyrinth of internships, achievements, and skill endorsements, figure out what the two have in common, and write an email that paints both parties in the best light possible.

Enter LlamaIndex (formerly GPT-index), the magic tool for lazy geniuses. Today, I will show you how to make an introduction email generator that will save you a couple of brain cells (at least) per email.

The Idea

- Have the user input their LinkedIn login information and the links to the LinkedIn pages of soon-to-be-connected individuals;

- Scrape, clean, and format LinkedIn data so it is ready to be fed to an LLM;

- Feed LinkedIn data to LLM using Llama index and query for introduction email.

The packages you need are llama_index, langchain, selenium, and linkedin-scraper, all of which can be installed with pip; you also need chromedriver, which you can download using this link. You’ll also need an OpenAI key for this project.

Importing packages

Before importing, run this code (as required by linkedin-scraper) through your Mac’s Terminal. Don’t forget to replace your-path-to-chromedriver:

export CHROMEDRIVER = your-path-to-chromedriver

Let's import our packages! But before that, let me briefly explain what each of our packages is used for. LlamaIndex is a tool that helps us build LLM apps: with it, we can easily feed our own data to LLMs. LangChain is similar to LlamaIndex in this way, but we are only using it to borrow its OpenAI model. LinkedIn Scraper scrapes LinkedIn data — simple enough. And last but not least, Selenium automates browsers, which is crucial for our web scraper.

import os

from llama_index import GPTVectorStoreIndex, Document, SimpleDirectoryReader, LLMPredictor, ServiceContext

from langchain.llms.openai import OpenAI

from linkedin_scraper import Person, actions

from selenium import webdriver

# replace your-chromedriver-path and your-api-key!

chromedriver_path = your-path-to-chromedriver

os.environ['OPENAI_API_KEY']= your-api-key

Getting and Cleaning Linkedin Data

From Linkedin Scraper’s GitHub page, we see what fields a Person object has:

Person(linkedin_url=None, name=None, about=[], experiences=[], educations=[], interests=[], accomplishments=[], company=None, job_title=None, driver=None, scrape=True)

Let’s keep it simple and only use name and experiences from this very long list. We first define a function get_experiences(links, driver) where, given some LinkedIn links and a web driver, returns a dictionary where the keys are names of the individuals in question and the values are given by function get_person_exp(person). We also define function clean_name(person), since our scraper, when returning a person’s name, sometimes includes strings like “\n1st degree connection” which we would like to get rid of.

# returns {person name: get_person_exp(person) }

def get_experiences(links, driver):

out = {}

for link in links:

try:

person = Person(link, driver=driver)

out[clean_name(person)] = get_person_exp(person)

except:

driver.quit()

driver = webdriver.Chrome(chromedriver_path)

actions.login(driver, email, password)

person = Person(link, driver=driver)

out[clean_name(person)] = get_person_exp(person)

return out

def clean_name(person):

match = re.search(r'\n', person.name)

if match != None:

return person.name[:match.start()]

return person.name

Next up, we create another function: get_person_exp(person). It takes a Person object as argument and returns that person’s experiences in dictionary form, {institution_name: description}. The complicated-looking structure, with dictionaries and sets, is my attempt at getting rid of duplicate information given by the LinkedIn Scraper — I won’t bore you with more details.

# returns { institution_name: description }

def get_person_exp(person):

exp_dict = {}

for exp in person.experiences:

if exp.description == "":

continue

if exp.institution_name not in exp_dict.keys():

exp_dict[exp.institution_name] = set()

for d in str(exp.description).split('\n'):

exp_dict[exp.institution_name].add(d)

exp_dict[exp.institution_name].add("Job started from date " + exp.from_date)

exp_dict[exp.institution_name].add("Job started on date " + exp.to_date)

return exp_dict

Now we can see that the dictionary given by get_experiences(links, driver) is in the form { name: { institution_name: description } }.

Almost there! Let’s put it all together and get the data we want. Using Terminal, we ask the user to input their LinkedIn login information, as well as the links the LinkedIn pages of the individuals we want to know about. Then, using ChromeDriver as our driver, we log in to LinkedIn.

# ask user for LinkedIn login info

print("\n Hi! We need your Linkedin login information for scraping purposes, don't be suspicious!!")

email = input("Linkedin email: ")

password = input("Linkedin password: ")

# ask user for LinkedIn links

print("\n Input the Linkedin links for the two people you would like to introduce.")

llink1 = input("link 1: ")

llink2 = input("link 2: ")

driver = webdriver.Chrome(chromedriver_path)

actions.login(driver, email, password)

d = get_experiences([llink1, llink2], driver)

driver.quit()

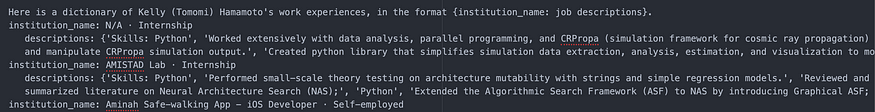

Data acquired! Although it is looking a little like gibberish. To optimize the performance of our LLM, let’s make it a little more readable before saving it. Before anything else, let’s tell the LLM what the data is about by saying, “Here is a dictionary of this person’s work experiences, in the format {institution_name: job descriptions}”. Then, we list each institution_name followed by their job descriptions.

with open("data/linkedin_data.txt", "w") as f:

for person_name in d.keys():

f.write("Here is a dictionary of " + person_name + "\'s work experiences, in the format {institution_name: job descriptions}.\n")

for institution_name in d[person_name].keys():

f.write("institution_name: " + institution_name + "\n")

f.write(" descriptions: " + str(d[person_name][institution_name]) + "\n")

f.write("This is the end of " + person_name + "\'s work experiences.\n\n")

Let’s run it and take a look at some of the output. Not too bad!

Setting up LlamaIndex

Now that we have our LinkedIn data ready, we need to feed it to LlamaIndex. Here is some code I’m recycling from another project of mine. The make_query_engine() function takes the files from our “data” directory, uses vector embeddings to make the data more readable to an LLM, then returns a query engine.

The query(question, query_engine) function takes that query engine and a question you would like to ask the LLM as input, and outputs the LLM’s response.

# Load documents, build index, return query engine ready for use

def make_query_engine():

documents = SimpleDirectoryReader('data').load_data()

service_context = ServiceContext.from_defaults(llm_predictor=LLMPredictor(llm=OpenAI(temperature=0.2, model_name="gpt-3.5-turbo")))

# load from previously daved data

index = GPTVectorStoreIndex.from_documents(documents, service_context=service_context)

index.storage_context.persist()

query_engine = index.as_query_engine()

return query_engine

def query(question, query_engine):

response = query_engine.query(question)

return responseAll done! Last step, let’s formulate a question for the query engine and print its response. Note that we try loading the existing index from our storage folder (which should be in the same directory as the email generator python file). My purpose for this was to save API calls when running this file multiple times on the same LinkedIn links, but you don’t have to structure the code this way.

question = "\

Given the information you are given about these two people\'s work experience, output a professional email directed at the two of them. \

\

The goal of this email is to introduce the two people to each other's work experiences and facilitate their connection. \

It is important to help them identity their shared interest and experiences. \

\

Here is a good format for the email:\

Section 1: you address both of them, for example: \'It\'s my pleasure to introduce you two\'. \

Section 2: you address the first person, and tell them to meet the second person. You then introduce the some of the second person's work experiences, \

focusing on what the two people might both be interested in. \

You can also describe or compliment the second person as a person or worker, for example describe them as seasoned or innovative.\

Section 3: in the same manner as the second section, introduce the second person to the first person.\

Section 4: conclude the email with something similar to \'I am sure you guys will hit it off and have a lot to talk about\'.\

\

You must include all four sections in your output. Your output should be in complete sentences. Start on a new line for each section of the email."

# use existing index if possible

try:

storage_context = StorageContext.from_defaults(persist_dir='./storage') # rebuild storage context

index = load_index_from_storage(storage_context) # load index

query_engine = index.as_query_engine()

print('loaded index from memory')

except:

query_engine = make_query_engine()

print(query(question, query_engine))

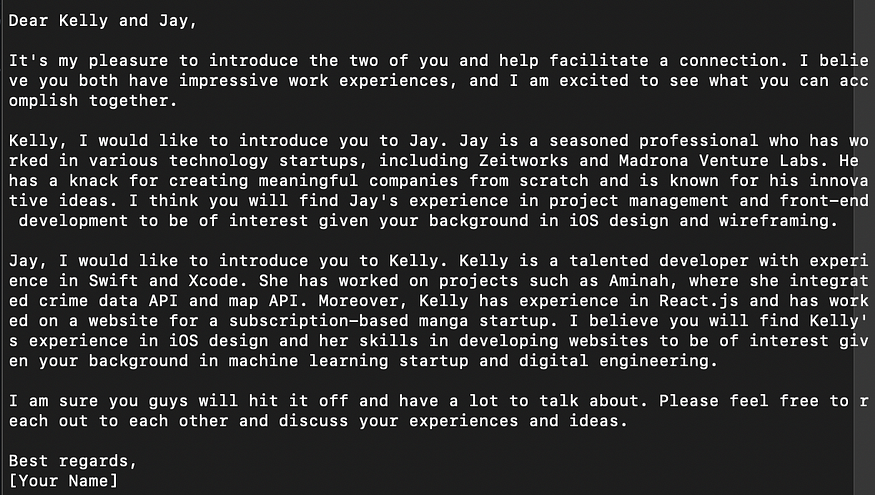

I arrived at this final version after some trials and errors in prompt engineering, but feel free to try different things out. Let’s see how it performs!

Sometimes it takes a couple of tries, but the generated email template doesn’t look too bad at all. With a bit of tweaking, it should be ready to send — and that’s it!

The code could definitely use improvement, ex. better cleaning and formatting of the LinkedIn data, more customization for the query engine, etc. If you would like to play around with the code, you can find it on my GitHub repo.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.