StyleGAN: In depth explaination

Last Updated on July 25, 2023 by Editorial Team

Author(s): Albert Nguyen

Originally published on Towards AI.

Generative Adversarial Networks (GAN) have yielded state-of-the-art results in generative tasks and have become one of the most important frameworks in Deep Learning. Many variants of GAN have been proposed to improve the quality of generated images or allow conditional synthesis. However, they have yet to offer intuitive, scale-specific control of the synthesis procedure until StyleGAN.

StyleGAN is an extension of progressive GAN, an architecture that allows us to generate high-quality and high-resolution images. As proposed in [paper], StyleGAN only changes the generator architecture by having an MLP network to learn image styles and inject noise at each layer to generate stochastic variations.

In this post, we will explore the architecture of StyleGAN.

ProGAN Architecture

Because StyleGAN is built on ProGAN, we will first have a quick look through ProgGAN architecture.

Like a general GAN, ProGAN consists of a Generator and Discriminator. The Generator will try to produce “realistic images,” and the Discriminator will classify whether it is fake.

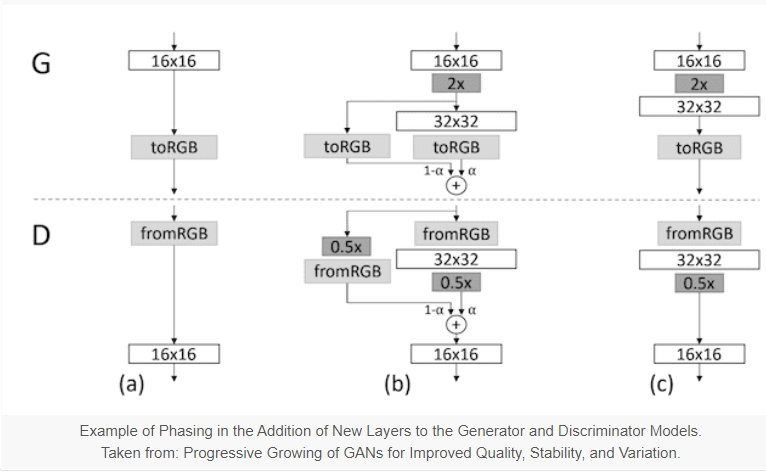

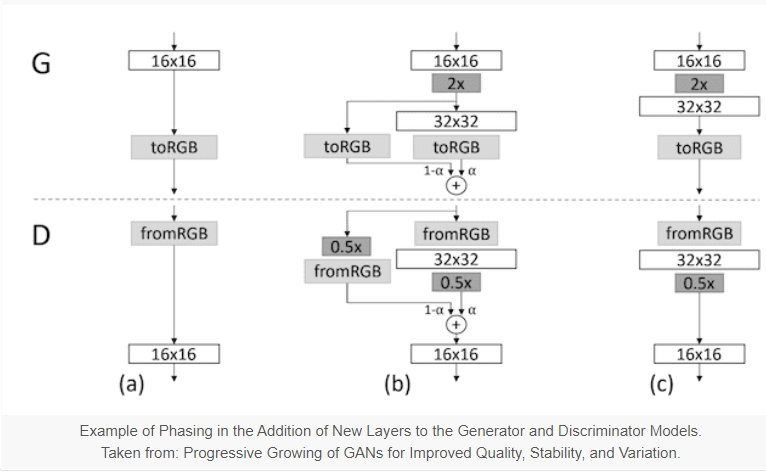

Unlike general GAN, ProGAN Discriminator will classify the image at different scales. In training, Discriminator will receive input at different resolutions and combine them to tell if the image is real or fake. The real data image will undergo a “progressive downsampling process” to produce lower-resolution images. For example, a 256×256 image will be turned into a list of [256×256, 128×128, 64×64, 32×32, 16×16] images and fed into the Discriminator. On the other hand, the Generator will produce images at such resolutions and feed them into the Discriminator.

The architecture of ProGAN has shown success in producing high-quality images. And StyleGAN is built based on this architecture to obtain an intuitive synthesis procedure.

Note: The progressive growth of ProGAN allows the Generator to first learn the image’s overall distribution (context) at early layers and details at later layers.

StyleGAN Generator

There are three changes in the StyleGAN Generator:

- A starting learnable constant

- Mapping Network and Adaptive Instance Normalization (AdaIN)

- Noise Injection for Stochastic Variation

Starting Constant

General GANs Generator generates images from a latent code. Instead, StyleGAN starts with a constant learned image of size 4×4. The progressive growth of the Generator will add new content and upscale this image by applying style and noiseat each block.

Mapping Network (Style Learning Network)

The latent code z, which we often referred in GAN as the mapping of the image at latent space, is now used to produce the style of the image.

The vector zis first sampled from a predefined distribution (Uniform or Gaussian) at latent space Z. Then it is mapped into an intermediate latent space W to produce w. The mapping network is implemented using an 8-layer MLP:

An affine transformation on the intermediate latent code w will produce style y = (y_s, y_b) and feed into the AdaIN layer, following up with a convolution to draw new contents to the image.

The Entangled Problem

There is a question we must ask about the Mapping Network. Why do we need it? Why not just put the latent z instead?

The reason is because of the ‘entangled problem,’ which means that each element of z control more than one factor of the image. Unfortunately, this also means that some elements can affect the same factor. Therefore, we can not scale a single factor without affecting the other. For example, when analyzing the Generator, you may find some elements of z control hair length elements. So you want to change the hair length of the image by scaling up and down these elements. But you may end up with a totally different image because these elements also affect other factors like gender, eye color, etc.

This is because the latent ‘z’ is sampled from a fixed distribution (uniform or normal) while the data distribution is probably different.

This requires the Generator to learn how to match factors from ‘z’ to data distribution. And the Mapping Network covers this job in StyleGAN. “This mapping can be adapted to ‘unwrap’ W so that the factors of variations become more linear” — Tero et al., 2018. i.e., each factor in w contributes to one aspect of the image. The Mapping Network allows the Generator to reserve its capacity to generate more realistic images.

There are many other works on ‘disentanglement’ to solve this problem. [Disentanglement]

Adaptive Instance Normalization (AdaIN)

The latent code ‘w’ produced by the Mapping network is then fed into a learned affine transform and AdaIN layer. The affine transform is implemented using two linear layers to create a style with scale = y_s and bias = y_b . The AdaIN operation formula:

Where x is the output feature map of the previous layer. The AdaIN first normalizes each channel x_i to “zero mean” and “unit variance” and then applies the scale y_s and y_b . This means the style y will control the statistic of the feature map for the next convolutional layer. Where y_s is the standard deviation, and y_b is mean. The style decides which channels will have more contribution in the next convolution.

Localized Feature

One property of the AdaIN is that it makes the effect of each style localized in the network. In other words, the style will only affect the image in the next convolution.

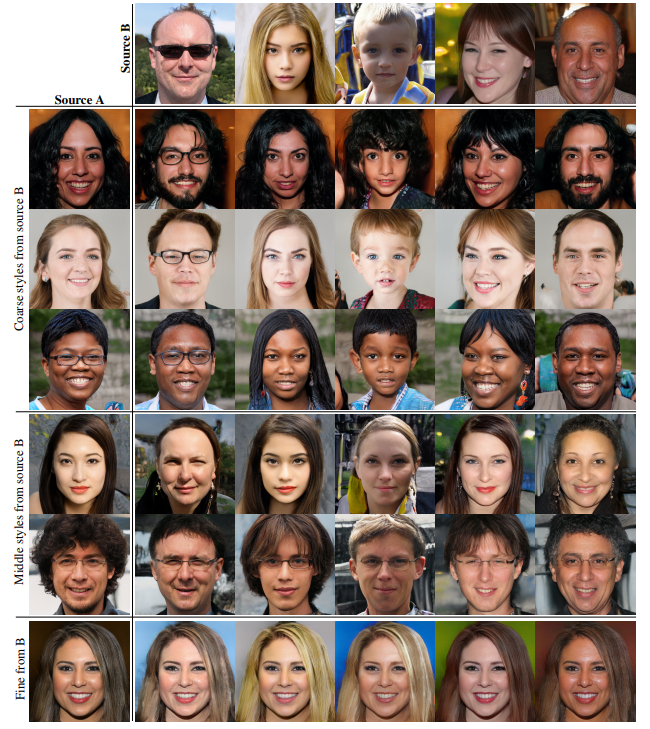

The instance normalization makes all channels ‘zero mean’ and ‘unit variance.’ By doing this, it denies the scaling effects of previous y_s and y_b . This allows scale-specific modification to the styles to control image synthesis. Evidence for this, and also to encourage the localization in the network while training, is Style mixing. The model can generate styles from two latent codes w_1 and w_2 , (or more). For example, for the first four blocks, we use the styles from w_1 , and then use the styles from w_2 . The picture below shows the result of using two different latent codes.

Stochastic Variation with Noise Injection

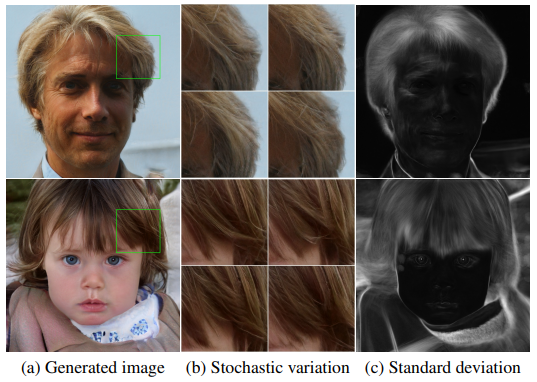

Stochastic variations in an image are small details that do not change the overall context of the image. For example, hair placement, smile angle, stubble, freckles, etc. Yes! They do not change the overall image but are there, and the Generator will learn to generate them. The Noise Injection to the StyleGAN generator before AdaIN layers help generate such variations.

The noise added to the feature map has zero mean and a small scale of variance (compared to the feature map). Therefore, the overall context of the image is preserved as the statistics of the feature map stay “the same.”

This allows the Generator to reserve its capacity to learn how to generate new content on the given style without learning how to generate stochastic variations.

According to the paper, the noise also appears tightly localized in the network. This is interesting and somehow similar to the styles. But noise is noise, and it has nothing to do with the AdaIN. The author of the paper hypothesizes that the generator is pressured to introduce new content asap. This leads to creating stochastic variations based on the new noise provided and denies the effects of previous noises.

Conclusion

To wrap up, StyleGAN achieves style-based image generation by disentangling styles from randomness. We can control the synthesis by controlling the style by localizing or scaling the latent code. The Generator also separates the introduction of stochastic variation. Give us more control over the synthesis procedure of StyleGAN.

Bonus

Although the StyleGAN reaches state-of-the-art performance in generative tasks. It introduces a problem with artifacts in the generated images. In the StyleGAN2 paper, they spotted the problem in the Adaptive Instance Normalization and the Progressive Growing of the Generator. Link to paper!!

StyleGAN has been proposed since 2018. But I hope this post will help some readers understand the architecture of StyleGAN.

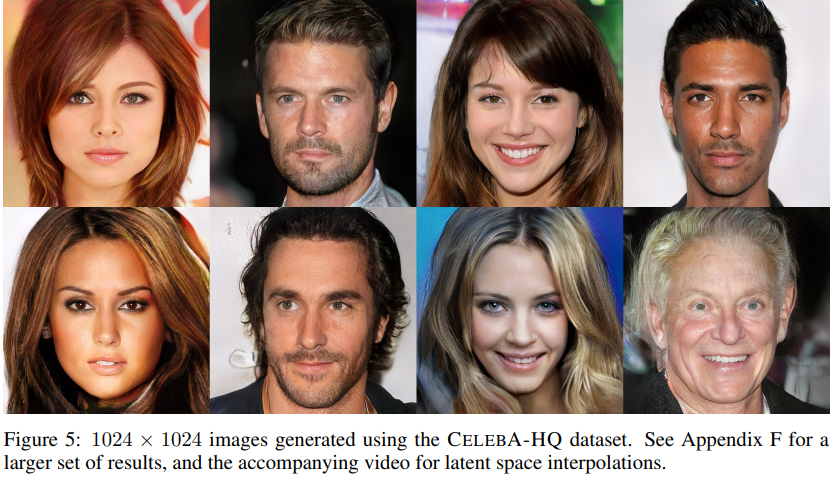

My result running StyleGAN for a night:

They are pretty nice tho U+1F601U+1F601

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.