ROUGE Metrics: Evaluating Summaries in Large Language Models.

Last Updated on August 22, 2023 by Editorial Team

Author(s): Pere Martra

Originally published on Towards AI.

The growing number of business applications built on Large Language Models has created a need to measure the quality of the solutions they provide. This is where metrics like ROUGE become important.

The metrics we have used so far with more traditional models, such as Accuracy, F1 Score, or Recall, do not help us evaluate the output of generative models.

With these models, we are beginning to use metrics such as BLEU, ROUGE, or METEOR, which are adapted to the objective of the model.

In this article, I want to explain how to use the ROUGE metrics to measure the quality of the summaries produced by the newest large language models.

What is ROUGE?

ROUGE consists of a set of metrics that are used to compare the quality of a generated text against a reference text.

It can be used whenever we have a reference text available. Some of the most common applications are translation, text summarization, and entity extraction, among others.

It’s important to note that ROUGE is only as reliable as the reference texts. If they are of poor quality, the results provided by ROUGE may not correctly reflect the quality of the generated text.

Another utility of ROUGE is measuring how different the outputs produced by two different models are. Maybe we want to see if there are any significant differences between the texts produced by the two models.

Additionally, we can use ROUGE to estimate whether processes like pruning or quantization (weight reduction of models) have significantly altered the results produced by our model for the specific task we want to use it for.

The metrics provided are:

- ROUGE-1: This indicates the match between the generated text and the reference text using 1-grams or individual words.

- ROUGE-2: The same as ROUGE-1, but it considers 2-grams (pairs of consecutive words).

- ROUGE-L: This metric evaluates the longest common subsequence of words between the two texts. The matched words must appear in the same order in both texts, but they do not need to be contiguous.

More information about the ROUGE metrics can be found at: https://pypi.org/project/rouge-score/
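To make the three metrics more concrete, here is a minimal sketch that computes them by hand for a pair of short sentences. It is a simplified illustration and not the implementation used by the rouge-score package: it assumes plain whitespace tokenization, no stemming, and reports only the F1 value. The function names are my own.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return a multiset (Counter) of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(overlap, generated_total, reference_total):
    """Combine precision and recall into an F1 score."""
    if overlap == 0:
        return 0.0
    precision = overlap / generated_total
    recall = overlap / reference_total
    return 2 * precision * recall / (precision + recall)


def rouge_n(generated, reference, n):
    """F1 over the n-grams shared by the generated and reference texts."""
    gen = ngrams(generated.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((gen & ref).values())  # each n-gram counts at most as often as in both texts
    return f1(overlap, sum(gen.values()), sum(ref.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, tok_a in enumerate(a, start=1):
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[-1][-1]


def rouge_l(generated, reference):
    """F1 based on the longest common subsequence of words."""
    gen, ref = generated.lower().split(), reference.lower().split()
    return f1(lcs_length(gen, ref), len(gen), len(ref))


reference = "the cat sat on the mat"
generated = "the cat lay on the mat"

print(round(rouge_n(generated, reference, 1), 4))  # 0.8333 (5 of 6 words match)
print(round(rouge_n(generated, reference, 2), 4))  # 0.6 (3 of 5 word pairs match)
print(round(rouge_l(generated, reference), 4))     # 0.8333 (common subsequence of 5 words)
```

Changing a single word leaves ROUGE-1 and ROUGE-L high, while ROUGE-2 drops more because the changed word breaks two word pairs.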

What are we going to measure in our Notebook?

We will measure a base T5 model against a T5 model fine-tuned for summary generation.

Initially, we will analyze the differences between the two models to determine if the fine-tuning process has produced a model with distinct behavior.

Later, we will evaluate both models using the reference texts provided in the CNN-DailyMail Dataset, to see which one produces better summaries.

The source code for this article, as well as the entire course on Large Language Models, can be found in my GitHub repository along with the corresponding English articles. The notebook used for this article can be accessed at: https://github.com/peremartra/Large-Language-Model-Notebooks-Course/blob/main/rouge-evaluation-untrained-vs-trained-llm.ipynb


The models used can be found on Hugging Face.

- Fine-Tuned: t5-Base: https://huggingface.co/flax-community/t5-base-cnn-dm

- Base: t5-Base: https://huggingface.co/t5-base

Loading the data.

In the first part of the article, we will use a dataset available on Kaggle containing MIT news articles without reference summaries. This dataset will be used to verify that the two loaded models generate sufficiently different summaries.

Dataset Link: https://www.kaggle.com/datasets/deepanshudalal09/mit-ai-news-published-till-2023

Let’s begin by importing the typical Python libraries:

#Import generic libraries

import numpy as np

import pandas as pd

import torch

All of these are well-known libraries in the Python universe. Importing torch is necessary because we call torch.no_grad() when generating the summaries, and the models we load run on PyTorch.

Let’s load the dataset.

news = pd.read_csv('/kaggle/input/mit-ai-news-published-till-2023/articles.csv')

DOCUMENT="Article Body"

#Because it is just a course we select a small portion of News.

MAX_NEWS = 3

subset_news = news.head(MAX_NEWS)

subset_news.head()

The complete article content is contained in the column Article Body. This is the only field from the Dataset that we need.

We are selecting only three articles to speed up the execution of the notebook.

articles = subset_news[DOCUMENT].tolist()

We’ve stored the complete text of the three loaded articles in the variable named articles. If we want to work with more or fewer news articles, it is as simple as changing the value of the variable MAX_NEWS.

Load the models and create the summaries.

The two models used are from the T5 family. One of them is the foundational T5-Base model, and the other is a model derived from T5-Base but fine-tuned with the CNN-DailyMail dataset.

Since both models are available on Hugging Face, we’ll work with the transformers library.

import transformers

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

#The model names are stored in the variables model_name_small and model_name_reference.

model_name_small = "t5-base"

model_name_reference = "flax-community/t5-base-cnn-dm"

To obtain the tokenizers and models, I created the following function:

#This function returns the tokenizer and the Model.
def get_model(model_id):
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_id)
    return tokenizer, model

This function receives the model_id as input and returns the tokenizer together with the loaded model.

The tokenizer is used to convert the text into sequences of tokens that can be processed by the model.

Using the get_model function, we can obtain the two tokenizers and models:

tokenizer_small, model_small = get_model(model_name_small)

tokenizer_reference, model_reference = get_model(model_name_reference)

To generate the summaries, we will create a function that takes four parameters:

- A list of texts to summarize.

- The tokenizer.

- The model.

- The maximum length for the obtained summary.

The function needs to format the text of the articles slightly before summarizing them, by adding a prefix to each article. This prefix contains the instruction for the model. In our case, we want the model to summarize the articles, so the prefix will be: “Summarize this news: “.

Using the tokenizer, each article is transformed into encodings that are sent to the model. The model returns a summary in the form of token IDs, which must be decoded back into text.

def create_summaries(texts_list, tokenizer, model, max_l=125):
    # We are going to add a prefix to each article to be summarized
    # so that the model knows what it should do
    prefix = "Summarize this news: "
    summaries_list = []  # Will contain all summaries

    texts_list = [prefix + text for text in texts_list]

    for text in texts_list:
        summary = ""

        # Calculate the encodings
        input_encodings = tokenizer(text,
                                    max_length=1024,
                                    return_tensors='pt',
                                    padding=True,
                                    truncation=True)

        # Generate summaries
        with torch.no_grad():
            output = model.generate(
                input_ids=input_encodings.input_ids,
                attention_mask=input_encodings.attention_mask,
                max_length=max_l,  # Set the maximum length of the generated summary
                num_beams=2,  # Set the number of beams for beam search
                early_stopping=True
            )

        # Decode to get the text
        summary = tokenizer.batch_decode(output, skip_special_tokens=True)

        # Add the summary to summaries list
        summaries_list += summary

    return summaries_list

Let’s look into a specific part of the function’s code: the model invocation for generating the summaries:

# Generate summaries
with torch.no_grad():
    output = model.generate(
        input_ids=input_encodings.input_ids,
        attention_mask=input_encodings.attention_mask,
        max_length=max_l,  # Set the maximum length of the generated summary
        num_beams=2,  # Set the number of beams for beam search
        early_stopping=True
    )

As you can see, the block starts with the line with torch.no_grad(), which indicates that we don’t want to calculate gradients within the block. Gradients are only needed for training, so skipping them saves memory and improves the speed of the code.

Then we call model.generate with the following parameters:

- input_ids: The encodings representing the tokenized input text.

- attention_mask: The attention mask, provided by the tokenizer.

- max_length: The maximum length of the model’s response.

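- num_beams: The number of candidate sequences that beam search keeps at each generation step. A larger number of beams explores more alternatives and tends to find more probable sequences, at a higher computational cost. We’re keeping it at 2 so that generation stays fast.

- early_stopping: With beam search, setting this parameter to True stops generation as soon as num_beams complete candidate sequences have been found, instead of continuing to search for better ones. It is a good practice to set its value to True.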
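To see what the number of beams changes, here is a toy beam search in plain Python. It is only a sketch under strong assumptions: the "model" is a hypothetical lookup table of next-token probabilities that I invented for the example, every sequence has exactly two tokens, and none of the refinements found in the transformers implementation (length penalty, end-of-sequence handling) are included.

```python
import math

# Hypothetical toy "model": next-token probabilities given the last token.
NEXT_TOKEN_PROBS = {
    "<s>": {"A": 0.6, "B": 0.4},
    "A": {"x": 0.5, "y": 0.5},
    "B": {"z": 0.9, "w": 0.1},
}


def beam_search(num_beams, steps=2):
    """Return the most probable sequence found and its probability."""
    beams = [(0.0, ["<s>"])]  # (cumulative log-probability, tokens so far)
    for _ in range(steps):
        candidates = []
        for score, tokens in beams:
            for token, prob in NEXT_TOKEN_PROBS[tokens[-1]].items():
                candidates.append((score + math.log(prob), tokens + [token]))
        # Keep only the num_beams most probable partial sequences.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:num_beams]
    best_score, best_tokens = beams[0]
    return best_tokens[1:], math.exp(best_score)


print(beam_search(num_beams=1))  # greedy: follows "A" and ends with probability 0.3
print(beam_search(num_beams=2))  # keeps "B" alive and finds "B z" with probability 0.36
```

With a single beam, the search commits to "A" because it is the most probable first token, and misses the sequence "B z", which is more probable overall. With two beams, both first tokens survive the first step and the better sequence is found.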

Let’s see how to call the create_summaries function to obtain the summaries:

# Creating the summaries for both models.

summaries_small = create_summaries(articles,

tokenizer_small,

model_small)

summaries_reference = create_summaries(articles,

tokenizer_reference,

model_reference)

As you can see, it's simple. You just need to pass the texts to be summarized along with the tokenizers and the models.

Let’s take a look at the generated summaries from the two models:

summaries_small: [‘MIT and MIT-Watson AI Lab have developed a unified framework. the system can simultaneously predict molecular properties and generate new molecules. it uses this grammar to construct viable molecules and predict their properties.’, ‘\’BioAutoMATED\’ is an automated machine-learning system that can select and build an appropriate model for a given dataset. it can even take care of the laborious task of data preprocessing, whittling down a months-long process to just a few hours. \’”We want to lower these barriers for a lot of folks that want to use machine learning or biology,” says first co-author Jacqueline Valeri.’, “MIT and IBM research scientists have made a computer vision model more robust by training it to work like a part of the brain that humans and other primates rely on for object recognition. ‘we asked the artificial neural network to make the function of one of your inside simulated “neural” layers as similar as possible to the corresponding biological neural layer,’ says MIT professor.”]

summaries_reference: [‘Researchers created a machine-learning system that automatically learns the “language” of molecules using only a small, domain-specific dataset. The system learns to construct viable molecules and predict their properties. Computational design and Fabrication Group will be presented at the International Conference for Machine Learning.’, “Automated machine-learning system can select and build an appropriate model for a given dataset. ‘BioAutoMATED’ is an automated machine-learning system. The tool includes binary classification models, multi-class classification models, and more complex neural networks.”, “MIT and IBM researchers have found that artificial neural networks resemble the multilayered brain circuits that process visual information in humans and other primates. ‘We asked it to do both of those things as well as the standard, computer vision approach,’ said one expert. The network found to be more robust by training it to work like a part of the brain that humans rely on for object recognition.”]

At first glance, you can already observe that the generated summaries are different. However, it is difficult to determine which of them is better for our needs simply by looking at the summaries.

To determine whether the summaries are significantly different, we’ll use ROUGE. When comparing two generated summaries, where neither serves as the reference summary, we don’t learn anything about the quality of the summaries per se. Instead, we obtain an indication of how different the results are from each other, which allows us to identify whether the fine-tuning process has had an impact on the summaries.

Calculating ROUGE

Let’s install and load the necessary libraries so that we can run the ROUGE metrics.

While there are several libraries that implement ROUGE calculation, I’ve decided to use the evaluate library. Sometimes the choice is based on familiarity, and this is the library I’m used to.

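!pip install evaluate rouge_score nltk

import evaluate
import nltk
from nltk.tokenize import sent_tokenize

#sent_tokenize needs the NLTK sentence tokenizer data
#(recent NLTK releases may ask for "punkt_tab" instead of "punkt")
nltk.download("punkt")

#With the load function of the evaluate library
#we create a rouge_score object
rouge_score = evaluate.load("rouge")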

Once we have the libraries imported, calculating the ROUGE metric is as simple as a single call to the compute function of the rouge_score object we just created.

The texts are passed to this function, along with a third parameter indicating whether you want to use word stems or whole words for the comparisons. It’s common to compare only the stems so that words like “jump” and “jumped” are considered equal.

Here are some examples of stems:

- Jumping → Jump.

- Running → Run.

- Cats → Cat.

When using True for the use_stemmer parameter, words with the same stem are considered equal, while using False treats them as distinct words.
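The effect of stemming on the scores can be sketched with a few lines of Python. Note the assumptions: crude_stem is a hypothetical helper that only strips a few suffixes and is far simpler than a real stemmer such as Porter's, and unigram_f1 is a simplified ROUGE-1 written for this example.

```python
from collections import Counter


def crude_stem(word):
    """Very rough stand-in for a real stemmer: strips a few common suffixes."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def unigram_f1(generated, reference, use_stemmer=False):
    """Simplified ROUGE-1 F1 with optional (crude) stemming."""
    gen_tokens = generated.lower().split()
    ref_tokens = reference.lower().split()
    if use_stemmer:
        gen_tokens = [crude_stem(t) for t in gen_tokens]
        ref_tokens = [crude_stem(t) for t in ref_tokens]
    gen, ref = Counter(gen_tokens), Counter(ref_tokens)
    overlap = sum((gen & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(gen_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


reference = "the cats jumped over fences"
generated = "the cat jumps over fence"

print(round(unigram_f1(generated, reference, use_stemmer=False), 4))  # 0.4
print(round(unigram_f1(generated, reference, use_stemmer=True), 4))   # 1.0
```

Without stemming, only "the" and "over" match. With stemming, all five words are reduced to the same stems and the two sentences are scored as identical.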

However, it’s important to prepare the text a bit first by stripping leading and trailing whitespace and placing each sentence on its own line. To accomplish this, let’s create the compute_rouge_score function:

def compute_rouge_score(generated, reference):
    # We need to separate the sentences with '\n' before sending the text to ROUGE
    generated_with_newlines = ["\n".join(sent_tokenize(s.strip())) for s in generated]
    reference_with_newlines = ["\n".join(sent_tokenize(s.strip())) for s in reference]

    return rouge_score.compute(
        predictions=generated_with_newlines,
        references=reference_with_newlines,
        use_stemmer=True,
    )

As you can see, this function modifies the texts received as parameters by adding line breaks to each sentence.

Thereafter, it makes only a single call to the rouge_score.compute function.
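The preparation step matters mainly for rougeLsum, which treats each line as one sentence. As an illustration of what the transformation does to a summary, here is a small sketch that uses a naive regular expression instead of sent_tokenize, so it runs without NLTK. The split_sentences helper is my own simplification and will fail on abbreviations such as "Dr." that a real sentence tokenizer handles.

```python
import re


def split_sentences(text):
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def one_sentence_per_line(text):
    """Strip surrounding whitespace and put each sentence on its own line."""
    return "\n".join(split_sentences(text))


summary = "  MIT built a new system. It predicts molecular properties.  "
print(one_sentence_per_line(summary))
# MIT built a new system.
# It predicts molecular properties.
```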

compute_rouge_score(summaries_small, summaries_reference)

In this call, we pass the summaries generated by the T5 Base model and those generated by the fine-tuned T5 model. The result it returns is:

{‘rouge1’: 0.47018752391886715,

‘rouge2’: 0.3209013209013209,

‘rougeL’: 0.34330271718331423,

‘rougeLsum’: 0.44692881745120555}

We can see that the degree of similarity in ROUGE-1 is 47%, whereas in ROUGE-2 it’s 32%. This indicates that the summaries generated by the two models are distinct: they share some similarities but differ significantly, suggesting that the fine-tuning process has influenced how the model generates the summaries.
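Since neither summary acts as a true reference here, it is worth checking that the order of the arguments does not change the conclusion. The sketch below uses a simplified unigram score of my own (whitespace tokens, no stemming, non-empty texts assumed) to show that swapping the two texts swaps precision and recall but leaves the F1 value, which is what the scores above report, unchanged.

```python
from collections import Counter


def unigram_scores(generated, reference):
    """Return (precision, recall, F1) over shared words; assumes non-empty texts."""
    gen = Counter(generated.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((gen & ref).values())
    precision = overlap / sum(gen.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return precision, recall, f1


text_a = "the system predicts molecular properties"
text_b = "researchers built a system that predicts properties of molecules"

p_ab, r_ab, f_ab = unigram_scores(text_a, text_b)
p_ba, r_ba, f_ba = unigram_scores(text_b, text_a)

print(round(p_ab, 4), round(r_ab, 4), round(f_ab, 4))  # 0.6 0.3333 0.4286
print(round(p_ba, 4), round(r_ba, 4), round(f_ba, 4))  # 0.3333 0.6 0.4286
```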

However, we can’t yet determine which model is better, as we haven’t compared against any reference text.

Comparing with a reference text.

Now we are going to use a second Dataset: the CNN_Dailymail, a well-known dataset that’s part of the Datasets library.

Apart from the articles, this dataset contains human-generated summaries that we are going to use as reference texts.

We will compare the summaries generated by the two models with the reference summaries in the dataset. This will help us determine which model produces summaries that are more similar to the ones provided as reference texts.

from datasets import load_dataset

cnn_dataset = load_dataset(
    "cnn_dailymail", "3.0.0"
)

#Get just a few news to test

sample_cnn = cnn_dataset["test"].select(range(MAX_NEWS))

sample_cnn

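As in the first part of the article, we will load only a limited number of news articles, which helps keep the execution time under control.

We take the length of the longest reference summary in the sample and use it, plus a small margin, as the maximum length for the summaries generated by the models. Note that len counts characters, while the max_length parameter of generate counts tokens, so this value acts as a generous upper bound rather than a tight limit.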

max_length = max(len(item['highlights']) for item in sample_cnn)

max_length = max_length + 10
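The difference between counting characters and counting words or tokens can be seen in a quick sketch. The two highlights below are invented for the example, and the word count is only a rough proxy for the number of tokens the T5 tokenizer would produce.

```python
# Hypothetical reference summaries, invented for illustration.
highlights = [
    "Storm closes two airports.",
    "Researchers release a new summarization model.",
]

# What len() measures on a string: characters.
max_chars = max(len(text) for text in highlights)

# A rough proxy for tokens: whitespace-separated words.
max_words = max(len(text.split()) for text in highlights)

print(max_chars)  # 46
print(max_words)  # 6
```

A limit based on characters is therefore several times larger than one based on words. For a tighter limit, the length could be measured in tokens instead, for example with len(tokenizer_small(text).input_ids).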

We now have everything we need to generate the summaries.

summaries_t5_base = create_summaries(sample_cnn["article"],

tokenizer_small,

model_small,

max_l=max_length)

summaries_t5_finetuned = create_summaries(sample_cnn["article"],

tokenizer_reference,

model_reference,

max_l=max_length)

#Get the real summaries from the cnn_dataset

real_summaries = sample_cnn['highlights']

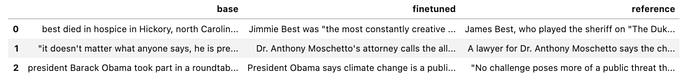

With the three sets of summaries in their respective variables, let’s look at the content.

summaries = pd.DataFrame.from_dict(

{

"base": summaries_t5_base,

"finetuned": summaries_t5_finetuned,

"reference": real_summaries,

}

)

summaries.head()

The truth is that at a glance, it’s really difficult to know which of the two models creates better summaries.

Let’s compute the ROUGE metrics for both models.

compute_rouge_score(summaries_t5_base, real_summaries)

summaries_t5_base: {‘rouge1’: 0.3050834824090638,

‘rouge2’: 0.07211128178870115,

‘rougeL’: 0.2095520274299344,

‘rougeLsum’: 0.2662418008348241}

compute_rouge_score(summaries_t5_finetuned, real_summaries)

summaries_t5_finetuned: {‘rouge1’: 0.31659149328289443,

‘rouge2’: 0.11065084340946411,

‘rougeL’: 0.22002036956205442,

‘rougeLsum’: 0.24877540132887144}

With these results, I would say that the fine-tuned model makes better summaries than the T5-Base model. This is based on the fact that it achieves higher scores in all metrics except rougeLsum, where the base model scores slightly higher.
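The comparison is easier to read if we subtract the two result dictionaries. The snippet below simply reuses the scores reported above; the variable names are my own.

```python
# Scores reported above for each model against the reference summaries.
base = {
    "rouge1": 0.3050834824090638,
    "rouge2": 0.07211128178870115,
    "rougeL": 0.2095520274299344,
    "rougeLsum": 0.2662418008348241,
}
finetuned = {
    "rouge1": 0.31659149328289443,
    "rouge2": 0.11065084340946411,
    "rougeL": 0.22002036956205442,
    "rougeLsum": 0.24877540132887144,
}

# Positive values mean the fine-tuned model scored higher.
differences = {metric: finetuned[metric] - base[metric] for metric in base}

for metric, diff in differences.items():
    winner = "fine-tuned" if diff > 0 else "base"
    print(f"{metric}: {diff:+.4f} ({winner})")
```

The largest gap is in rouge2, in favor of the fine-tuned model, while rougeLsum is the only metric where the base model comes out ahead.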

It’s also important to note that ROUGE metrics are open to interpretation and do not provide an absolute truth. In other words, a model isn’t necessarily better than another simply because its ROUGE scores are higher. These scores only indicate that the texts it generates have more similarity to the reference texts than those produced by another model.

Both models have very similar results, but the fine-tuned model achieves higher scores in all metrics except ROUGE-Lsum, with the largest gain in ROUGE-2. This suggests that the fine-tuned model uses wording, particularly word pairs, closer to the reference texts, while the base model may follow their sentence-level structure slightly more closely.

In any case, we cannot draw clear conclusions since we have only analyzed a few summaries. To determine which model is the best, we would need to develop a different strategy. One good approach would be to group the news by topic and examine whether there are significant differences in the results.
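As a rough sketch of what grouping by topic could look like, the code below averages a simplified ROUGE-1 score per topic for each model. Everything in it is an assumption made for illustration: the records and their topic labels are invented, and rouge1_f1 is a minimal stand-in for the real metric.

```python
from collections import Counter, defaultdict
from statistics import mean


def rouge1_f1(generated, reference):
    """Simplified ROUGE-1 F1 over whitespace tokens."""
    gen = Counter(generated.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((gen & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(gen.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical records: topic labels and texts are invented for the example.
records = [
    {"topic": "sports", "reference": "team wins final",
     "base": "team wins final", "finetuned": "team loses final"},
    {"topic": "sports", "reference": "coach resigns",
     "base": "player injured", "finetuned": "coach resigns"},
    {"topic": "politics", "reference": "senate passes bill",
     "base": "senate passes bill", "finetuned": "senate passes bill"},
]


def mean_score_by_topic(records, model_key):
    """Average the score of one model's summaries within each topic."""
    scores = defaultdict(list)
    for record in records:
        scores[record["topic"]].append(
            rouge1_f1(record[model_key], record["reference"])
        )
    return {topic: mean(values) for topic, values in scores.items()}


base_by_topic = mean_score_by_topic(records, "base")
finetuned_by_topic = mean_score_by_topic(records, "finetuned")
print(base_by_topic)
print(finetuned_by_topic)
```

With real data, the same grouping could be applied to the scores returned by compute_rouge_score, using enough articles per topic for the averages to be meaningful.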

Conclusions.

Metrics are not enough. Even metrics like ROUGE fall short, since they depend largely on the quality of the texts we take as reference.

The human factor is still very important for checking the suitability of the responses. This is why the models that have been trained with human involvement are succeeding.

At least there is one piece of good news: it is quite easy to obtain metrics like ROUGE, which can help us in the decision process and lead to better judgments about our models.

The full course about Large Language Models is available on GitHub. To stay updated on new articles, please consider following the repository or starring it. This way, you’ll receive notifications whenever new content is added.


I write about Deep Learning and AI regularly. Consider following me on Medium to get updates about new articles. And, of course, you are welcome to connect with me on LinkedIn.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.