Machine Learning A-Z Briefly Explained Part 2

Last Updated on January 7, 2023 by Editorial Team

Author(s): Gencay I.

Originally published on Towards AI.

For Refreshing and Quick Recall

Content Table

· Introduction

· Terms

∘ Dimensionality Reduction

∘ Supervised Learning Algorithms

∘ Unsupervised Learning Algorithms

∘ Association Rule Learning

∘ Reinforcement Learning

∘ Batch Learning

∘ Online Learning

∘ Learning Rate

∘ Instance-Based Learning

∘ Model-based Learning

∘ Model Parameters

∘ Sampling Noise

∘ Sampling Bias

∘ Feature Engineering

∘ Feature Extraction

∘ Regularization

∘ Hyperparameter

∘ Stratified Sampling

∘ Map-Reduce

∘ Cross-validation

∘ Grid Search

∘ Randomized Search

· Conclusion

Introduction

Welcome to another A-Z article. After publishing the “Machine Learning A-Z Briefly Explained” article, I realized that there were many terms I had not mentioned. Machine Learning is a really big field, and I could explain only some of its terms in the first part. Like that part, this article aims to refresh your memory before an interview or before starting a project.

You can benefit from this article in both scenarios.

It also helps junior developers, entry-level Data Scientists, and Data Analysts who want to become Data Scientists get familiar with these machine learning terms.

I would also like to walk through real-life code, but that would not be brief, so I am preparing an e-book containing real-life examples and Python code. I think it will be ready in July, and I will add links after the articles.

I also have to thank you for your attention to my A-Z articles about Machine Learning. Your feedback really motivates me to keep going.

So let's skip the chit-chat and dig into the terms.

Terms

Dimensionality Reduction

Simplifying the data, for example by merging correlated features, while trying not to lose too much information.

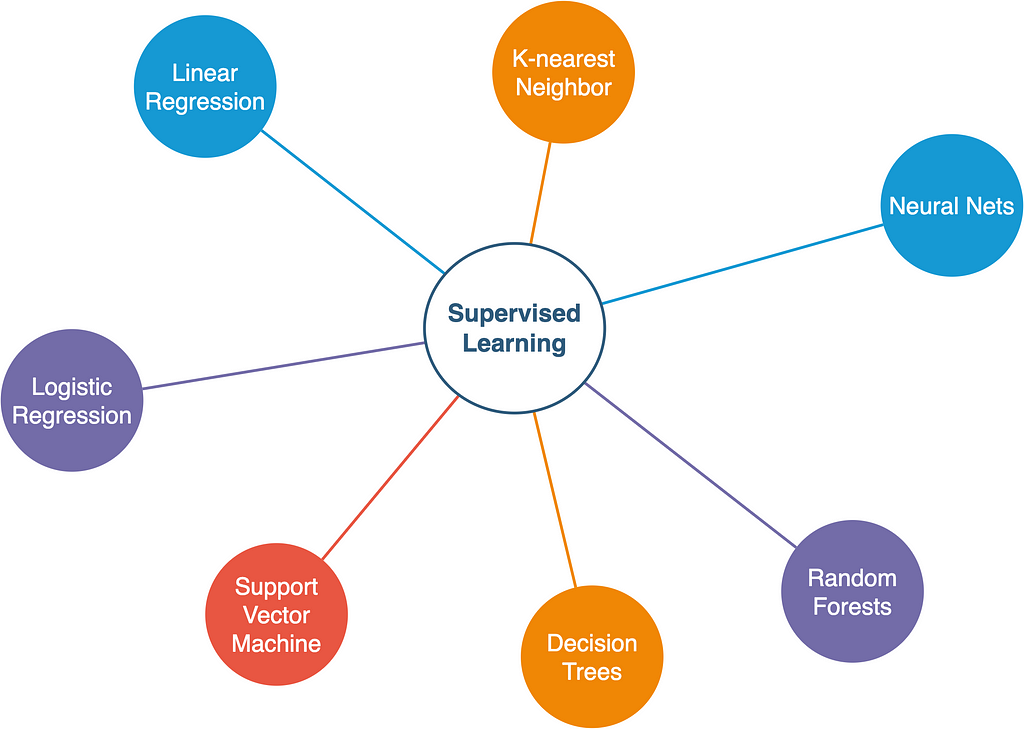
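
As a minimal sketch of the idea, assuming two-dimensional points that lie almost on a straight line, the hypothetical helper below projects them onto the direction of largest variance (the first principal component). The data and the function name are made up for illustration.

```python
import math

def project_to_first_component(points):
    """Project 2-D points onto their first principal axis (one-component PCA)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the 2x2 covariance matrix.
    var_x = sum(cx * cx for cx, _ in centered) / n
    var_y = sum(cy * cy for _, cy in centered) / n
    cov_xy = sum(cx * cy for cx, cy in centered) / n

    # Angle of the direction with the largest variance (closed form for 2x2).
    theta = 0.5 * math.atan2(2 * cov_xy, var_x - var_y)
    axis = (math.cos(theta), math.sin(theta))

    # One number per point instead of two.
    return [cx * axis[0] + cy * axis[1] for cx, cy in centered]

# Hypothetical data, roughly following y = 2x.
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
reduced = project_to_first_component(points)
```

Each point is now described by a single number, and because the points were nearly collinear, almost all of the original variance survives the projection.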

Supervised Learning Algorithms

You want to make a prediction, and your training data includes the label, the value you want to predict.

When you want to predict house price;

Label = house price

- K-Nearest Neighbors

- Linear Regression

- Logistic Regression

- Support Vector Machines (SVM)

- Decision Trees

- Random Forests

- Neural Nets

If a visualization helps you remember it more easily;

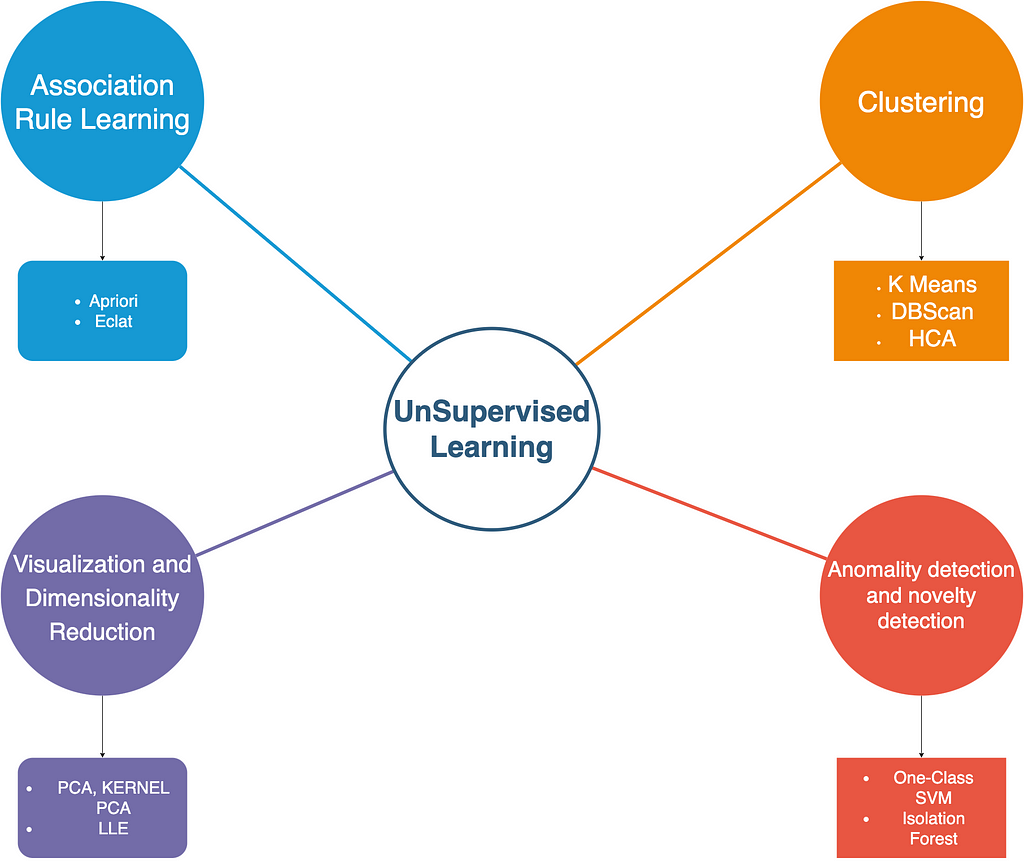

Unsupervised Learning Algorithms

Your training data has no label, so the system tries to find structure in the data on its own.

For example, when you want to group similar houses into segments, there is no label such as house price to learn from.

- Clustering (K-Means, DBSCAN, HCA)

- Anomaly detection and novelty detection (One-class SVM, Isolation Forest)

- Visualization and dimensionality reduction (PCA, Kernel PCA, LLE)

- Association rule learning (Apriori, Eclat)
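
To make clustering concrete, here is a minimal sketch of K-Means on one-dimensional data. The house sizes, the starting centroids, and the function name are assumptions chosen for illustration, not part of any library.

```python
def k_means_1d(values, centroids, n_iterations=10):
    """Cluster numbers around the given starting centroids (plain k-means)."""
    centroids = list(centroids)
    for _ in range(n_iterations):
        # Assignment step: attach every value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical house sizes in square meters, with no label attached.
sizes = [48, 52, 55, 60, 140, 150, 155, 160]
centroids, clusters = k_means_1d(sizes, centroids=[50, 100])
```

No label was given, yet the small and the large houses end up in separate groups.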

Association Rule Learning

Let me explain it with an example: arranging supermarket shelves.

Products that tend to sell together are placed close to one another.

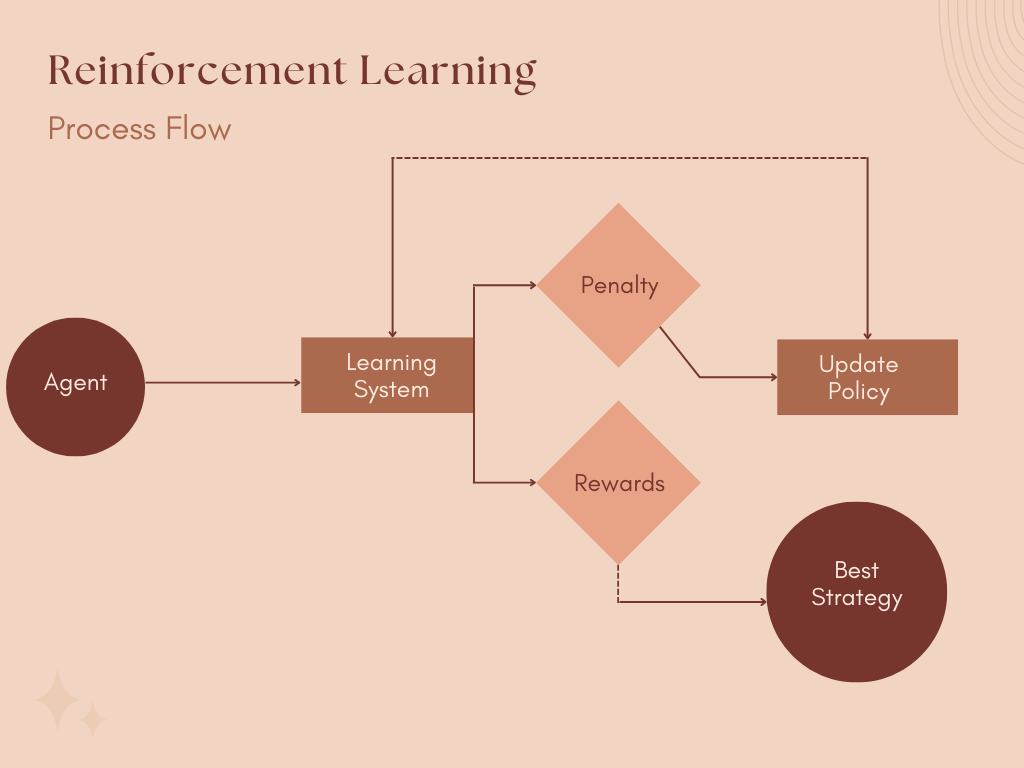
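
How do you measure "tend to sell together"? Two common measures are support and confidence. The sketch below computes them on a handful of made-up shopping baskets; the data and function names are hypothetical.

```python
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "cereal"},
    {"bread", "butter", "jam"},
]

def support(itemset, baskets):
    """Fraction of baskets that contain every item in the itemset."""
    return sum(itemset <= basket for basket in baskets) / len(baskets)

def confidence(antecedent, consequent, baskets):
    """Of the baskets with the antecedent, the fraction that also hold the consequent."""
    return support(antecedent | consequent, baskets) / support(antecedent, baskets)

bread_butter_support = support({"bread", "butter"}, baskets)
bread_to_butter = confidence({"bread"}, {"butter"}, baskets)
```

Here bread and butter appear together in three of five baskets, and about three quarters of the baskets with bread also contain butter, which is a hint to shelve them near each other.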

Reinforcement Learning

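The learning system is called an agent. It observes the environment, takes actions, and gets rewards or penalties in return, learning over time which actions earn the most reward.

Example: Teach a robot how to walk.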
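
A walking robot is too big for a short example, so here is a much smaller sketch, assuming a toy world of five positions on a line where the agent is rewarded for reaching the last one. It uses the Q-learning update rule; the environment, the constants, and the function names are all made up for illustration. The update strength `alpha` is set to 1.0 only because this toy environment is deterministic.

```python
import random

N_STATES = 5          # positions 0..4 on a line; position 4 is the goal
ACTIONS = (-1, +1)    # step left or step right

def step(state, action):
    """Environment: move, stop at the left wall, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(n_episodes=50, gamma=0.9, alpha=1.0, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(n_episodes):
        state = 0
        while state != N_STATES - 1:
            action = rng.choice(ACTIONS)  # explore with random actions
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q_table = train()
# Greedy policy: in each non-goal position, pick the action with the highest value.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
```

After training, the greedy policy steps right in every position, which is the shortest way to the reward.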

Batch Learning

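The system is trained on all the available data at once, not step by step. To learn from new data, you train a new version from scratch on the full data set.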

Online Learning

The system learns incrementally from small groups of data called mini-batches. If you are providing a live service, monitor your system's performance carefully, because incoming bad data may ruin your model and your service as well. Try to set limits, or inspect your incoming data frequently.

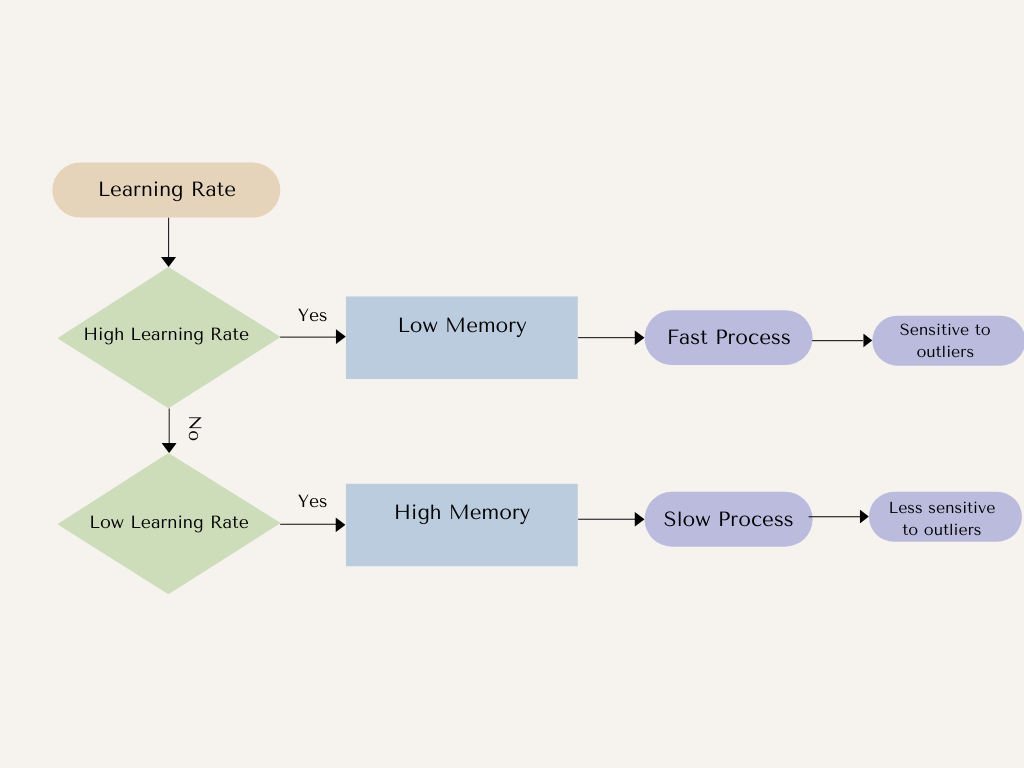
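
A minimal sketch of incremental learning, assuming a one-parameter model y = w * x that is updated with gradient descent as each mini-batch arrives. The stream and the function name are hypothetical.

```python
def online_fit(mini_batches, learning_rate=0.05):
    """Fit y = w * x incrementally, one mini-batch at a time."""
    w = 0.0
    for batch in mini_batches:
        # Gradient of the mean squared error on this mini-batch only.
        gradient = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= learning_rate * gradient
        # The batch can be discarded now; the model keeps only w.
    return w

# A hypothetical stream where the true relationship is y = 3x.
stream = [[(1, 3), (2, 6)], [(3, 9), (4, 12)]] * 10
w = online_fit(stream)
```

The model never sees the whole data set at once, yet `w` ends up very close to 3. The same loop would happily follow bad data too, which is why monitoring matters.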

Learning Rate

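In online learning, the learning rate sets the step size: how quickly the system adapts to new data.

High learning rate: adapts fast, but quickly forgets old data and is sensitive to outliers.

Low learning rate: learns more slowly and remembers old data longer, so it is less sensitive to outliers.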
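
The trade-off can be sketched with a running estimate that moves a fraction of the way toward each new value, where that fraction plays the role of the learning rate. The stream and the function name are made up for illustration.

```python
def running_estimate(stream, learning_rate, start):
    """Move the estimate a fraction of the way toward each new observation."""
    estimate = start
    for value in stream:
        estimate += learning_rate * (value - estimate)
    return estimate

# Hypothetical stream: four normal readings, then one outlier.
stream = [10, 10, 10, 10, 100]

fast = running_estimate(stream, learning_rate=0.9, start=10.0)
slow = running_estimate(stream, learning_rate=0.1, start=10.0)
```

With the high rate the single outlier drags the estimate to about 91, while the low rate only moves it to about 19.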

Instance-Based Learning

The system learns the examples by heart (its experiences), then predicts new cases by comparing them to the stored examples with a similarity measure.
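
K-Nearest Neighbors is a typical instance-based method. Below is a minimal sketch with made-up housing examples and a hypothetical `knn_predict` helper; there is no training step, only stored examples and a distance.

```python
from collections import Counter

def knn_predict(training_data, query, k=3):
    """Classify `query` by majority vote among its k nearest stored examples."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    # The "model" is simply the stored examples, sorted by distance to the query.
    neighbors = sorted(training_data, key=lambda item: squared_distance(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical examples: (size in square meters, number of rooms) -> label
examples = [
    ((45, 1), "small"), ((50, 2), "small"), ((55, 2), "small"),
    ((120, 4), "large"), ((135, 5), "large"), ((150, 5), "large"),
]
prediction = knn_predict(examples, query=(60, 2))
```

The three stored houses closest to the query are all labeled "small", so that is the prediction.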

Model-based Learning

The system analyzes the data, makes a model to explain it, and makes predictions accordingly.

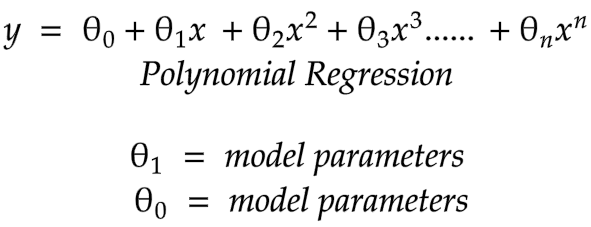

Model Parameters

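Let me explain it with a function. In a linear model such as y = θ0 + θ1·x, the values θ0 and θ1 are the model parameters: the learning algorithm finds them from the training data.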
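
As a minimal sketch, assuming a straight-line model with an intercept `theta0` and a slope `theta1`, the two model parameters can be computed from data with ordinary least squares. The data and the names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = theta0 + theta1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    theta1 = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    theta0 = mean_y - theta1 * mean_x
    return theta0, theta1

# Hypothetical data: house size (square meters) and price (thousands).
sizes = [50, 80, 100, 120]
prices = [150, 210, 250, 290]

theta0, theta1 = fit_line(sizes, prices)   # the learned model parameters
predicted = theta0 + theta1 * 90           # prediction for a 90 square meter house
```

Once the two parameters are learned, the training data is no longer needed to make a prediction. This is also what makes it model-based learning.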

Sampling Noise

When your sample is too small, it can be nonrepresentative purely by chance, so your model might not perform well.

Sampling Bias

When your data is large but your model still cannot perform well, because the sampling method was flawed and the sample does not represent the population.

Feature Engineering

Selecting and combining features to find the best inputs for your model.

“Best” here means the features most correlated with the value you want to predict.

I could simply say label or response variable, but I am trying to explain it as if you were completely new to the topic.
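
One simple way to check which features are "most correlated" is the Pearson correlation coefficient. This sketch ranks two made-up features against a made-up price label; the helper and the data are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical housing data; `price` is the value to predict.
features = {
    "size_sqm": [50, 80, 100, 120, 150],
    "house_number": [7, 3, 9, 1, 5],
}
price = [150, 210, 250, 290, 350]

correlations = {name: pearson(values, price) for name, values in features.items()}
# Strongest relationship first, regardless of sign.
ranked = sorted(correlations, key=lambda name: abs(correlations[name]), reverse=True)
```

The size of the house tracks the price closely, while the house number barely does, so size is the feature worth keeping.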

Feature Extraction

If your features are related to each other, you can merge them into one. For example, if you are handling housing data, you can merge the number of rooms and the area into a single feature: rooms per square meter.
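
The housing example can be sketched in a few lines, assuming each house is a dictionary with hypothetical `rooms` and `area_sqm` fields.

```python
houses = [
    {"rooms": 2, "area_sqm": 50},
    {"rooms": 4, "area_sqm": 100},
    {"rooms": 3, "area_sqm": 120},
]

# Merge two related features into one derived feature.
for house in houses:
    house["rooms_per_sqm"] = house["rooms"] / house["area_sqm"]
```

The first two houses differ in size but turn out to have the same room density, something neither original feature shows on its own.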

Regularization

Constraining the model to keep it simple and avoid overfitting.
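
One common form of this constraint is an L2 (ridge) penalty on the model's weights. The sketch below assumes a one-parameter model y = w * x, for which the penalized least-squares slope has a simple closed form; the data and the function name are made up.

```python
def fit_slope(xs, ys, penalty=0.0):
    """Least-squares slope for y = w * x with an L2 (ridge) penalty on w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + penalty)

xs = [1, 2, 3]
ys = [2, 4, 6]   # hypothetical data with true slope 2

unregularized = fit_slope(xs, ys)              # penalty 0: plain least squares
regularized = fit_slope(xs, ys, penalty=14.0)  # a larger penalty pulls w toward 0
```

The penalty shrinks the slope from 2 to 1. On clean data like this that only hurts, but on noisy data the same shrinkage keeps the model from chasing the noise.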

Hyperparameter

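A parameter of the learning algorithm itself, not of the model. You set it before training, and it stays fixed while the model parameters are learned. Examples are the learning rate and the amount of regularization; tuning hyperparameters helps you find the best-performing model.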

Stratified Sampling

Use it when you expect your data set to be representative of the population.

For example, if the population of your country is 54% male and 46% female and you want to make a prediction about that country from a sample of 100 people, you should select 54 males and 46 females.

What you did is split the population into strata (here, male and female) and sample from each in the right proportion.
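
The 54/46 example can be sketched as follows, assuming a made-up population of 1,000 people and a hypothetical `stratified_sample` helper.

```python
import random

def stratified_sample(population, key, sample_size, seed=0):
    """Sample so that each stratum keeps its share of the population."""
    rng = random.Random(seed)
    strata = {}
    for person in population:
        strata.setdefault(key(person), []).append(person)

    sample = []
    for members in strata.values():
        # Number of picks proportional to the stratum's size.
        n_picks = round(sample_size * len(members) / len(population))
        sample.extend(rng.sample(members, n_picks))
    return sample

# Hypothetical population of 1,000 people: 54% male, 46% female.
population = [{"id": i, "gender": "male" if i < 540 else "female"} for i in range(1000)]
sample = stratified_sample(population, key=lambda p: p["gender"], sample_size=100)
```

The sample contains exactly 54 males and 46 females. With less convenient proportions the rounding can make the total differ slightly from the requested size, which a fuller implementation would need to handle.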

Map-Reduce

When your data is enormous, you split it across multiple servers. The map step processes each chunk in parallel, and the reduce step combines the partial results.
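
The classic illustration is a word count. This sketch runs on one machine and only mimics the two steps; the chunks and function names are made up, and on a real cluster each chunk would be processed by a different server.

```python
from collections import Counter
from functools import reduce

def map_chunk(chunk):
    """Map step: each worker counts words in its own chunk of the data."""
    return Counter(chunk.split())

def merge_counts(left, right):
    """Reduce step: combine two partial results into one."""
    return left + right

# Hypothetical chunks, as if the text were split across three servers.
chunks = ["big data big", "data is big", "small data"]

partial_counts = map(map_chunk, chunks)   # would run in parallel on a real cluster
word_counts = reduce(merge_counts, partial_counts, Counter())
```

No worker ever needs the whole text; only the small partial counts are brought together at the end.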

Cross-validation

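Splitting the training set into several small subsets (folds), then training each candidate model on all but one fold and evaluating it on the held-out fold, rotating through the folds. Averaging the scores lets you compare models and pick the best performer to use in production.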
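
A minimal sketch of k-fold cross-validation, comparing two deliberately simple candidate models on made-up data. All function names here are hypothetical; libraries such as Scikit-Learn provide ready-made versions.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    fold_size = n_samples // k
    indices = list(range(n_samples))
    for fold in range(k):
        start = fold * fold_size
        stop = start + fold_size if fold < k - 1 else n_samples
        yield indices[:start] + indices[stop:], indices[start:stop]

def cross_val_error(xs, ys, fit, predict, k=3):
    """Average validation mean squared error across the k folds."""
    errors = []
    for train_idx, val_idx in k_fold_splits(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        fold_error = sum((predict(model, xs[i]) - ys[i]) ** 2 for i in val_idx) / len(val_idx)
        errors.append(fold_error)
    return sum(errors) / len(errors)

# Candidate 1: always predict the mean of the training labels.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def predict_mean(model, x):
    return model

# Candidate 2: a line through the origin, y = w * x.
def fit_slope(xs, ys):
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def predict_slope(model, x):
    return model * x

# Hypothetical data that follows y = 2x.
xs = [1, 2, 3, 4, 5, 6]
ys = [2, 4, 6, 8, 10, 12]

mean_error = cross_val_error(xs, ys, fit_mean, predict_mean)
slope_error = cross_val_error(xs, ys, fit_slope, predict_slope)
```

Every sample is used for validation exactly once, and the slope model wins with a much lower average error.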

Grid Search

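It is a way to tune hyperparameters automatically instead of by hand: you list the values to try for each hyperparameter, and every possible combination is evaluated, typically with cross-validation.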
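
A minimal sketch of the search loop itself. The `validation_error` function is a made-up stand-in for "train a model with these settings and cross-validate it", and the grid values are arbitrary.

```python
from itertools import product

def validation_error(learning_rate, penalty):
    """Stand-in for training a model with these settings and cross-validating it."""
    return (learning_rate - 0.1) ** 2 + (penalty - 1.0) ** 2

param_grid = {
    "learning_rate": [0.01, 0.1, 1.0],
    "penalty": [0.1, 1.0, 10.0],
}

# Try every combination in the grid and keep the one with the lowest error.
names = list(param_grid)
candidates = [dict(zip(names, values)) for values in product(*param_grid.values())]
best_params = min(candidates, key=lambda params: validation_error(**params))
```

Three values for each of two hyperparameters means nine combinations. The count multiplies with every hyperparameter you add, which is the main cost of this approach.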

Randomized Search

Use it when you have too many hyperparameter combinations to try them all.

Rather than evaluating each possible combination, this evaluates a given number of random combinations, picking a random value for each hyperparameter at every iteration.
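
The same idea as a minimal sketch. Again, `validation_error` is a made-up stand-in for training and cross-validating a model, and the sampling ranges are arbitrary assumptions.

```python
import random

def validation_error(learning_rate, penalty):
    """Stand-in for training a model with these settings and cross-validating it."""
    return (learning_rate - 0.1) ** 2 + (penalty - 1.0) ** 2

def randomized_search(n_iterations, seed=0):
    rng = random.Random(seed)
    trials = []
    for _ in range(n_iterations):
        # Draw one random value per hyperparameter at every iteration.
        params = {
            "learning_rate": 10 ** rng.uniform(-3, 0),  # between 0.001 and 1
            "penalty": 10 ** rng.uniform(-2, 2),        # between 0.01 and 100
        }
        trials.append((validation_error(**params), params))
    return min(trials, key=lambda trial: trial[0]), trials

(best_error, best_params), trials = randomized_search(n_iterations=20)
```

The number of iterations is the budget: you decide how many combinations to pay for, no matter how many hyperparameters there are.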

Conclusion

After explaining these terms, I would really like to talk about a book.

Hands-On Machine Learning with Scikit-Learn and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

If you want to get familiar with Machine Learning and Deep Learning, do your tasks professionally, or learn the concepts in depth, it is really one of the best sources I have found.

Also, all the code is written in Python and explained in detail.

Like all O’Reilly books, it is really excellent and highly recommended.

Thanks for reading my article. In case you missed it, here is the first part of Machine Learning A-Z;

Machine Learning A-Z Briefly Explained

And if you feel you do not know enough statistics to understand Machine Learning concepts, I have already written articles about statistics too, also in two parts.

Thanks for reading my articles.

If you want to keep up with my other articles, please follow me and send me claps. A quick reminder: do not hesitate to send claps after you save my article, because I have seen many people save this article without sending any.

“Machine intelligence is the last invention that humanity will ever need to make.” Nick Bostrom
