Use Google Colab Like A Pro

Last Updated on March 24, 2022 by Editorial Team

Author(s): Wing Poon

Originally published on Towards AI.

15 tips to supercharge your productivity

Regardless of whether you’re a Free, Pro, or Pro+ user, we all love Colab for the resources and ease of sharing it makes available to all of us. As much as we hate its restrictions, Google is to be commended for democratizing Deep Learning, especially for students from countries which would otherwise have no opportunity to participate in this ‘new electrification’ of entire industries.

Have you spent countless hours working in Colab but have yet to invest a few minutes of time to really get to know the tool and be at your most productive?

“Time is not refundable; use it with intention.”

1. Check and Report on your GPU Allocation

“Life is like a box of chocolates. You never know what you’re gonna get.”

- Forrest Gump

I’m so sorry, we’re out of V100s, let’s set you up with our trusty old K80 with 12GB of RAM. You can try again in an hour if you’re feeling lucky! Uhm, yeah Google, thanks but no thanks.

gpu = !nvidia-smi -L

print(gpu[0])

assert any(x in gpu[0] for x in ['P100', 'V100']), f'Unwanted or missing GPU: {gpu[0]}'

Jokes aside, I’m sure you’ve been burned by sitting through a lengthy pip install, mounting GDrive, and downloading and preparing your data, only to find out three-quarters of the way through your notebook that you forgot to set your runtime to ‘GPU’. Put this assertion in the first cell of your notebook; you can thank me later.
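
The same check can be written in plain Python so that it also runs outside a notebook. This is a minimal sketch rather than a definitive implementation: the helper names list_gpus and has_wanted_gpu are made up for illustration, and the wanted models are simply the two from the assertion above.

```python
import subprocess

def list_gpus():
    """Return the lines printed by `nvidia-smi -L`, or [] if no GPU/driver is present."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
        ).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return [line for line in out.splitlines() if line.strip()]

def has_wanted_gpu(gpu_lines, wanted=("P100", "V100")):
    """True if any listed GPU name contains one of the wanted model strings."""
    return any(model in line for line in gpu_lines for model in wanted)

gpus = list_gpus()
print(gpus or "No GPU detected")

# In the first cell of a notebook, fail fast instead of just printing:
# assert has_wanted_gpu(gpus), f"Unwanted or missing GPU: {gpus}"
```

Keeping the matching logic in its own function makes it easy to change the list of acceptable GPUs in one place.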

2. No More Authenticating “drive.mount()”

Not all Python notebooks are the same: Colab treats Jupyter Notebooks as second-class citizens. When you upload your Jupyter Notebook to GDrive, you’ll see that it appears as a blue/folder icon. When you create a new Colab notebook from scratch in GDrive (+New Button ➤ More ➤ Google Colaboratory), it’ll appear as an orange icon with the Colab logo.

This ‘native’ Colab notebook has special powers — namely, Google can automatically mount your GDrive on the Colab VM for you as soon as you open the notebook. If you open a Jupyter notebook and click on the Files icon (left sidebar), and then the Mount Drive icon (pop-out panel top-row), Colab will insert the following new cell into your Jupyter notebook:

from google.colab import drive

drive.mount('/content/drive')

And when you execute that cell, it’ll pop up a new browser tab and you have to go through that annoying rigmarole: i. Select your Account, ii. Allow, iii. Copy-and-Paste, iv. Switch and Close Tabs.

If you have a Jupyter notebook that you frequently open and it needs GDrive access, invest thirty seconds and save yourself that constant hassle. Simply create a new (native) Colab notebook (as described above), then open your existing Jupyter notebook (with Colab) in another browser tab. Click: Edit ➤ Clear all outputs. Then, making sure you’re in Command mode, press <SHIFT> + <CMD|CTRL> + A, then <CMD|CTRL> + C. Go to your new Colab notebook and press <CMD|CTRL> + V. Mount your GDrive using the Mount Drive icon method and delete the old Jupyter notebook to avoid confusion.

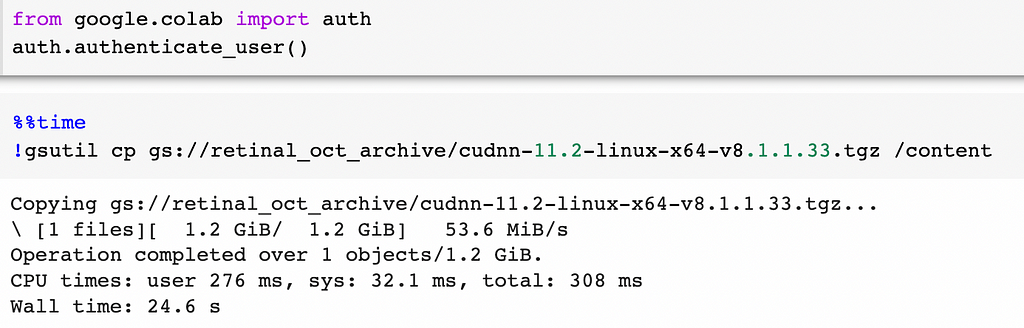

3. Fastest way to Copy your Data to Colab

One way is to copy it from Google Cloud Storage. You do have to sign up for a Google Cloud Platform account, but Google offers a Free Tier, which gives you 5GB of storage, as long as you select US-WEST1, US-CENTRAL1, or US-EAST1 as your region.

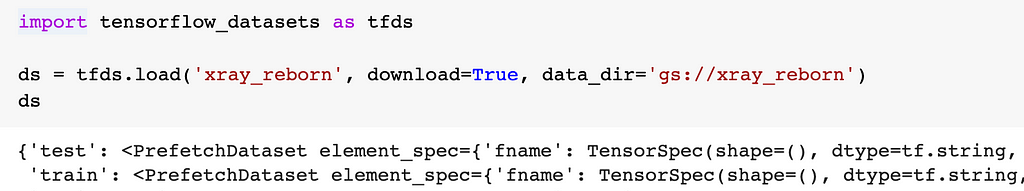

Besides security, another benefit of using Google Storage buckets is that if you’re using TensorFlow Datasets for your own data (if you aren’t, you really should; I’ll be writing an article about why, so follow me to be notified), you can bypass the copying and load your datasets directly using tfds.load():

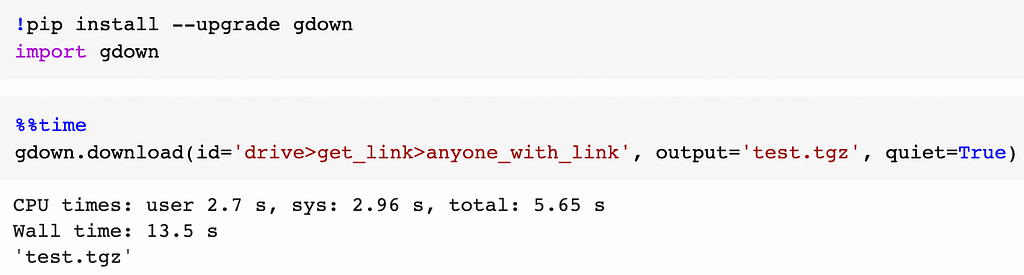

Another way is to use the wonderful gdown utility. Note that as of this writing (Mar 2022), even though gdown is pre-installed on Colab instances, you have to upgrade the package for it to work. You’ll need to have the file ID, which you can get by right-clicking on the file in Drive ➤ Get link ➤ Anyone with link, and then plucking out the ID (the part between /d/ and /view) from the provided URL:

https://drive.google.com/file/d/1sk…IzO/view?usp=sharing

NOTE: Since anyone with the link can access your file with this file-sharing permission, use this method only for your personal projects!
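
Plucking the ID out by hand is error-prone, so here is a small sketch that does it with a regular expression. The helper name drive_file_id and the placeholder URL are made up for illustration. The commented-out download call assumes a recent gdown release that accepts the id keyword, so check the signature of the version you have installed.

```python
import re

def drive_file_id(share_url):
    """Extract the file ID from a Drive share URL of the form .../file/d/<ID>/view..."""
    match = re.search(r"/file/d/([^/?#]+)", share_url)
    if match is None:
        raise ValueError(f"No file ID found in: {share_url}")
    return match.group(1)

# Placeholder URL for illustration only; substitute your own share link.
url = "https://drive.google.com/file/d/FILE_ID_PLACEHOLDER/view?usp=sharing"
file_id = drive_file_id(url)
print(file_id)

# In Colab, after upgrading gdown (e.g. `!pip -q install --upgrade gdown`),
# the download itself would look something like this (assumed API, see above):
#   import gdown
#   gdown.download(id=file_id, output="my_data.zip")
```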

4. Bypass “pip install …” on Runtime (Kernel) Restarts

If you’re like me and are always restarting and re-running the Python kernel (usually because you’ve got non-idempotent code like dataset = dataset.map().batch()), save precious seconds and keep your notebooks readable and concise by not invoking pip install every time you restart the runtime. Create a dummy file after the first install and test for its presence:

![ ! -f "pip_installed" ] && pip -q install tensorflow-datasets==4.4.0 tensorflow-addons && touch pip_installed

In fact, use this same technique to avoid unnecessary copying and downloading of those large data files whenever you rerun your notebook:

![ ! -d "my_data" ] && unzip /content/drive/MyDrive/my_data.zip -d my_data
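
If you prefer to keep this logic in Python, the same marker-file idea can be sketched as a tiny helper. The name run_once is hypothetical, and the demo uses a throwaway directory; in Colab the marker would simply live in the working directory, as in the shell one-liners above.

```python
import tempfile
from pathlib import Path

def run_once(marker, action):
    """Run `action()` only if the `marker` file is absent, then create the marker.

    Returns True if the action ran, False if it was skipped.
    """
    marker = Path(marker)
    if marker.exists():
        return False
    action()        # if this raises, no marker is written and the next run retries
    marker.touch()
    return True

# Demo in a throwaway directory, with a list append standing in for the slow step.
with tempfile.TemporaryDirectory() as tmp:
    marker = Path(tmp) / "pip_installed"
    calls = []
    first = run_once(marker, lambda: calls.append("install"))
    second = run_once(marker, lambda: calls.append("install"))
    print(first, second, calls)  # True False ['install']
```

As with the shell version, the marker is only created after the action succeeds, so a failed install is retried on the next run.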

5. Import your own Python Modules/Packages

If you find yourself constantly using a helper.py, or your own private Python package, e.g. to display a grid of images or chart your training losses/metrics, put them all on your GDrive in a folder called /packages and then:

import sys

sys.path.append('/content/drive/MyDrive/packages/')

from helper import *
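
Because notebook cells get re-run a lot, it can help to guard the sys.path change so the folder is only added once, and to import names explicitly so it stays clear where each helper comes from. The sketch below uses a temporary folder and a made-up module, my_colab_helper, as stand-ins for your GDrive packages folder and helper.py.

```python
import importlib
import sys
import tempfile
from pathlib import Path

def add_to_path(directory):
    """Append `directory` to sys.path unless it is already there."""
    directory = str(directory)
    if directory not in sys.path:
        sys.path.append(directory)

# Throwaway folder standing in for /content/drive/MyDrive/packages/.
pkg_dir = Path(tempfile.mkdtemp())
(pkg_dir / "my_colab_helper.py").write_text("def double(x):\n    return 2 * x\n")

add_to_path(pkg_dir)
add_to_path(pkg_dir)                 # re-running the cell does not add a duplicate
importlib.invalidate_caches()        # the module file was created after startup
from my_colab_helper import double   # explicit import instead of `import *`

print(double(21))  # 42
```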

6. Copy files To Google Storage Bucket

If you’re training on Google’s TPUs, your data has to be stored in a Google Cloud Storage Bucket. You can use the pre-installed gsutil utility to transfer files from the Colab VM’s local drive to your storage bucket if you need to run some pre-processing on data prior to kicking off each TPU training run.

!gsutil -m cp -r /root/tensorflow_datasets/my_ds/ gs://my-bucket/

7. Ensure all Files have been Completely Copied to GDrive

As I’m sure you’re well aware, Google can and will terminate your session due to inactivity. There are good reasons not to store (huge) model checkpoints on your mounted GDrive during model training: for example, to avoid exceeding Colab I/O limits, or because you’re running low on your GDrive storage quota and need the ample local disk storage on your Colab instance. If you’re paranoid, call drive.flush_and_unmount() at the very end of your notebook to ensure that your final model and data are completely transferred to GDrive:

from google.colab import drive

model.fit(...) # I'm going to take a nap now, <yawn>

model.save('/content/drive/MyDrive/...')

drive.flush_and_unmount()

Note that when a copy or write to /content/drive/MyDrive/ returns, the files are not necessarily on GDrive yet, so it is not safe to terminate your Colab instance immediately: the transfer of data between the VM and Google’s infrastructure happens asynchronously. Flushing helps ensure it really is safe to disconnect.
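
If you checkpoint to the local disk and copy only the final artifacts to GDrive, a checksum after the copy is a cheap extra sanity check. This is a sketch under assumptions: copy_and_verify and sha256_of are made-up helper names, and the demo copies between temporary folders. The checksum only confirms what the VM sees through the mounted folder, so it complements drive.flush_and_unmount() and does not replace it.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large checkpoints do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copy_and_verify(src, dst_dir):
    """Copy `src` into `dst_dir` and confirm the copy's hash matches the source."""
    dst_dir = Path(dst_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    dst = Path(shutil.copy2(src, dst_dir))
    if sha256_of(src) != sha256_of(dst):
        raise IOError(f"Checksum mismatch after copying {src} to {dst}")
    return dst

# Demo: a temporary folder stands in for /content/drive/MyDrive/...
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "model.bin"
    src.write_bytes(b"weights" * 1000)
    copied = copy_and_verify(src, Path(tmp) / "drive_standin")
    copied_ok = copied.exists() and copied.name == "model.bin"
    print(copied_ok)  # True

# In Colab, follow the copy with drive.flush_and_unmount() as described above.
```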

8. Quickly open your local Jupyter Notebook

There’s no need to copy your .ipynb to GDrive and then double-click on it. Simply go to https://colab.research.google.com/ and then click on the Upload tab. Your uploaded notebook will reside on GDrive://Colab Notebooks/ .

9. Use Shell Commands with Python Variables

OUT_DIR = './models_ckpt/'

...

model.save(OUT_DIR + 'model1')

...

model.save(OUT_DIR + 'model2')

...

!rm -rf {OUT_DIR}*

Make use of the powerful Linux commands available to you … why bother with importing the zipfile and requests libraries and all that attendant code? In fact, get the best of both worlds by piping the output of a Linux command to a Python variable:

!wget -O data.zip https://github.com/ixig/archive/data_042020.zip

!unzip -q data.zip -d ./tmp

# 'wc': handy linux word and line count utility

result = !wc ./tmp/tweets.txt

lines, words, *_ = result[0].split()
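
One thing to watch for: the values captured this way are strings, so convert them before doing arithmetic. A minimal sketch, with a made-up helper name (parse_wc) and a made-up sample line in the format that wc prints:

```python
def parse_wc(line):
    """Split one line of `wc <file>` output into (lines, words, bytes, filename)."""
    lines, words, nbytes, name = line.split(maxsplit=3)
    return int(lines), int(words), int(nbytes), name

# Sample line for illustration only; the counts are invented.
sample = "  120   987  6543 ./tmp/tweets.txt"
n_lines, n_words, n_bytes, name = parse_wc(sample)
print(n_lines + 1)  # 121, which works because the counts are now ints
```

In a notebook you would pass result[0] from the cell above instead of the sample string.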

10. Am I running in Colab or Jupyter?

If you switch between running your notebooks on your local machine and training on Colab, you need a way to tell where that notebook is running, e.g. don’t pip install when running on your local machine. You can do this using:

COLAB = 'google.colab' in str(get_ipython())

if COLAB:

    !pip install ...
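
Note that get_ipython() only exists inside IPython, so the check above raises a NameError in a plain Python script. A variation that works in both is sketched below. It rests on the assumption that Colab kernels load the google.colab package at startup, and in_colab is a made-up helper name.

```python
import sys

def in_colab(modules=None):
    """Best-effort check: assumes Colab kernels have `google.colab` loaded at startup."""
    modules = sys.modules if modules is None else modules
    return "google.colab" in modules

COLAB = in_colab()
print("Running in Colab" if COLAB else "Running elsewhere")
```

The optional modules argument is only there so the function can be exercised without actually being on Colab.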

11. Message Me, Baby!

No need to sit around waiting for your training to complete: have Colab send you a notification on your phone! First, you’ll need to follow the instructions to allow CallMeBot to message you on your Signal/FB/WhatsApp/Telegram app. The signup takes all of one minute and is simple, quick, and safe. Then, you can:

import requests

from urllib.parse import quote_plus

number = '...'

api_key = '...'

message = quote_plus('Done Baby!')

requests.get(f'https://api.callmebot.com/signal/send.php?phone={number}&apikey={api_key}&text={message}')
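
A slightly more defensive version of the same call is sketched below: it URL-encodes every parameter (a phone number with a leading + needs this too), sets a timeout, and swallows network errors so that a failed notification cannot crash the end of a long training run. The function names and the placeholder credentials are made up for illustration, and it assumes the service accepts standard percent-encoding.

```python
from urllib.parse import urlencode

CALLMEBOT_SIGNAL_URL = "https://api.callmebot.com/signal/send.php"

def callmebot_url(number, api_key, text):
    """Build the request URL with every parameter properly encoded."""
    query = urlencode({"phone": number, "apikey": api_key, "text": text})
    return f"{CALLMEBOT_SIGNAL_URL}?{query}"

def notify(number, api_key, text, timeout=10):
    """Send the message; return True on HTTP success, False on any network error."""
    import requests  # imported lazily so building URLs works without requests installed
    try:
        response = requests.get(callmebot_url(number, api_key, text), timeout=timeout)
        return response.ok
    except requests.RequestException:
        return False

# Placeholder credentials, for illustration only.
print(callmebot_url("+15550100", "123456", "Done Baby!"))
# https://api.callmebot.com/signal/send.php?phone=%2B15550100&apikey=123456&text=Done+Baby%21
```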

12. Use IPython Cell Magics

Okay, this one is not specific to Colab Notebooks as it applies to Jupyter Notebooks as well, but here are the most useful ones to know about.

%%capture : Silence the copious, annoying outputs from executing statements in a cell. Useful for those ‘pip install …’, ‘tfds.load(…)’, and innumerable TensorFlow deprecation warnings.

%%writefile <filename> : Writes the text contained in the rest of the cell into a file. Useful for creating a YAML, JSON, or simple text file on-the-fly for testing.

%tensorflow_version 1.x : If you’re still stuck in the past (not judging … well, maybe just a little!), don’t ‘pip install tensorflow==1.0’, which will make a mess of dependencies. Use this line magic instead, before importing TensorFlow.

13. Dock the Terminal

If you’re a Pro/Pro+ user, you have access to the VM via the Terminal. You can do some super-powerful things like run your own jupyter server on it (so you can have back the familiar Jupyter Notebook UI if that’s your preference). Also for wrangling files, the terminal is invaluable.

When you click on the Terminal icon on the left sidebar, the Terminal panel pops out on the right of the page, but that’s really difficult to use given how narrow it is and constantly having to close it so you can see your code! Solution: Dock the Terminal as a separate Tab. After opening the Terminal, click on the Ellipsis (…) ➤ Change page layout ➤ Single tabbed view.

14. Change those Shortcuts

Who can remember the obscure shortcuts that Google assigns?! Go to Tools ➤ Keyboard shortcuts, and make sure you assign the following shortcuts to your own memorable two-key combinations:

- Restart runtime and run all cells in the notebook

- Restart runtime

- Run cells before the current

- Run selected cell and all cells after

The nice thing is that Colab remembers your changes (be sure to click on the Save button at the bottom of the pop-out) so you only need to do this once.

15. GitHub Integration

You can launch any notebook hosted on GitHub directly in Colab using the following URL:

https://colab.research.google.com/github/<org>/<repo>/…/<xx.ipynb>

Or you can just bookmark your favorite org/repo with the following URL and you’ll be prompted by a file browser whenever you click on the bookmark:

https://colab.research.google.com/github/<org>/<repo>
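
In practice this means swapping the github.com prefix of a notebook’s (or repo’s) URL for the Colab one. A small sketch of that rewrite follows; colab_url is a made-up helper name, and the org, repo, and notebook names in the example are hypothetical.

```python
GITHUB_PREFIX = "https://github.com/"
COLAB_PREFIX = "https://colab.research.google.com/github/"

def colab_url(github_url):
    """Rewrite a github.com URL into the corresponding Colab launch URL."""
    if not github_url.startswith(GITHUB_PREFIX):
        raise ValueError(f"Not a github.com URL: {github_url}")
    return COLAB_PREFIX + github_url[len(GITHUB_PREFIX):]

# Hypothetical org/repo/notebook, for illustration only.
print(colab_url("https://github.com/my-org/my-repo/blob/main/demo.ipynb"))
# https://colab.research.google.com/github/my-org/my-repo/blob/main/demo.ipynb
```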

Use Google Colab Like A Pro was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.
