YOLO V5 — Explained and Demystified

Last Updated on January 6, 2023 by Editorial Team

Last Updated on July 1, 2020 by Editorial Team

Author(s): Mihir Rajput

Computer Vision

YOLO V5 — Model Architecture and Technical Details Explanation

After my previous article on YOLOv5, I received multiple messages and queries about how things differ in YOLOv5, along with other related technical questions.

Therefore, I decided to write another article to explain some technical details used in YOLOv5.

YOLO v5 comes in four versions; I will cover the ‘s’ (small) version. Once you have worked through it, you will find that the other versions differ mainly in the model layers/architecture and the number of parameters.

In this article, I will cover the following most important details and aspects of the YOLOv5 implementation.

- YOLO v5 Model Architecture

- Activation Function

- Optimization Function

- Cost Function or Loss Function

- Weights, Biases, Parameters, Gradients, and Final Model Summary

NOTE: YOLO v5 is still in the development phase and we receive updates from Ultralytics frequently, so the developers may change some aspects in the future. This article therefore applies to the initial release of YOLOv5 only. However, I will try to update it or add articles for subsequent releases as well.

Let’s move to the technical discussion.

YOLO v5 Model Architecture

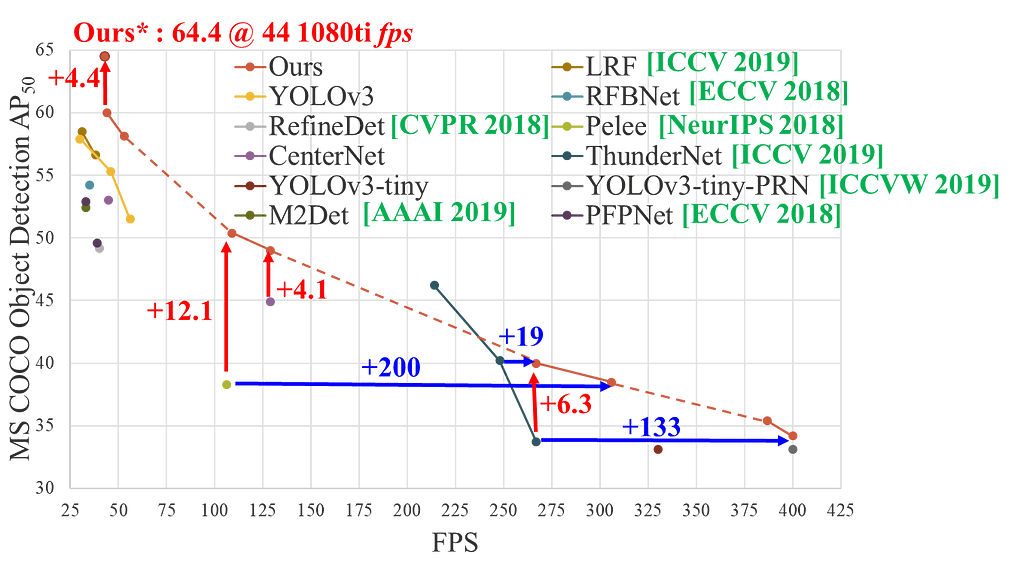

As YOLO v5 is a single-stage object detector, it has three important parts like any other single-stage object detector.

- Model Backbone

- Model Neck

- Model Head

The Model Backbone is mainly used to extract important features from the given input image. In YOLO v5, Cross Stage Partial Networks (CSP) are used as the backbone to extract rich, informative features from an input image.

CSPNet has shown significant improvement in processing time with deeper networks. For more information about CSPNet, visit the GitHub repo [2].

The Model Neck is mainly used to generate feature pyramids. Feature pyramids help models generalize well across object scales: they help identify the same object at different sizes and scales.

Feature pyramids are very useful and help models to perform well on unseen data. There are other models that use different types of feature pyramid techniques like FPN, BiFPN, PANet, etc.

In YOLO v5, PANet is used as the neck to get feature pyramids. For more information on feature pyramids, refer to the following link.

Understanding Feature Pyramid Networks for object detection (FPN)
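To make the idea of a PANet-style neck more concrete, here is a minimal shape-only sketch. It is not the Ultralytics implementation: the function names, the three input feature-map shapes, and the choice to fuse by channel concatenation are assumptions made for illustration, and the channel-reducing convolutions a real neck applies between steps are omitted. It only traces how a top-down pass followed by a bottom-up pass keeps one output per scale.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def upsample(x: Shape) -> Shape:
    """Double the spatial size, as a 2x upsampling layer would."""
    c, h, w = x
    return (c, h * 2, w * 2)


def downsample(x: Shape) -> Shape:
    """Halve the spatial size, as a stride-2 convolution would."""
    c, h, w = x
    return (c, h // 2, w // 2)


def concat(a: Shape, b: Shape) -> Shape:
    """Concatenate two feature maps along the channel axis."""
    assert a[1:] == b[1:], "spatial sizes must match before concatenation"
    return (a[0] + b[0], a[1], a[2])


def pan_neck_shapes(p3: Shape, p4: Shape, p5: Shape) -> List[Shape]:
    """Trace feature-map shapes through a simplified PANet-style neck."""
    # Top-down path: coarse, semantically strong features flow to finer maps.
    t5 = p5
    t4 = concat(upsample(t5), p4)
    t3 = concat(upsample(t4), p3)
    # Bottom-up path: fine localization detail flows back to coarser maps.
    n3 = t3
    n4 = concat(downsample(n3), t4)
    n5 = concat(downsample(n4), t5)
    return [n3, n4, n5]


# Hypothetical backbone outputs for a 640x640 input at strides 8, 16, 32.
outputs = pan_neck_shapes((128, 80, 80), (256, 40, 40), (512, 20, 20))
for shape in outputs:
    print(shape)
```

The spatial size of each output matches its input scale, which is what lets the head detect objects at three different resolutions. The channel counts grow here only because the sketch skips the convolutions that would normally shrink them again.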

The Model Head is mainly used to perform the final detection part. It applies anchor boxes to the features and generates final output vectors with class probabilities, objectness scores, and bounding boxes.

In YOLO v5, the model head is the same as in the previous YOLO V3 and V4 versions.
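As a rough illustration of what those output vectors look like, the sketch below computes the output shapes of a YOLOv3-style head. The specific numbers (a 640x640 input, 80 classes, 3 anchors per scale, and strides of 8, 16, and 32) are assumptions for the example and are not stated in this article; the function name is hypothetical.

```python
def head_output_shapes(img_size=640, num_classes=80,
                       anchors_per_scale=3, strides=(8, 16, 32)):
    """Return one (anchors, grid_h, grid_w, values) shape per detection scale."""
    # Each anchor predicts 4 box values, 1 objectness score, and the class scores.
    values_per_anchor = 4 + 1 + num_classes
    shapes = []
    for stride in strides:
        grid = img_size // stride  # number of grid cells along each side
        shapes.append((anchors_per_scale, grid, grid, values_per_anchor))
    return shapes


shapes = head_output_shapes()
total_predictions = sum(a * gh * gw for a, gh, gw, _ in shapes)

for shape in shapes:
    print(shape)
print("total candidate boxes:", total_predictions)
```

Under these assumptions, every candidate box is a vector of 85 numbers, and the three scales together produce 25,200 candidates that are later filtered by confidence thresholding and non-maximum suppression.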

Additionally, I am attaching the final model architecture for the small version of YOLO v5.

Activation Function

The choice of activation function is crucial in any deep neural network. Recently, many activation functions have been introduced, such as Leaky ReLU, Mish, and Swish.

The YOLO v5 authors decided to go with the Leaky ReLU and Sigmoid activation functions.

In YOLO v5, the Leaky ReLU activation function is used in the middle/hidden layers and the sigmoid activation function is used in the final detection layer. You can verify it here.
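For readers who want to see the two functions side by side, here is a small scalar sketch in plain Python. The negative slope of 0.1 is an assumption chosen for illustration; check the repository for the value actually used in the release you are working with.

```python
import math


def leaky_relu(x, negative_slope=0.1):
    """Pass positive inputs through; scale negative inputs by a small slope."""
    return x if x >= 0 else negative_slope * x


def sigmoid(x):
    """Squash any real number into the range (0, 1), in a numerically stable way."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # avoids overflow for large negative x
    return z / (1.0 + z)


for value in (-2.0, 0.0, 3.0):
    print(value, leaky_relu(value), sigmoid(value))
```

Leaky ReLU keeps a small gradient for negative inputs, which suits hidden layers, while the sigmoid maps raw scores to values between 0 and 1, which is why it fits objectness and class outputs in the detection layer.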

Optimization Function

YOLO v5 offers two options for the optimization function: SGD and Adam.

In YOLO v5, the default optimization function for training is SGD.

However, you can change it to Adam by using the “--adam” command-line argument.
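The sketch below shows how a boolean command-line flag can switch between the two optimizers, and what a single update step of each looks like on one scalar weight. It is an illustration only, not the training script from the repository: the function names are hypothetical, and the learning rates, momentum, and beta values are generic illustrative choices rather than YOLOv5's hyper-parameters.

```python
import argparse
import math


def parse_args(argv=None):
    """Parse a single boolean flag that selects Adam instead of SGD."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--adam", action="store_true",
                        help="use Adam instead of the default SGD")
    return parser.parse_args(argv)


def sgd_momentum_step(w, grad, velocity, lr=0.01, momentum=0.9):
    """One SGD-with-momentum update on a scalar weight."""
    velocity = momentum * velocity + grad
    return w - lr * velocity, velocity


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update on a scalar weight; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


args = parse_args(["--adam"])  # pass [] to fall back to SGD
w, grad = 1.0, 0.5
if args.adam:
    w_new, _, _ = adam_step(w, grad, m=0.0, v=0.0, t=1)
else:
    w_new, _ = sgd_momentum_step(w, grad, velocity=0.0)
print("optimizer:", "Adam" if args.adam else "SGD", "updated weight:", w_new)
```

SGD scales its step directly with the gradient, whereas Adam normalizes the step by a running estimate of the gradient's magnitude, so its first step is close to the learning rate regardless of how large the gradient is.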

Cost Function or Loss Function

In the YOLO family, a compound loss is calculated based on the objectness score, class probability score, and bounding box regression score.

Ultralytics uses the Binary Cross-Entropy with Logits Loss function from PyTorch to calculate the loss for class probability and objectness score.

We also have the option of using the Focal Loss function to calculate the loss. You can train with Focal Loss by setting the fl_gamma hyper-parameter.
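To show how the two losses relate, here is a scalar sketch in plain Python of binary cross-entropy on a raw logit and a focal variant built on top of it. This is the standard textbook formulation, not code copied from the repository; the function names and the alpha and gamma defaults are assumptions for illustration, with gamma playing the role that fl_gamma plays in the text above.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bce_with_logits(logit, target):
    """Binary cross-entropy computed directly from a raw logit (stable form)."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def focal_bce(logit, target, gamma=1.5, alpha=0.25):
    """Focal loss: BCE down-weighted for examples the model already gets right."""
    bce = bce_with_logits(logit, target)
    p = sigmoid(logit)
    p_t = target * p + (1 - target) * (1 - p)               # probability of the true label
    alpha_t = target * alpha + (1 - target) * (1 - alpha)   # class-balance weight
    return alpha_t * (1.0 - p_t) ** gamma * bce


easy = (4.0, 1.0)    # confident and correct prediction
hard = (-2.0, 1.0)   # confident and wrong prediction
for logit, target in (easy, hard):
    print(logit, target, bce_with_logits(logit, target), focal_bce(logit, target))
```

With gamma set to 0 the modulating factor disappears and the focal loss reduces to a weighted BCE. Larger gamma values shrink the contribution of easy examples much more than that of hard ones, which is the point of using it on the heavily imbalanced objectness and class targets of a detector.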

Weights, Biases, Parameters, Gradients, and Final Model Summary

To look closely at weights, biases, shapes, and parameters at each layer in the YOLOv5-small model, refer to the following information.

Additionally, you can also refer to the following brief summary of the YOLO v5 — small model.

Model Summary: 191 layers, 7.46816e+06 parameters, 7.46816e+06 gradients
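To relate the summary line to individual layers, the sketch below shows how parameter counts are typically computed for a convolution followed by batch normalization, and converts the reported scientific-notation figure to a plain integer. The layer sizes are hypothetical and are not taken from the YOLOv5 model definition; the function names are also made up for this example.

```python
def conv2d_params(c_in, c_out, kernel_size, bias=True):
    """Learnable parameters in a 2D convolution with a square kernel."""
    weights = c_in * c_out * kernel_size * kernel_size
    return weights + (c_out if bias else 0)


def batchnorm2d_params(channels):
    """Learnable parameters in batch normalization: one scale and one shift per channel."""
    return 2 * channels


# Hypothetical block: 3x3 convolution from 3 to 32 channels, no bias, then batch norm.
block_params = conv2d_params(3, 32, 3, bias=False) + batchnorm2d_params(32)
print("parameters in the example block:", block_params)

# The figure reported in the model summary, written as a plain integer.
reported_params = int(float("7.46816e+06"))
print("reported parameters:", reported_params)
```

The summary lists the same number for parameters and gradients, which is what you would expect when every parameter in the model is trainable.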

Hopefully, this helps you understand YOLO v5 better. In closing, I would like to thank you for reading.

Feel free to contact me with any doubts/queries/suggestions.

References:

[1] https://github.com/ultralytics/yolov5

[2] https://github.com/WongKinYiu/CrossStagePartialNetworks

Contributions:

Thanks and Cheers!

YOLO V5 — Explained and Demystified was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI
