ECCV 2020 Best Paper Award | A New Architecture For Optical Flow

Last Updated on January 6, 2023 by Editorial Team

Author(s): Louis Bouchard

Computer Vision, Research

ECCV 2020 Best Paper Award Goes to a Princeton Team.

They developed a new end-to-end trainable model for optical flow.

Their method beats the accuracy of state-of-the-art architectures across multiple datasets and is way more efficient.

They even made the code available for everyone on their GitHub!

Let’s see how they achieved that.

Paper’s introduction

The ECCV 2020 conference happened last week.

A ton of new research papers in the field of computer vision were released for this conference.

Here, I will be covering the paper that received the “Best Paper Award”, written by a team from Princeton.

In short, they developed a new end-to-end trainable model for optical flow called “RAFT: Recurrent All-Pairs Field Transforms for Optical Flow.”

Their method achieves state-of-the-art accuracy across multiple datasets, including the Sintel and KITTI benchmarks, and is more efficient in inference time, training speed, and parameter count.

What is optical flow?

First, I will quickly explain what optical flow is.

It is defined as the pattern of apparent motion of objects in a video; in other words, how objects move between consecutive frames of a sequence, usually represented as a 2D displacement vector for every pixel.

This apparent motion comes from the relative motion between the camera and the scene.

Estimating it relies on the temporal structure found in a video in addition to the spatial structure found in each frame.
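To make the definition concrete, here is a minimal NumPy sketch (not from the paper) in which a dense flow field is stored as an H×W×2 array of per-pixel displacements (u, v). It shifts a random frame by a known amount with `np.roll`, builds the matching constant flow field, and checks that warping the second frame back with that flow recovers the first frame. This relies on the brightness-constancy assumption (a pixel keeps its intensity as it moves); the helper `warp_backward` is a hypothetical name and uses nearest-neighbor sampling for simplicity.

```python
import numpy as np

def warp_backward(frame2, flow):
    """Sample frame2 at (x + u, y + v) for every pixel (x, y) of frame 1.

    flow has shape (H, W, 2): flow[..., 0] is the horizontal displacement u,
    flow[..., 1] the vertical displacement v. Nearest-neighbor sampling,
    with coordinates clamped to the image border.
    """
    h, w = frame2.shape
    ys, xs = np.mgrid[0:h, 0:w]
    x2 = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    y2 = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return frame2[y2, x2]

rng = np.random.default_rng(0)
frame1 = rng.random((32, 48))

# Move the whole scene 3 px right and 2 px down: frame2[y + 2, x + 3] == frame1[y, x]
u, v = 3, 2
frame2 = np.roll(frame1, shift=(v, u), axis=(0, 1))

# The true optical flow is the same (u, v) vector at every pixel
flow = np.zeros((32, 48, 2))
flow[..., 0] = u
flow[..., 1] = v

warped = warp_backward(frame2, flow)
# Skip the bottom/right border, where x + u or y + v leaves the image and is clamped
interior = (slice(0, 32 - v), slice(0, 48 - u))
print(np.allclose(warped[interior], frame1[interior]))  # True
```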

You can easily compute the optical flow of a video with OpenCV’s functions. Here is OpenCV’s dense optical flow example, which uses Gunnar Farneback’s algorithm:

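```python
import cv2
import numpy as np

cap = cv2.VideoCapture("vtest.avi")  # pass 0 instead of a file name for a webcam feed
ret, frame1 = cap.read()
if not ret:
    raise SystemExit("Could not read the first frame")
prvs = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)

# HSV image used to visualize the flow: hue = direction, value = magnitude
hsv = np.zeros_like(frame1)
hsv[..., 1] = 255  # full saturation

while True:
    ret, frame2 = cap.read()
    if not ret:  # end of the video
        break
    nxt = cv2.cvtColor(frame2, cv2.COLOR_BGR2GRAY)

    # Dense flow: pyr_scale=0.5, levels=3, winsize=15,
    # iterations=3, poly_n=5, poly_sigma=1.2, flags=0
    flow = cv2.calcOpticalFlowFarneback(prvs, nxt, None, 0.5, 3, 15, 3, 5, 1.2, 0)

    # Convert the (dx, dy) vectors to magnitude and angle (in radians)
    mag, ang = cv2.cartToPolar(flow[..., 0], flow[..., 1])
    hsv[..., 0] = ang * 180 / np.pi / 2  # map [0, 2*pi] to OpenCV's 8-bit hue range (0 to 180)
    hsv[..., 2] = cv2.normalize(mag, None, 0, 255, cv2.NORM_MINMAX)
    rgb = cv2.cvtColor(hsv, cv2.COLOR_HSV2BGR)

    cv2.imshow("frame2", rgb)
    k = cv2.waitKey(30) & 0xFF
    if k == 27:  # Esc quits
        break
    elif k == ord("s"):  # 's' saves the current frame and its flow image
        cv2.imwrite("opticalfb.png", frame2)
        cv2.imwrite("opticalhsv.png", rgb)
    prvs = nxt

cap.release()
cv2.destroyAllWindows()
```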

It only takes a few lines of code to generate it, even on a live feed. Here are the results you get on a typical video frame using this short snippet:

It is super cool and really useful for many applications, such as traffic analysis, vehicle tracking, object detection and tracking, robot navigation, and much more. The only problem is that computing accurate dense optical flow is quite slow and needs a lot of computing resources.

This new paper helps with both of these problems while producing even more accurate results!

What is this paper? What did the researchers do exactly?

Now, let’s dive a bit deeper into what this paper is all about and how it improves on current state-of-the-art approaches.

They improved on state-of-the-art methods in four ways.

First, RAFT can be trained directly on optical flow, instead of requiring the network to be trained with an embedding loss between pixels, which makes it much more efficient.
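Training directly on optical flow means the loss compares the predicted flow with the ground-truth flow. Below is a minimal NumPy sketch of the kind of loss the paper uses: an L1 distance to the ground truth for every intermediate prediction of the iterative refinement, with exponentially increasing weights toward the final one (the paper uses γ = 0.8). The function name `sequence_loss` and the averaging over pixels are assumptions made for illustration.

```python
import numpy as np

def sequence_loss(flow_preds, flow_gt, gamma=0.8):
    """Weighted L1 loss over a list of flow predictions, each of shape (H, W, 2).

    Prediction i (0-based) out of n gets weight gamma ** (n - 1 - i), so the
    last, most refined prediction counts the most.
    """
    n = len(flow_preds)
    total = 0.0
    for i, pred in enumerate(flow_preds):
        weight = gamma ** (n - 1 - i)
        total += weight * np.abs(flow_gt - pred).mean()  # mean absolute error
    return total

gt = np.zeros((4, 4, 2))
preds = [np.full((4, 4, 2), 2.0), np.full((4, 4, 2), 1.0), np.zeros((4, 4, 2))]
print(sequence_loss(preds, gt))  # 0.64 * 2 + 0.8 * 1 + 1.0 * 0, about 2.08
```

Because every iteration is supervised, early iterations are also pushed toward the right answer, while the final prediction dominates the loss.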

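Then, regarding the flow prediction, most current methods work coarse-to-fine: they first estimate the flow at a low resolution, then upsample and refine it at higher resolutions.

Instead, RAFT maintains and updates a single flow field at high resolution, which lets it recover from mistakes that a coarse level would lock in, capture small, fast-moving objects, and train in fewer iterations.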

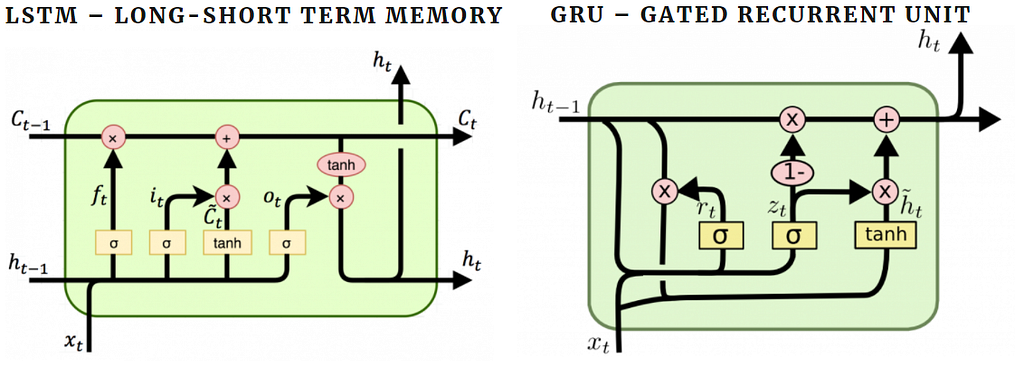

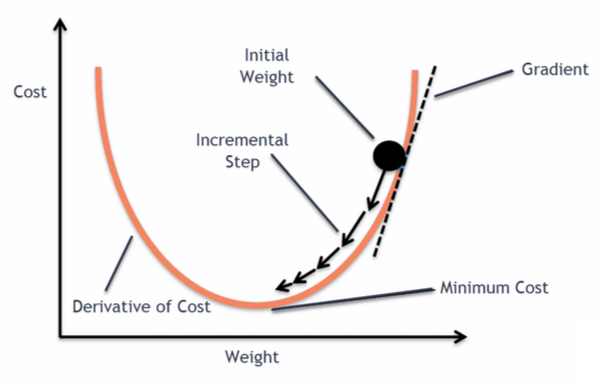

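To refine this flow field iteratively, as the best current approaches do, they use a GRU (gated recurrent unit) block, which is similar to an LSTM block. More precisely, it is a convolutional GRU, where the fully connected layers of a standard GRU are replaced by convolutions.

Because the same block is applied at every iteration, its weights are shared between iterations, and the updates converge to a fixed flow field instead of diverging, even over many iterations.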
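For readers unfamiliar with GRUs, here is a minimal NumPy sketch of a convolutional GRU cell using the standard GRU gate equations. To keep it short it uses 1×1 convolutions (a per-pixel matrix multiply over channels), whereas RAFT uses larger spatial kernels; the weights are random placeholders rather than trained values, and the names `conv1x1` and `conv_gru_step` are hypothetical. The loop at the end applies the same cell, with the same weights, at every iteration, which is what sharing weights between iterations means.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, w):
    # x: (H, W, C_in), w: (C_in, C_out) -> (H, W, C_out)
    return x @ w

def conv_gru_step(h, x, wz, wr, wh):
    """One ConvGRU update; h: (H, W, Ch) hidden state, x: (H, W, Cx) input."""
    hx = np.concatenate([h, x], axis=-1)
    z = sigmoid(conv1x1(hx, wz))  # update gate
    r = sigmoid(conv1x1(hx, wr))  # reset gate
    h_cand = np.tanh(conv1x1(np.concatenate([r * h, x], axis=-1), wh))
    return (1 - z) * h + z * h_cand  # blend old state and candidate

rng = np.random.default_rng(0)
H, W, Ch, Cx = 8, 8, 16, 4
# One set of weights, reused at every iteration (weight sharing)
wz, wr, wh = (0.1 * rng.standard_normal((Ch + Cx, Ch)) for _ in range(3))

h = np.tanh(rng.standard_normal((H, W, Ch)))  # initial hidden state in (-1, 1)
for _ in range(12):
    x = rng.standard_normal((H, W, Cx))  # stand-in for correlation/flow/context features
    h = conv_gru_step(h, x, wz, wr, wh)

print(h.shape)  # (8, 8, 16)
```

Since the new state is a gated blend of the old state and a tanh candidate, it stays bounded no matter how many iterations are run.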

The last distinction between their technique and other approaches is that, instead of explicitly computing the gradient of an optimization objective at each step, RAFT retrieves features from the correlation volumes and learns to propose the descent direction itself.
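The contrast can be written as two update rules for the flow estimate $\mathbf{f}_k$ at iteration $k$. The notation is ours, for illustration: $E$ is a hand-crafted energy, $\eta$ a step size, $\mathcal{U}$ the learned update operator (the GRU block) with hidden state $h_k$, $c$ the context features, and $L(\mathbf{f}_k)$ the features looked up from the correlation volumes at the current estimate.

```latex
\begin{aligned}
&\text{explicit gradient step:} && \mathbf{f}_{k+1} = \mathbf{f}_k - \eta\,\nabla_{\mathbf{f}} E(\mathbf{f}_k)\\
&\text{learned update:} && (h_{k+1},\, \Delta\mathbf{f}_k) = \mathcal{U}\big(h_k,\, L(\mathbf{f}_k),\, \mathbf{f}_k,\, c\big),
\quad \mathbf{f}_{k+1} = \mathbf{f}_k + \Delta\mathbf{f}_k
\end{aligned}
```

In the first rule the direction comes from differentiating $E$; in the second, the network proposes $\Delta\mathbf{f}_k$ from the correlation features, starting from a zero flow field $\mathbf{f}_0 = \mathbf{0}$.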

How did they do that?

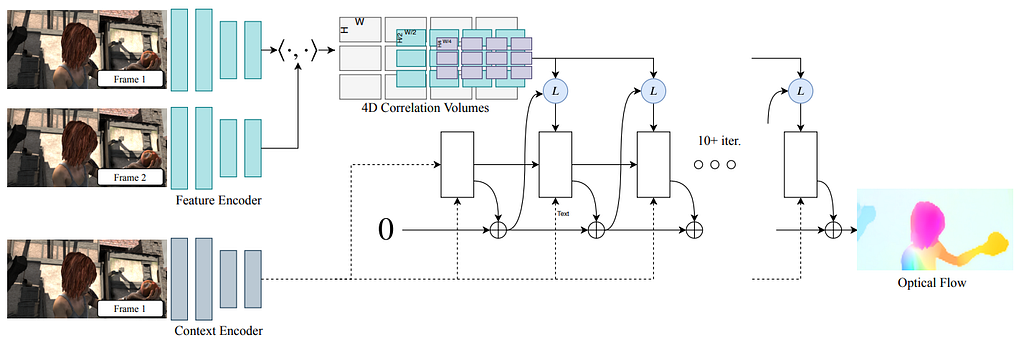

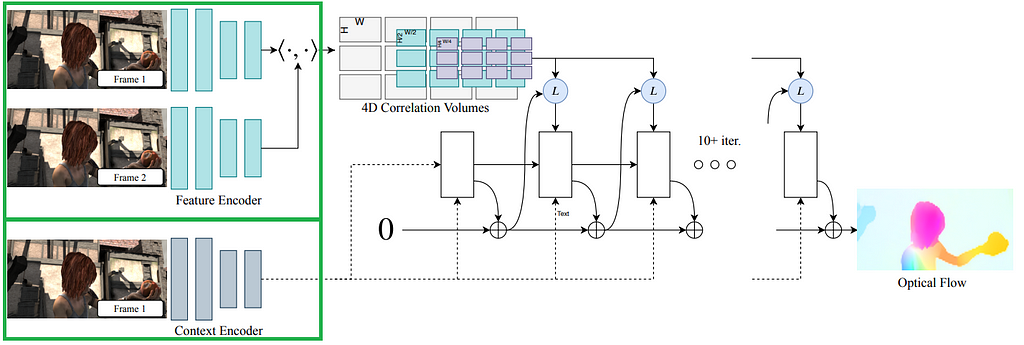

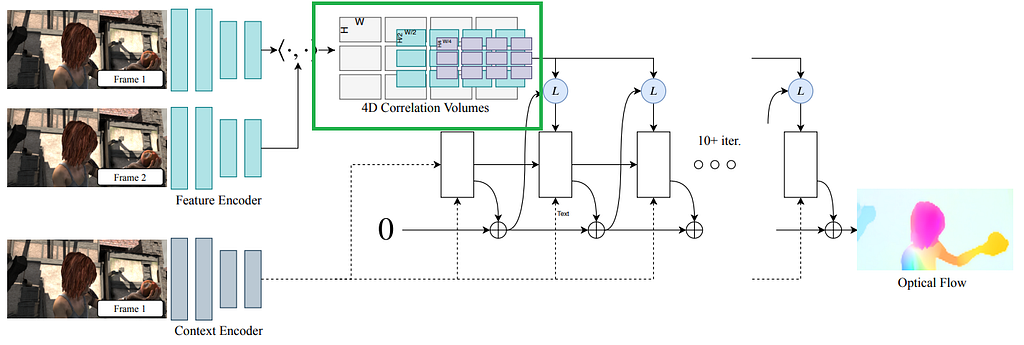

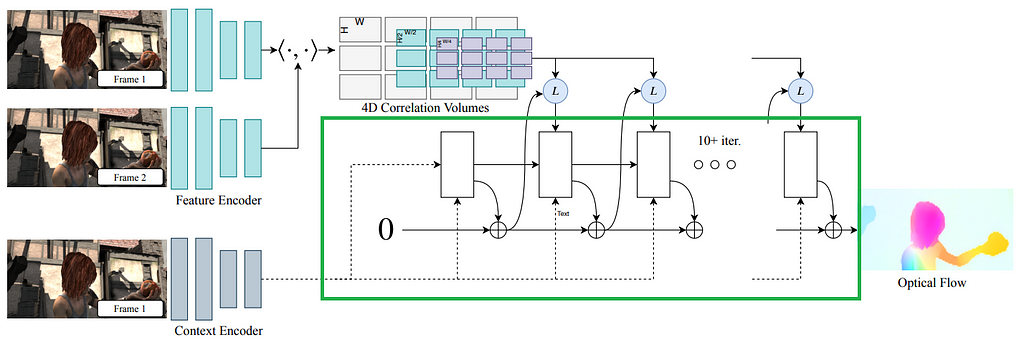

All these improvements were made using their new architecture.

It is basically composed of 3 main components.

First, a feature encoder extracts per-pixel features from both frames, and a second encoder, the context encoder, extracts features from the first frame only, in order to understand the context of the image.
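A toy NumPy sketch of the two encoders’ roles and output shapes, under loud assumptions: RAFT’s real encoders are convolutional networks built from residual blocks that output features at 1/8 of the input resolution, while here each hypothetical “encoder” is just 8×8 average pooling followed by a random per-pixel projection. The point is that the feature encoder uses one set of weights for both frames, while the context encoder has its own weights and only sees the first frame.

```python
import numpy as np

def toy_encoder(img, weights, stride=8):
    """Hypothetical stand-in for a CNN encoder: (H, W, 3) -> (H/stride, W/stride, D)."""
    h, w, c = img.shape
    # Average-pool non-overlapping stride x stride blocks
    pooled = img.reshape(h // stride, stride, w // stride, stride, c).mean(axis=(1, 3))
    return np.tanh(pooled @ weights)  # per-pixel projection to D channels

rng = np.random.default_rng(0)
H, W, D = 64, 96, 32
frame1 = rng.random((H, W, 3))
frame2 = rng.random((H, W, 3))

feat_w = rng.standard_normal((3, D))  # feature encoder weights, shared by both frames
ctx_w = rng.standard_normal((3, D))   # context encoder weights, separate

fmap1 = toy_encoder(frame1, feat_w)
fmap2 = toy_encoder(frame2, feat_w)   # same weights as fmap1
context = toy_encoder(frame1, ctx_w)  # first frame only

print(fmap1.shape, fmap2.shape, context.shape)  # (8, 12, 32) (8, 12, 32) (8, 12, 32)
```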

Then, they compute the dot product between all pairs of feature vectors (one from each frame), which gives a 4-dimensional correlation volume indexed by the width and height of both frames.
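The all-pairs correlation is the dot product between every feature vector of frame 1 and every feature vector of frame 2: $C_{ijkl} = \sum_d F^1_{ijd}\,F^2_{kld}$. Here is a minimal NumPy sketch with random feature maps standing in for the encoder outputs (the sizes are arbitrary). The paper also average-pools the last two dimensions to build a multi-scale pyramid of correlation volumes; the sketch shows one pooled level.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, D = 6, 8, 16
fmap1 = rng.standard_normal((H, W, D))  # stand-in for frame-1 features
fmap2 = rng.standard_normal((H, W, D))  # stand-in for frame-2 features

# C[i, j, k, l] = <fmap1[i, j], fmap2[k, l]>: one score per pair of pixels
corr = np.einsum("ijd,kld->ijkl", fmap1, fmap2)
print(corr.shape)  # (6, 8, 6, 8)

# Coarser level: average-pool only the frame-2 dimensions (k, l) by a factor of 2
corr_2 = corr.reshape(H, W, H // 2, 2, W // 2, 2).mean(axis=(3, 5))
print(corr_2.shape)  # (6, 8, 3, 4)
```

Pooling only the frame-2 dimensions keeps full resolution for the frame-1 pixels while letting each lookup cover a wider range of displacements.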

Finally, they use an update operator which recurrently updates the optical flow.

This is where the GRU block is.

At each iteration, it looks up values from the correlation volumes around the current flow estimate and uses them to update the flow field.
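To illustrate the lookup-and-update loop, here is a toy NumPy sketch with heavy simplifications. The scene is a smooth synthetic texture moved by a known amount. The lookup reads the correlation volume at integer offsets within a radius r of each pixel’s current match (RAFT samples bilinearly at several pyramid levels). Instead of the learned GRU, the “update” simply steps toward the best-scoring offset in the window. The names `lookup`, `refine`, and `texture` are hypothetical. Because the true motion is larger than the lookup radius, the flow only reaches it after several iterations, which is the intuition behind repeatedly updating a single flow field.

```python
import numpy as np

def lookup(corr, flow, r):
    """For each pixel, read corr in a (2r+1)x(2r+1) window around its current match.

    corr: (H, W, H, W) correlation volume; flow: (H, W, 2) integer (u, v) flow.
    Returns (H, W, 2r+1, 2r+1). Coordinates are clamped to the image border.
    """
    h, w = corr.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    offs = np.arange(-r, r + 1)
    dy, dx = np.meshgrid(offs, offs, indexing="ij")
    ty = np.clip(ys[..., None, None] + flow[..., 1, None, None] + dy, 0, h - 1)
    tx = np.clip(xs[..., None, None] + flow[..., 0, None, None] + dx, 0, w - 1)
    return corr[ys[..., None, None], xs[..., None, None], ty, tx]

def refine(corr, iters, r):
    """Toy update rule (NOT RAFT's learned GRU): step toward the best match in the window."""
    h, w = corr.shape[:2]
    flow = np.zeros((h, w, 2), dtype=int)  # start from zero motion
    offs = np.arange(-r, r + 1)
    dy, dx = np.meshgrid(offs, offs, indexing="ij")
    for _ in range(iters):
        window = lookup(corr, flow, r).reshape(h, w, -1)
        best = window.argmax(axis=-1)  # index of the best-scoring offset per pixel
        flow[..., 0] += dx.ravel()[best]
        flow[..., 1] += dy.ravel()[best]
    return flow

# Synthetic scene: a smooth texture, moved 5 px right and 3 px down in frame 2
H = W = 24
sx, sy = 5, 3
a = 2 * np.pi / 64  # low frequency, so the match score falls off monotonically

def texture(x, y):
    return np.stack([np.cos(a * x), np.sin(a * x), np.cos(a * y), np.sin(a * y)], axis=-1)

ys, xs = np.mgrid[0:H, 0:W]
f1 = texture(xs, ys)
f2 = texture(xs - sx, ys - sy)
corr = np.einsum("ijd,kld->ijkl", f1, f2)  # all-pairs correlation

r = 2  # lookup radius, smaller than the true motion
one_step = refine(corr, iters=1, r=r)
flow = refine(corr, iters=4, r=r)
print(one_step[5, 5], flow[5, 5])  # [2 2] [5 3]
```

After one iteration each pixel has only moved as far as the window allows; after a few more, pixels whose match stays inside the image settle on the true motion and stop changing.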

Results

Just look at how sharp the results are, all while being faster than current approaches!

Watch the video showing the results:

They even made the code available for everyone on their GitHub, which I linked below if you’d like to try it out.

Of course, this was a simple overview of this ECCV 2020 Best Paper Award winner.

I strongly recommend reading the paper linked below for more information.

OpenCV Optical Flow tutorial: https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_video/py_lucas_kanade/py_lucas_kanade.html

The paper: https://arxiv.org/pdf/2003.12039.pdf

GitHub with code: https://github.com/princeton-vl/RAFT

If you like my work and want to support me, I’d greatly appreciate it if you follow me on my social media channels:

- The best way to support me is by following me on Medium.

- Subscribe to my YouTube channel.

- Follow my projects on LinkedIn.

- Learn AI together: join our Discord community, share your projects, papers, and best courses, find Kaggle teammates, and much more!

ECCV 2020 Best Paper Award | A New Architecture For Optical Flow was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.