Understanding Bias in the Simplest Plausible Way

Last Updated on May 23, 2020 by Editorial Team

Author(s): Anirudh Dayma

I recently started exploring neural networks and came across the terms activation function and bias. Activation functions kind of made sense to me, but I found it difficult to grasp the exact essence of biases in a neural network.

I explored various sources, and all they had was:

Bias in Neural Networks can be thought of as analogous to the role of an intercept in linear regression.

But what the heck does this mean? I understand very well that the intercept is the point where the line crosses the y-axis. If we don’t have an intercept, our line always passes through the origin, but that isn’t how things are in the real world, so we use an intercept to add some flexibility.

So how do biases add flexibility to Neural networks?

You will find the answer to this question by the end of this article.

The definition quoted above is the most common one we come across when we talk about biases. So I thought, let’s ignore my question and go ahead with this mediocre explanation of bias (which wasn’t making any sense to me). After all, this is the memorized answer people give when asked about bias. But then I came across the image below, and it hit me really hard.

If I am not able to explain biases to a six-year-old, then I guess I haven’t understood them myself (which was indeed the truth). So I started going through some more resources, and finally, biases started making sense. Let’s understand bias with the help of a perceptron.

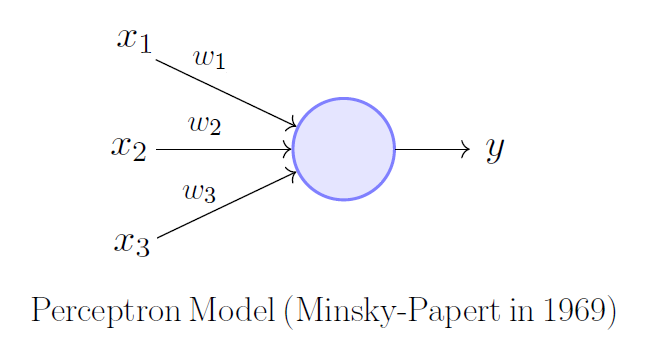

Perceptron

A perceptron can be imagined as something that takes in a set of binary inputs x1, x2, … and produces a single binary output.

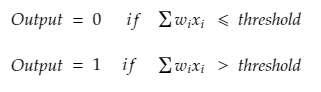

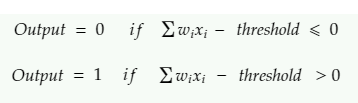

The weights correspond to the importance of the inputs, AKA features. The output, i.e., 0 or 1, depends on whether the weighted sum of the inputs is greater than some threshold.

Mathematically
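Going by the description above, the rule can be written roughly as follows. This is a reconstruction from the text; the index i and the label "threshold" are my notational choices.

$$
\text{output} =
\begin{cases}
0 & \text{if } \sum_i w_i x_i \le \text{threshold} \\
1 & \text{if } \sum_i w_i x_i > \text{threshold}
\end{cases}
$$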

Let’s consider an example. Suppose you like a person, and you want to decide whether or not you should tell that person about your feelings. You might arrive at the final decision based on the following questions.

- Do I really love that person, or is it just a mere infatuation?

- Would speaking my heart out ruin my equation with that person?

- Is it really worth it (would it have negative consequences on my career)?

I know there might be many other questions as well, but these are the common ones. So your decision on whether or not to speak your heart out depends on the above questions.

So let us consider x1, x2, and x3 as the answers to your 3 questions. Let x1 = 0 if it is an infatuation and x1 = 1 if it isn’t. Similarly, x2 = 0 if speaking up would ruin your equation and x2 = 1 if it wouldn’t, and x3 = 0 if you feel that it isn’t worth it and x3 = 1 if it is.

It might so happen that not all the above questions carry equal importance. What you could do is assign some numbers to all the questions depending on their importance/relevance. These numbers are nothing but weights.

Suppose you assign weights w1 = 3, w2 = 2, w3 = 7, where w1, w2, and w3 are the weights for questions 1, 2, and 3 respectively. Since w3 has the largest magnitude, you are most concerned about whether or not it’s worth it, as you want to focus on your career and cannot afford distractions. You set the threshold to 6, so if the weighted sum comes out greater than 6, you would speak your heart out. With these weights, you aren’t too worried about whether it’s an infatuation or whether it would ruin things: even if x1 = 1 and x2 = 1, their combined contribution is only 3 + 2 = 5, which doesn’t cross the threshold on its own, whereas x3 = 1 alone contributes 7. So looking at the threshold and w3, we can say the deciding factor is x3 (question 3).
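To check this scenario by brute force, here is a minimal Python sketch that tries all eight combinations of answers. The function name `decide` and the constant names are my own choices for illustration; only the weights (3, 2, 7) and the threshold (6) come from the example above.

```python
from itertools import product

# Weights and threshold from the first scenario in the text.
WEIGHTS = (3, 2, 7)
THRESHOLD = 6


def decide(inputs, weights=WEIGHTS, threshold=THRESHOLD):
    """Return 1 (Yes) if the weighted sum exceeds the threshold, else 0 (No)."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


# Try every combination of answers to the three questions.
for x in product((0, 1), repeat=3):
    total = sum(w * xi for w, xi in zip(WEIGHTS, x))
    print(x, "weighted sum =", total, "->", "Yes" if decide(x) else "No")
```

With these numbers, the answer is Yes exactly when x3 = 1, which is what "x3 is the deciding factor" means here.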

Let us consider a different scenario. Imagine the weights are w1 = 10, w2 = 3, w3 = 5, so whether it is an infatuation or not is now more important than anything else. And say you keep the threshold as low as 2, so you would arrive at a Yes quite easily. Say x1 = 0, x2 = 1, x3 = 0. In this case, the weighted sum is 3, which is greater than the threshold even though x1 and x3 are 0. So even though x1 has the largest weight, it didn’t play the role of the deciding factor because the threshold was too low. Setting a lower threshold means that you are more eager to speak your heart out.
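One rough way to see how much more "eager" the low threshold makes you is to count how many of the eight possible answer combinations end in a Yes under each scenario. The sketch below does that; `decide` and `count_yes` are hypothetical helper names, and the weights and thresholds are the ones from the two scenarios above.

```python
from itertools import product


def decide(inputs, weights, threshold):
    """Return 1 (Yes) if the weighted sum exceeds the threshold, else 0 (No)."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


def count_yes(weights, threshold):
    """Count how many of the 8 binary input combinations produce a Yes."""
    return sum(decide(x, weights, threshold) for x in product((0, 1), repeat=3))


# The specific case from the text: x = (0, 1, 0), weighted sum 3 > threshold 2.
print("x = (0, 1, 0):", "Yes" if decide((0, 1, 0), (10, 3, 5), 2) else "No")

first = count_yes((3, 2, 7), 6)    # first scenario: cautious, threshold 6
second = count_yes((10, 3, 5), 2)  # second scenario: eager, threshold 2
print("Yes outcomes, first scenario: ", first, "of 8")
print("Yes outcomes, second scenario:", second, "of 8")
```

In the second scenario, only the all-zero input fails to cross the threshold, so almost any single favorable answer is enough for a Yes.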

So changing the weights and threshold changes the decision as well.

Imagine a case where we don’t have a threshold, so you would get a Yes as soon as the weighted sum is greater than 0. But we don’t want this to happen. We want to reach a conclusion depending on the magnitude of the weighted sum: only if the weighted sum exceeds a certain threshold should the output be Yes; otherwise it should be No. Hence we need a threshold.

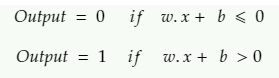

Let us simplify the equation by taking the threshold to the left-hand side.

Similarly, we want our neuron to get activated when our weighted sum is greater than a specific threshold. If we don’t use a threshold, the neuron will get activated as soon as the weighted sum is greater than 0.

So we define the bias as b = −threshold. Using the bias instead of the threshold, we get a familiar equation.
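Spelled out, moving the threshold to the left-hand side and substituting b = −threshold looks roughly like this (a reconstruction from the text, using the same symbols as above):

$$
\sum_i w_i x_i > \text{threshold}
\;\Longleftrightarrow\;
\sum_i w_i x_i - \text{threshold} > 0
\;\Longleftrightarrow\;
w \cdot x + b > 0
$$

$$
\text{output} =
\begin{cases}
0 & \text{if } w \cdot x + b \le 0 \\
1 & \text{if } w \cdot x + b > 0
\end{cases}
$$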

Here the weighted sum Σwixi is written as the dot product w·x, where w and x are vectors whose components are the weights and inputs, respectively.

We can think of the bias as a measure of how easy it is to get the neuron to activate. Earlier, the neuron was activated only if the weighted sum was greater than the threshold. Since b = −threshold, the concept reverses a bit. Earlier, we said that the larger the threshold, the larger the weighted sum must be for the neuron to activate. Now, as the bias is the negation of the threshold, the larger the bias, the smaller the weighted sum required to activate the neuron.

In this way, bias adds flexibility to the neural network by deciding when a neuron should get activated.

Obviously, the perceptron isn’t exactly similar to the way humans make complex decisions, but this example helps in understanding biases in a simpler way.
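The threshold and bias forms can be compared side by side in a few lines of Python. This is only a sketch: the function names are made up for illustration, and I reuse the weights and input from the second scenario (weighted sum 3) with two thresholds, 2 and 6, taken from the examples above.

```python
def weighted_sum(inputs, weights):
    """Dot product w . x of the weights and inputs."""
    return sum(w * x for w, x in zip(weights, inputs))


def fires_with_threshold(inputs, weights, threshold):
    """Original form: activate when the weighted sum exceeds the threshold."""
    return int(weighted_sum(inputs, weights) > threshold)


def fires_with_bias(inputs, weights, bias):
    """Bias form: activate when w . x + b is greater than 0."""
    return int(weighted_sum(inputs, weights) + bias > 0)


weights = (10, 3, 5)
x = (0, 1, 0)  # weighted sum = 3

for threshold in (2, 6):
    bias = -threshold  # b = -threshold
    # Both forms must agree, since they are the same inequality rearranged.
    assert fires_with_threshold(x, weights, threshold) == fires_with_bias(x, weights, bias)
    print("bias =", bias, "->", "activated" if fires_with_bias(x, weights, bias) else "not activated")
```

With the larger bias (−2), the weighted sum of 3 is enough to activate the neuron; with the smaller bias (−6), it is not.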

I hope I have explained bias in the simplest plausible way. Feel free to drop comments or questions below. You can find me on LinkedIn.

Understanding Bias in the Simplest Plausible Way was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.