Optical Character Recognition (OCR) with CNN-LSTM Attention Seq2Seq

Last Updated on September 18, 2024 by Editorial Team

Author(s): Tan Pengshi Alvin

Originally published on Towards AI.

In previous articles, we have covered Convolutional Neural Networks (CNNs) and their various Deep Learning tasks extensively. CNNs are particularly good at learning spatial information in images. If you have taken the time to walk through some of these articles, you will have uncovered rare insights into these Deep Learning applications for Computer Vision:

- Lightweight YOLO Detection with Object Tracking from Scratch (pub.towardsai.net). Designing YOLO and object tracking models from scratch with OpenCV data simulation.

- Siamese Neural Networks with Triplet Loss and Cosine Distance (towardsdatascience.com). Theory & Code-along: Triplet loss with cosine distance for Siamese Networks on CIFAR-10 dataset.

Starting with this article, we will dive into sequential information, such as text and videos, which can be learned via two approaches: Recurrent Neural Networks (RNNs) and the Transformer. We will also begin to introduce the attention mechanism more formally, which we briefly mentioned in the article on Graph Neural Networks:

- Graph Neural Networks (GNN) — Concepts and Applications (pub.towardsai.net). Exploring the concept and applications of Graphs, and how to apply Neural Networks to it.

In this article, we will explore another interesting Deep Learning application, Optical Character Recognition (OCR), which converts images of text into machine-readable text data, by combining the CNN and RNN frameworks. An exciting application indeed!

Note: We will also introduce the fundamentals of the technologies behind Recurrent Neural Networks and the attention mechanism before we apply them in our CNN-RNN model.

There are several open-source packages, such as Pytesseract and EasyOCR, for general-purpose reading of text images. Nonetheless, for more specialized and structured OCR applications, a custom OCR model is often built from scratch.

Such a custom-built OCR model typically consists of 2 steps:

- Detecting the text fields in each image

- Processing each cropped text-field image to obtain its text data

The text fields can be detected by training an open-source YOLO detection model, such as YOLOv8, especially when the text fields are structured, or labeled, in every image.

On the other hand, the OCR task in this article, which we are building from scratch, is primarily concerned with processing a fixed (or cropped) text-field image to obtain its text data.
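To make the hand-off between the two steps concrete, here is a minimal sketch of cropping detected text fields before passing them to the OCR model. It assumes a hypothetical detector output of pixel boxes `(x1, y1, x2, y2)` (output formats vary between YOLO implementations), and the helper name `crop_text_fields` is our own:

```python
import numpy as np

def crop_text_fields(image, boxes):
    """Crop text-field regions from an (H, W, C) image array.

    `boxes` is a hypothetical list of (x1, y1, x2, y2) pixel boxes, e.g. from a
    text-field detector; coordinates are clipped to the image bounds.
    """
    height, width = image.shape[:2]
    crops = []
    for x1, y1, x2, y2 in boxes:
        x1, x2 = max(0, int(x1)), min(width, int(x2))
        y1, y2 = max(0, int(y1)), min(height, int(y2))
        if x2 > x1 and y2 > y1:  # skip empty or inverted boxes
            crops.append(image[y1:y2, x1:x2])
    return crops

# Example: a blank 200x400 RGB "document" with two detected fields
page = np.zeros((200, 400, 3), dtype=np.uint8)
fields = crop_text_fields(page, [(10, 20, 230, 100), (300, 150, 450, 190)])
print([f.shape for f in fields])  # [(80, 220, 3), (40, 100, 3)]
```

Each crop would then go through the same resizing and normalization as the training images in the preprocessing code later in this article.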

Let us dive into this exciting material!

1. The OCR Text-Field Data — CAPTCHA

To easily obtain a set of data that is both challenging and meaningful, we again rely on CAPTCHA simulation using an open-source package here. CAPTCHA is an acronym that stands for “Completely Automated Public Turing test to tell Computers and Humans Apart.” However, with the Deep Learning model that we are about to build, we will see that even computers can achieve human-level performance.

To create the dataset, we first install the library that generates arbitrary CAPTCHAs. In the terminal:

```bash
pip install captcha
```

Next, we generate the CAPTCHA images and store them in a local directory. We want random alphanumeric CAPTCHAs between 4 and 8 characters long. To give our model a slight helping hand, we omit characters like 0 (zero) and 1 (one), since they closely resemble o (‘oh’) and l (‘ell’).

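```python
import os
import string

import numpy as np
import tqdm
from captcha.image import ImageCaptcha

image = ImageCaptcha(width=224, height=80)

ALL_CHAR = string.ascii_lowercase + '23456789'  # 26 letters + 8 digits = 34 characters
DATA_NUM = 8000

os.makedirs('./data', exist_ok=True)

for _ in tqdm.tqdm(range(DATA_NUM)):
    # Random length between 4 and 8 (the upper bound of randint is exclusive)
    num_char = np.random.randint(4, 9)
    text = ''.join(ALL_CHAR[np.random.randint(len(ALL_CHAR))] for _ in range(num_char))
    # The label is stored in the file name; write() renders and saves the image.
    # Identical random strings simply overwrite the same file.
    image.write(text, './data/' + text + '.png')
```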

A sample of the generated dataset is shown in the image above, and we can see that the data is quite challenging even for the human eye. Personally, I could not identify all of these characters, as some of them are nearly indistinguishable.

The generated CAPTCHAs vary in character length, character orientation, character location, and inter-character proximity, which adds several levels of complexity to the Deep Learning task.

After generating these images in the local directory, I processed them to obtain both the feature arrays and the target arrays. Each target array, corresponding to one text image, is a sequence of one-hot encoded vectors representing the characters in the CAPTCHA. The start and end of each CAPTCHA are also one-hot encoded, as special start and end tokens (indices 34 and 35), and the end token also pads shorter strings to a fixed length of 10. The process is illustrated in the code below:

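```python
import glob
import os

import cv2
import numpy as np
import pandas as pd
import tqdm

# ALL_CHAR is defined in the generation snippet above (indices 0-33)
data_list = []

for data_path in tqdm.tqdm(glob.glob('data/*.png')):
    image_array = cv2.imread(data_path)                         # OpenCV loads images as BGR
    image_array = cv2.cvtColor(image_array, cv2.COLOR_BGR2RGB)
    image_array = cv2.resize(image_array, (112, 112))
    image_array = image_array / 255                             # scale pixels to [0, 1]

    # The label is the file name without its extension (portable across operating systems)
    data_string = os.path.splitext(os.path.basename(data_path))[0]

    target_array = np.zeros((10, 36))
    target_array[0][34] = 1                                     # index 34: start token

    for char in range(9):
        if char < len(data_string):
            target_array[char + 1][ALL_CHAR.index(data_string[char])] = 1
        else:
            target_array[char + 1][35] = 1                      # index 35: end token pads the rest

    data_list.append({'actual_text': data_string, 'image_array': image_array, 'target_array': target_array})

df = pd.DataFrame(data_list)
feature_array = np.stack(df['image_array'].values)   # (N, 112, 112, 3)
target_array = np.stack(df['target_array'].values)   # (N, 10, 36)
```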
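The training code later uses `X_train`, `y_train`, `X_test`, and `y_test`, but the split itself is not shown. A minimal sketch, assuming a random 80/20 hold-out split (the ratio, seed, and the helper name `train_test_split_arrays` are our assumptions), could look like this:

```python
import numpy as np

def train_test_split_arrays(features, targets, test_fraction=0.2, seed=42):
    """Randomly split paired arrays into train and test sets (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(features))   # one shuffle keeps features and targets aligned
    n_test = int(len(features) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return features[train_idx], features[test_idx], targets[train_idx], targets[test_idx]

# Stand-ins with the same per-sample shapes as feature_array and target_array
features = np.zeros((100, 112, 112, 3), dtype=np.float32)
targets = np.zeros((100, 10, 36))
X_train, X_test, y_train, y_test = train_test_split_arrays(features, targets)
print(X_train.shape, X_test.shape)  # (80, 112, 112, 3) (20, 112, 112, 3)
```

With the real data, the call would be `train_test_split_arrays(feature_array, target_array)`; scikit-learn's `train_test_split` does the same job if it is available.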

We have now obtained our dataset. Before we formally introduce the Deep Learning model architecture, let us take some time to walk through Recurrent Neural Networks and the attention mechanism more formally.

2. Sequence Models and Recurrent Neural Networks

As the name suggests, Recurrent Neural Networks ingest a sequence of inputs and process them one at a time, learning their dependencies and patterns across time steps. The diagram below illustrates the general structure of an RNN:

In Python, a simple RNN performs its computation as follows:

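```python
import numpy as np

class RNN:
    def __init__(self, input_dim, hidden_dim, output_dim, seed=0):
        rng = np.random.default_rng(seed)
        # The same three weight matrices are reused at every time step
        self.W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        self.W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        self.W_hy = rng.normal(scale=0.1, size=(output_dim, hidden_dim))
        self.h = np.zeros(hidden_dim)  # initial hidden state

    def step(self, x):
        # update the hidden state from the previous hidden state and the current input
        self.h = np.tanh(np.dot(self.W_hh, self.h) + np.dot(self.W_xh, x))
        # compute the output vector
        y = np.dot(self.W_hy, self.h)
        return y

rnn = RNN(input_dim=4, hidden_dim=8, output_dim=3)  # illustrative sizes
x1, x2, x3 = np.random.rand(3, 4)  # a sequence of three input vectors

y1 = rnn.step(x1)
y2 = rnn.step(x2)
y3 = rnn.step(x3)
```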

In forward propagation, the same RNN cell is applied at every time step with the same set of weight matrices, and the computed hidden state, self.h, is fed back into the cell at the next step. Optionally, the RNN can also emit an output vector, y, at every time step, describing the state computed at that step.

Backpropagation works slightly differently from that of CNNs or the vanilla Multi-Layer Perceptron (MLP) and is called Backpropagation Through Time (BPTT). In BPTT, given the loss on an output y at a particular time step, the total weight delta for that loss (at the end of one BPTT pass) is the sum of the weight deltas contributed by every time step up to and including the one at which the output y occurs. The formula is given as follows:

If there is an output at every time step, the total weight deltas for the individual losses are summed again over all losses, multiplied by the learning rate, and applied as the weight update (in gradient-descent terms, the learning rate times the gradient is subtracted from the current weights).
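Since the original formula figure is not reproduced here, the following writes out BPTT for a recurrent weight matrix $W$ (such as $W_{hh}$ or $W_{xh}$) in standard notation. The symbols are our own: $h_t$ is the hidden state, $L_t$ the loss at step $t$, $L$ the total loss over $T$ steps, $\eta$ the learning rate, and $\partial^{+} h_k / \partial W$ the immediate derivative of $h_k$ with respect to $W$, treating $h_{k-1}$ as a constant:

$$
\frac{\partial L_t}{\partial W} = \sum_{k=1}^{t} \frac{\partial L_t}{\partial h_t}\left(\prod_{j=k+1}^{t} \frac{\partial h_j}{\partial h_{j-1}}\right)\frac{\partial^{+} h_k}{\partial W},
\qquad
\frac{\partial L}{\partial W} = \sum_{t=1}^{T} \frac{\partial L_t}{\partial W},
\qquad
W \leftarrow W - \eta\,\frac{\partial L}{\partial W}
$$

The product of Jacobians in the first expression is the term behind the vanishing-gradient problem discussed below.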

If you are interested in how each weight delta (at each time step) is computed, you can check out the mathematics behind Backpropagation in MLPs in the article below, which follows a very similar process:

- Neural Networks from Scratch: N-Layers Perceptron — Part 3 (pub.towardsai.net). Developing deep neural networks from scratch with Mathematics and Python.

There can be varying numbers of outputs given a sequence of RNN cells, but it turns out there can be varying numbers of inputs as well. In fact, the RNNs are so versatile that they have different structural variants that are suitable for different Deep Learning tasks. We will illustrate with the diagram below, and discuss their various applications:

- One-to-One Neural Networks: Strictly speaking, this is not an RNN, since there is only a single time step. Traditional MLPs and CNNs follow this framework.

- One-to-Many RNNs: This is usually a generative task. A prominent use case is image captioning, where we pass an image to the RNN and it outputs a sequence of words describing the image.

- Many-to-One RNNs: Prominent use cases include sentiment analysis, which turns a passage of text (such as a movie review) into a quantitative score. A Computer Vision example is classifying a sequence of images, such as a short video, into classes. For Generative AI, we can even turn a description prompt into an image.

- Many-to-Many RNNs: There are 2 variants of Many-to-Many RNNs. In the first, each input corresponds to an output at the same time step. A good use case is the Natural Language Processing (NLP) task called Named Entity Recognition (NER), where for every input word we classify whether it is part of a person’s name and, if so, whether it is the start or the end of the name.

- Many-to-Many Encoder-Decoder RNNs: This second variant of Many-to-Many RNNs, sometimes called Seq2Seq, is widely adopted in areas such as Machine Translation, chatbot Question-Answering, and Speech Recognition. In this variant, we first process (or encode) an entire input sequence (or part of it, as in Speech Recognition) and capture the positional dependencies between its inputs before generating a meaningful output sequence, whose length can differ from that of the input. Note that this architecture consists of 2 separate but connected RNNs: the Encoder RNN and the Decoder RNN.
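These variants differ mainly in which outputs are kept and how the recurrences are wired together. The following minimal NumPy sketch assumes arbitrary sizes and random, untrained weights purely for illustration. It shows many-to-one and aligned many-to-many from the same unrolled RNN, plus a toy encoder-decoder whose separate decoder starts from the encoder's final state:

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, hidden_dim, output_dim = 4, 8, 3   # arbitrary illustrative sizes

# Untrained random weights: an encoder RNN ...
W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
W_hy = rng.normal(scale=0.1, size=(output_dim, hidden_dim))
# ... and a separate decoder RNN
V_yh = rng.normal(scale=0.1, size=(hidden_dim, output_dim))   # previous output -> hidden
V_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))   # recurrent weights
V_hy = rng.normal(scale=0.1, size=(output_dim, hidden_dim))   # hidden -> output

def run_rnn(xs, h):
    """Apply the same tanh RNN cell to every input; return all outputs and the final state."""
    ys = []
    for x in xs:
        h = np.tanh(W_hh @ h + W_xh @ x)
        ys.append(W_hy @ h)
    return np.stack(ys), h

xs = rng.normal(size=(5, input_dim))            # one sequence of 5 input vectors
ys, h_final = run_rnn(xs, np.zeros(hidden_dim))

many_to_one = ys[-1]    # e.g. sentiment analysis: keep only the last output -> shape (3,)
many_to_many = ys       # e.g. NER: one output per input -> shape (5, 3)

# Encoder-decoder (Seq2Seq): the encoder's final state seeds the decoder,
# which runs for its own number of steps (3 here) and feeds each output back in.
h, y = h_final, np.zeros(output_dim)
decoded = []
for _ in range(3):
    h = np.tanh(V_hh @ h + V_yh @ y)
    y = V_hy @ h
    decoded.append(y)
decoded = np.stack(decoded)   # shape (3, 3): output length differs from input length
```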

The OCR model in this article relies on the Encoder-Decoder Seq2Seq architecture. Another approach to this task is to use a One-to-Many RNN that processes the text image in its entirety, yet this approach may not exploit the positional dependencies between characters. For instance, a character in the text image may be obscured or blurred, as in:

He is wearing a blu* T-shirt.

After training on a lot of data, the Seq2Seq OCR model may learn to decode the obscured word as “blue”, especially when the text follows a consistent font or handwriting.

Nonetheless, RNNs have a serious shortcoming. If you have read the MLP article on Backpropagation, you will realize that the weight delta at each time step is obtained by multiplying the delta propagated back from the following time step by a local derivative term. If the sequences (input or output) are very long and these factors are smaller than 1, the cumulative weight delta at the earlier time steps can become vanishingly small. This is the Vanishing Gradient problem, which prevents the model from learning long-range dependencies effectively.
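The shrinking product can be seen numerically with a one-dimensional RNN. This is a toy sketch under our own assumptions (a scalar hidden state, recurrent weight `w = 0.5`, random inputs): it accumulates $\partial h_T / \partial h_0$ with the chain rule and prints how fast it decays as the sequence grows.

```python
import numpy as np

def hidden_state_gradient(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = np.tanh(w * h + x)
        # chain rule: d h_t / d h_{t-1} = w * (1 - tanh(.)**2) = w * (1 - h_t**2)
        grad *= w * (1.0 - h ** 2)
    return grad

rng = np.random.default_rng(0)
xs = rng.normal(size=50)
for steps in (5, 20, 50):
    print(steps, abs(hidden_state_gradient(0.5, xs[:steps])))
```

With `w = 0.5` every factor is at most 0.5, so after 50 steps the gradient is bounded by 0.5**50, roughly 8.9e-16. With |w| > 1 and unsaturated activations, the same product can instead grow, which is the related exploding-gradient problem.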

To address this issue, Long Short-Term Memory Networks (LSTMs), a famous variant of the RNN, modify the vanilla RNN’s internal cell structure so that gradients survive over much longer sequences.

3. Long Short-Term Memory (LSTM) Networks

In the two illustrations above, we compare the RNN and LSTM cell structures. The LSTM’s operation is considerably more involved, and we will not go deep into its sub-processes in this article; a good resource for understanding them can be found in this article. Instead, we will give a high-level intuition for why the LSTM can learn long-term dependencies from its inputs.

In the diagram, notice that the LSTM passes two states back to itself, instead of one as in the RNN. The upper arrow represents the cell state C, while the lower arrow represents the hidden state H. At each step, gates computed from the current input and the previous hidden state H (the sigmoid activations in the diagram) decide what information to keep in C, what to forget, and what new information to write into it. Because C is updated additively, scaled element-wise by the forget gate, rather than being passed through a weight matrix and a squashing nonlinearity at every step, it flows through the LSTM cell like a highway lane. As a result, gradients flowing back along C are not repeatedly multiplied by the recurrent weights, which allows the LSTM to capture long-term dependencies.

In summary, the cell state C can be viewed as “Long-Term Memory”, while the hidden state H represents “Short-Term Memory”. The hidden state H, which is also the cell’s output at each time step, is computed from C through the output gate.
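For readers who want to see the gates behind this intuition, here is a compact NumPy sketch of one standard LSTM step. The stacked weight layout and gate ordering are our own convention (Keras, for instance, orders its gates differently internally), and the sizes and random weights are illustrative assumptions:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One step of a standard LSTM cell.

    W has shape (4 * hidden, hidden + input_dim) and stacks the forget, input,
    candidate and output transforms (our ordering); b has shape (4 * hidden,).
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[:hidden])                  # forget gate: what to erase from C
    i = sigmoid(z[hidden:2 * hidden])        # input gate: what to write into C
    g = np.tanh(z[2 * hidden:3 * hidden])    # candidate values to write
    o = sigmoid(z[3 * hidden:])              # output gate: what to expose as H
    c = f * c_prev + i * g                   # additive "highway" update of the cell state
    h = o * np.tanh(c)                       # hidden state (short-term memory and output)
    return h, c

rng = np.random.default_rng(0)
input_dim, hidden = 4, 8
W = rng.normal(scale=0.1, size=(4 * hidden, hidden + input_dim))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)
for x in rng.normal(size=(6, input_dim)):   # run over a sequence of 6 inputs
    h, c = lstm_step(x, h, c, W, b)
```

Along the direct cell-state path, the derivative of `c` with respect to `c_prev` is just the forget gate `f`, which is why information (and gradient) can pass through many steps when the forget gate stays close to 1.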

Hence, the LSTM is useful not only for long sequences but also for learning richer sequential information. As a result, LSTMs are widely used for sequence modeling, and that is what we will use in this article.

4. The Attention Mechanism

We next discuss the attention mechanism, an extremely useful add-on to neural network architectures. Researchers have used it heavily to create novel models such as Vision Transformers and Graph Attention Networks, many of which have achieved state-of-the-art performance thanks to attention. Originally, the attention mechanism was introduced by Bahdanau et al. in the paper Neural Machine Translation by Jointly Learning to Align and Translate, to enhance the performance of the Seq2Seq model for machine translation.

The attention mechanism is usually embedded in a neural network and trained together with it (yes, the attention mechanism has its own trainable weights) to weight a set of intermediate representations according to their relevance to the current prediction. As such, the attention mechanism is extremely useful for a Seq2Seq task like machine translation, where each output word relates to different parts (words) of the input.

A simple illustration is shown below:

The English sentence “I am a student” is translated into French. Once the attention mechanism is trained, the first output word “Je” is strongly connected to the first two input words, “I am”, which receive high attention scores.

There are several implementations of the attention mechanism; a prominent one is called Additive Attention, and that is the one we will use. To compute the attention scores at every decoder time step, the previous decoder Hidden State, together with the set of Encoder outputs (one from every input time step), is passed into the attention layer, where they are processed as shown below:

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Layer

class AdditiveAttention(Layer):
    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        # Weights for the encoder outputs
        self.W1 = Dense(units)
        # Weights for the decoder hidden state
        self.W2 = Dense(units)
        # Weight for calculating the attention scores
        self.V = Dense(1)

    def call(self, encoder_outputs, decoder_hidden):
        # encoder_outputs shape: (batch_size, seq_length, units)
        # Expand the decoder hidden state to (batch_size, 1, units) so it broadcasts over time
        decoder_hidden_with_time_axis = tf.expand_dims(decoder_hidden, 1)

        # Calculate the attention scores: (batch_size, seq_length, 1)
        score = self.V(tf.nn.tanh(self.W1(encoder_outputs) + self.W2(decoder_hidden_with_time_axis)))

        # Attention weights: softmax over axis 1, the sequence axis, so they sum to 1 over encoder steps
        attention_weights = tf.nn.softmax(score, axis=1)

        # Context vector is the weighted sum of encoder outputs: (batch_size, units)
        context_vector = tf.reduce_sum(attention_weights * encoder_outputs, axis=1)

        return context_vector, attention_weights
```

Notice that the attention scores are computed over the Encoder outputs relative to the decoder Hidden State from the previous time step. In other words, the network learns which Encoder outputs to focus on, given the previous Hidden State, when computing the output prediction at the current time step.

Another form of attention computes attention scores over intermediate outputs relative to those same outputs; this is called self-attention. One application of self-attention is in Convolutional Neural Networks (CNNs), where we can apply Spatial Self-Attention and Channel Self-Attention:
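In equation form, the `AdditiveAttention` layer above computes the following. The notation is ours: $h_i$ are the encoder outputs, $s_{t-1}$ is the previous decoder hidden state, $W_1$, $W_2$ and $v$ correspond to `self.W1`, `self.W2` and `self.V`, $T_x$ is the number of encoder steps (28 in this model), $c_t$ is `context_vector`, and the bias terms that Keras `Dense` layers add by default are omitted:

$$
e_{t,i} = v^\top \tanh\left(W_1 h_i + W_2 s_{t-1}\right),
\qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{k=1}^{T_x} \exp(e_{t,k})},
\qquad
c_t = \sum_{i=1}^{T_x} \alpha_{t,i}\, h_i
$$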

```python
from tensorflow.keras.layers import Conv2D, Input, Layer, MaxPooling2D
from tensorflow.keras.models import Model

class SpatialSelfAttention(Layer):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # A single-filter convolution creates one attention value per spatial location
        self.conv2d = Conv2D(1, (9, 9), padding='same', activation='sigmoid')

    def call(self, inputs):
        attention = self.conv2d(inputs)  # (batch, H, W, 1) spatial self-attention map
        # The single-channel map broadcasts across all channels of the input
        weighted_input = inputs * attention  # applies attention
        return weighted_input

class ChannelSelfAttention(Layer):
    def __init__(self, channels, **kwargs):
        super().__init__(**kwargs)
        # A 1x1 convolution creates one attention value per channel at each location
        self.conv2d = Conv2D(channels, (1, 1), padding='same', activation='sigmoid')

    def call(self, inputs):
        attention = self.conv2d(inputs)  # (batch, H, W, channels) self-attention map
        weighted_input = inputs * attention  # applies self-attention
        return weighted_input

# CNN Feature Extraction
def create_cnn_model(input_shape):
    inputs = Input(shape=input_shape)
    x = Conv2D(64, (3, 3), activation='relu', padding='same')(inputs)
    x = Conv2D(64, (3, 3), activation='relu', padding='same')(x)
    x = Conv2D(64, (3, 3), activation='relu', padding='same')(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)   # 112x112 -> 56x56

    x = Conv2D(128, (3, 3), activation='relu', padding='same')(x)
    x = Conv2D(128, (3, 3), activation='relu', padding='same')(x)
    x = Conv2D(128, (3, 3), activation='relu', padding='same')(x)
    x = SpatialSelfAttention()(x)
    x = ChannelSelfAttention(128)(x)
    x = Conv2D(128, (1, 1), activation='relu', padding='same')(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)   # 56x56 -> 28x28

    return Model(inputs, x, name="cnn_model")
```

With these attention layers in the CNN, we obtained strong results when training the CNN-LSTM Seq2Seq model for OCR, as we demonstrate below.

5. The CNN-LSTM Seq2Seq Architecture

One implementation of a CNN-LSTM visual Seq2Seq model for OCR is by Qi Guo and Yuntian Deng, available in their GitHub repository. The authors implemented the model in PyTorch (while we implement ours in TensorFlow), and they show that, when predicting each output character, the attention mechanism focuses on the image slices at that character’s position, as shown below:

Note that the entire text image is fed to the model as input, rather than as separate slices. The vertical slices shown above correspond to the columns of an intermediate feature tensor, whose spatial dimensions are smaller than those of the input image. Each column is flattened into a vector, and the attention layer operates over these vectors.

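In our implementation, the two 2×2 max-pooling layers in the CNN reduce the 112×112 input to a 28×28×128 feature map, so we obtain 28 vertical slices, each flattened into a vector of 28 × 128 = 3,584 values, which are passed sequentially to the Encoder LSTM.

Without further ado, here is the code, implemented in TensorFlow: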
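The column-slicing step can be checked with plain NumPy. The sketch below mimics the model’s `Permute((2, 1, 3))` and `Reshape((28, 28 * 128))` on a random stand-in for the CNN output (the random data and the batch size of 2 are assumptions) and confirms that each of the 28 encoder time steps is one flattened column of the feature map:

```python
import numpy as np

batch, height, width, channels = 2, 28, 28, 128   # 112 / 2 / 2 = 28 after two 2x2 poolings
feature_map = np.random.rand(batch, height, width, channels)

# Permute((2, 1, 3)) swaps height and width (the batch axis is untouched) ...
permuted = feature_map.transpose(0, 2, 1, 3)        # (batch, width, height, channels)
# ... and Reshape((28, 28 * 128)) flattens each column into one vector
sequence = permuted.reshape(batch, width, height * channels)

print(sequence.shape)  # (2, 28, 3584)

# Time step j of the encoder input is exactly column j of the feature map
j = 5
assert np.array_equal(sequence[:, j], feature_map[:, :, j, :].reshape(batch, -1))
```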

```python
# Assumption: TensorFlow 2.x with tf.keras (Keras 2), matching the TensorFlow ops applied
# to symbolic tensors below. AdditiveAttention and create_cnn_model come from the earlier snippets.
import tensorflow as tf
from tensorflow.keras.layers import Input, Reshape, LSTM, Dense, Permute, Concatenate, Layer
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam

# LSTM Encoder
def create_encoder_model(input_shape, lstm_units):
    inputs = Input(shape=input_shape)
    lstm = LSTM(lstm_units, return_sequences=True, return_state=True)
    # encoder_outputs: one vector per image column; state_h / state_c: final LSTM states
    encoder_outputs, state_h, state_c = lstm(inputs)
    return Model(inputs, [encoder_outputs, state_h, state_c], name="encoder_model")

# LSTM Decoder (computes a single time step per call)
def create_decoder_model(input_shape, lstm_units, output_dim, encoder_output_shape):
    # Input tensors
    decoder_inputs = Input(shape=input_shape)
    encoder_outputs = Input(shape=encoder_output_shape)
    state_h = Input(shape=(lstm_units,))
    state_c = Input(shape=(lstm_units,))

    # Additive attention over the encoder outputs, queried with the previous hidden state
    attention_layer = AdditiveAttention(lstm_units)
    context_vector, attention_weights = attention_layer(encoder_outputs, state_h)

    # Add a time axis so the context vector can be concatenated: (batch_size, 1, lstm_units)
    context_vector = tf.expand_dims(context_vector, 1)

    # Combine context vector with decoder inputs
    decoder_combined_input = Concatenate(axis=-1)([context_vector, decoder_inputs])

    decoder_lstm = LSTM(lstm_units, return_sequences=True, return_state=True)
    decoder_outputs, state_H, state_C = decoder_lstm(decoder_combined_input, initial_state=[state_h, state_c])  # shape: (batch_size, 1, lstm_units)

    # Output layers to predict the next character
    outputs = Dense(256, activation='relu')(decoder_outputs)
    outputs = Dense(output_dim, activation='softmax')(outputs)  # shape: (batch_size, 1, output_dim)

    return Model([decoder_inputs, encoder_outputs, state_h, state_c], [outputs, state_H, state_C], name="decoder_model")

class Seq2SeqDecoder(Layer):
    """Runs the one-step decoder model target_seq_length times, feeding each prediction back in."""

    def __init__(self, decoder_model, target_seq_length, lstm_units, **kwargs):
        super().__init__(**kwargs)
        self.decoder_model = decoder_model
        self.target_seq_length = target_seq_length
        self.lstm_units = lstm_units

    def call(self, decoder_inputs, encoder_outputs, state_h, state_c):
        decoder_outputs = tf.TensorArray(tf.float32, size=self.target_seq_length)
        for i in tf.range(self.target_seq_length):
            output, state_h, state_c = self.decoder_model([decoder_inputs, encoder_outputs, state_h, state_c])
            decoder_outputs = decoder_outputs.write(i, tf.squeeze(output, axis=1))  # (batch_size, output_dim)
            # The predicted distribution becomes the next step's input (no teacher forcing)
            decoder_inputs = output
        # (target_seq_length, batch_size, output_dim) -> (batch_size, target_seq_length, output_dim)
        return tf.transpose(decoder_outputs.stack(), [1, 0, 2])

# Combine models into Seq2Seq OCR Model
def create_seq2seq_model(input_shape, lstm_units, output_dim, target_seq_length):
    # Define input
    inputs = Input(shape=input_shape)

    # CNN for feature extraction: (batch_size, 28, 28, 128) for a 112x112 input
    cnn_model = create_cnn_model(input_shape)
    cnn_output = cnn_model(inputs)

    # Permute dimensions so that width comes first: (batch_size, width, height, channels)
    permuted_output = Permute((2, 1, 3))(cnn_output)

    # Reshape to fit LSTM input shape: (batch_size, width, height * channels)
    reshaped = Reshape((28, 28 * 128))(permuted_output)

    # Encoder LSTM reads the 28 columns as a sequence
    encoder_model = create_encoder_model((28, 28 * 128), lstm_units)
    encoder_outputs, state_h, state_c = encoder_model(reshaped)

    # Prepare decoder inputs: the first decoder input is an all-zero vector of shape (1, 36)
    decoder_input_shape = (1, 36)
    decoder_model = create_decoder_model(decoder_input_shape, lstm_units, output_dim, (28, lstm_units))
    decoder_inputs = tf.zeros(shape=(tf.shape(inputs)[0], 1, 36))

    decoder_layer = Seq2SeqDecoder(decoder_model, target_seq_length, lstm_units)
    outputs = decoder_layer(decoder_inputs, encoder_outputs, state_h, state_c)

    return Model(inputs, outputs, name="seq2seq_ocr_model")

input_shape = (112, 112, 3)
lstm_units = 512
output_dim = 36          # 34 characters + start token + end token
target_seq_length = 10   # start token + up to 8 characters + at least 1 end token

model = create_seq2seq_model(input_shape, lstm_units, output_dim, target_seq_length)

model.compile(
    optimizer=Adam(learning_rate=1e-4),
    loss='categorical_crossentropy',
    metrics=['accuracy'],
)

callbacks = []  # the original callbacks are not shown in the article

model.fit(
    X_train,
    y_train,
    epochs=300,
    batch_size=16,
    validation_data=(X_test, y_test),
    verbose=1,
    callbacks=callbacks,
)
```

After training, the model achieved a validation accuracy above 98% on our test set, which is impressive given how challenging the dataset is: some of the CAPTCHAs may be unrecognizable even to the human eye.
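Note that with `metrics=['accuracy']` and a categorical cross-entropy loss, Keras reports categorical accuracy averaged over all 10 output positions, including the start token and the end-token padding, so it can differ from the fraction of CAPTCHAs read completely correctly. The following minimal sketch computes whole-string (exact-match) accuracy. It assumes predictions shaped like the targets, `(N, 10, 36)`, and the index convention from the preprocessing code (0 to 33 for `ALL_CHAR`, 34 for start, 35 for end). The helpers `decode_prediction`, `exact_match_accuracy`, and `encode` are our own hypothetical names:

```python
import string

import numpy as np

ALL_CHAR = string.ascii_lowercase + '23456789'   # same alphabet as the data generator
START_INDEX, END_INDEX = 34, 35

def decode_prediction(probabilities):
    """Turn one (10, 36) array of per-step scores into a string (greedy argmax)."""
    chars = []
    for index in np.argmax(probabilities, axis=-1):
        if index == START_INDEX:
            continue   # skip the start token
        if index == END_INDEX:
            break      # stop at the first end token
        chars.append(ALL_CHAR[index])
    return ''.join(chars)

def exact_match_accuracy(predictions, targets):
    """Fraction of samples whose decoded string matches the decoded target exactly."""
    matches = [decode_prediction(p) == decode_prediction(t) for p, t in zip(predictions, targets)]
    return float(np.mean(matches))

def encode(text, length=10):
    """Mirror the preprocessing: start token, characters, then end-token padding."""
    target = np.zeros((length, 36))
    target[0, START_INDEX] = 1
    for pos in range(1, length):
        index = ALL_CHAR.index(text[pos - 1]) if pos - 1 < len(text) else END_INDEX
        target[pos, index] = 1
    return target

y_true = np.stack([encode('ab2c'), encode('xyz9q')])
y_pred = y_true.copy()
y_pred[1, 2] = 0
y_pred[1, 2, ALL_CHAR.index('k')] = 1   # simulate misreading 'y' as 'k'

per_position = np.mean(np.argmax(y_pred, -1) == np.argmax(y_true, -1))
print(per_position, exact_match_accuracy(y_pred, y_true))  # 0.95 0.5
```

In this toy example a single misread character costs only 5% of per-position accuracy but 50% of exact-match accuracy. On the real test set, `decode_prediction` would be applied to the rows of `model.predict(X_test)`.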

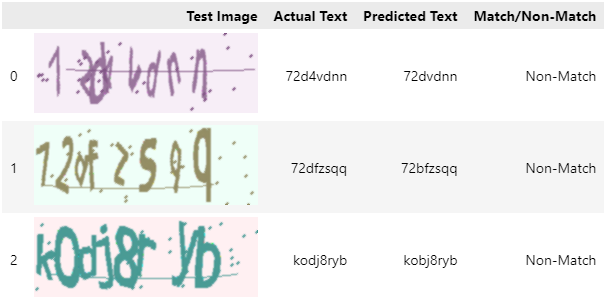

In a simple error analysis, I picked out the first 25 CAPTCHAs of our test set and illustrate both the correct and incorrect predictions below:

In the incorrect predictions above, notice that some of the characters are smashed together, and personally, I would not have recognized “72d4dnn” and “72dfzsqq” correctly either. The error on “kodj8ryb” is more of a surprise, as I thought the character ‘d’ is quite recognizable.

That said, the model makes correct predictions on several really challenging examples, as shown below:

CAPTCHAs above such as “qvpbs” and “ni3ziuq” are quite challenging, and some of their characters can easily be misread, yet the model predicted them accurately.

In summary, the CNN-LSTM Attention-based Seq2Seq model constructed above is reasonably effective for OCR and can be considered for training on custom datasets and deployment in real-world settings.

6. Conclusion

Finally, congratulations on reaching the end of the tutorial! For newcomers to sequence modeling, I hope this tutorial has provided an invaluable introduction to the world of RNNs, the attention mechanism, and their potential applications. For more advanced practitioners, I have demonstrated how to train an OCR system from scratch, and if you would like to do text field detection too, you may check out my article on building a YOLO detection model.

In the following articles, we will dive into Transformer architectures, which are often even more powerful than RNNs and have achieved state-of-the-art performance on many tasks, covering not only sequential data but also image data (Vision Transformers) and even graph data.

If you are interested, please stay tuned for more exciting tutorials that will be coming very soon!

Thanks for reading! If you have enjoyed the content, pop by my other articles on Medium and follow me on LinkedIn.

