Parametric ReLU | SELU | Activation Functions Part 2

Author(s): Shubham Koli. Originally published on Towards AI. What is Parametric ReLU? The Rectified Linear Unit (ReLU) is an activation function used in neural networks. It is a popular choice among developers and researchers …
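As a rough illustration of the two functions named in the title, here is a minimal plain-Python sketch. It is not the author's code and assumes scalar inputs. In a real network the PReLU slope `a` is a learned parameter; 0.25 is only an illustrative starting value (it happens to match the default initial slope of PyTorch's `nn.PReLU`). The SELU `alpha` and `scale` values are the standard published constants.

```python
import math

# Standard SELU constants (Klambauer et al., 2017).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def prelu(x: float, a: float = 0.25) -> float:
    """Parametric ReLU: identity for positive inputs, slope `a` for the rest.

    `a` would be learned during training; 0.25 is just an illustrative value.
    """
    return x if x > 0 else a * x


def selu(x: float) -> float:
    """Scaled Exponential Linear Unit with the fixed standard constants."""
    if x > 0:
        return SELU_SCALE * x
    # Negative side saturates towards -SELU_SCALE * SELU_ALPHA (about -1.7581).
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


if __name__ == "__main__":
    for v in (-2.0, -0.5, 0.0, 1.5):
        print(f"x={v:+.1f}  prelu={prelu(v):+.4f}  selu={selu(v):+.4f}")
```

Unlike plain ReLU, both functions keep a non-zero response for negative inputs: PReLU through a learned linear slope, SELU through a scaled exponential.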

Building Activation Functions for Deep Networks

Author(s): Moshe Sipper, Ph.D. Originally published on Towards AI. A basic component of a deep neural network is the activation function (AF): a non-linear function that shapes the final output of a node (“neuron”) in the network. Common …
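To make that description concrete, a single node can be sketched as a weighted sum of its inputs plus a bias, passed through an activation function that shapes the final output. The helper names below (`neuron`, `identity`, `relu`, `sigmoid`) are illustrative assumptions, not code from the article.

```python
import math
from typing import Callable, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """Weighted sum of inputs plus bias, shaped by an activation function."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # pre-activation
    return activation(z)


def identity(z: float) -> float:
    return z  # no non-linearity: the node stays a purely linear map


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Split by sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


if __name__ == "__main__":
    x, w, b = [1.0, 2.0], [0.5, -1.0], 0.25
    for f in (identity, relu, sigmoid):
        print(f.__name__, neuron(x, w, b, f))
```

Only the last step differs between the three calls; with `identity`, stacking such nodes would still produce a linear function, which is why the AF is chosen to be non-linear.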

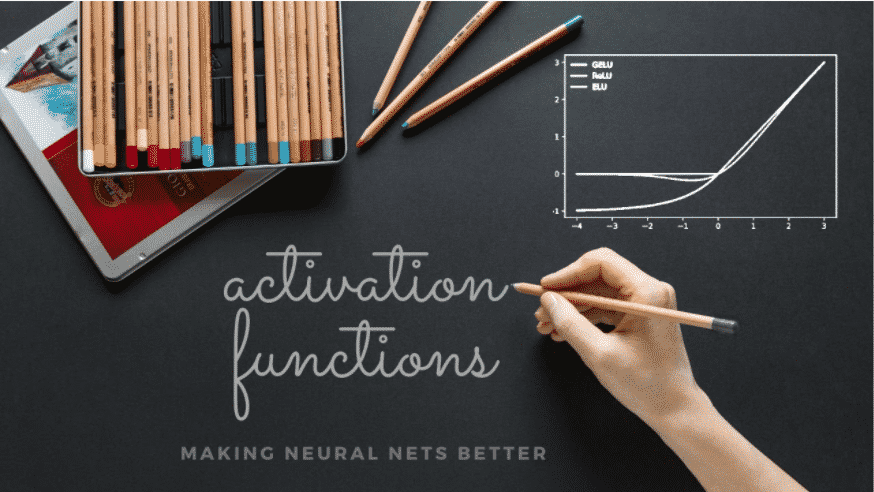

GELU: Gaussian Error Linear Unit Code (Python, TF, Torch)

Author(s): Konstantinos Poulinakis. Originally published on Towards AI. A code tutorial for GELU, the Gaussian Error Linear Unit activation function, with plain Python, TensorFlow, and PyTorch implementations. Photo by Markus Winkler on …
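For a quick reference point, the exact GELU is the input scaled by the standard normal CDF, GELU(x) = x·Φ(x), and Φ can be written with the error function. The plain-Python sketch below uses only `math.erf`; it is not the article's code. For tensors, recent TensorFlow and PyTorch releases ship built-ins (`tf.nn.gelu` and `torch.nn.functional.gelu`), which would normally be preferred over a hand-written version.

```python
import math


def standard_normal_cdf(x: float) -> float:
    """Phi(x): CDF of the standard normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu(x: float) -> float:
    """Exact GELU: x weighted by the probability that a standard normal is below x."""
    return x * standard_normal_cdf(x)


if __name__ == "__main__":
    for v in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"gelu({v:+.1f}) = {gelu(v):+.6f}")
```

Large positive inputs pass through almost unchanged, large negative inputs are pushed towards zero, and small negative inputs give a small negative output, which is where GELU differs visibly from ReLU.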

Demystifying GELU

Author(s): Konstantinos Poulinakis. Originally published on Towards AI. Python code for the GELU activation function. Photo by Willian B. on Unsplash. In this tutorial we aim to comprehensively explain how Gaussian …
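GELU is also commonly computed with the tanh approximation given in the original GELU paper (Hendrycks and Gimpel); PyTorch exposes it as `approximate='tanh'` in `torch.nn.functional.gelu` and TensorFlow as `approximate=True` in `tf.nn.gelu`. The sketch below compares the approximation against the exact erf form on an evenly spaced grid. The grid bounds and step are arbitrary choices for illustration.

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU: x * Phi(x) using the error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation: 0.5 x (1 + tanh(sqrt(2/pi) (x + 0.044715 x^3)))."""
    c = math.sqrt(2.0 / math.pi)
    return 0.5 * x * (1.0 + math.tanh(c * (x + 0.044715 * x ** 3)))


def max_abs_gap(lo: float = -6.0, hi: float = 6.0, steps: int = 1201) -> float:
    """Largest |exact - approx| over `steps` evenly spaced points in [lo, hi]."""
    step = (hi - lo) / (steps - 1)
    return max(abs(gelu_exact(lo + i * step) - gelu_tanh(lo + i * step))
               for i in range(steps))


if __name__ == "__main__":
    print(f"max |exact - tanh approx| on [-6, 6]: {max_abs_gap():.2e}")
```

On this grid the gap stays below 1e-3, which is why the cheaper tanh form is often an acceptable stand-in for the exact one.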

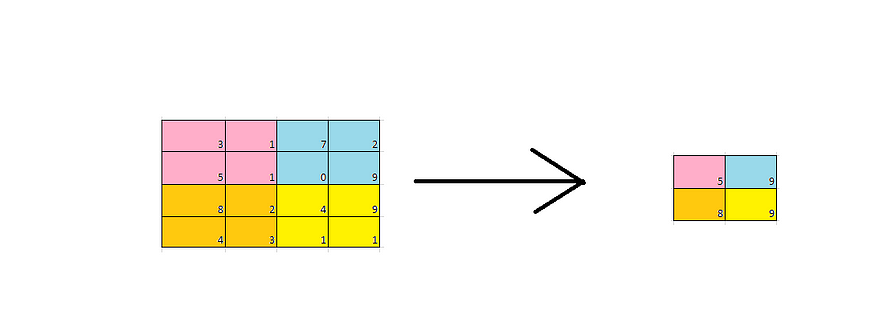

Image classification with neural network Part — 2

Author(s): Mugunthan. Originally published on Towards AI. If you missed my earlier blog, have a read here. My previous blog touched briefly on the convolutional layer. One of the disadvantages of using a feed-forward network on images is a number …
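One disadvantage commonly cited for feed-forward (fully connected) networks on images is how quickly the weight count grows with image size; we cannot confirm this is exactly the point the truncated excerpt goes on to make. The sketch below counts parameters for a dense layer fed a flattened image versus a single 2-D convolutional layer. The function names and the example sizes are hypothetical choices for illustration.

```python
def dense_params(height: int, width: int, channels: int, units: int) -> int:
    """Weights + biases of a fully connected layer applied to a flattened image."""
    return height * width * channels * units + units


def conv_params(kernel: int, in_channels: int, out_channels: int) -> int:
    """Weights + biases of a 2-D conv layer with square kernels.

    Note that the image size does not appear: the kernels are shared across positions.
    """
    return kernel * kernel * in_channels * out_channels + out_channels


if __name__ == "__main__":
    # Hypothetical example: a 224x224 RGB image.
    print(f"dense, 1000 units:    {dense_params(224, 224, 3, 1000):,}")
    print(f"conv, 64 3x3 filters: {conv_params(3, 3, 64):,}")
```

Doubling the image side length quadruples the dense layer's weights, while the convolutional layer's count stays the same.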