Tweet Topic Modeling Part 2: Cleaning and Preprocessing Tweets

Last Updated on January 6, 2023 by Editorial Team

Author(s): John Bica

Web Scraping, Programming, Natural Language Processing

A multi-part series showing how to scrape and preprocess tweets, then apply and visualize short text topic modeling for any collection of tweets

Disclaimer: This article is only for educational purposes. We do not encourage anyone to scrape websites, especially those web properties that may have terms and conditions against such actions.

Introduction

Topic modeling is an unsupervised machine learning approach whose goal is to find the “hidden” topics (or clusters) inside a collection of textual documents (a corpus). Its real strength is that it needs no labeled or annotated data; it accepts only raw text as input, which is why it is called unsupervised. In other words, the model does not know what the topics are when it sees the data but rather produces them using statistical relationships between the words across all documents.

One of the most popular topic modeling approaches is Latent Dirichlet Allocation (LDA), a generative probabilistic model that uncovers the latent variables governing the semantics of a document; these variables represent abstract topics. A typical use of LDA (and topic modeling in general) is applying it to a collection of news articles to identify common themes or topics such as science, politics, finance, etc. However, one shortcoming of LDA is that it doesn’t work well with shorter texts such as tweets. This is where more recent short text topic modeling (STTM) approaches, some of which build upon LDA, come in handy and perform better!

This series of posts is designed to show and explain how to use Python to apply a specific STTM approach (Gibbs Sampling Dirichlet Mixture Model, or GSDMM) to health tweets from Twitter. It combines data scraping/cleaning, programming, data visualization, and machine learning. I will cover all the topics in the following 4 articles in order:

Part 2: Cleaning and Preprocessing Tweets

Part 3: Applying Short Text Topic Modeling

Part 4: Visualize Topic Modeling Results

These articles will not dive into the details of LDA or STTM but rather explain their intuition and the key concepts to know. A reader interested in having a more thorough and statistical understanding of LDA is encouraged to check out these great articles and resources here and here.

As a prerequisite, be sure that Jupyter Notebook, Python, and Git are installed on your computer.

Alright, let’s continue!

PART 2: Cleaning and Preprocessing Tweets

In our previous article, we scraped tweets from Twitter using Twint and merged all the raw data into a single CSV file. Nothing has been removed or changed from the format of the data that was given to us by the scraper. The CSV is provided here for your reference so you can follow along if you are just joining us in part 2.

This article will focus on preprocessing the raw tweets. This step is important because raw tweets without preprocessing are highly unstructured and contain redundant and often problematic information. There’s a lot of noise in a tweet that we might not need or want depending on our objective(s):

‘How to not practice emotional distancing during social distancing. @HarvardHealth https://t.co/dSXhPqwywW #HarvardHealth https://t.co/H9tfffNAo0'

For instance, the hashtags, links, and @ handle references above may not be necessary for our topic modeling approach since those terms don’t really provide meaningful context for discovering inherent topics from the tweet. Plus, if we’d like to retain hashtags because they may serve us another analytical purpose, we will see shortly that there is already a hashtags column in our raw data that holds them all in a list.

Removing unnecessary columns and duplicate tweets

To begin, we will load our scraped tweets into a data frame. The original scraped data provided by Twint has a lot of columns, a handful of which contain null or NaN values. We will drop these and see which columns remain with actual values for all tweets.

import pandas as pd

tweets_df = pd.read_csv('data/health_tweets.csv')

tweets_df.dropna(axis='columns', inplace=True)

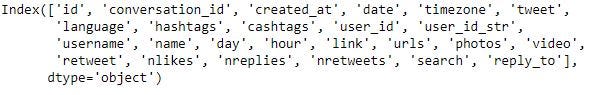

tweets_df.columns
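One detail worth keeping in mind: with `axis='columns'`, `dropna` removes every column that contains at least one missing value, not only columns that are entirely empty. Here is a minimal sketch on a toy data frame (the column names and values are invented for illustration and are not taken from the scraped file):

```python
import pandas as pd

# Toy stand-in for the scraped data; all values are invented.
toy_df = pd.DataFrame({
    "tweet": ["stay active", "eat well", "sleep more"],
    "place": [None, None, None],              # entirely empty column
    "quote_url": ["http://a", None, None],    # partly empty column
})

# Default how='any': a column is dropped if it has at least one missing value.
any_dropped = toy_df.dropna(axis="columns")

# how='all': only columns that are completely empty are dropped.
all_dropped = toy_df.dropna(axis="columns", how="all")

print(list(any_dropped.columns))
print(list(all_dropped.columns))
```

If a partly filled column matters for your analysis, `how='all'` (or listing the columns to keep by hand) may be the safer choice.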

Now we have a reduced set of columns and can choose which ones to keep. I’ll keep the ones that are most useful or descriptive for topic modeling or exploratory purposes, but your list may differ based on your objective(s).

tweets_df = tweets_df[['date', 'timezone', 'tweet',
                       'hashtags', 'username', 'name',
                       'day', 'hour', 'retweet', 'nlikes',
                       'nreplies', 'nretweets']]

As a sanity check, I always like to remove any duplicates in case the same tweet was posted multiple times or accidentally scraped multiple times!

tweets_df.drop_duplicates(inplace=True, subset="tweet")
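With `subset="tweet"`, two rows count as duplicates only when their tweet text matches exactly, and the first occurrence is kept by default. A small sketch on invented rows shows the behavior:

```python
import pandas as pd

# Invented rows: the first two tweets are identical, the third differs in case.
toy_df = pd.DataFrame({
    "username": ["a", "b", "a"],
    "tweet": ["wash your hands", "wash your hands", "Wash your hands"],
})

# Compare rows on the tweet text only; keep the first of each duplicate group.
deduped = toy_df.drop_duplicates(subset="tweet")

print(len(toy_df), "->", len(deduped))
```

The comparison is case-sensitive, so near-duplicates that differ in case or spacing survive this step.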

Preprocessing the actual tweets

Next, we want to clean up the text of each tweet in the data frame and remove any extra “noise”. First, we need to import (and install, if needed) some Python modules. We also define a string of punctuation symbols: the characters we want to remove from a tweet, since we only care about the words.

import pandas as pd

import re

import gensim

from nltk.stem import WordNetLemmatizer

punctuation = '!"$%&\'()*+,-./:;<=>?[\\]^_`{|}~•@'

Using the above modules, I find it easiest to create separate functions that are each responsible for a specific preprocessing task. This way, you can easily reuse them for any dataset you may be working with.

To remove any links, users, hashtags, or audio/video tags, we can use functions built on regular expressions (regex).
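The helper functions themselves are not reproduced in this text, so the following is only a sketch of what such regex helpers could look like. The function names, the exact patterns, and the assumption that audio/video tags appear as `VIDEO:` or `AUDIO:` prefixes are all illustrative guesses rather than the author's implementation.

```python
import re

def remove_links(tweet):
    """Remove http(s) links, bit.ly short links, and pic.twitter.com links."""
    tweet = re.sub(r"https?://\S+", "", tweet)
    tweet = re.sub(r"bit\.ly/\S+", "", tweet)
    tweet = re.sub(r"pic\.twitter\.com/\S+", "", tweet)
    return tweet

def remove_users(tweet):
    """Remove retweet markers ("RT @user") and @user mentions."""
    tweet = re.sub(r"RT\s+@\w+:?", "", tweet)
    tweet = re.sub(r"@\w+", "", tweet)
    return tweet

def remove_hashtags(tweet):
    """Remove #hashtags."""
    return re.sub(r"#\w+", "", tweet)

def remove_av(tweet):
    """Remove "VIDEO:" / "AUDIO:" tags (assumed format of audio/video tags)."""
    return re.sub(r"\b(?:VIDEO|AUDIO):", "", tweet)

# The example tweet quoted earlier in this article.
sample = ("How to not practice emotional distancing during social distancing. "
          "@HarvardHealth https://t.co/dSXhPqwywW #HarvardHealth "
          "https://t.co/H9tfffNAo0")

cleaned = remove_av(remove_hashtags(remove_users(remove_links(sample))))
cleaned = re.sub(r"\s+", " ", cleaned).strip()  # collapse leftover whitespace
print(cleaned)
```

Keeping one function per kind of noise makes it easy to switch a step off, for example to keep hashtags in the text for a different analysis.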

To apply the necessary natural language processing (NLP) techniques, namely tokenization and lemmatization, we use a few more functions.

Tokenization is the process of breaking down a document (a tweet) into words, punctuation marks, numeric digits, etc. Lemmatization is a method that converts words to their lemma or dictionary form by using vocabulary and morphological analysis of words. For example, the words studying, studied, and studies would be converted to study.

As part of the tokenization process, it is best practice to remove stop words, a set of commonly used words that don’t provide much meaningful context on their own. This allows us to focus on the important words instead. Examples include “how”, “the”, and “and”, among many more. We use a predefined list from Gensim and also exclude any words with fewer than 3 characters.
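The steps described above can be sketched as follows. To keep the example runnable without extra downloads, it uses a tiny hand-written stop-word set and a lookup table in place of a real lemmatizer; both are hypothetical stand-ins. In practice, Gensim's `gensim.parsing.preprocessing.STOPWORDS` and NLTK's `WordNetLemmatizer` could be plugged in through the same parameters.

```python
import re

# Tiny illustrative stop-word set; Gensim's much larger list
# (gensim.parsing.preprocessing.STOPWORDS) could be passed in instead.
DEMO_STOPWORDS = frozenset({"how", "the", "and", "are", "not", "for", "with"})

def tokenize(text, stopwords=DEMO_STOPWORDS, lemmatize=None, min_len=3):
    """Split text into lowercase word tokens, drop stop words and tokens
    shorter than min_len, then map each token through `lemmatize` if given."""
    tokens = re.findall(r"[a-z]+", text.lower())
    kept = [t for t in tokens if t not in stopwords and len(t) >= min_len]
    if lemmatize is None:
        return kept
    return [lemmatize(t) for t in kept]

# Hypothetical lookup standing in for a real lemmatizer. With NLTK one could
# pass `lambda t: WordNetLemmatizer().lemmatize(t, pos="v")` instead, which
# requires the WordNet corpus to be downloaded first.
DEMO_LEMMAS = {"studying": "study", "studied": "study", "studies": "study"}

tokens = tokenize("How the students studied and are studying for it",
                  lemmatize=lambda t: DEMO_LEMMAS.get(t, t))
print(tokens)
```

Note that NLTK's `WordNetLemmatizer.lemmatize` treats words as nouns by default, so mapping "studying" and "studied" to "study" requires passing `pos="v"`.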

Next, our main function preprocess_tweet applies all of the functions described above, in addition to lowercasing the tweet and stripping punctuation, extra spaces, and numbers. It returns a list of clean, lemmatized tokens for a given tweet. We have also defined a basic_clean function, which only cleans up the tweet without applying tokenization or lemmatization, for use cases that require all words intact.
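As a rough sketch of how these two functions could fit together (the bodies below are assumptions written for illustration, reusing the function names and the punctuation string from this article; the sample tweet and its link are invented):

```python
import re

punctuation = '!"$%&\'()*+,-./:;<=>?[\\]^_`{|}~•@'

def basic_clean(tweet):
    """Clean a tweet but keep all of its words (no tokenization or lemmatization)."""
    tweet = re.sub(r"https?://\S+", "", tweet)    # links
    tweet = re.sub(r"[@#]\w+", "", tweet)         # @users and #hashtags
    tweet = tweet.lower()
    tweet = re.sub("[" + re.escape(punctuation) + "]+", " ", tweet)
    tweet = re.sub(r"[0-9]+", "", tweet)          # numbers
    return re.sub(r"\s+", " ", tweet).strip()     # extra spaces

def preprocess_tweet(tweet, stopwords=frozenset(), lemmatize=None, min_len=3):
    """Return a list of clean tokens for one tweet.

    `stopwords` and `lemmatize` are hypothetical hooks where a stop-word
    list and a lemmatizer function can be supplied.
    """
    tokens = [t for t in basic_clean(tweet).split()
              if t not in stopwords and len(t) >= min_len]
    return [lemmatize(t) for t in tokens] if lemmatize else tokens

# Invented example tweet.
raw = "5 ways to sleep better tonight! @HarvardHealth https://t.co/abc123 #sleep"
print(basic_clean(raw))
print(preprocess_tweet(raw, stopwords={"to"}))
```

Removing links and handles before stripping punctuation matters here: once the punctuation is gone, a URL would fall apart into word-like fragments that are much harder to filter out.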

To make the functions easy to reuse in any file you are working in, I have put them all in a separate file that we can import and use to return a preprocessed data frame in a single run. Best of all, it can be used for any collection of text data, such as Reddit posts, article titles, etc.!

Complete Tweet Preprocessor Code

Let’s now try to apply all the preprocessing steps we’ve defined to our data frame and see the results.

from tweet_preprocessor import tokenize_tweets

tweets_df = tokenize_tweets(tweets_df)

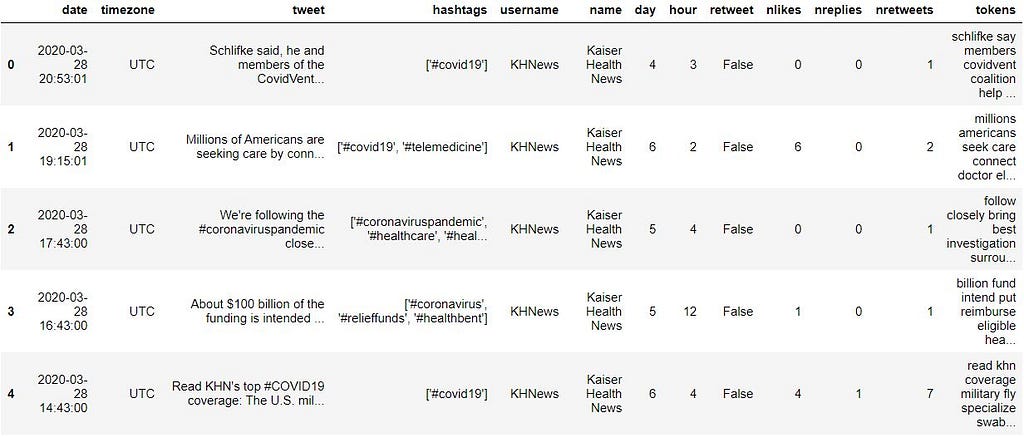

tweets_df.head(5)

We can see that a new tokens column was added which is looking good!
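For readers without the preprocessor file at hand, adding such a column comes down to applying a preprocessing function to every tweet. The helper below is a hypothetical sketch, not the author's `tokenize_tweets`; it takes the preprocessing function as a parameter, and a simple lowercase split stands in for the full pipeline.

```python
import pandas as pd

def add_tokens_column(df, preprocess, text_col="tweet"):
    """Return a copy of df with a `tokens` column built from `text_col`."""
    out = df.copy()  # leave the caller's data frame untouched
    out["tokens"] = out[text_col].apply(preprocess)
    return out

# Invented tweets for illustration.
toy_df = pd.DataFrame({"tweet": ["Sleep better tonight", "Eat more greens"]})

# A lowercase split stands in for a full preprocessing function.
result = add_tokens_column(toy_df, lambda t: t.lower().split())
print(result["tokens"].tolist())
```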

Before we wrap up, let’s make sure we save our preprocessed data set to a csv file. You can also find a copy of it here.

tweets_df.to_csv(r'data/preprocessed_tweets.csv', index=False, header=True)

In the next part of the series, we will get to the fun stuff: analyzing the tweets and running our short text topic modeling algorithm to see what trends and thematic health issues are present in our collection of tweets!

References and other useful resources

- Detailed explanation of Lemmatization and Stemming differences

- Explanation of stop words

- Preprocessor code that can be used for any set of text data

Tweet Topic Modeling Part 2: Cleaning and Preprocessing Tweets was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.