The NLP Model Forge

Last Updated on August 17, 2020 by Editorial Team

Author(s): Quantum Stat

Natural Language Processing

Unlocking Inference for 1,400 NLP Models

Streamlining an inference pipeline on the latest fine-tuned NLP model is a must for fast prototyping. However, the plethora of model architectures and NLP libraries to choose from can make prototyping a time-consuming task. To help, we’ve created The NLP Model Forge: a database and code generator for 1,400 fine-tuned models, carefully curated from top NLP research organizations such as Hugging Face, Facebook (ParlAI), DeepPavlov, and AI2.

The Forge is your destination for generating inference code for your NLP model of choice.

What Does the Forge Contain?

The Forge contains code for pre-trained models that were fine-tuned across a range of tasks, from classic text classification all the way to text-to-speech and commonsense reasoning. It lets the developer select several models at once and, with one click of a button, generate code templates in a ready-to-run format that can be pasted into a Colab notebook. The code blocks are formatted as batch and Python scripts and are simple enough to jump-start your own inference API!💥
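To give a feel for the kind of template described above, here is a minimal sketch of sentiment-analysis inference with the Hugging Face `transformers` library. It is not the Forge's actual output: the model name, the helper names `load_classifier` and `classify`, and the usage text are assumptions picked for illustration.

```python
# In a Colab cell, install the dependency first (shell command):
#   !pip install transformers


def load_classifier(model_name="distilbert-base-uncased-finetuned-sst-2-english"):
    """Download the model and wrap it in a text-classification pipeline."""
    # Imported lazily so classify() below can be used without the library.
    from transformers import pipeline
    return pipeline("sentiment-analysis", model=model_name)


def classify(texts, classifier):
    """Run the classifier and pair every input text with its predicted label."""
    predictions = classifier(texts)  # list of {"label": ..., "score": ...}
    return [
        (text, pred["label"], round(pred["score"], 3))
        for text, pred in zip(texts, predictions)
    ]


# Usage (the first call downloads the model weights):
#   clf = load_classifier()
#   for text, label, score in classify(["Add your input text here."], clf):
#       print(f"{label} ({score}): {text}")
```

Keeping the model loading separate from the scoring step means the same `classify` helper works with any callable that returns `label`/`score` dictionaries, which is handy when swapping models during prototyping.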

Current tasks available in the Forge

- Sequence Classification | topic classification and sentiment analysis

- Text Generation

- Question Answering

- Token Classification | NER and part-of-speech tagging

- Summarization

- Natural Language Inference

- Conversational AI

- Machine Translation

- Text-to-Speech

- Commonsense Reasoning

One of the best features of the Forge (besides its diversity of architectures, languages, and libraries) is the metadata description attached to each model. Different tasks require different metadata to guide the developer toward the right model. For example, for machine translation we’ve added source language and target language columns, and for sequence classification, token classification, and NLI we’ve added a “labels” column that shows which labels the model has been trained to infer.
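To make the idea of task-specific metadata concrete, here is a small sketch of how such a catalogue could be represented and filtered. The `ModelEntry` class, its field names, and the three example rows are all hypothetical; they are not taken from the Forge's real database.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelEntry:
    """One row of a hypothetical model catalogue (field names are assumed)."""
    name: str
    task: str
    library: str
    source_lang: Optional[str] = None  # used for machine translation only
    target_lang: Optional[str] = None  # used for machine translation only
    labels: List[str] = field(default_factory=list)  # classification / NLI only


# Made-up example rows, one per metadata style mentioned in the text.
CATALOGUE = [
    ModelEntry("example-sentiment", "sequence-classification", "transformers",
               labels=["NEGATIVE", "POSITIVE"]),
    ModelEntry("example-en-de", "machine-translation", "transformers",
               source_lang="en", target_lang="de"),
    ModelEntry("example-nli", "natural-language-inference", "transformers",
               labels=["entailment", "neutral", "contradiction"]),
]


def find_translators(catalogue, source, target):
    """Return translation models for the given language pair."""
    return [m for m in catalogue
            if m.task == "machine-translation"
            and m.source_lang == source and m.target_lang == target]


def models_with_label(catalogue, label):
    """Return models whose label set includes `label`."""
    return [m for m in catalogue if label in m.labels]
```

With this layout, `find_translators(CATALOGUE, "en", "de")` picks out the English-to-German entry, and `models_with_label(CATALOGUE, "entailment")` picks out the NLI entry.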

Ok… So How Does It Work?

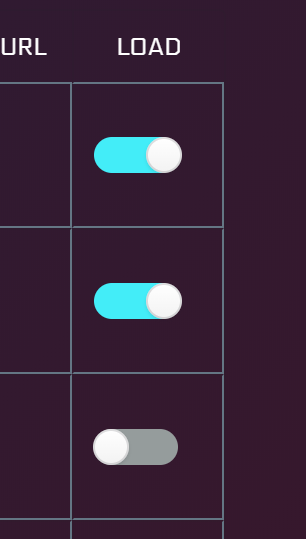

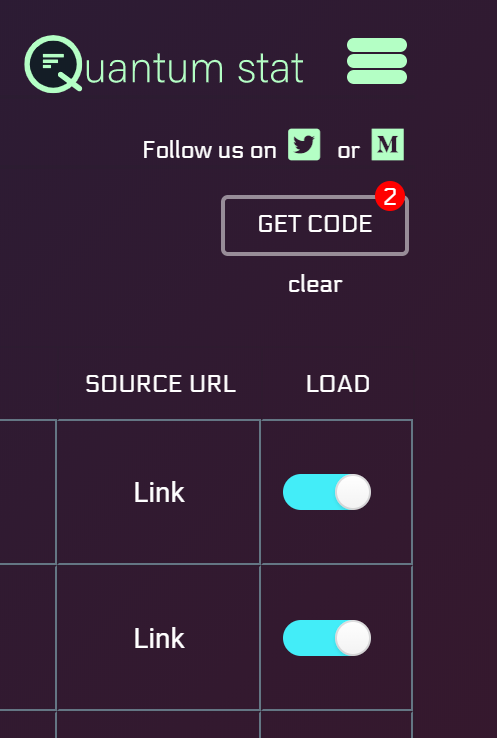

First, select one or more models on the Forge home page by switching on the toggle button in the “load” column.

As you select your models, you will notice that the “Get Code” button begins to tally your model selections.

When you’re satisfied with what you’ve selected, click the “Get Code” button.

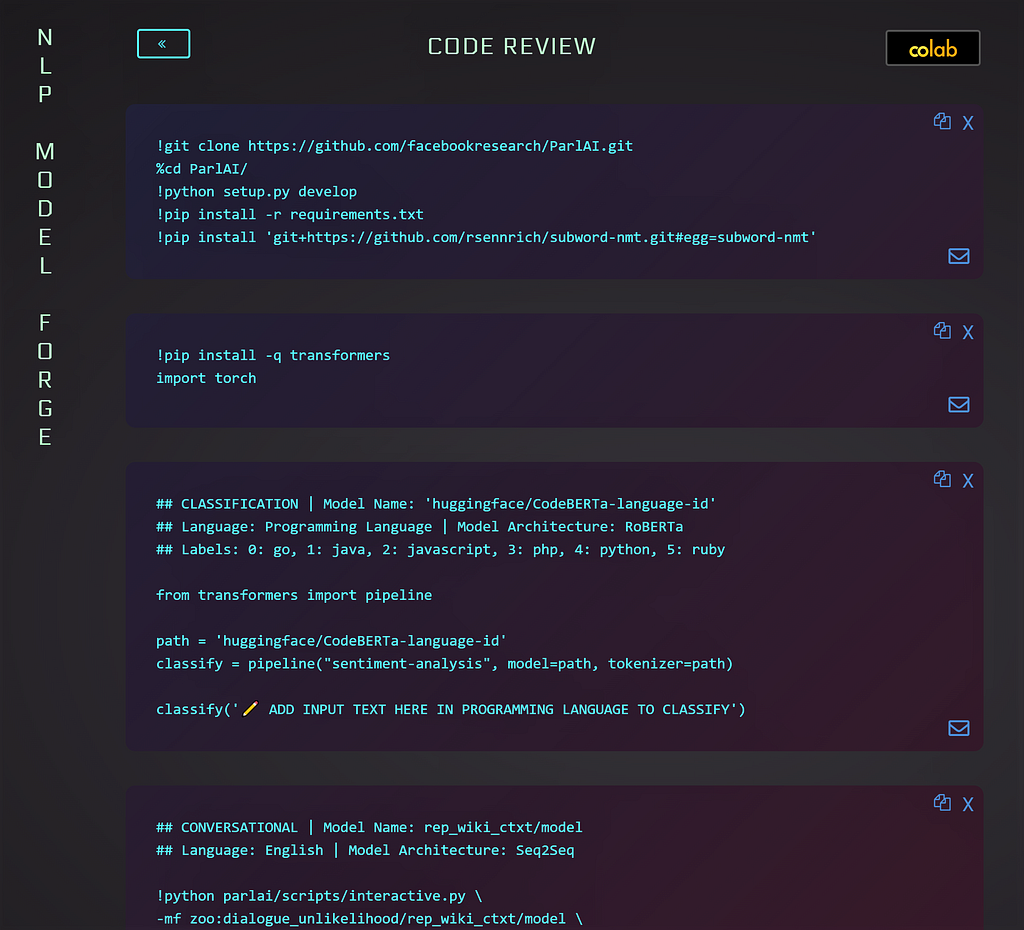

You have now generated code blocks to run inference on your models! Each block is programmatically labeled with relevant metadata so that it is easier to see what each model does. The great part about the Forge is that it already knows which imports and dependencies each block requires, depending on the library behind the model you selected. 👇
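One way to picture this step is a lookup from library name to setup lines, plus a small template function. The sketch below is only an illustration of that idea, not the Forge's implementation; `LIBRARY_SETUP`, `render_block`, `render_notebook`, and the comment format are all assumed names.

```python
# Hypothetical lookup: the install command and import line each library needs.
LIBRARY_SETUP = {
    "transformers": ("!pip install transformers", "from transformers import pipeline"),
    "parlai": ("!pip install parlai", "import parlai"),
}


def render_block(model_name, task, library, labels=None):
    """Build one ready-to-paste code block as a string."""
    install_cmd, import_line = LIBRARY_SETUP[library]
    # Metadata goes in as comments so the reader can see what the model does.
    header = [f"# Model: {model_name}", f"# Task: {task}"]
    if labels:
        header.append(f"# Labels: {', '.join(labels)}")
    body = [install_cmd, import_line, 'text = ""  # add your input text here']
    return "\n".join(header + body)


def render_notebook(selections):
    """Join the blocks for every selected model, separated by blank lines."""
    return "\n\n".join(render_block(**sel) for sel in selections)
```

Calling `render_notebook` with one dictionary per selected model returns a single string, which is roughly what a "copy everything" button would place on the clipboard.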

💡 inner monologue 💡

We also highlight the source language, the places where you add text inputs, and the places where you can adjust decoding parameters (if required). Look for the emojis!

Now, at this stage, you can do one of several things. You can…

✔ Edit the code right on the web page (delete code blocks you don’t want, or alter the code directly on the page at your discretion).

✔ Hit the email button to send the code blocks to yourself or your friends.

✔ Copy each code block to the clipboard and paste it on your local machine.

But the most powerful choice is to click that “Colab” button. If you do, two things happen at once:

✔ All code blocks on the page will be copied to the clipboard.

✔ A new Colab page will open.

Now all that’s left is to press Ctrl/Command + V in the new notebook, and all the code blocks get pasted in! You are now ready to run inference!

💡 inner monologue 💡

Colab continues to be a powerful destination for prototyping ML models because you can use its free GPU and benefit from very fast download speeds for large data files. This comes in handy when a model can be 1 GB or larger and you don’t want to download it locally.

We hope you enjoy traveling through the Forge! We hope this platform helps you not only understand the variations in model architectures but also take control of developing your own inference API.

We will continue to improve the platform to bring you the latest and most diverse models in the world. Stay tuned for more updates. If you have any questions/suggestions along the way, you can always email us at:

info [at] quantumstat [dot] com

Cheers,

Ricky Costa | Quantum Stat | www.quantumstat.com

P.S. For updates, you can follow us on Twitter and subscribe to the newsletter on our website.

The NLP Model Forge was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

