Q Learning — Deep Reinforcement Learning

Last Updated on January 21, 2025 by Editorial Team

Author(s): Sarvesh Khetan

Originally published on Towards AI.

Table of Contents

1. Problem Statement

2. Value Functions

3. Q Learning

3.a. Theory

3.b. Code

3.c. Problems with Q Learning

4. V Learning

5. Resources

Problem Statement

Let's say our model is playing ping pong and is currently in a given game state (diagram below). We need to predict which action (up / down / stationary) the model should take in this situation.

If we succeed in creating a model that takes the correct action for every situation / state, then we can simply run this model in a loop to play the entire game against an opponent!
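The "model in a loop" idea can be sketched roughly as below. This is only an illustration: `ToyPongEnv`, its `reset` / `step` methods, and the reward rule are hypothetical stand-ins for a real game, not part of the original notebook.

```python
ACTIONS = ["up", "down", "stationary"]  # the three actions from the text


class ToyPongEnv:
    """Hypothetical stand-in for a real game: ends after a fixed number of steps."""

    def __init__(self, max_steps=5):
        self.max_steps = max_steps
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # the "state" is just the step counter here

    def step(self, action):
        self.t += 1
        reward = 1.0 if action == "down" else 0.0  # arbitrary toy reward
        done = self.t >= self.max_steps
        return self.t, reward, done


def play_episode(env, policy):
    """Ask the policy for an action in every state until the game ends."""
    state = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
    return total_reward


def always_down(state):
    return "down"


print(play_episode(ToyPongEnv(), always_down))  # 5.0
```

In a real setup, `policy` would be the trained model and the environment would be the actual game.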

Value Functions

We will discuss two types of value functions

Q Function (Action Value Function)

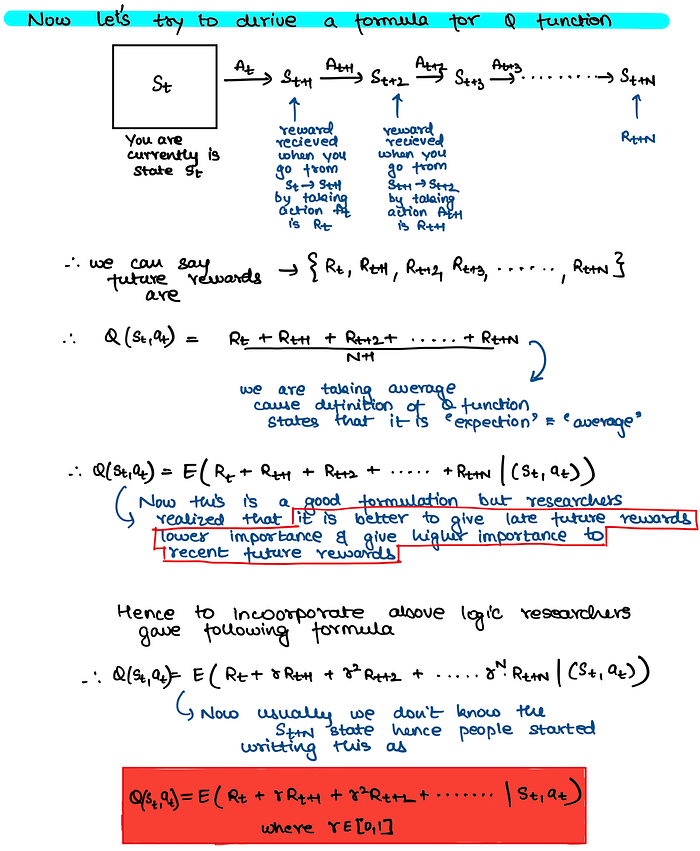

The Q function represents the total expected future reward when you take an action A in state S(t). Let's understand this with the help of an example.

Based on the above scenario, which action would you prefer taking? Obviously the 'down' action, because it leads to a higher future reward. Hence the Q function tells us whether taking an action A in state S(t) is good or not!

Now let us define the Bellman Optimality Equation for the Q function.
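In its standard textbook form, the Bellman optimality equation for the Q function can be written as follows. The notation is assumed here rather than taken from the original figures: $\gamma$ is the discount factor, $r_t$ is the reward for moving from $s_t$ to $s_{t+1}$, and $a'$ ranges over the actions available in the next state.

```latex
Q^{*}(s_t, a_t) = \mathbb{E}\left[\, r_t + \gamma \max_{a'} Q^{*}(s_{t+1}, a') \;\middle|\; s_t, a_t \right]
```

In words: the value of taking $a_t$ in $s_t$ is the immediate reward plus the discounted value of acting as well as possible from the next state onwards.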

If we train a neural network model to approximate this function, then it is called Q Learning.
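To make the training signal concrete, here is a minimal sketch of the usual Bellman target `r + gamma * max Q(s', a')` for a single transition. The function names, the `done` flag, and the default `gamma` are assumptions for illustration, not taken from the original notebook.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """Bellman target for one transition: r + gamma * max over next-state Q values."""
    if done:
        # No future reward after the game has ended.
        return reward
    return reward + gamma * max(next_q_values)


def squared_td_error(predicted_q, target):
    """Squared difference between the network's prediction and the target."""
    return (target - predicted_q) ** 2


# Hypothetical numbers: reward 1.0, next-state Q values for up / down / stationary.
target = td_target(1.0, [0.5, 2.0, -1.0], done=False, gamma=0.9)
print(target)
print(squared_td_error(2.0, target))
```

The network's prediction for the action actually taken is pushed towards this target, typically by minimizing the squared error.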

V Function (State Value Function)

If we train a neural network model to approximate this function, then it is called V Learning.
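For reference, the optimal state value function is usually related to the optimal action value function as follows. The symbols $V^{*}$ and $Q^{*}$ are standard textbook notation assumed here.

```latex
V^{*}(s_t) = \max_{a} Q^{*}(s_t, a)
```

So the V function scores a state as a whole, while the Q function scores each action within that state.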

Q Learning

Since there are only three possible actions in the game, i.e. up / down / stationary, the model architecture will look something like this:

In the above architecture, we can replace the FFNN with CNNs or Transformers.
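A minimal sketch of such a network, written with NumPy, could look like this. It takes a state vector and outputs one Q value per action. The state size of 6, the hidden size of 16, and the feature values are hypothetical choices for illustration; the original notebook may use a different framework and architecture.

```python
import numpy as np

ACTIONS = ["up", "down", "stationary"]  # the three actions from the text


def init_q_network(state_dim, hidden_dim, n_actions, seed=0):
    """Randomly initialise a one-hidden-layer feed-forward network."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, size=(state_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, 0.1, size=(hidden_dim, n_actions)),
        "b2": np.zeros(n_actions),
    }


def q_values(params, state):
    """Forward pass: state vector in, one Q value per action out."""
    hidden = np.maximum(0.0, state @ params["W1"] + params["b1"])  # ReLU
    return hidden @ params["W2"] + params["b2"]


def greedy_action(params, state):
    """Pick the action with the highest predicted Q value."""
    return ACTIONS[int(np.argmax(q_values(params, state)))]


params = init_q_network(state_dim=6, hidden_dim=16, n_actions=len(ACTIONS))
state = np.array([0.5, 0.2, -0.1, 0.3, 0.4, 0.6])  # hypothetical state features
print(q_values(params, state))
print(greedy_action(params, state))
```

The output layer has exactly one unit per action, which is why the number of possible actions directly sets the size of the last layer.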

As understood above, we have to obtain the reward R(t) by playing the game. To avoid repeating this work, we keep storing the tuple {state S(t), action A taken in state S(t), next state S(t+1), reward R received during the transition from S(t) to S(t+1) due to action A}, so that we can reuse it later! This store is called a Replay Buffer.
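A replay buffer along these lines can be sketched with the standard library alone. The class name `ReplayBuffer`, the `capacity` parameter, and the uniform random sampling are assumptions for illustration, not necessarily what the original notebook does.

```python
import random
from collections import deque, namedtuple

# Same fields as the tuple described above.
Transition = namedtuple("Transition", ["state", "action", "next_state", "reward"])


class ReplayBuffer:
    def __init__(self, capacity):
        # A bounded deque drops the oldest transitions once it is full.
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, next_state, reward):
        self.memory.append(Transition(state, action, next_state, reward))

    def sample(self, batch_size):
        # Uniformly sample stored transitions for a training batch.
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.push(state=t, action="up", next_state=t + 1, reward=0.0)

print(len(buffer))  # 3, because only the newest three transitions are kept
batch = buffer.sample(2)
print(batch)
```

During training, batches sampled from the buffer are used to update the network instead of relying only on the most recent step of the game.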

Code

The implementation is in the notebook CV/reinforcement_learning/ping_pong_dqn.ipynb in the khetansarvesh/CV repository on github.com.

Problems with Q Learning

- Q learning can't be used when the output layer is huge, i.e. when a huge number of actions are possible in the game, because this means training a larger network, which requires a lot of compute.

- Such training won't be feasible when compute is limited.
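As a rough back-of-the-envelope illustration of the points above, the size of a fully connected output layer grows linearly with the number of actions. The hidden size of 512 and the action counts below are hypothetical numbers chosen only to show the trend.

```python
def output_layer_params(hidden_dim, n_actions):
    """Weights plus biases of a fully connected output layer."""
    return hidden_dim * n_actions + n_actions


for n_actions in (3, 1_000, 1_000_000):
    print(n_actions, output_layer_params(512, n_actions))
# 3 1539
# 1000 513000
# 1000000 513000000
```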

V Learning

Just like above, here also we can create a model like this:

But here we don't know how to train this model, since we can't formulate an optimization problem for it. Later, in other RL algorithms, we will see how to use this network!

More Resources

Many other advanced Q learning algorithms have been published in papers, and these can be found here along with their code implementations!


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.