LLaMA Architecture: A Deep Dive into Efficiency and Mathematics

Last Updated on February 5, 2025 by Editorial Team

Author(s): Anay Dongre

Originally published on Towards AI.

In recent years, transformer-based large language models (LLMs) have revolutionized natural language processing (NLP). Meta AI’s LLaMA (Large Language Model Meta AI) stands out as one of the most efficient and accessible models in this domain. LLaMA’s design leverages innovations in transformer architecture to achieve competitive performance with fewer parameters, making it more accessible for researchers and businesses with limited computational resources. This article provides an in-depth exploration of the LLaMA architecture, including its mathematical foundations, architectural innovations (such as rotary positional embeddings), and production-level training code on a small dataset using PyTorch.

We begin with an overview of the transformer architecture before delving into LLaMA-specific modifications. We then walk through the mathematics behind self-attention, rotary positional embeddings, and the normalization techniques used in LLaMA. Finally, we present a complete training pipeline that demonstrates fine-tuning a LLaMA-like model on a custom dataset.

1. Background: The Transformer Architecture

1.1 Overview

Transformers, introduced by Vaswani et al. in 2017, transformed NLP by enabling parallel processing and capturing long-range dependencies without recurrent structures. The key components of a transformer are:

- Self-Attention Mechanism: Allows each token in a sequence to weigh the importance of every other token.

- Feedforward Neural Network (FFN): Applies non-linear transformations to the outputs of the self-attention layer.

- Layer Normalization and Residual Connections: Ensure stable gradient flow and efficient training.

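Mathematically, for an input sequence represented by a matrix X (of shape n×d for sequence length n and embedding dimension d), the self-attention mechanism is computed as:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

where:

- $Q = XW^Q$, $K = XW^K$, and $V = XW^V$ are the query, key, and value matrices obtained from X through learned projections,

- $d_k$ is the dimension of the key vectors.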

This formulation allows the model to focus on different parts of the input sequence simultaneously, capturing both local and global relationships.
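The scaled dot-product attention described above can be sketched in a few lines of plain Python. This is a minimal illustration on nested lists, not an efficient implementation; it skips the learned projections and passes the same toy matrix `X` as queries, keys, and values, which is an assumption made only to keep the example short.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention; Q, K, V are lists of row vectors."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V))
                    for j in range(len(V[0]))])
    return out

# Toy input: 3 tokens, embedding dimension 2 (illustrative values).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = attention(X, X, X)  # identity projections, for illustration only
```

Each row of `Y` is a weighted average of the rows of `X`, with weights that sum to 1 for every token.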

1.2 Limitations of Standard Transformers

While powerful, standard transformers have some challenges:

- High Computational Cost: Especially when scaling to large sequences.

- Fixed Positional Encodings: Typically, absolute positional encodings may not generalize well for very long contexts.

- Memory Footprint: Large parameter counts require significant computational resources.

2. LLaMA Architecture: Innovations and Improvements

LLaMA builds upon the standard transformer architecture while introducing several key optimizations designed to improve efficiency and scalability.

2.1 Decoder-Only Transformer Design

LLaMA uses a decoder-only transformer architecture similar to GPT models. In this design, the model generates text autoregressively, predicting one token at a time given all previous tokens. This choice simplifies the architecture by focusing on language modeling without the need for an encoder.

2.2 Pre-Normalization

Instead of the traditional “post-norm” (normalization after each sub-layer), LLaMA employs pre-normalization, where the normalization layer (RMSNorm in LLaMA, a simplified variant of LayerNorm) is applied before the self-attention and feedforward layers. Mathematically, if x is the input to a sub-layer (e.g., attention), the transformation is:

$$y = x + \text{SubLayer}(\text{Norm}(x))$$

This approach improves training stability, especially for very deep networks, by ensuring that the input to each sub-layer has a standardized scale.
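The difference between the two orderings is easier to see side by side. The sketch below is an assumption-laden toy: `norm` is a simple unit-RMS scaling and `toy_sublayer` is a hypothetical stand-in for attention or the feedforward network. Only the placement of the normalization relative to the residual connection matters here.

```python
import math

def norm(x, eps=1e-6):
    """Scale x to unit root-mean-square (a simple stand-in normalization)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]

def pre_norm_block(x, sublayer):
    """y = x + sublayer(norm(x)): normalize the input, keep the residual raw."""
    return [a + b for a, b in zip(x, sublayer(norm(x)))]

def post_norm_block(x, sublayer):
    """y = norm(x + sublayer(x)): the original Transformer ordering."""
    return norm([a + b for a, b in zip(x, sublayer(x))])

def toy_sublayer(h):
    """Hypothetical stand-in for attention or the feedforward network."""
    return [0.5 * v for v in h]

x = [3.0, 4.0]
y_pre = pre_norm_block(x, toy_sublayer)
y_post = post_norm_block(x, toy_sublayer)
```

In the pre-norm variant the residual path carries `x` through unchanged, so the sub-layer always sees a normalized input while the skip connection stays an identity mapping.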

2.3 Rotary Positional Embeddings (RoPE)

One of the hallmark features of LLaMA is its use of rotary positional embeddings (RoPE). Unlike traditional absolute positional embeddings, RoPE encodes relative positions of tokens in a mathematically elegant way.

Mathematical Explanation of RoPE

For each token, instead of simply adding a fixed vector, RoPE rotates the query and key vectors in a multi-dimensional space according to their position. If θ is a rotation angle that is a function of the token position p and a base frequency ω (for example, θ = pω), then a vector x is rotated as:

$$\text{RoPE}(x, p) = x \cos\theta + \text{rotate}(x) \sin\theta$$

Here, the function rotate(x) represents a 90-degree rotation in the embedding space, applied to each pair of dimensions. This method has two key benefits:

- Scalability: It generalizes well to longer sequences because the relative angle between tokens remains consistent.

- Efficiency: No additional parameters are needed compared to learned positional embeddings.
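The rotation x·cos θ + rotate(x)·sin θ can be sketched in plain Python as follows. The choices here are assumptions for illustration: adjacent dimensions are paired (some implementations pair dimension i with i + d/2 instead), and the per-pair frequency is `base ** (-2i/d)` with `base = 10000`, the value used in the RoPE paper.

```python
import math

def rotate_90(x):
    """Rotate each (x[2i], x[2i+1]) pair by 90 degrees: (a, b) -> (-b, a)."""
    out = []
    for i in range(0, len(x), 2):
        out += [-x[i + 1], x[i]]
    return out

def rope(x, p, base=10000.0):
    """Apply x*cos(theta) + rotate_90(x)*sin(theta), theta_i = p * base**(-2i/d)."""
    d = len(x)
    rot = rotate_90(x)
    out = []
    for j in range(d):
        theta = p * base ** (-(2 * (j // 2)) / d)  # one frequency per pair
        out.append(x[j] * math.cos(theta) + rot[j] * math.sin(theta))
    return out

def dot(a, b):
    return sum(u * v for u, v in zip(a, b))

q = [1.0, 2.0, 3.0, 4.0]   # toy query vector
k = [0.5, -1.0, 2.0, 0.0]  # toy key vector
s1 = dot(rope(q, 5), rope(k, 2))      # positions 5 and 2, offset 3
s2 = dot(rope(q, 105), rope(k, 102))  # positions 105 and 102, same offset
```

The two scores `s1` and `s2` agree up to floating-point error, because the attention score depends only on the offset between the two positions, not on where they sit in the sequence.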

Simplified Explanation

Imagine you have a set of vectors that represent words, and you want to know not just the word identities but also their order. RoPE “rotates” these vectors by an angle proportional to their position. When you compare two tokens, the relative angle (difference in rotation) encodes their distance in the sequence, which is essential for understanding context.

2.4 Parameter Efficiency and Grouped-Query Attention (GQA)

Later LLaMA models (the larger Llama 2 variants and Llama 3) reduce memory use through grouped-query attention (GQA). This mechanism partitions the query heads into groups, and all heads in a group share a single key and value projection, which shrinks the key/value parameters and the key-value cache without significantly compromising performance. The mathematics here is an extension of standard multi-head attention, where instead of fully independent heads, groups of heads share projections:

$$\text{head}_i = \text{softmax}\left(\frac{Q_i K_g^\top}{\sqrt{d_k}}\right) V_g, \qquad Q_i = XW_i^Q,\; K_g = XW_g^K,\; V_g = XW_g^V$$

where i indexes query heads and g indexes groups (g is the group that head i belongs to). This sharing enables the model to maintain a high degree of expressiveness while lowering the parameter count.
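A small sketch makes the sharing concrete: it maps each query head to the key/value head it reads from and compares the number of cached key/value elements with and without grouping. The head counts, layer count, and sequence length are illustrative assumptions, not a specific LLaMA configuration, and both helper functions are hypothetical names.

```python
def kv_head_for(query_head, n_heads, n_kv_heads):
    """Index of the key/value head shared by this query head."""
    group_size = n_heads // n_kv_heads
    return query_head // group_size

def kv_cache_elements(n_layers, n_kv_heads, head_dim, seq_len):
    """Number of cached key and value elements for one sequence."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len

n_heads, n_kv_heads = 8, 2  # illustrative sizes only
mapping = [kv_head_for(h, n_heads, n_kv_heads) for h in range(n_heads)]

# Standard multi-head attention: one key/value head per query head.
mha = kv_cache_elements(n_layers=4, n_kv_heads=8, head_dim=64, seq_len=1024)
# Grouped-query attention: 8 query heads share 2 key/value heads.
gqa = kv_cache_elements(n_layers=4, n_kv_heads=2, head_dim=64, seq_len=1024)
```

With these numbers, query heads 0 to 3 read from key/value head 0, heads 4 to 7 read from head 1, and the key-value cache is four times smaller than with one key/value head per query head.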

3. LLaMA in Practice: Autoregressive Text Generation

3.1 Autoregressive Generation Process

LLaMA, like other decoder-only models, uses an autoregressive method to generate text. At each step, the model:

- Takes the current sequence of tokens.

- Computes the self-attention over all tokens.

- Predicts the next token using a softmax layer over the vocabulary.

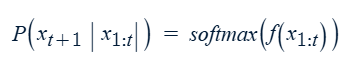

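Mathematically, if $x_{1:t}$ represents the sequence, then the probability of the next token $x_{t+1}$ is:

$$P(x_{t+1} \mid x_{1:t}) = \text{softmax}(f(x_{1:t}))$$

where f represents the transformer’s forward pass, which produces one score (logit) per vocabulary entry. The process repeats until a termination token is generated.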
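The last step, turning the scores from the forward pass into a next-token distribution, can be sketched as below. The four-entry vocabulary and the logit values are made up for illustration; a real model would produce one logit per entry of a vocabulary with tens of thousands of tokens.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]

vocab = ["the", "cat", "sat", "<eos>"]  # hypothetical toy vocabulary
logits = [1.0, 3.0, 0.5, -2.0]          # made-up output of the forward pass
probs = softmax(logits)

# Greedy choice: the token with the highest probability.
next_token = vocab[probs.index(max(probs))]
```

Here `next_token` is `"cat"`, the entry with the largest logit; sampling from `probs` instead of taking the maximum would give more varied text.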

3.2 Example Scenario

Consider the input prompt:

“The capital of France is”

LLaMA processes the tokens through multiple transformer blocks. Using its autoregressive nature, it predicts the next token with the highest probability (e.g., “Paris”), appends it to the sequence, and continues generating further tokens until the sentence is complete.
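The generation loop for this prompt can be sketched with a toy stand-in for the model. `toy_model` is hypothetical: it looks only at the last token and returns made-up scores from a lookup table, whereas a real LLaMA model attends to the whole sequence. The loop itself (score, pick the best token, append, stop at the end token) follows the process described above.

```python
def toy_model(tokens):
    """Hypothetical stand-in for the transformer: made-up next-token scores."""
    table = {
        "is": {"Paris": 4.0, "London": 1.0, "<eos>": 0.1},
        "Paris": {".": 3.0, "<eos>": 1.0},
        ".": {"<eos>": 5.0},
    }
    return table.get(tokens[-1], {"<eos>": 1.0})

def greedy_generate(prompt_tokens, model, eos="<eos>", max_new_tokens=10):
    """Repeatedly append the highest-scoring token until eos or the length cap."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        scores = model(tokens)
        # Argmax over scores; applying softmax first would not change the winner.
        next_token = max(scores, key=scores.get)
        if next_token == eos:
            break
        tokens.append(next_token)
    return tokens

output = greedy_generate(["The", "capital", "of", "France", "is"], toy_model)
```

With this table, `output` ends in `"Paris"` followed by `"."`, after which the end token stops the loop.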

4. Mathematical Foundations Simplified

Let’s break down the key mathematical concepts in simpler terms:

4.1 Self-Attention Revisited

The self-attention mechanism calculates relationships between tokens. Imagine you have a sentence: “The cat sat on the mat.” For each word, the model computes:

- Query (what this word is asking for)

- Key (what this word offers)

- Value (the content of this word)

The similarity between words is computed as a dot product of queries and keys. Dividing by $\sqrt{d_k}$ (a scaling factor) prevents the numbers from becoming too large. The softmax function then converts these scores into probabilities (weights) that sum to 1. Finally, these weights multiply the value vectors to produce a weighted sum, which becomes the output for that token.
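A short variance calculation shows why the scaling factor is the square root of $d_k$ specifically. It assumes, purely for illustration, that the components of a query q and a key k are independent with mean 0 and variance 1:

```latex
\begin{aligned}
q \cdot k &= \sum_{i=1}^{d_k} q_i k_i, \\
\mathbb{E}[q \cdot k] &= 0, \\
\operatorname{Var}(q \cdot k) &= \sum_{i=1}^{d_k} \operatorname{Var}(q_i k_i) = d_k, \\
\operatorname{Var}\!\left(\frac{q \cdot k}{\sqrt{d_k}}\right) &= 1.
\end{aligned}
```

With $d_k = 64$, for instance, unscaled scores would have a standard deviation of about 8, which pushes softmax towards nearly one-hot weights and very small gradients. After scaling, the standard deviation is back to about 1.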

4.2 Rotary Positional Embeddings (RoPE)

RoPE mathematically “rotates” each word’s vector based on its position. Think of each word vector as an arrow in space. By rotating these arrows, the model can encode how far apart words are. When two arrows are compared, the difference in rotation tells you the relative distance between words. This is essential for understanding sentence structure without needing extra parameters for each position.
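For a single pair of dimensions, the claim that only the difference in rotation matters can be written out directly. In this sketch, q and k are two-dimensional query and key vectors at positions p and s, ω is the base frequency, and R(α) is the standard 2D rotation matrix (symbols chosen here for illustration):

```latex
R(\alpha) = \begin{pmatrix} \cos\alpha & -\sin\alpha \\ \sin\alpha & \cos\alpha \end{pmatrix},
\qquad R(\alpha)^\top R(\beta) = R(\beta - \alpha)

\langle R(p\omega)\,q,\; R(s\omega)\,k \rangle
  = q^\top R(p\omega)^\top R(s\omega)\,k
  = q^\top R\big((s - p)\,\omega\big)\,k
```

The attention score therefore depends on the positions only through the offset s − p, which is exactly the relative-distance behavior described above.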

4.3 Pre-Normalization

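In pre-normalization, every input to a sub-layer is normalized before processing. With standard layer normalization, this means the data is shifted and scaled so that its mean is zero and its variance is one. Mathematically, given an input x, the normalized value $\hat{x}$ is:

$$\hat{x} = \frac{x - \mu}{\sigma + \epsilon}$$

where:

- μ is the mean of x,

- σ is the standard deviation,

- ϵ is a small constant to avoid division by zero.

LLaMA itself uses RMSNorm, a simplified variant that skips the mean subtraction and divides x by its root mean square before applying a learned gain.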

By normalizing the input, the network ensures that the scale of the data does not vary too much from layer to layer, which helps in faster and more stable training.
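The two normalizations are close cousins, as a plain-Python sketch shows. LLaMA uses RMSNorm, which drops the mean subtraction of standard layer normalization. Two details below are assumptions of this sketch: ϵ is placed inside the square root, as most libraries do (slightly different from the σ + ϵ form above), and the learned gain defaults to all ones.

```python
import math

def layer_norm(x, eps=1e-5):
    """Standard layer normalization: zero mean, unit variance."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) for v in x]

def rms_norm(x, gain=None, eps=1e-5):
    """RMSNorm: divide by the root mean square, then apply a per-feature gain."""
    gain = gain if gain is not None else [1.0] * len(x)
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for g, v in zip(gain, x)]

x = [2.0, 4.0, 6.0, 8.0]  # toy activations
ln = layer_norm(x)        # centered: mean is 0
rn = rms_norm(x)          # not centered: only the scale is normalized
```

`ln` has mean 0 and variance close to 1, while `rn` keeps its positive mean and has a mean square close to 1. RMSNorm saves the mean computation at each layer.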

5. Production Considerations and Optimizations

When deploying or fine-tuning LLaMA models in production, consider the following:

5.1 Data Preprocessing

- Normalization and Cleaning: Ensure that input texts are cleaned (e.g., removing HTML tags, extra whitespace).

- Tokenization: Use the tokenizer associated with your model to ensure consistency.
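A minimal cleaning step along these lines might look as follows. `clean_text` is a hypothetical helper, and stripping tags with a regular expression is a rough heuristic that suits simple markup; heavily nested or malformed HTML would call for a real parser.

```python
import html
import re

def clean_text(raw):
    """Strip HTML tags, decode entities, and collapse runs of whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)  # drop anything that looks like a tag
    text = html.unescape(text)           # decode entities such as &amp;
    return re.sub(r"\s+", " ", text).strip()

sample = "<p>LLaMA &amp; RoPE</p>\n\n  <b>decoder-only</b>   model"
cleaned = clean_text(sample)
```

For the sample above, `cleaned` is `"LLaMA & RoPE decoder-only model"`. The cleaned text would then go through the model's own tokenizer.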

5.2 Training Infrastructure

- GPU/TPU Usage: Leverage distributed training if using large datasets.

- Checkpointing: Regularly save checkpoints to avoid loss of progress.

5.3 Hyperparameter Tuning

- Learning Rate Schedules: Experiment with warmup and decay schedules.

- Regularization: Techniques such as dropout or weight decay are crucial to avoid overfitting.

- Batch Size and Gradient Accumulation: Adjust based on hardware capabilities.
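One common shape for a warmup-and-decay schedule is linear warmup followed by cosine decay. The sketch below is one possible choice rather than a recommendation; `lr_at` is a hypothetical helper and every default value (peak rate, warmup length, total steps, floor) is a placeholder to tune for the task.

```python
import math

def lr_at(step, max_lr=3e-4, warmup_steps=100, total_steps=1000, min_lr=3e-5):
    """Learning rate at a given step: linear warmup, then cosine decay."""
    if step < warmup_steps:
        # Ramp linearly from max_lr / warmup_steps up to max_lr.
        return max_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (max_lr - min_lr) * cosine

schedule = [lr_at(s) for s in range(1000)]
```

The rate climbs to `max_lr` over the first 100 steps and then falls smoothly towards `min_lr`. In a training loop, the value for the current step would be written into the optimizer before each update.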

5.4 Monitoring and Evaluation

- Logging: Use tools like TensorBoard to monitor loss and other metrics.

- Validation Metrics: Regularly evaluate using a validation set to check for overfitting.

- Error Analysis: Analyze model errors to guide further improvements.
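A simple way to act on validation metrics is an early-stopping check: stop when the most recent evaluations no longer improve on the best earlier validation loss. `should_stop` and its `patience` parameter are hypothetical names for this sketch, and the loss values are made up.

```python
def should_stop(val_losses, patience=2):
    """True when the last `patience` evaluations failed to beat the earlier best."""
    if len(val_losses) <= patience:
        return False  # not enough history yet
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before

improving = [2.0, 1.5, 1.2, 1.3, 1.1]    # latest evaluation sets a new best
overfitting = [2.0, 1.5, 1.2, 1.3, 1.4]  # validation loss is rising again

stop_a = should_stop(improving)
stop_b = should_stop(overfitting)
```

Here `stop_a` is `False` and `stop_b` is `True`. A rising validation loss alongside a falling training loss is the usual sign of overfitting.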

5.5 Deployment

- Model Compression: Techniques like quantization or distillation can reduce model size for deployment.

- API Endpoints: Use frameworks such as FastAPI or Flask for serving your model in production.

- Scaling: Consider cloud solutions (e.g., AWS, GCP) to scale inference services.
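To give a feel for what quantization does, the sketch below applies symmetric 8-bit quantization to a handful of made-up weights. It is a toy, per-tensor scheme chosen for clarity; production toolchains typically quantize per channel or per block and handle outliers more carefully.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integers."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # made-up weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four (for float32), and the rounding error per weight is at most half of `scale`.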

References

1. Vaswani et al., “Attention Is All You Need” (2017)

2. Su et al., “RoFormer: Enhanced Transformer with Rotary Position Embedding” (2021)

3. “LLaMA: Open and Efficient Foundation Language Models”

4. “The Llama 3 Herd of Models”

5. “Llama 2: Open Foundation and Fine-Tuned Chat Models”
