Image Classification with Neural Networks: Part 2

Last Updated on July 26, 2023 by Editorial Team

Author(s): Mugunthan

Originally published on Towards AI.

Deep Learning

If you missed my earlier post, have a read here. It gives a short introduction to the convolutional layer.

One of the disadvantages of using a feed-forward network on images is the large number of learnable parameters. Convolutional neural networks use a scheme called parameter sharing: we assume that the weights learned at one spatial location can be reused at every other location in the same depth slice. So all neurons at a given depth use the same weights and bias.

Let me explain with an example. The input image size is 64x64x3, the filter size is 3×3, and the number of filters is 32. With a stride of 1 and no padding, the output will be 62x62x32.

For the formula used in this calculation, please refer here. Without parameter sharing, the number of learnable parameters in this layer would be as follows.

Parameters associated with each neuron = 3x3x3 + 1 (for bias) = 28 parameters.

We have 32x62x62 = 123008 neurons in the output volume. Combining the two, we have 123008 x 28 = 3444224 parameters, which is extremely high for a single layer.

Now when parameter sharing is used, each of the 32 filters needs only one set of 28 parameters, so we get 28 x 32 = 896, far fewer than the roughly 3.4 million above.
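The arithmetic in this example can be checked with a few lines of plain Python. This is only a sketch: the helper names `conv_output_size` and `params_per_filter` are made up for illustration, and it assumes a stride of 1 and no padding, which is what gives the 62x62 output.

```python
def conv_output_size(input_size, filter_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size - filter_size + 2 * padding) // stride + 1


def params_per_filter(filter_size, in_channels):
    """Weights of one filter plus a single bias term."""
    return filter_size * filter_size * in_channels + 1


# Values taken from the example: 64x64x3 input, 32 filters of size 3x3.
input_size = 64
in_channels = 3
filter_size = 3
num_filters = 32

out_size = conv_output_size(input_size, filter_size)       # 62
per_neuron = params_per_filter(filter_size, in_channels)   # 28
num_neurons = out_size * out_size * num_filters            # 123008

# Without sharing, every output neuron owns its own weights and bias.
without_sharing = num_neurons * per_neuron                 # 3444224

# With sharing, all neurons in one depth slice reuse one filter.
with_sharing = num_filters * per_neuron                    # 896

print(f"output volume: {out_size}x{out_size}x{num_filters}")
print(f"without sharing: {without_sharing} parameters")
print(f"with sharing:    {with_sharing} parameters")
```

Changing `stride` or `padding` in `conv_output_size` shows how the unshared count shifts with the output size, while the shared count stays at 896 because it depends only on the filters.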

In some cases parameter sharing can be relaxed, for example when an object is expected only in the center or the corners of the image. There is then no need to search for that object across the entire image, so the network can learn location-specific weights instead.

There are other layers that help a CNN extract information from images. A few are:

- Pooling layer

- Activation layer

- Dropout layer

- Batch Normalization layer

- Fully Connected layer

Let's discuss a couple of the important layers here.

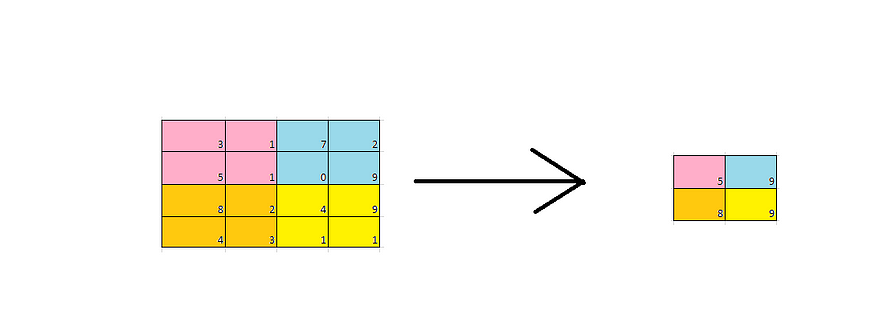

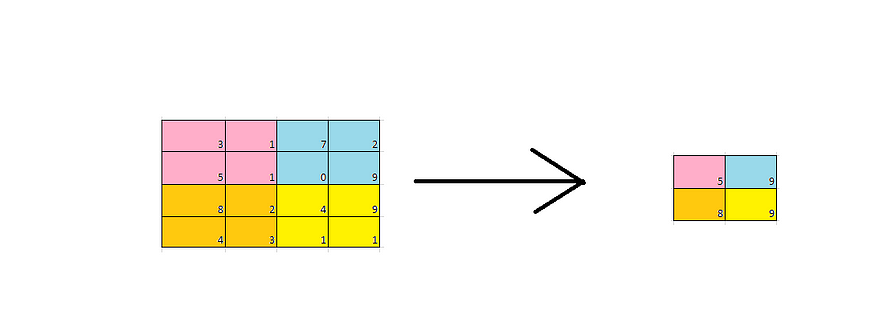

Pooling layer:

Often in a CNN, as we go deeper into the network we need to reduce the spatial dimensions while still extracting information from the image. The deeper the CNN, the more complex the patterns it can extract from the image.

The pooling layer helps by downsampling the representation. Like a convolutional layer, a pooling layer has a fixed-shape window that slides over the input and computes the output according to the stride, but it has no learnable parameters.

Various types of pooling layers exist, but the most popular are max pooling, which keeps the largest value in each window, and average pooling, which keeps the mean of each window.

A basic implementation of pooling layers takes only a few lines of Python.
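As a minimal sketch of such an implementation, the NumPy function below slides a square window over a single-channel 2D input and takes either the maximum or the mean of each window. The function name `pool2d`, its parameters, and the 4x4 sample input are assumptions chosen for illustration, not the author's original code.

```python
import numpy as np


def pool2d(image, pool_size=2, stride=2, mode="max"):
    """Apply max or average pooling to a 2D array (one channel)."""
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    reduce_fn = np.max if mode == "max" else np.mean

    height, width = image.shape
    out_h = (height - pool_size) // stride + 1
    out_w = (width - pool_size) // stride + 1
    output = np.zeros((out_h, out_w), dtype=float)

    for i in range(out_h):
        for j in range(out_w):
            row, col = i * stride, j * stride
            # The window has a fixed shape and no learnable weights.
            window = image[row:row + pool_size, col:col + pool_size]
            output[i, j] = reduce_fn(window)
    return output


image = np.array([[1, 3, 2, 4],
                  [5, 6, 1, 2],
                  [7, 2, 9, 0],
                  [1, 4, 3, 8]], dtype=float)

max_pooled = pool2d(image, mode="max")   # [[6, 4], [7, 9]]
avg_pooled = pool2d(image, mode="avg")   # [[3.75, 2.25], [3.5, 5.0]]
print(max_pooled)
print(avg_pooled)
```

With a 2x2 window and a stride of 2, the 4x4 input is reduced to 2x2, so each spatial dimension is halved. A multi-channel input would apply the same function to each channel independently.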

Similar results can be achieved with the TensorFlow package in Python.
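A sketch of the TensorFlow route, assuming TensorFlow 2.x is installed, uses the Keras layers `MaxPooling2D` and `AveragePooling2D`. The small 4x4 input is an illustrative assumption; Keras pooling layers expect a 4D tensor of shape (batch, height, width, channels), so the input is reshaped first.

```python
import numpy as np
import tensorflow as tf

image = np.array([[1, 3, 2, 4],
                  [5, 6, 1, 2],
                  [7, 2, 9, 0],
                  [1, 4, 3, 8]], dtype=np.float32)

# Add batch and channel dimensions: (1, 4, 4, 1).
batch = tf.constant(image.reshape(1, 4, 4, 1))

max_pool = tf.keras.layers.MaxPooling2D(pool_size=2, strides=2)
avg_pool = tf.keras.layers.AveragePooling2D(pool_size=2, strides=2)

# Drop the batch and channel dimensions again for printing.
tf_max = max_pool(batch).numpy().reshape(2, 2)
tf_avg = avg_pool(batch).numpy().reshape(2, 2)

print(tf_max)
print(tf_avg)
```

Neither layer creates any trainable variables, which matches the point that pooling has no learnable parameters.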

Activation layer:

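This layer introduces non-linearity into the neural network. Without it, a stack of convolutional and fully connected layers would behave like a single linear transformation. Some implementations of activation functions can be found here.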
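To make this concrete, here is a minimal NumPy sketch of three widely used activation functions. The choice of ReLU, sigmoid, and tanh, along with the function names and sample input, is an assumption for illustration; the post does not say which functions the linked implementations cover.

```python
import numpy as np


def relu(x):
    """Zero out negative values, pass positive values through."""
    return np.maximum(0, x)


def sigmoid(x):
    """Squash values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def tanh(x):
    """Squash values into the range (-1, 1)."""
    return np.tanh(x)


x = np.array([-2.0, 0.0, 2.0])

print(relu(x))      # negative input becomes 0, the rest is unchanged
print(sigmoid(x))   # 0.5 at x = 0
print(tanh(x))      # 0 at x = 0
```

Each function is applied element-wise, so the output always has the same shape as the input.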

TensorFlow provides ready-made implementations of the common activation functions.
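As a brief sketch, assuming TensorFlow 2.x is installed, the functions in `tf.nn` can be applied directly to a tensor, or an activation can be attached to a Keras layer by name. The sample input and the particular layer settings are illustrative assumptions.

```python
import tensorflow as tf

x = tf.constant([-2.0, 0.0, 2.0])

# Standalone activation functions applied element-wise.
relu_out = tf.nn.relu(x).numpy()
sigmoid_out = tf.nn.sigmoid(x).numpy()
tanh_out = tf.nn.tanh(x).numpy()

print(relu_out)
print(sigmoid_out)
print(tanh_out)

# In a model, the activation is often passed to the layer itself.
conv = tf.keras.layers.Conv2D(filters=32, kernel_size=3, activation="relu")
```

Passing `activation="relu"` to the convolutional layer applies ReLU to the layer's output, so a separate activation layer is not needed in that case.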

The other layers will be covered in future posts.

Thanks for reading!
