How Words Learn to Pay Attention: Transformers Part 1

Last Updated on January 15, 2025 by Editorial Team

Author(s): Anushka sonawane

Originally published on Towards AI.

It’s not about how fast you go, but about how smart you can be while going fast.

If you’ve ever wondered how Google Translate or Siri understands you, or how ChatGPT creates sentences that sound human, well, guess what? A big part of the answer is Transformers. Now, I know this might sound technical, but trust me, by the end of this blog, you’ll have a clear picture of how this model works and why it’s become such a big deal in the world of AI.

At its core, a Transformer is a type of deep learning model designed to handle sequential data — basically, any data that comes in a sequence, like sentences in a paragraph, words in a sentence, or even pixels in an image.

Think of it like a smart assistant. If you tell it something, it tries to understand your sentence, breaks it down, and then gives you an answer that makes sense. But instead of reading one word at a time like humans do, a transformer can look at all the words in a sentence at once and figure out how they all relate to each other.

Life Before Transformers — RNNs and LSTMs

What were RNNs?

Imagine you are playing a message-passing game. You stand in a line with your friends, and the first person whispers a message to the next, and so on until it reaches the last person. Sounds fun, right?

Now here’s the catch — the longer the chain, the more garbled the message becomes. RNNs (Recurrent Neural Networks) are like that chain. They pass information step by step, word by word, and sometimes… they just forget what was said earlier. That’s a problem when the sentences are long.

They face a couple of significant challenges:

➤ Vanishing and Exploding Gradients: During training, RNNs can encounter issues where the learning updates become either too small (vanishing) or too large (exploding). This makes it hard for the network to learn long-term patterns.

➤ Sequential Processing Bottleneck: RNNs handle one piece of information at a time, making them slow, especially with large datasets. This sequential nature also limits their ability to utilize modern computing hardware efficiently.

➤ Short-Term Memory: Just like in the game, where the original message gets lost over time, RNNs struggle to retain information from earlier parts of a long sequence, making them less effective at understanding context that spans long stretches of text.
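
To make the message-passing game concrete, here is a minimal sketch of a one-number RNN. The names `run_rnn` and `gradient_scale` and the weight values are made up for illustration, and `gradient_scale` deliberately ignores the activation function, so treat it as a rough picture rather than a real training computation.

```python
import math

def run_rnn(inputs, w_hidden=0.5, w_input=1.0):
    """Process inputs one at a time, carrying a single hidden state forward."""
    hidden = 0.0
    for x in inputs:  # each step has to wait for the previous one to finish
        hidden = math.tanh(w_hidden * hidden + w_input * x)
    return hidden

def gradient_scale(w_hidden, steps):
    """Rough size of the learning signal after flowing back through `steps` steps.

    Simplification (assumption): multiply by the recurrent weight once per
    step and ignore the tanh derivative.
    """
    return w_hidden ** steps

# The same "message" (1.0) arriving first vs. last in a five-step sequence.
print(run_rnn([1.0, 0.0, 0.0, 0.0, 0.0]))  # early message has mostly faded
print(run_rnn([0.0, 0.0, 0.0, 0.0, 1.0]))  # recent message is still strong

print(gradient_scale(0.5, 20))  # shrinks toward zero (vanishing)
print(gradient_scale(1.5, 20))  # grows very large (exploding)
```

The loop is also why RNNs are slow: step five cannot start until step four is done, so the work cannot be spread across the sequence in parallel.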

How did LSTMs Help?

Now, let’s say we improve the message-passing game. Instead of whispering everything, you only pass what’s important — like handing notes instead of repeating full sentences. This is what LSTMs (Long Short-Term Memory networks) did.

They introduced something called a cell state (a running memory), along with gates that decide:

What to remember.

What to forget.
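
As a rough sketch of that remember/forget decision, the cell state update can be written in one line. The function name `lstm_cell_update` is hypothetical, and the gate values are passed in by hand here; in a real LSTM the gates are computed from the current word and the previous hidden state using learned weights.

```python
def lstm_cell_update(cell_state, forget_gate, input_gate, candidate):
    """One simplified cell-state update: keep part of the old, add part of the new.

    Gates are numbers between 0 and 1 (assumed to be given, not learned here).
    """
    return forget_gate * cell_state + input_gate * candidate

memory = 2.0
# Mostly keep the old memory (0.9) and let in only a little of the new note (0.1).
memory = lstm_cell_update(memory, forget_gate=0.9, input_gate=0.1, candidate=5.0)
print(memory)
```

A forget gate near 1 lets old information pass through many steps almost untouched, which is how LSTMs hold on to context longer than plain RNNs.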

LSTMs eased the forgetting problem, but they came with challenges of their own:

➤ Complexity and Interpretability: The intricate design of LSTMs, with multiple components deciding what to keep or discard, makes them harder to understand and interpret.

➤ Computational Intensity: Managing these notes and decisions requires significant computational resources, leading to longer training times and increased demand for processing power.

➤ Difficulty with Hierarchical Structures: LSTMs may struggle to effectively model complex language structures, limiting their ability to generalize in tasks involving intricate hierarchies.

➤ Scalability Issues: The step-by-step processing approach of LSTMs limits their scalability on modern hardware, making it challenging to train large models efficiently.

Imagine you’re reading a sentence like “The cat chased the mouse and the dog barked loudly at the cat.”

In older models like RNNs or LSTMs, words are processed one by one, in order, like reading a book from left to right.

But here’s the issue:

As the model processes each word, its memory of earlier words fades. It’s like reading a long story and forgetting more of the beginning the further you go. So, by the time it reaches the end of the sentence, it may have lost important details from the start (like the first “cat” being related to the second “cat”).

How Self-Attention Solves It:

Self-attention fixes this by looking at all words at once, no matter their position in the sentence. So, even if words are far apart, like the two “cats”, they can still connect to each other. Every word can pay attention to the others, remembering what’s important, even from far away.

What is Attention?

Imagine you’re reading a sentence, like “The cat sat on the mat.” Your brain automatically understands that “sat” refers to the cat and “on the mat” describes where the cat sat. You are paying “attention” to different words and their relationships to understand the sentence’s meaning. Transformers work similarly!

➤ Step 1: Initial Word Embeddings

Computers don’t understand words like we do. They need numbers. So, every word in the sentence is turned into a vector (a fancy word for a list of numbers).

Example Sentence: “The cat chased the mouse and the dog barked loudly at the cat.”

These vectors are like the computer’s version of the words. But here’s the thing: Initially, these vectors have no sense of context. The word “dog” is just floating around in its own world, clueless about the “cat” or the “barked.”
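
Here is a small sketch of what that lookup might look like. The three-number vectors below are invented for illustration (real models learn vectors with hundreds of dimensions), and `embed` is a hypothetical helper name.

```python
# Made-up 3-number embeddings, chosen by hand for this example only.
embeddings = {
    "cat":    [0.9, 0.8, 0.2],
    "mouse":  [0.2, 0.1, 0.9],
    "dog":    [1.0, 0.9, 0.1],
    "barked": [0.5, 0.6, 0.3],
}

def embed(words):
    """Look up the vector for each word. The lookup ignores neighboring words."""
    return [embeddings[w] for w in words]

print(embed(["dog", "barked"]))

# "dog" gets exactly the same numbers no matter which sentence it appears in.
print(embed(["dog", "barked"])[0] == embed(["cat", "dog"])[1])  # True
```

That last line is the "no sense of context" problem in action: the vector for a word is fixed until attention mixes in information from its neighbors.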

➤ Step 2: Calculating the Weights (Dot Product)

Now, let’s teach the computer to focus on what’s important. This is done by comparing each word with every other word in the sentence.

For the word “dog,” we ask:

- “How related is ‘dog’ to ‘cat’?”

- “How related is ‘dog’ to ‘mouse’?”

- And so on for all the words.

This comparison is done using a simple mathematical operation called the dot product (don’t worry about the math details). Think of it like giving scores for how well two words match.

Example scores for “dog”:

- With “cat” → High score (because dog and cat are natural rivals).

- With “barked” → Medium score (barking is something a dog does).

- With “mouse” → Low score (dogs don’t care much about mice).
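
If you are curious what the dot product looks like in practice, here is a minimal sketch. The vectors are made up and were picked by hand so the scores follow the high/medium/low pattern above; a trained model would learn its own values.

```python
def dot(a, b):
    """Multiply matching numbers from two vectors and add up the results."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical embeddings, hand-picked for this example.
embeddings = {
    "cat":    [0.9, 0.8, 0.2],
    "mouse":  [0.2, 0.1, 0.9],
    "dog":    [1.0, 0.9, 0.1],
    "barked": [0.5, 0.6, 0.3],
}

scores = {
    word: dot(embeddings["dog"], vector)
    for word, vector in embeddings.items()
    if word != "dog"
}

for word, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"dog . {word}: {score:.2f}")
# dog . cat: 1.64
# dog . barked: 1.07
# dog . mouse: 0.38
```

Vectors that point in similar directions produce a larger dot product, which is why it works as a "how well do these two words match" score.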

➤ Step 3: Normalizing the Weights

Now, the computer normalizes these scores so they add up to 1. This is like splitting 100% of your attention across the words, with the most relevant words getting the biggest share. For example:

- “cat” gets 0.5 (50% attention).

- “barked” gets 0.3 (30% attention).

- “mouse” gets 0.2 (20% attention).

To get there, divide each score by the sum of all the scores, so that together they add up to 1.

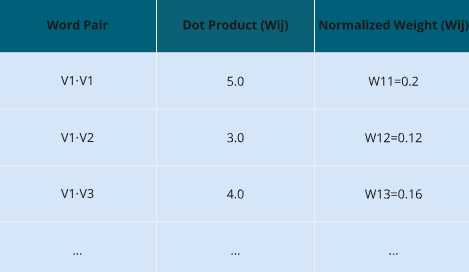

Here, Wij becomes the normalized weight.

For example:

If W11 = 5, W12 = 3, W13 = 4:

Sum = 5 + 3 + 4 = 12

Normalized weights: W11 = 5/12 ≈ 0.42, W12 = 3/12 = 0.25, W13 = 4/12 ≈ 0.33
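
The same arithmetic as a short sketch. `normalize_by_sum` mirrors the divide-by-the-total approach used in this blog. `softmax` is included as an aside: it is the normalization actual Transformer models use, and it is not part of the worked example above. Both function names are just illustrative.

```python
import math

def normalize_by_sum(scores):
    """Divide each score by the total so the results add up to 1."""
    total = sum(scores)
    return [s / total for s in scores]

def softmax(scores):
    """Exponentiate, then normalize. Also works when some scores are negative."""
    peak = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

raw_scores = [5, 3, 4]  # W11, W12, W13 from the example

print([round(w, 2) for w in normalize_by_sum(raw_scores)])  # [0.42, 0.25, 0.33]
print([round(w, 2) for w in softmax(raw_scores)])           # [0.67, 0.09, 0.24]
```

Notice that softmax gives the top score a noticeably bigger share than simple division does, while still summing to 1.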

➤ Step 4: Reweighing Word Embeddings

The goal of this step is to calculate a context-aware embedding for each word.

The embeddings need to capture relationships between words in the sequence. By combining embeddings of related words (weighted by attention), the model creates a representation of a word enriched with contextual information.

For example:

- In the sentence “The cat chased the mouse”, the embedding for “cat” should reflect its relationship with “chased” and “mouse.”

- Without this step, the embeddings would remain isolated and lack meaningful connections.

Using the normalized weights Wij, we compute the final embedding Y1 for V1:

Y1 = W11·V1 + W12·V2 + W13·V3

Substituting the normalized weights:

Y1 = 0.42·V1 + 0.25·V2 + 0.33·V3

This is repeated for all words so that each word gets some context from every other word in the sentence.
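
Here is the weighted sum for Y1 as a small sketch. The weights are the ones from the example; the two-number vectors V1, V2, V3 are invented purely so the arithmetic is easy to follow, and `weighted_sum` is a hypothetical helper name.

```python
def weighted_sum(weights, vectors):
    """Blend several vectors into one, scaling each by its attention weight."""
    dims = len(vectors[0])
    return [
        sum(w * v[d] for w, v in zip(weights, vectors))
        for d in range(dims)
    ]

# Made-up embeddings for three words.
V1 = [1.0, 0.0]
V2 = [0.0, 1.0]
V3 = [1.0, 1.0]

Y1 = weighted_sum([0.42, 0.25, 0.33], [V1, V2, V3])
print([round(y, 2) for y in Y1])  # [0.75, 0.58]
```

Y1 started out as V1 = [1.0, 0.0], and after this step it carries a bit of V2 and V3 as well. That blend is the "context" the word has picked up.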

This step-by-step process forms the basis of self-attention, where every word in a sentence can understand its relationship with all other words, creating embeddings enriched with context. This ability to capture global dependencies is what makes self-attention a powerful tool in transformer models for tasks like translation, summarization, and language modeling.
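
Putting the four steps together, a simplified version of self-attention fits in a few lines. This is a sketch of the process described in this blog, not a production implementation: the embeddings are made up, it normalizes by dividing by the sum (which assumes all scores are positive), and it leaves out pieces that real Transformers add, such as softmax and learned query, key, and value projections.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Simplified self-attention: score, normalize, and blend, for every word."""
    outputs = []
    for current in vectors:
        # Step 2: compare this word with every word (itself included).
        scores = [dot(current, other) for other in vectors]
        # Step 3: normalize so the weights add up to 1.
        total = sum(scores)
        weights = [s / total for s in scores]
        # Step 4: blend all embeddings using those weights.
        context = [
            sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(len(current))
        ]
        outputs.append(context)
    return outputs

# Step 1: hypothetical embeddings for a three-word sentence.
sentence = ["cat", "chased", "mouse"]
vectors = [[0.9, 0.8, 0.2], [0.4, 0.7, 0.5], [0.2, 0.1, 0.9]]

for word, new_vector in zip(sentence, self_attention(vectors)):
    print(word, [round(x, 2) for x in new_vector])
```

Unlike the RNN loop, no word has to wait for the one before it: each word's new embedding depends only on the original vectors, so all of them can be computed at the same time.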

Next up, we’re diving into Multi-Head Attention and breaking down the different stages of Transformers — get ready to see how these models work their magic!

