Face Data Augmentation. Part 1: Geometric Transformation

Last Updated on July 17, 2023 by Editorial Team

Author(s): Ainur Gainetdinov

Originally published on Towards AI.

The performance of deep neural networks has taken a big step forward over the last two decades. Every year, new architectures are devised that beat state-of-the-art results. However, a better architecture alone is not enough without a quality dataset, because the data has a big impact on final performance. Collecting and labeling diverse, accurate datasets can be laborious and expensive, and existing datasets usually don’t cover the full variety of the real data distribution, which is why data augmentation techniques are used. In this article, I will show how a dataset of human faces can be enriched with 3D geometric transformations to improve the performance of your model. An open-source implementation is available on GitHub [1].

Data augmentation is a technique that increases the amount of training data by applying different modifications to existing samples. Generic image augmentations fall into two categories: geometric transformations and color transformations. Geometric transformations include scaling, cropping, flipping, rotation, translation, etc., while color transformations include color jittering, noise addition, grayscale conversion, brightness/contrast adjustment, etc.
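
For face datasets, a geometric augmentation has to move the labels together with the pixels. Here is a minimal NumPy sketch with hypothetical function names and parameter ranges. It builds a random rotation/scale/translation matrix and a horizontal flip, and applies both to (x, y) landmark coordinates. The same 2x3 matrix could be passed to OpenCV's `cv2.warpAffine` to transform the image itself.

```python
import numpy as np


def random_affine(rng, center, max_angle_deg=15.0, scale_range=(0.9, 1.1), max_shift=10.0):
    """2x3 matrix that rotates and scales about `center`, then translates.

    The default ranges are hypothetical; tune them for your data.
    """
    angle = np.deg2rad(rng.uniform(-max_angle_deg, max_angle_deg))
    s = rng.uniform(*scale_range)
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    c, si = s * np.cos(angle), s * np.sin(angle)
    cx, cy = center
    # x' = s*R*(x - center) + center + t, written as a single 2x3 matrix
    return np.array([[c, -si, cx - c * cx + si * cy + tx],
                     [si, c, cy - si * cx - c * cy + ty]])


def apply_affine(M, pts):
    """Map (K, 2) points given as (x, y) through a 2x3 affine matrix."""
    return pts @ M[:, :2].T + M[:, 2]


def hflip(image, landmarks):
    """Mirror an image horizontally and move (x, y) landmarks with it.

    For semantic landmark sets (e.g. left/right eye points) the point order must
    also be swapped; that permutation is dataset-specific and omitted here.
    """
    w = image.shape[1]
    lm = landmarks.astype(float)  # copy, so the caller's array is untouched
    lm[:, 0] = (w - 1) - lm[:, 0]
    return image[:, ::-1], lm
```

Generic transforms like these change the pose and framing of a face but not its shape, which is the gap the 3D approach below addresses.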

Let’s think about how we can augment an image if we know that it contains a human face. How do we distinguish one person from another? Some facial features constitute identity, such as skin color, face shape, haircut, wrinkles, and eye color. One of the most distinctive is face shape. So if we change the shape of the face in an input image, the model being trained will see a slightly different person. Let’s use this property to augment our dataset.

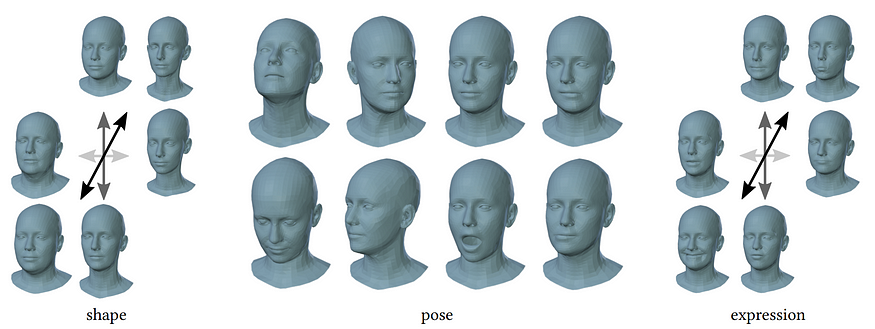

We will alter the geometry of a face using a 3D Morphable Model (3DMM), specifically the FLAME [2] model. A 3DMM is a three-dimensional mesh with parameters that control its shape, pose, and expression. It is constructed from three-dimensional meshes registered from scans of real people, so it can represent the distribution of real face shapes. Under the hood, it consists of a mean shape and a set of principal components that specify the directions in which shape and expression can change. The coefficients that weight these components, alpha and beta, are the parameters of the model.
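
In its simplest linear form, a 3DMM computes the vertices as V = V_mean + S·alpha + E·beta, where S and E hold the shape and expression principal components. This sketch assumes alpha holds the shape coefficients and beta the expression coefficients. FLAME itself names them β (shape) and ψ (expression) and adds articulated pose, which is omitted here. The arrays are random toy stand-ins for a real model's data, and `perturb_shape` with its `sigma` is a hypothetical way of sampling a new identity. The key point is that changing only the shape coefficients yields a per-vertex offset that does not depend on the expression.

```python
import numpy as np


def linear_3dmm(mean_shape, shape_basis, exp_basis, alpha, beta):
    """Vertices of a linear 3DMM: mean + shape_basis @ alpha + exp_basis @ beta.

    mean_shape: (N, 3); shape_basis: (N, 3, Ks); exp_basis: (N, 3, Ke);
    alpha: (Ks,) shape coefficients; beta: (Ke,) expression coefficients.
    """
    return mean_shape + shape_basis @ alpha + exp_basis @ beta


def perturb_shape(rng, alpha, sigma=0.5):
    """Sample a new identity by adding Gaussian noise to the shape coefficients.

    `sigma` is a hypothetical knob controlling how strong the change is.
    """
    return alpha + rng.normal(scale=sigma, size=alpha.shape)


# Toy stand-ins for a real model's arrays (FLAME's files are distributed separately).
rng = np.random.default_rng(0)
n_verts, n_shape, n_exp = 500, 10, 5
mean_shape = rng.normal(size=(n_verts, 3))
shape_basis = 0.01 * rng.normal(size=(n_verts, 3, n_shape))
exp_basis = 0.01 * rng.normal(size=(n_verts, 3, n_exp))

alpha = rng.normal(size=n_shape)  # e.g. obtained by fitting the model to a photo
beta = rng.normal(size=n_exp)
verts = linear_3dmm(mean_shape, shape_basis, exp_basis, alpha, beta)

new_alpha = perturb_shape(rng, alpha)
new_verts = linear_3dmm(mean_shape, shape_basis, exp_basis, new_alpha, beta)
offsets = new_verts - verts  # (N, 3) per-vertex shifts; expression is unchanged
```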

Before changing the face shape, we need to find the 3DMM parameters that correspond to our input photo. There are several ways to do this, such as optimization against landmarks, photometric optimization, or regression models that predict the parameters in one step. I used 2D landmark optimization because it is simple to implement and gives accurate results in reasonable time. Facial landmarks were detected on the input image with Dlib, and the 3DMM was fitted to the face image with the Adam optimizer, minimizing the mean squared error (MSE) between the detected landmarks and the projected model landmarks. It took about 150 iterations to converge.
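
Here is a simplified sketch of this fitting step. It is not the implementation in [1]. It assumes a scaled orthographic camera without head rotation, fits shape coefficients only (no expression), and takes the model's landmarks as a (K, 3) mean with a (K, 3, n) basis. The gradients are written out by hand to keep it dependency-free, where an autodiff framework would normally compute them. The 150 iterations match the count reported above; `fit_landmarks` and the learning rate are hypothetical.

```python
import numpy as np


def fit_landmarks(target_2d, lm_mean, lm_basis, n_iters=150, lr=0.05):
    """Fit alpha, scale s and 2D translation t with Adam so that
    s * (lm_mean + lm_basis @ alpha)[:, :2] + t matches target_2d (MSE loss)."""
    n = lm_basis.shape[2]
    params = {"alpha": np.zeros(n), "s": np.array(1.0), "t": np.zeros(2)}
    m = {key: np.zeros_like(val) for key, val in params.items()}  # 1st moments
    v = {key: np.zeros_like(val) for key, val in params.items()}  # 2nd moments
    b1, b2, eps = 0.9, 0.999, 1e-8
    losses = []
    for step in range(1, n_iters + 1):
        xy = (lm_mean + lm_basis @ params["alpha"])[:, :2]  # orthographic projection
        r = params["s"] * xy + params["t"] - target_2d       # residuals (K, 2)
        losses.append(float(np.mean(r ** 2)))
        g = 2.0 * r / r.size                                 # dMSE / dprediction
        grads = {
            "alpha": params["s"] * np.einsum("kd,kdn->n", g, lm_basis[:, :2, :]),
            "s": np.array(np.sum(g * xy)),
            "t": g.sum(axis=0),
        }
        for key in params:  # standard Adam update with bias correction
            m[key] = b1 * m[key] + (1 - b1) * grads[key]
            v[key] = b2 * v[key] + (1 - b2) * grads[key] ** 2
            m_hat = m[key] / (1 - b1 ** step)
            v_hat = v[key] / (1 - b2 ** step)
            params[key] = params[key] - lr * m_hat / (np.sqrt(v_hat) + eps)
    return params, losses
```

In a real pipeline, `target_2d` would be the detected landmark positions, and the model landmarks would come from points embedded on the 3DMM mesh.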

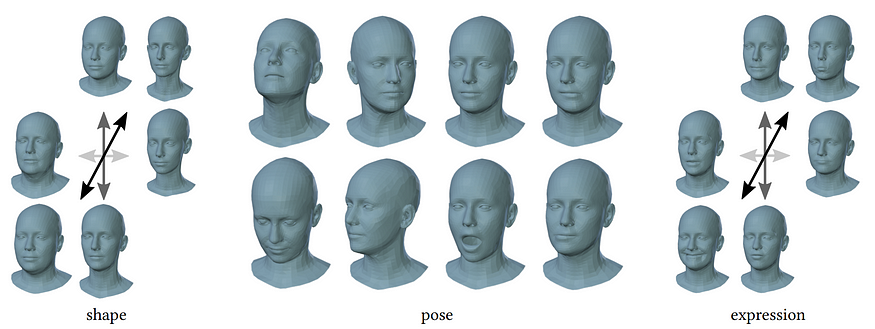

Now we are ready to modify the geometry of the face. We already have a three-dimensional mesh aligned with the face image. Changing the 3DMM’s shape parameters shifts the mesh vertices in image space, and these shifts tell us how to move the pixels of the image. To get a dense map of shifts, I used OpenGL to render the 3DMM mesh with the x and y shifts stored in place of vertex colors. One thing remains to consider: how to handle pixels outside the face mesh area. For these, I used extrapolation, which smoothly connects the changed face with the static background.

Once we have dense shift maps for the x and y directions, we can apply them to any type of label, such as facial landmarks, segmentation masks, or paired images. You can see examples of face augmentation with geometric transformation in the figure below. For more details, see the source code [1].
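
The warping steps can be sketched on the CPU with NumPy. This is an illustration under stated assumptions, not the repository’s OpenGL code, and all function names are hypothetical. `rasterize_attribute` fills triangles by barycentric interpolation instead of GPU rendering and has no depth test. The extrapolation is a simple Jacobi diffusion (Laplace’s equation) that pins the image border to zero shift. The deformed mesh is rasterized with backward offsets (old minus new position), so each output pixel can look up its source pixel. The undeformed mesh is rasterized with forward offsets, which move point labels such as landmarks. `xy_old` and `xy_new` are the projected (N, 2) vertex positions before and after the shape change.

```python
import numpy as np


def rasterize_attribute(xy, faces, attr, h, w):
    """CPU stand-in for rendering a per-vertex attribute as vertex color.

    xy: (N, 2) vertex positions in pixels; faces: (F, 3) vertex indices;
    attr: (N, C) values. Returns an (h, w, C) map and a bool coverage mask.
    No depth test: overlapping triangles simply overwrite each other.
    """
    out = np.zeros((h, w, attr.shape[1]))
    mask = np.zeros((h, w), dtype=bool)
    ys, xs = np.mgrid[0:h, 0:w]
    for i0, i1, i2 in faces:
        (x0, y0), (x1, y1), (x2, y2) = xy[i0], xy[i1], xy[i2]
        den = (y1 - y2) * (x0 - x2) + (x2 - x1) * (y0 - y2)
        if abs(den) < 1e-12:
            continue  # degenerate triangle
        # Bounding box of the triangle, clipped to the image.
        c0 = max(int(np.floor(min(x0, x1, x2))), 0)
        c1 = min(int(np.ceil(max(x0, x1, x2))), w - 1)
        r0 = max(int(np.floor(min(y0, y1, y2))), 0)
        r1 = min(int(np.ceil(max(y0, y1, y2))), h - 1)
        if c0 > c1 or r0 > r1:
            continue
        px, py = xs[r0:r1 + 1, c0:c1 + 1], ys[r0:r1 + 1, c0:c1 + 1]
        # Barycentric coordinates of every pixel in the box.
        b0 = ((y1 - y2) * (px - x2) + (x2 - x1) * (py - y2)) / den
        b1 = ((y2 - y0) * (px - x2) + (x0 - x2) * (py - y2)) / den
        b2 = 1.0 - b0 - b1
        inside = (b0 >= 0) & (b1 >= 0) & (b2 >= 0)
        vals = b0[..., None] * attr[i0] + b1[..., None] * attr[i1] + b2[..., None] * attr[i2]
        out[r0:r1 + 1, c0:c1 + 1][inside] = vals[inside]
        mask[r0:r1 + 1, c0:c1 + 1] |= inside
    return out, mask


def extrapolate(field, mask, iters=500):
    """Extend `field` smoothly outside `mask` (Jacobi iterations of Laplace's
    equation). Values inside the mask stay fixed; zero padding pins the image
    border to zero shift, i.e. a static background. Simple but slow."""
    fixed = mask[..., None]
    f = np.where(fixed, field, 0.0)
    for _ in range(iters):
        p = np.pad(f, ((1, 1), (1, 1), (0, 0)))
        avg = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0
        f = np.where(fixed, field, avg)
    return f


def bilinear_sample(img, x, y):
    """Sample an (h, w, C) array at float coordinates, clamping to the border."""
    h, w = img.shape[:2]
    x, y = np.clip(x, 0, w - 1), np.clip(y, 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = (x - x0)[..., None], (y - y0)[..., None]
    top = img[y0, x0] * (1 - wx) + img[y0, x1] * wx
    bot = img[y1, x0] * (1 - wx) + img[y1, x1] * wx
    return top * (1 - wy) + bot * wy


def warp_face(image, landmarks, xy_old, xy_new, faces, iters=500):
    """Warp an (h, w, C) image and move (K, 2) landmarks by the mesh deformation."""
    h, w = image.shape[:2]
    # Backward map: offset from each output pixel to its source pixel.
    back, m_back = rasterize_attribute(xy_new, faces, xy_old - xy_new, h, w)
    back = extrapolate(back, m_back, iters)
    # Forward map: where each original pixel moves to (used for point labels).
    fwd, m_fwd = rasterize_attribute(xy_old, faces, xy_new - xy_old, h, w)
    fwd = extrapolate(fwd, m_fwd, iters)
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    warped = bilinear_sample(image.astype(float), xs + back[..., 0], ys + back[..., 1])
    new_lm = landmarks + bilinear_sample(fwd, landmarks[:, 0], landmarks[:, 1])
    return warped, new_lm
```

Segmentation masks can be warped with the same backward map using nearest-neighbor instead of bilinear sampling. In practice, OpenCV’s `cv2.remap` performs the resampling step much faster than this pure-NumPy version.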

Now you can try it on your own datasets. This augmentation technique can help improve a model by making it more robust to input variations. In this article, we covered 3D geometric transformation. Texture modifications can further improve face datasets, and they will be the topic of the next article.

References

[1] GitHub code. https://github.com/ainur699/face_data_augmentation

[2] FLAME model. https://flame.is.tue.mpg.de/index.html

Published via Towards AI