Deploying Machine Learning Models as API using AWS

Last Updated on January 6, 2023 by Editorial Team

Last Updated on June 12, 2020 by Editorial Team

Author(s): Tharun Kumar Tallapalli

Machine Learning, Cloud Computing

A guide to accessing SageMaker machine learning model endpoints through API using a Lambda function.

As a machine learning practitioner, I used to build models. But building models alone is never sufficient for real-time products: ML models need to be integrated with web or mobile applications. One of the best ways to solve this problem is to deploy the model as an API and run inference whenever predictions are required.

The main advantage of deploying a model as an API is that ML engineers can keep their code separate from other developers' code and update the model without disrupting web or app developers.

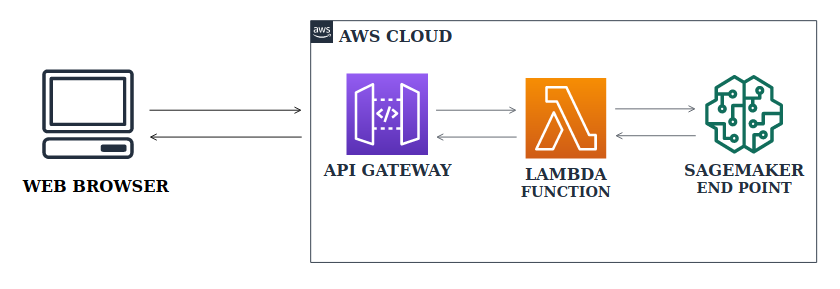

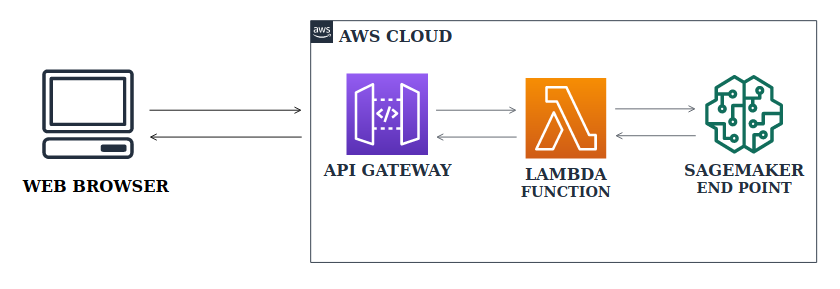

Workflow: The client sends a request to the API. The API is added as a trigger to the Lambda function, so each request invokes the function, which calls the SageMaker endpoint and returns the predictions to the client through the API.

In this article, I will build a simple classification model, deploy it as an API, and test the API using Postman.

Let’s get started! The steps we’ll be following are:

- Building SageMaker Model Endpoint.

- Creating a Lambda Function.

- Deploying as API.

- Testing with Postman.

Building SageMaker Model Endpoint

Let’s build an Iris Species Prediction Model.

Note: When training SageMaker built-in classification algorithms on CSV data, the target variable must be the first column (with no header row), and a continuous target should be converted into discrete class labels.
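The note above, together with step 1 below, can be sketched in plain Python. This is a minimal sketch, not the author's code: it assumes each iris record is four numeric features followed by the species name, and the helper names (`to_sagemaker_rows`, `train_validation_split`, `to_csv`) and the class order in `SPECIES` are hypothetical. It moves the label to the first column, encodes the species as integers 0 to 2, and serializes headerless CSV.

```python
import csv
import io
import random

# Assumed class order; the integer ids 0..2 become the labels the model predicts.
SPECIES = ["setosa", "versicolor", "virginica"]


def to_sagemaker_rows(records, label_index=-1, classes=SPECIES):
    """Move the label to the first column and encode it as an integer class id."""
    class_to_id = {name: i for i, name in enumerate(classes)}
    rows = []
    for record in records:
        features = list(record)
        label = features.pop(label_index)
        rows.append([class_to_id[label]] + [float(value) for value in features])
    return rows


def train_validation_split(rows, validation_fraction=0.2, seed=42):
    """Shuffle deterministically and hold out a fraction of rows for validation."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


def to_csv(rows):
    """Serialize rows as CSV with no header row."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()
```

In practice you would write `to_csv(train)` and `to_csv(validation)` to two local files and upload them to S3 for training.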

1. Create training and validation datasets to train and evaluate the model.

2. To train the model, get the image URI of the algorithm's container in the current region.

3. Set the hyperparameters for the model (you can find good hyperparameters with a SageMaker Autopilot experiment, or set your own manually).

4. Fit the model on the training and validation data.

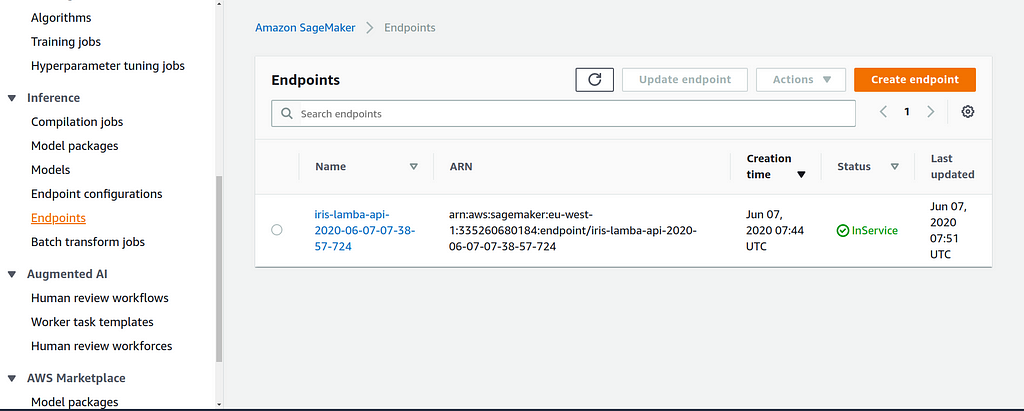

5. Now deploy the model to an endpoint.

You can view the endpoint and its configuration in the SageMaker console.
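Steps 2 to 5 might look roughly like this with the SageMaker Python SDK (v2). This is a sketch under assumptions the article does not state: the built-in XGBoost algorithm (container version `1.5-1`), the `ml.m5.large` instance type, and the bucket, prefix, role ARN, and endpoint name supplied by the caller are all placeholders. The hyperparameters are an untuned starting point; `multi:softmax` makes the endpoint return a predicted class index.

```python
def iris_xgboost_hyperparameters(num_class=3):
    """Example (untuned) hyperparameters for multi-class XGBoost."""
    return {
        "objective": "multi:softmax",  # predict a single class index per row
        "num_class": num_class,
        "num_round": 100,
        "max_depth": 5,
        "eta": 0.2,
    }


def train_and_deploy(train_csv, validation_csv, bucket, prefix, role_arn,
                     endpoint_name, instance_type="ml.m5.large"):
    """Upload data, train the built-in XGBoost algorithm, and deploy an endpoint."""
    # Imported here so the hyperparameter helper above can be used without the SDK.
    import sagemaker
    from sagemaker.estimator import Estimator
    from sagemaker.inputs import TrainingInput

    session = sagemaker.Session()

    # Step 1 output: local headerless CSV files with the label in the first column.
    train_s3 = session.upload_data(train_csv, bucket=bucket, key_prefix=f"{prefix}/train")
    validation_s3 = session.upload_data(validation_csv, bucket=bucket,
                                        key_prefix=f"{prefix}/validation")

    # Step 2: the algorithm's container image URI in the current region.
    image_uri = sagemaker.image_uris.retrieve("xgboost", session.boto_region_name, "1.5-1")

    estimator = Estimator(
        image_uri=image_uri,
        role=role_arn,
        instance_count=1,
        instance_type=instance_type,
        output_path=f"s3://{bucket}/{prefix}/output",
        sagemaker_session=session,
    )
    # Step 3: hyperparameters.
    estimator.set_hyperparameters(**iris_xgboost_hyperparameters())

    # Step 4: fit on the training and validation channels.
    estimator.fit({
        "train": TrainingInput(train_s3, content_type="text/csv"),
        "validation": TrainingInput(validation_s3, content_type="text/csv"),
    })

    # Step 5: create the endpoint (it is billed for as long as it is running).
    predictor = estimator.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        endpoint_name=endpoint_name,
    )
    return predictor.endpoint_name
```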

Creating a Lambda Function

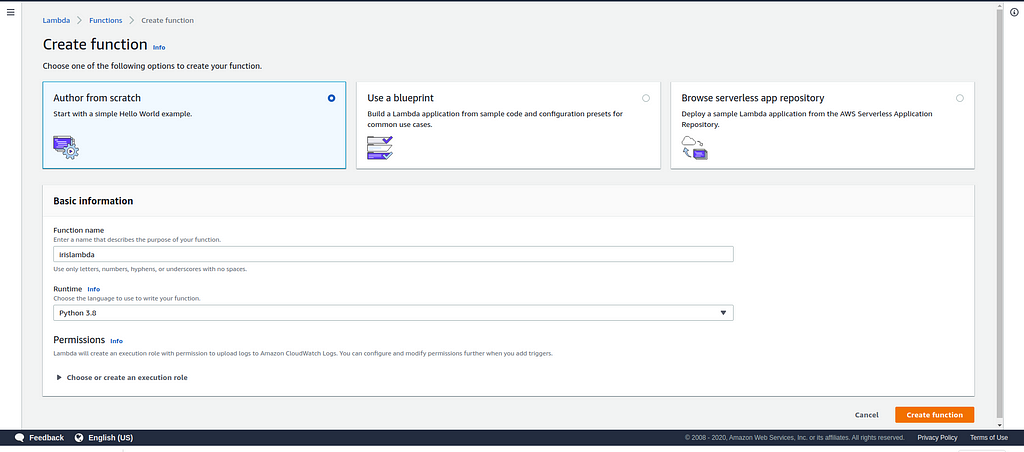

Now that we have a SageMaker model endpoint, let’s look at how to call it from Lambda. The SageMaker Runtime API provides an InvokeEndpoint action, which we call through boto3 using the invoke_endpoint() method of a sagemaker-runtime client. To create the function, open the AWS Lambda console and choose Create function.
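A handler along these lines could call the endpoint from Lambda. It is a sketch, not the author's function, and it assumes: the API Gateway method uses a non-proxy Lambda integration (so `event` is the parsed JSON request body); the body looks like `{"data": "5.1,3.5,1.4,0.2"}` (the `data` key is hypothetical); and the endpoint is an XGBoost `multi:softmax` model that answers with a class index such as `2.0`. The endpoint name is read from the `ENDPOINT_NAME` environment variable.

```python
import os

# Must match the integer encoding used for the training labels (assumed order).
SPECIES = ["setosa", "versicolor", "virginica"]

_runtime_client = None


def _sagemaker_runtime():
    """Create the SageMaker Runtime client once and reuse it across warm invocations."""
    global _runtime_client
    if _runtime_client is None:
        import boto3  # included in the AWS Lambda Python runtimes

        _runtime_client = boto3.client("sagemaker-runtime")
    return _runtime_client


def lambda_handler(event, context, runtime=None):
    """Send one CSV row of iris features to the endpoint and name the predicted species.

    `runtime` lets tests pass a stub client; Lambda itself calls lambda_handler(event, context).
    """
    runtime = runtime or _sagemaker_runtime()

    # Non-proxy integration: `event` is the JSON request body, e.g. {"data": "5.1,3.5,1.4,0.2"}.
    payload = event["data"]

    response = runtime.invoke_endpoint(
        EndpointName=os.environ["ENDPOINT_NAME"],
        ContentType="text/csv",
        Body=payload,
    )
    # Body is a streaming object; with multi:softmax it holds a class index such as "2.0".
    raw = response["Body"].read().decode("utf-8").strip()
    class_index = int(float(raw))
    return {"prediction": class_index, "species": SPECIES[class_index]}
```

Creating the client lazily at module level, rather than inside each call, lets warm Lambda invocations reuse the same connection.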

1. Create a new role that gives the Lambda function permission to invoke the SageMaker endpoint.

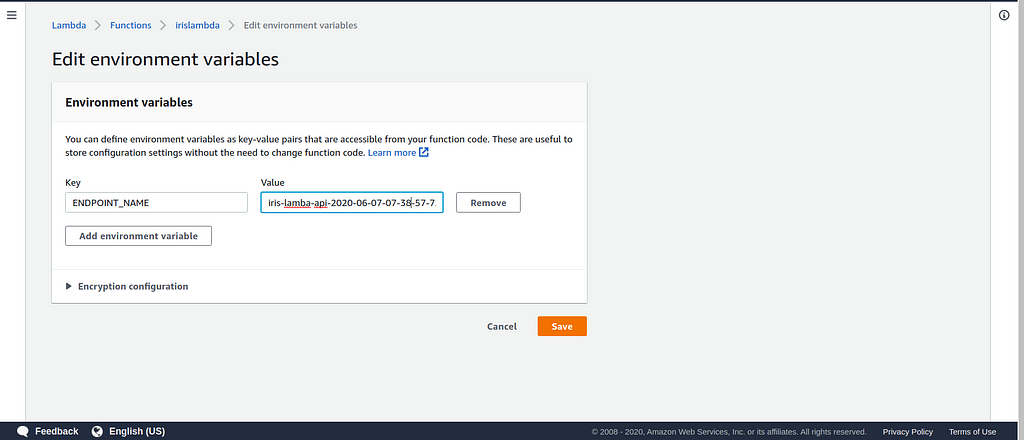

2. Add an environment variable, ENDPOINT_NAME, that holds the name of the SageMaker model endpoint we just deployed.
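The two steps above can also be done with boto3 instead of the console. In this sketch, the inline policy grants only `sagemaker:InvokeEndpoint` on one endpoint. The role name, function name, policy name, and endpoint ARN are placeholders; the endpoint ARN is shown on the endpoint's page in the SageMaker console.

```python
import json


def invoke_endpoint_policy(endpoint_arn):
    """Least-privilege policy allowing calls to a single SageMaker endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


def configure_lambda(role_name, function_name, endpoint_name, endpoint_arn,
                     policy_name="invoke-iris-endpoint"):
    """Step 1: attach the policy to the execution role. Step 2: set ENDPOINT_NAME."""
    import boto3  # imported here so the policy builder works without boto3 installed

    boto3.client("iam").put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(invoke_endpoint_policy(endpoint_arn)),
    )
    # Note: this replaces any environment variables already set on the function.
    boto3.client("lambda").update_function_configuration(
        FunctionName=function_name,
        Environment={"Variables": {"ENDPOINT_NAME": endpoint_name}},
    )
```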

Deploying the API

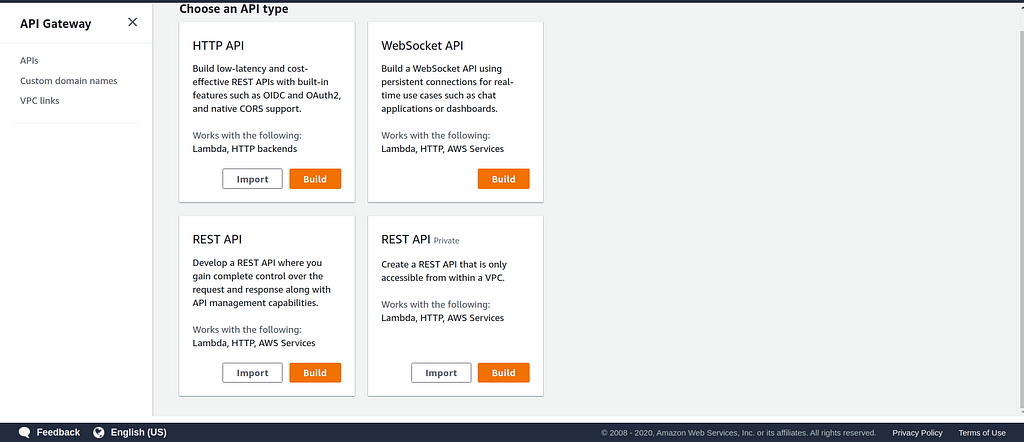

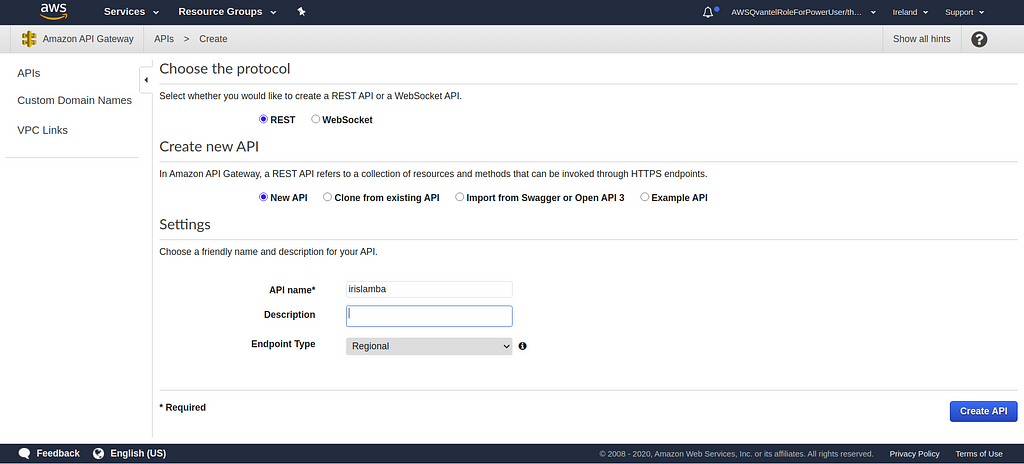

1. Open the Amazon API Gateway console, choose Create API, and select REST API (we will send a POST request and receive the prediction in the response).

2. Name your API and choose Regional as the endpoint type (a Regional endpoint is intended for clients in the same AWS Region).

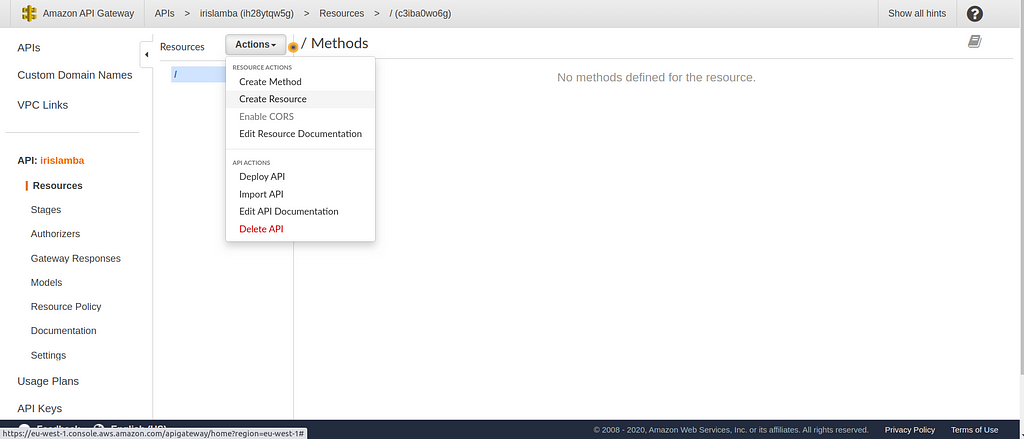

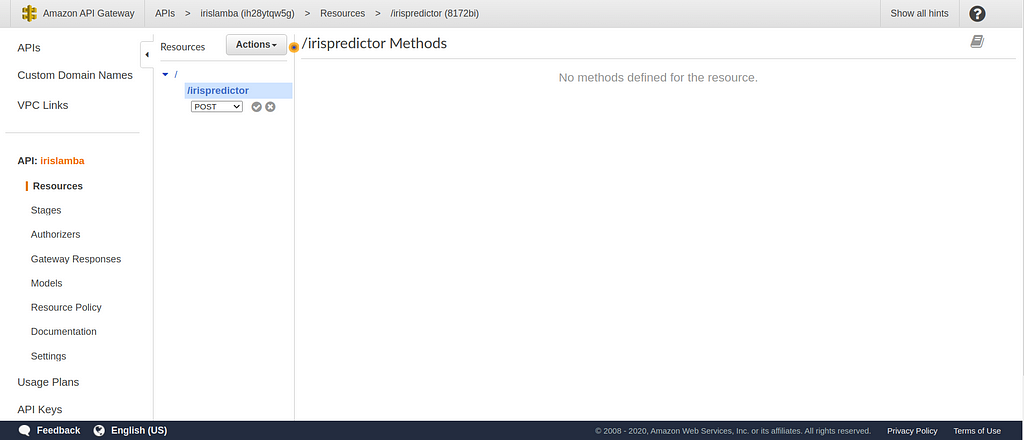

3. From the Actions drop-down list, choose Create Resource, give it a name such as “irispredict”, and click Create Resource.

4. Once the resource is created, choose Create Method from the same drop-down list and create a POST method.

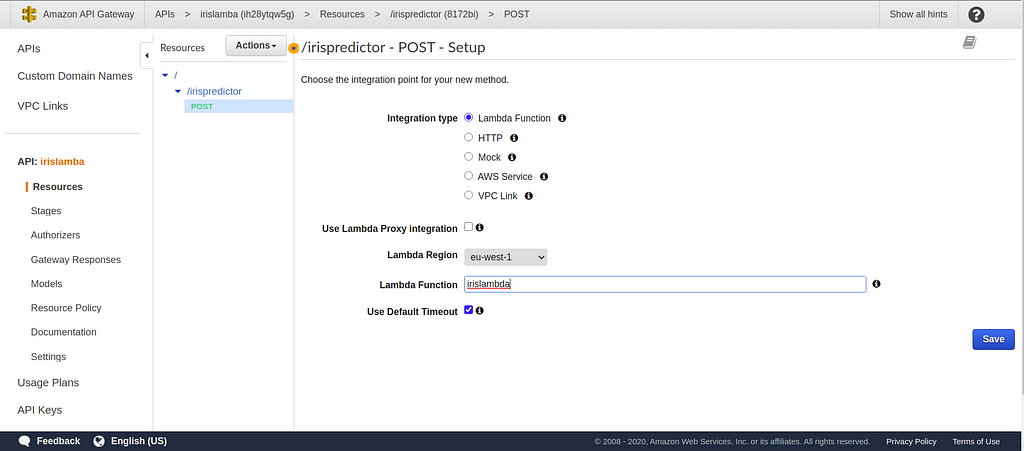

5. On the screen that appears, do the following:

- For the Integration type, choose Lambda Function.

- For Lambda Function, enter the name of the function created.

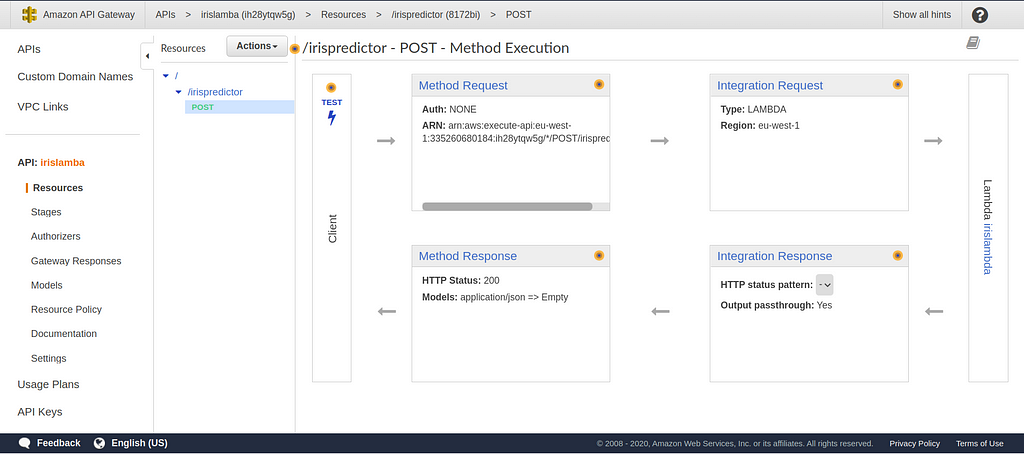

6. The API structure will look something like the following image:

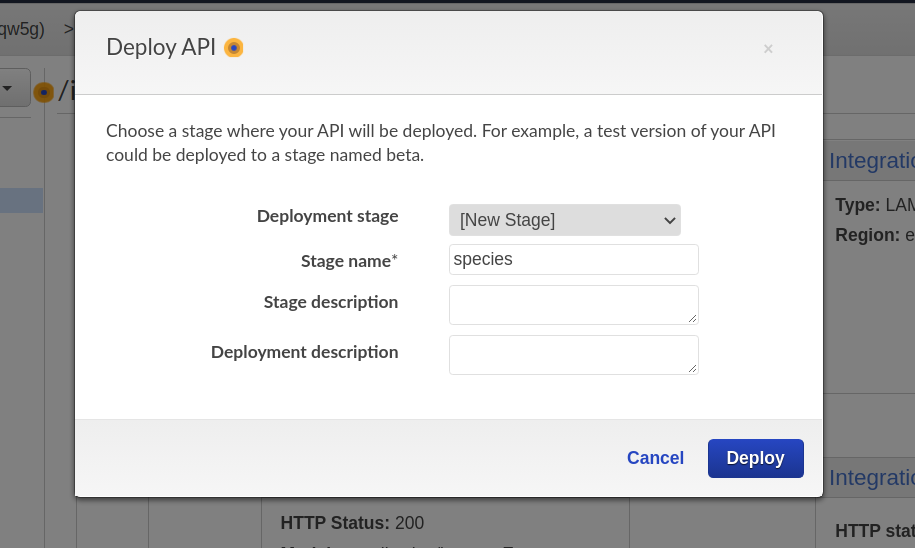

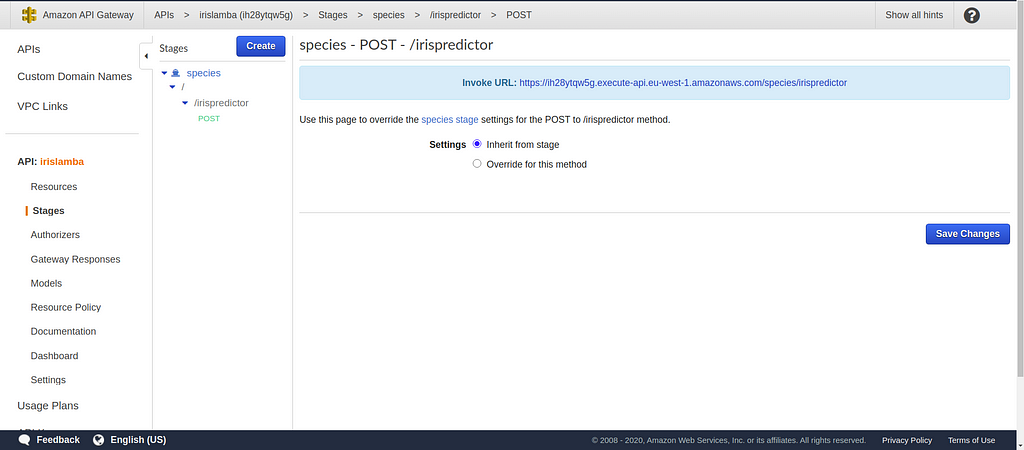

7. From Actions, select Deploy API. On the page that appears, create a new stage named “species” and click Deploy.

8. A page appears showing the newly created stage. Select the POST method under the stage to see the generated Invoke URL, which is the final API endpoint.
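The console steps above can also be scripted with boto3's `apigateway` and `lambda` clients. This is a hedged sketch rather than the article's procedure: it assumes a non-proxy Lambda integration, authorization type `NONE`, your default AWS region, and placeholder API and function names. When scripting, you must grant API Gateway permission to invoke the function yourself, so the sketch calls `add_permission` explicitly.

```python
def lambda_integration_uri(region, function_arn):
    """URI that API Gateway uses to invoke a Lambda function."""
    return (f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/"
            f"functions/{function_arn}/invocations")


def invoke_url(api_id, region, stage, resource_path):
    """Public URL of a resource in a deployed REST API stage."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{resource_path}"


def deploy_api(function_name, api_name="iris-api", resource_path="irispredict", stage="species"):
    import boto3  # imported here so the URL helpers above work without boto3

    session = boto3.Session()
    region = session.region_name
    account_id = session.client("sts").get_caller_identity()["Account"]
    apigw = session.client("apigateway")
    lambda_client = session.client("lambda")
    function_arn = lambda_client.get_function(FunctionName=function_name)["Configuration"]["FunctionArn"]

    # Steps 1-2: a Regional REST API; a new API has only the root "/" resource.
    api_id = apigw.create_rest_api(name=api_name, endpointConfiguration={"types": ["REGIONAL"]})["id"]
    root_id = next(r["id"] for r in apigw.get_resources(restApiId=api_id)["items"] if r["path"] == "/")

    # Step 3: the /irispredict resource.
    resource_id = apigw.create_resource(restApiId=api_id, parentId=root_id, pathPart=resource_path)["id"]

    # Steps 4-5: a POST method backed by the Lambda function (non-proxy integration).
    method = {"restApiId": api_id, "resourceId": resource_id, "httpMethod": "POST"}
    apigw.put_method(**method, authorizationType="NONE")
    apigw.put_integration(**method, type="AWS", integrationHttpMethod="POST",
                          uri=lambda_integration_uri(region, function_arn))
    apigw.put_method_response(**method, statusCode="200")
    apigw.put_integration_response(**method, statusCode="200")

    # Allow API Gateway to call the function for this method only.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"apigateway-{api_id}-post",
        Action="lambda:InvokeFunction",
        Principal="apigateway.amazonaws.com",
        SourceArn=f"arn:aws:execute-api:{region}:{account_id}:{api_id}/*/POST/{resource_path}",
    )

    # Steps 7-8: deploy to the "species" stage and return the invoke URL.
    apigw.create_deployment(restApiId=api_id, stageName=stage)
    return invoke_url(api_id, region, stage, resource_path)
```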

Testing With Postman

Postman is a popular API client that makes it easy for developers to create, share, test, and document APIs.

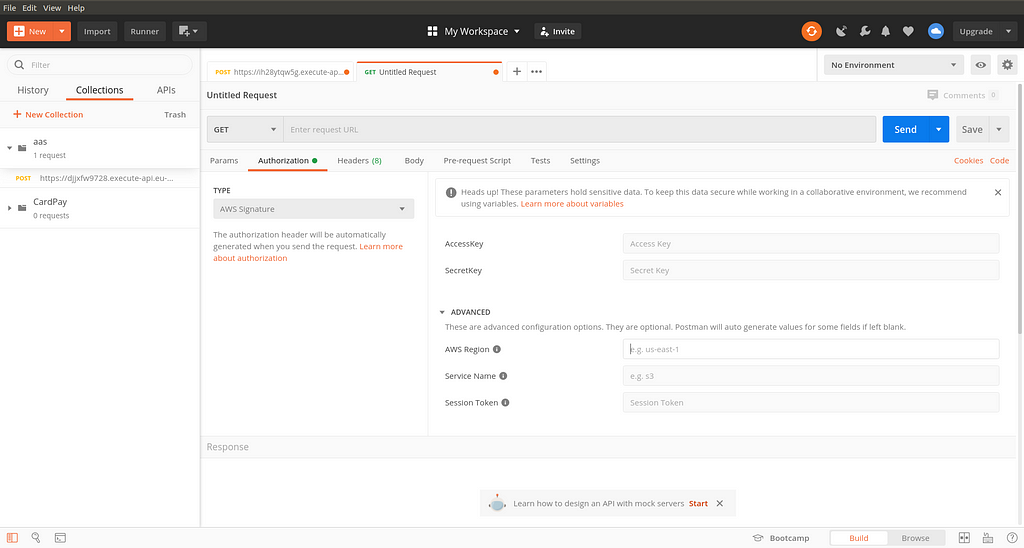

1. Before invoking the API through Postman, add your AWS Access Key and Secret Key in the Authorization section (type AWS Signature).

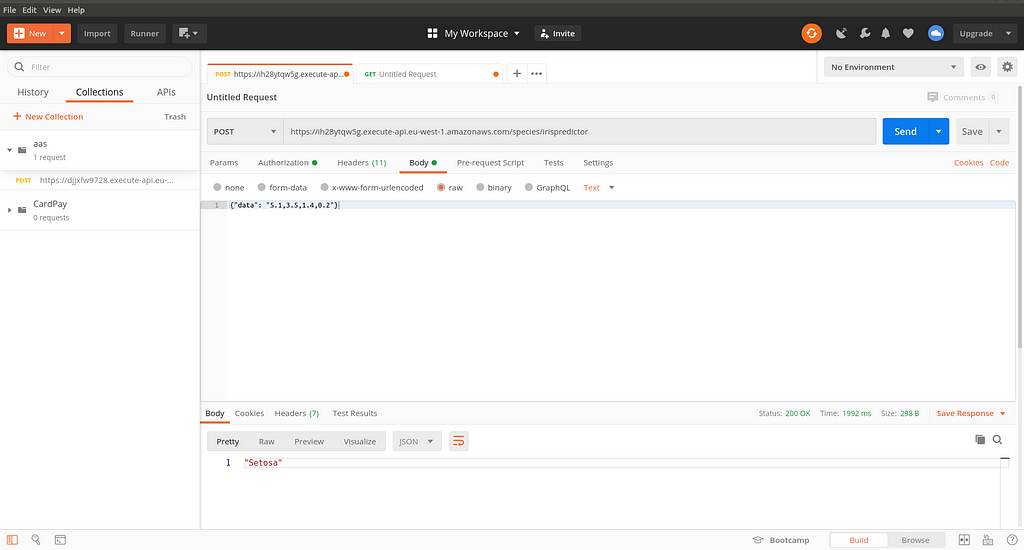

2. Test the API: enter the input as JSON in the request Body and click Send. The prediction returned by the API is displayed in the response.
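Outside Postman, the same request can be sent from any HTTP client. The sketch below uses only Python's standard library and assumes a hypothetical `{"data": "..."}` body shape and a method with no authorization. If the method requires IAM authorization (which is what the AWS keys in the Postman step are for), the request must also be signed with Signature Version 4, which this sketch does not do. The invoke URL is a placeholder.

```python
import json
import urllib.request


def build_prediction_request(invoke_url, features):
    """Build a POST request whose JSON body carries one CSV row of features."""
    body = json.dumps({"data": ",".join(str(x) for x in features)}).encode("utf-8")
    return urllib.request.Request(
        invoke_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(invoke_url, features, timeout=10):
    """Send the request and return the decoded JSON response."""
    request = build_prediction_request(invoke_url, features)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `predict("https://<api-id>.execute-api.<region>.amazonaws.com/species/irispredict", [6.3, 3.3, 6.0, 2.5])` would post one iris sample and return whatever JSON the Lambda function produces.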

Conclusion

We have now successfully deployed the machine learning model as an API using Lambda (a serverless component). The API can be invoked with a single request, making inferences easily available to users and developers.

Final thoughts

In a future article, I will cover deploying ML models as web applications using Elastic Beanstalk and other AWS services. Until then, stay home, stay safe, and keep exploring!

Get in Touch

I hope you found the article insightful. I would love to hear your feedback so I can improve it and come back better! If you would like to get in touch, connect with me on LinkedIn. Thanks for reading!


Deploying Machine Learning Models as API using AWS was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.