Algorithmic Bias in Facial Recognition Technologies

Last Updated on April 25, 2024 by Editorial Team

Author(s): Nimit Bhardwaj

Originally published on Towards AI.

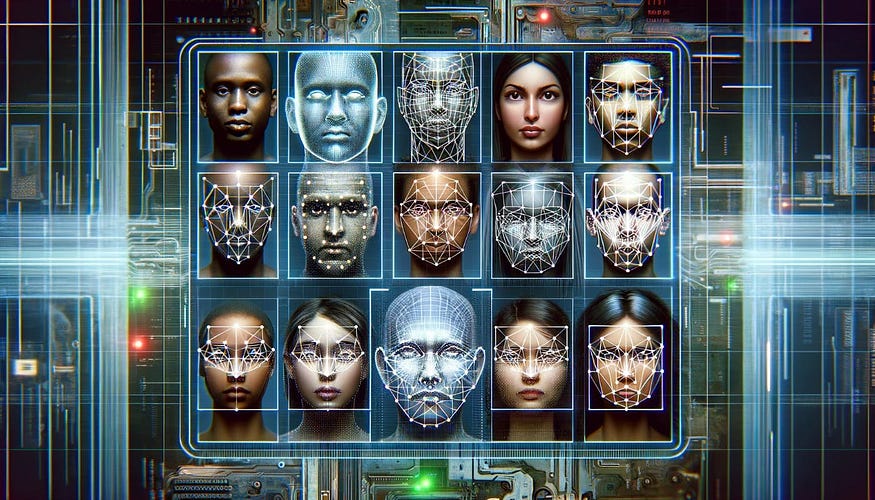


Exploring how facial recognition systems can perpetuate biases.

Facial recognition (FR) technologies have become an increasingly common form of AI in our day-to-day lives. Simply put, FR refers to technologies that can identify or group individuals from image frames, both still and video [1]. Used by governments, businesses, and consumers, FR appears in applications such as CCTV surveillance, airport security screening, and device authentication.

In this article, we will trace the evolution of FR to where it is now, and then delve deeper into ethical concerns about biases that can perpetuate racial and gender inequalities.

A Brief History of Facial Recognition Technologies

In the 1960s, Woodrow Bledsoe pioneered various computer-based pattern recognition technologies, including one of the earliest FR systems. His “Man-Machine System for Pattern Recognition” relied on manually measured facial features to identify people in photographs [2]. FR was limited back then by a lack of computational power and algorithmic accuracy, but the field has since improved enormously. With growing academic focus and R&D investment since the 1990s, FR became more sophisticated, benefiting from advances in image processing and feature extraction algorithms in the early 2000s [2]. Progress in computer vision, machine learning, and biometric authentication in the 2010s then made FR a widely adopted technology, integrated into many commercial and social applications.

Today, FR technologies play pivotal roles in society, utilised by sectors such as security, law enforcement, retail, and consumer electronics. The benefits are clearest in security and law enforcement, including assistance in missing persons cases, enhanced surveillance and public safety, and crime prevention and deterrence [3]. However, as with all AI, there is a fine line between FR producing a net societal benefit and a net harm. Given its potential to exacerbate racial profiling, misidentification, and confirmation bias, we must also consider how to steer the future of FR in an ethical direction.

How Biases Can Affect Facial Recognition

Among commonly used biometric identification technologies (fingerprint, palm, iris, voice, and face), FR is the least accurate and the most prone to perpetuating bias [4]. This bias can stem from three sources: the data, the algorithm, and society or the humans involved.

Data Bias:

- The training sets used by FR technologies typically overrepresent white faces relative to the faces of black people and other ethnic minorities.

- This leads to higher error rates when identifying people of colour. In fact, a 2019 NIST report found that, for one-to-one matching, false positive rates for Asian and African American faces were 10 to 100 times higher than for Caucasian faces [5]. Women and people aged 18–30 were also among the demographic groups most likely to suffer erroneous matches [4].

- The exact error rates depend on the individual algorithm, and interestingly, algorithms developed in Asia showed no comparably dramatic false positive differential between Asian and Caucasian faces [5].
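The "10 to 100 times differential" above compares how often a system wrongly declares a match for one demographic group versus another. A minimal sketch of that arithmetic follows, assuming we already have similarity scores for impostor comparisons (pairs of images of different people) labelled by group, and a single decision threshold. The function names, group labels, scores, and threshold are all hypothetical, and this is not NIST's evaluation code.

```python
from collections import defaultdict


def false_match_rates(impostor_scores, threshold):
    """Per-group false match rate (FMR) at one decision threshold.

    impostor_scores: iterable of (group, score) pairs, where each score is
    the similarity between images of two *different* people, so every score
    at or above the threshold is a false positive.
    """
    false_matches = defaultdict(int)
    totals = defaultdict(int)
    for group, score in impostor_scores:
        totals[group] += 1
        if score >= threshold:
            false_matches[group] += 1
    return {group: false_matches[group] / totals[group] for group in totals}


def fmr_differential(rates, group, reference):
    """How many times higher `group`'s FMR is than `reference`'s FMR."""
    if rates[reference] == 0:
        return float("inf")  # reference group had no false matches at all
    return rates[group] / rates[reference]


# Hypothetical data: 1,000 impostor comparisons per group.
scores = ([("group_a", 0.9)] * 2 + [("group_a", 0.3)] * 998
          + [("group_b", 0.9)] * 40 + [("group_b", 0.3)] * 960)
rates = false_match_rates(scores, threshold=0.8)
print(rates)
print(fmr_differential(rates, "group_b", "group_a"))
```

With these made-up numbers, group_b's false match rate is roughly 20 times group_a's. Because one global threshold is applied to everyone, any group whose impostor scores tend to run higher absorbs more of the false matches, which is the kind of gap the NIST study describes.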

Algorithmic Bias:

- The design of the algorithm itself can make it prone to perpetuating biases present in the training data or introduced by humans.

- Depending on the assumptions and heuristics programmed into the algorithm, it may prioritise certain facial features in ways that do not account for variation across demographic groups and their facial feature norms [6]. Combined with a lack of representation in training data sets, this can compound problems of inaccuracy and bias.

- As algorithms grow more complex through deep learning, they also become harder to interpret, feeding into the “Black Box Problem”, whereby we lack visibility into how these algorithms reach their decisions [7].

Societal Bias:

- Racial stereotypes and prejudices, which sadly still persist across cultures today, can creep into software, algorithms, and technology alike, contributing to bias in FR.

- Especially in law enforcement applications, where certain demographics or neighborhoods are overrepresented in data such as arrest records, FR algorithms can reinforce these human biases by being more likely to identify members of those groups.

- This can create feedback loops that amplify the bias, both for human reviewers and for the algorithm itself when it is later refined.

Case Study: Understanding the Impact of Facial Recognition Bias

In January 2020, Robert Williams was wrongfully arrested by Detroit police on a shoplifting charge [8]. Officers arrested him at his home, in front of his wife and children, on the strength of a partial facial recognition match. The match turned out to be a false positive, yet Williams was held in jail overnight for nearly 30 hours despite having an alibi. When he told police, “I hope y’all don’t think all black people look alike,” their response was not to look into how the FR system had made the match, or to check whether the CCTV footage actually matched his driver’s license photo, but simply: “The computer says it’s you” [8].

As of September 2023, seven wrongful arrests involving FR technologies had been publicly recorded, and all seven people arrested were black: six men and one woman [9]. While no wrongful convictions based on FR have been recorded yet, these victims of wrongful arrest have still spent days in jail following the incorrect matches.

Tech companies providing FR software (mainly Apple, with regard to misidentification) and police departments using it incorrectly (especially in predominantly black cities in Louisiana, Maryland, Michigan, and New Jersey) have all been sued over misidentifications and false arrests caused by FR technologies [9].

Overreliance on FR technologies therefore not only erodes public trust in AI capabilities but also further diminishes public trust in legal and law enforcement institutions.

Regulation of Algorithmic Bias

As of March 2024, no concrete or quantifiable federal regulations on algorithmic bias exist in the US. However, since these technologies can further entrench systemic biases, governments recognise that the topic needs discussion [10].

In December 2023, the “Eliminating Bias in Algorithmic Systems Act of 2023” was introduced in the US Senate (following the “Algorithmic Accountability Act of 2022”), while related efforts, such as the blueprint for an “AI Bill of Rights” and discussions on algorithmic discrimination protections, continue to gain momentum [11].

While these are positive steps towards protecting society’s best interests and reducing harm in the long term, the responsibility for steering FR innovation in an unbiased direction still falls on the companies that produce these technologies and the organisations that use them.

Potential Solutions and the Future of Facial Recognition Technologies

In the absence of legal protections, victims of FR-based wrongful arrests have understandably lobbied for a complete ban on these technologies [8]. Nonetheless, FR does have the potential to benefit society. Even in its current state, adopting simple best-practice procedures could reduce the impact of technological and algorithmic inaccuracy. For example, requiring human checks to confirm any positive match before acting on an AI’s results could prevent injustices like the one suffered by Robert Williams.
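The human-oversight practice described above amounts to a simple rule: a match score alone never triggers action. A minimal sketch of that rule follows; the names `Candidate`, `triage`, `review_threshold`, and `reviewer_confirms` are hypothetical and are not taken from any real FR product or police procedure.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    NO_CANDIDATE = "no candidate"
    NEEDS_REVIEW = "needs human review"
    CONFIRMED_LEAD = "lead confirmed by reviewer"
    REJECTED = "rejected by reviewer"


@dataclass
class Candidate:
    person_id: str
    score: float  # similarity score reported by the (hypothetical) FR system


def triage(candidate: Optional[Candidate], review_threshold: float,
           reviewer_confirms: Optional[bool] = None) -> Decision:
    """Treat an FR match as a lead that only a human reviewer can confirm.

    reviewer_confirms is None until a person has compared the probe image
    with the candidate's records; afterwards it holds their verdict.
    """
    if candidate is None or candidate.score < review_threshold:
        return Decision.NO_CANDIDATE
    if reviewer_confirms is None:
        # A high score alone never becomes an action: it waits for review.
        return Decision.NEEDS_REVIEW
    return Decision.CONFIRMED_LEAD if reviewer_confirms else Decision.REJECTED


print(triage(Candidate("p1", 0.95), review_threshold=0.8))
```

The design choice is that no code path leads from a score straight to an action. Even a confirmed lead is still only a lead, to be corroborated by independent evidence such as checking a suspect's alibi.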

In terms of technological improvements, diversifying the data sets used to train these algorithms can help mitigate data bias [6]. Greater transparency into how complex algorithms arrive at their decisions would not only increase trust in the technologies but also help reduce algorithmic bias.

IBM and Microsoft are two examples of tech giants that have announced roadmaps for reducing bias in their FR technologies [4]. These roadmaps align with the measures above: the companies plan to improve their training data, how that data is collected, and their testing cohorts, especially with regard to underrepresented demographic and social groups.

However, better data will also need to be coupled with improved processes for training and developing the algorithms themselves. This includes prioritising “Fairness-Aware Algorithms” [12], i.e., machine learning models designed to respect human principles of fairness and actively mitigate bias.
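“Fairness-aware” covers many techniques. As one illustration, here is a minimal sketch of a simple post-processing approach: choose a separate decision threshold for each demographic group so that every group's false match rate on held-out impostor comparisons (pairs of images of different people) stays at or below the same target. The group labels, scores, and target are hypothetical, and this is not necessarily the approach described in [12] or used by any vendor.

```python
import math


def threshold_for_target_fmr(impostor_scores, target_fmr):
    """Lowest threshold whose false match rate on these scores is <= target_fmr.

    impostor_scores: similarity scores for pairs of *different* people.
    A pair is declared a match when score >= threshold.
    """
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    ranked = sorted(impostor_scores, reverse=True)
    allowed = math.floor(target_fmr * len(ranked))  # false matches we tolerate
    if allowed >= len(ranked):
        return -math.inf  # the target tolerates every comparison matching
    # Only scores strictly above ranked[allowed] can clear this threshold,
    # and the sorted list holds at most `allowed` of them.
    return math.nextafter(ranked[allowed], math.inf)


def per_group_thresholds(scores_by_group, target_fmr):
    """One threshold per demographic group, all calibrated to the same FMR."""
    return {group: threshold_for_target_fmr(scores, target_fmr)
            for group, scores in scores_by_group.items()}


if __name__ == "__main__":
    # Hypothetical held-out impostor scores; group_b's run higher,
    # mimicking a model that confuses group_b faces more often.
    scores_by_group = {
        "group_a": [0.10, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.50, 0.55, 0.60],
        "group_b": [0.30, 0.40, 0.45, 0.50, 0.55, 0.60, 0.65, 0.70, 0.75, 0.80],
    }
    print(per_group_thresholds(scores_by_group, target_fmr=0.1))
```

Here group_b receives a stricter threshold than group_a, so both groups end up with the same false match rate on this data. This requires `math.nextafter` (Python 3.9+), and it relies on knowing each person's group at decision time, which raises its own legal and ethical questions. It addresses the symptom at the output rather than the underlying data or model.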

References

[1] What is face recognition? (2024), Microsoft Azure

[2] N. Lydick, A Brief Overview of Facial Recognition (n.d.), University of Michigan

[3] J. A. Lewis and W. Crumpler, How Does Facial Recognition Work? (2021), CSIS

[4] A. Najibi, Racial Discrimination in Face Recognition Technology (2020), Harvard University

[5] C. Boutin, NIST Study Evaluates Effects of Race, Age, Sex on Face Recognition Software (2019), NIST

[6] D. Leslie, Understanding bias in facial recognition technologies: an explainer (2020), The Alan Turing Institute

[7] Black box problem: Humans can’t trust AI, US-based Indian scientist feels lack of transparency is the reason (2019), The Economic Times

[8] T. Ryan-Mosley, The new lawsuit that shows facial recognition is officially a civil rights issue (2021), MIT Technology Review

[9] C. Carrega, Facial Recognition Technology and False Arrests: Should Black People Worry? (2023), Capital B News

[10] Algorithmic Discrimination Protections (n.d.), The White House

[11] Senator E. J. Markey, S.3478 — Eliminating Bias in Algorithmic Systems Act of 2023 (2023), Congress.Gov

[12] S. Palvel, Introduction to Fairness-aware ML (2023), Medium

