A Simple and Scalable Clustering Algorithm for Data Summarization

Last Updated on February 25, 2021 by Editorial Team

Author(s): Haris Angelidakis

Machine Learning

A Simple and Scalable Clustering Algorithm for Data Summarization

The Gonzalez heuristic for k-Center

Introduction

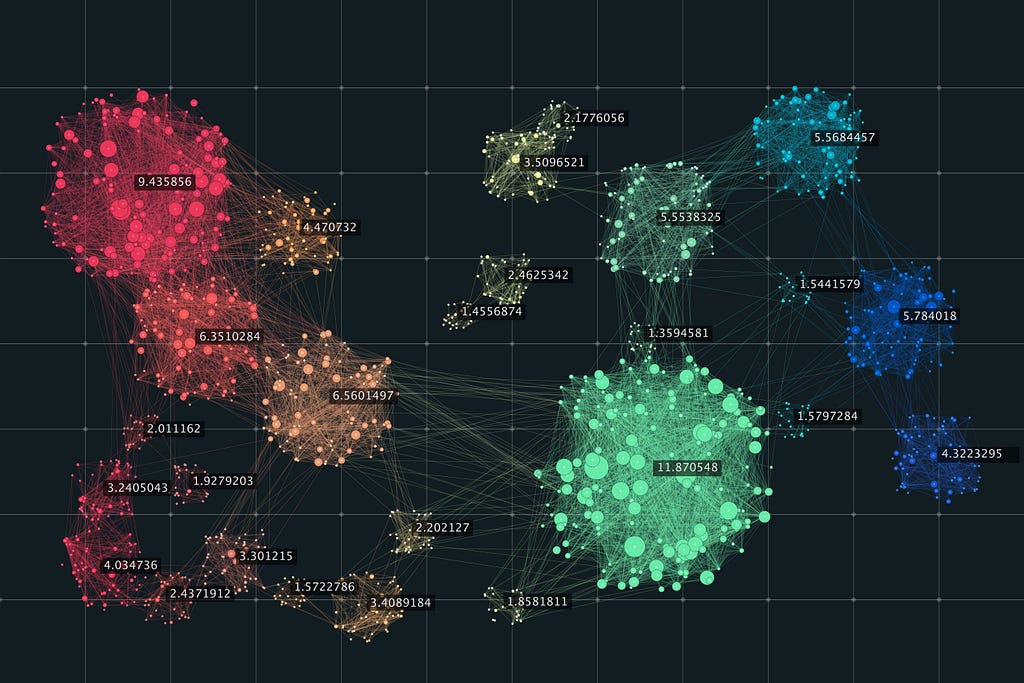

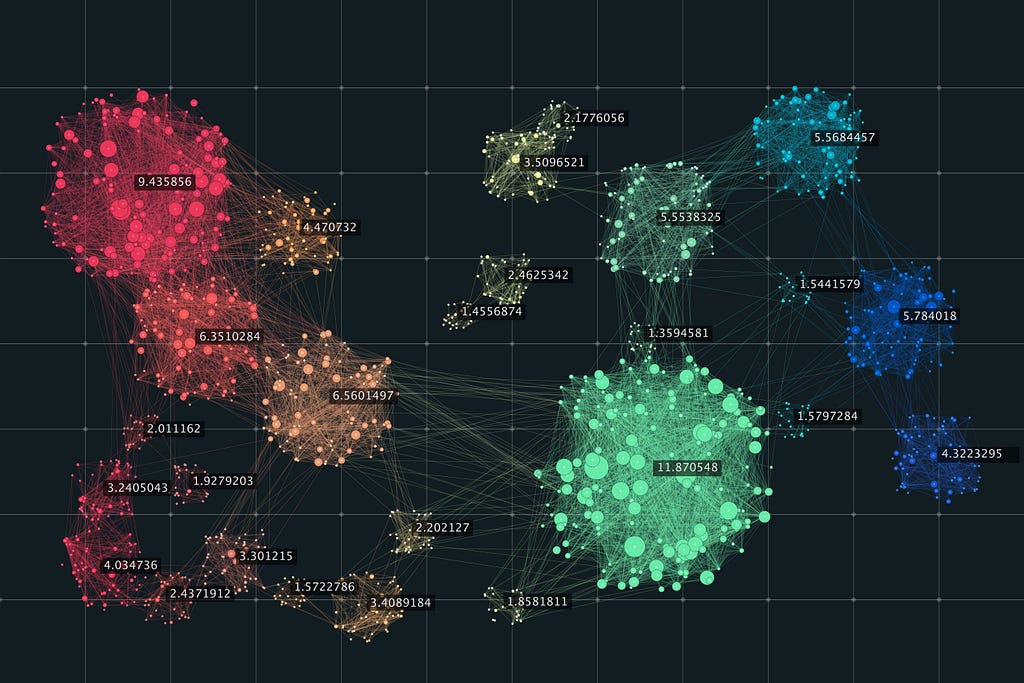

In the era of big data, the need for designing efficient procedures that summarize millions of data points in a meaningful way is more pressing than ever. One of the most successful ways to do so, that falls into the unsupervised learning framework, is clustering. Roughly speaking, assuming that there is some notion of distance between points that captures how similar or dissimilar two points are, the goal of a clustering algorithm is the following:

a clustering algorithm computes a small subset of the given points that can serve as a set of representatives of all the points.

Let’s try to make things a bit more formal. Suppose we have N points

{p(1), p(2), …, p(N)},

and some notion of distance between them is denoted as

D(p(i), p(j)), for 1 ≤ i, j ≤ N.

Reiterating, at a high level, we would like to do the following:

select k points among {p(1), …, p(N)} that summarize all the points in the best possible way.

We now discuss what “summarize in the best possible way” means. A standard way of quantifying whether a set of k centers is good or not is by defining a cost function that somehow summarizes the distances of all points to their closest center among the ones selected. More formally, given a set C of k centers, there are three main objectives that are usually studied.

- k-Median objective: Here, we minimize the average distance of each point to its closest center in C (corresponds to l1-minimization).

- k-Means objective: Here, we minimize the average squared distance of each point to its closest center in C (corresponds to l2-minimization).

- k-Center objective: Here, we minimize the maximum distance of a point to its closest center in C (corresponds to l-infinity-minimization).

In this article, we focus on the last objective, and present a very simple and fast algorithm, proposed by Teofilo Gonzalez in 1985, that also happens to provide a tight approximation factor for the problem, assuming that P is not equal to NP.

The k-Center problem

We are interested in the following problem, known as the k-Center problem. The input consists of

- a metric space (P,D), where P = {p(1), …, p(N)} is a set of N points and D is a distance function between these points, and

- an integer parameter k, which denotes the number of centers that we are allowed to open.

The goal is to compute a subset C of P that has k points such that the largest distance of any point to C is minimized. More formally, the objective for a given subset C is equal to max D(p, C) over all points p in P, where D(p,C) := min{ D(p,c): c in C }, and the goal is to minimize this objective function.

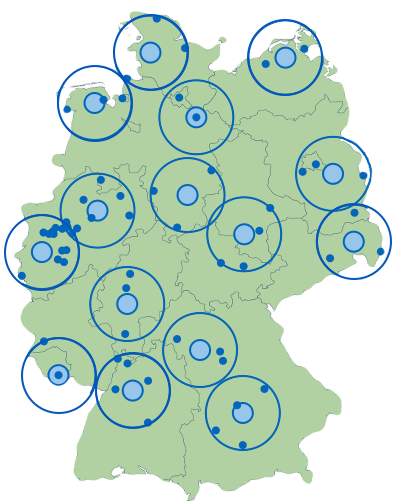

An equivalent, and perhaps easier, way to to phrase the objective is the following: compute a subset C of P that has k points and the smallest possible radius r such that if we consider the balls of radius r around the points in C, the union of these balls covers all the points in P. In mathematical notation, this would mean that {p: D(p, C) ≤ r} = P.

The Gonzalez heuristic

Let P = {p(1), …, p(N)} denote the input set of points. The Gonzalez heuristic is a greedy algorithm that starts by selecting an arbitrary center, and then in order to make the next choice of a center, it selects the points that is farthest away from the points selected so far. That simple!

We now give a description of the algorithm in pseudocode.

1. Let Z = P; S = emptyset;

2. Select an arbitrary point p of P;

3. Set Z = P - {p}; S = S + {p};

4. For i = 2,...,k:

Let p' be a point in Z that maximizes D(p',S);

Set Z = Z - {p'}; S = S + {p'};

5. Return S;

Observation: Assuming that the distance between two points can be computed in constant time, the total running time of the algorithm is O(nk).

In practice, this is a very fast and easy to implement algorithm that is scalable and can be applied to very large datasets. Besides its speed, it also happens to be a theoretically optimal algorithm for the problem, assuming that P is not equal to NP. More precisely, we will now do a theoretical analysis of it and show that it always succeeds in returning a solution whose cost is within a factor of 2 from the optimal cost; in other words, it is a 2–approximation algorithm. Moreover, this factor of 2 is tight, meaning that one cannot hope for a better approximation factor, assuming that P is not equal to NP; this result was proved by Hsu and Nemhauser (1979).

Note: In case you are interested to learn more about approximation algorithms, you can check out an article of mine on the topic here.

Theorem: The Gonzalez heuristic is a 2-approximation algorithm for the k-Center problem.

In order to prove the above theorem, we denote by OPT the optimal cost in a given instance. Let c(1), …, c(k) be an optimal selection of centers with corresponding clusters C(1), …, C(k); in words, each cluster C(i) contains all the data points that are closer to c(i) compared to any other c(j), for j different than i, breaking ties arbitrarily. Let s(1), …, s(k) be the selected centers of the Gonzalez heuristic, given in the order the algorithm selected them. We will show that the balls of radius 2 OPT around the points {s(1), …, s(k)} cover all the data points. We do some case analysis:

(1) Suppose that each point s(i) belongs to a distinct cluster of the optimal solution. In other words, suppose that the set of points {s(1), …, s(k)} that the algorithm computes “hits” every cluster of the optimal solution. For every i = 1,…,k, let g(s(i)) denote the center of the optimal solution that is the closest to s(i). By the previous discussion, we have {g(s(1)), g(s(2)), …, g(s(k))} = {c(1), …, c(k)}.

Moreover, for every i, we have D(s(i), g(s(i))) ≤ OPT, since we assumed that {c(1), …, c(k)} is an optimal selection of centers. Thus, for every i, we have that each point of the cluster C(g(s(i))) is within distance 2 OPT from s(i), since for every point p in the cluster C(g(s(i))), by the triangle inequality we have D(s(i), p) ≤ D(s(i), g(s(i))) + D(g(s(i)), p) ≤ OPT + OPT = 2 OPT. We conclude that every point is within distance 2 OPT from a selected center.

(2) Suppose that at some iteration i, the point s(i) belongs to an optimal cluster C(j) that has already been “hit” by some point s(t) selected by the algorithm in some previous iteration t < i. Since s(i) and s(t) belong to the same cluster C(j), by the triangle inequality this means that

D(s(i), s(t)) ≤ D(s(i), c(j)) + D(c(j), s(t)) ≤ OPT + OPT = 2 OPT.

By the greedy choices that the algorithm makes, at the beginning of the i-th iteration, the algorithm selected a point that has the largest possible distance from the set {s(1), …, s(i-1)} among all points not selected so far. The above computation now implies that this distance is at most 2 OPT. This means that all remaining points are at distance at most 2 OPT from the selected set of centers.

In both cases, we showed that all points are within distance 2 OPT from the selected set of centers. Thus, the algorithm is indeed a 2-approximation algorithm for the k-Center problem.

Conclusion

In this short article, we described a very simple greedy approach for clustering points that turns out to be highly effective and very fast. Any reasonable implementation of the algorithm scales easily to millions of points, and so it provides a very convenient way of obtaining small summaries of big data sets! As an added bonus, it is one of the cleanest and easiest algorithms to analyze theoretically, and it gives a tight approximation factor for the problem! What else could you ask for ?!

References

- Teofilo F. Gonzalez: Clustering to Minimize the Maximum Intercluster Distance. Theor. Comput. Sci. 38: 293–306 (1985)

- Wen-Lian Hsu, George L. Nemhauser: Easy and hard bottleneck location problems. Discret. Appl. Math. 1(3): 209–215 (1979)

A simple and scalable clustering algorithm for data summarization was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI