A Complete Guide to Embedding For NLP & Generative AI/LLM

Last Updated on October 19, 2024 by Editorial Team

Author(s): Mdabdullahalhasib

Originally published on Towards AI.

Understand the concept of vector embedding, why it is needed, and how to implement it with LangChain.


If you want to learn something efficiently, you should first ask yourself questions about the topic. For example: Why should I learn this topic? Why was it developed? How can I use it effectively? And so on. The more questions you ask, the more knowledge you gain.

After reading the whole article carefully, you should be able to answer the following questions:

- What is vector embedding, and why do we need it?
- How was vector embedding discovered?
- How do you implement vector embedding in LangChain?
- How do you visualize embeddings?

Machines only understand numbers. To train machine learning algorithms on a dataset, whether the data is images, text, audio, or tables, we first have to convert it into a representative numerical format.
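As a minimal sketch of what "converting data into numbers" can mean for text, the snippet below assumes naive whitespace tokenization and builds a vocabulary, integer ids, and one-hot vectors. The sentence and the helper names `build_vocab` and `one_hot` are hypothetical and only for illustration; this is not the article's method.

```python
# A minimal sketch: turning raw text into numbers with a vocabulary
# and one-hot vectors. The sentence and helper names are hypothetical.

def build_vocab(tokens):
    """Assign each unique token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def one_hot(index, size):
    """Return a list of length `size` with a 1 at `index` and 0 elsewhere."""
    vec = [0] * size
    vec[index] = 1
    return vec

sentence = "the cat sat on the mat"
tokens = sentence.split()            # naive whitespace tokenization (assumption)
vocab = build_vocab(tokens)          # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
ids = [vocab[t] for t in tokens]     # [0, 1, 2, 3, 0, 4]
vectors = [one_hot(i, len(vocab)) for i in ids]

print(ids)
print(vectors[1])  # one-hot vector for "cat": [0, 1, 0, 0, 0]
```

One-hot vectors are numeric, but they carry no notion of meaning: any two distinct words are orthogonal, so "cat" is no closer to "mat" than to "the". That gap is what dense embeddings aim to fill.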

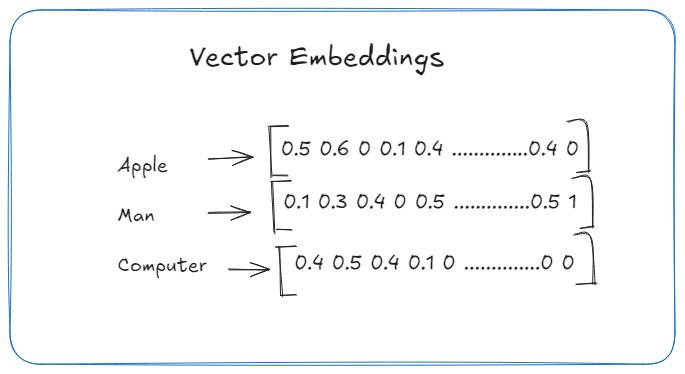

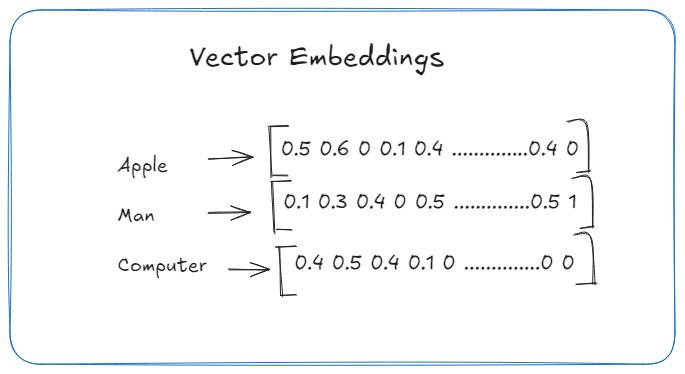

A vector embedding is a mathematical representation of an object or piece of data as a vector of numbers. The key idea is that it can capture semantic and contextual information about the object, so that ML algorithms can analyze and understand the data efficiently.
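To make "semantic information" concrete, a common way to compare embeddings is cosine similarity: vectors pointing in similar directions score close to 1. The sketch below uses hypothetical, hand-picked 3-dimensional vectors, not output from any real embedding model, purely to show the comparison.

```python
import math

# Hypothetical 3-dimensional "embeddings" chosen by hand for illustration.
# Real embedding models produce vectors with hundreds or thousands of
# dimensions whose values are learned from data.
embeddings = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.88, 0.82, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# With these toy values, the related pair scores much higher than the unrelated one.
print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["apple"]), 3))
```

Cosine similarity ignores vector length and compares only direction, which is why it is a popular choice for ranking semantically similar items.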

Many neural network approaches have been developed to convert data into numerical representations. Different data types are embedded in different ways, so let's look at each of them.
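One building block many neural approaches share for discrete data such as words is an embedding lookup table: each token id indexes a row of dense numbers that training adjusts. The toy class below is a hypothetical stand-in (similar in spirit to PyTorch's `torch.nn.Embedding`), with random initial values and no training loop, so the vectors it returns carry no meaning yet.

```python
import random

class EmbeddingTable:
    """A toy embedding layer: one dense vector per token id.

    Vectors start random; in a real network they are parameters that
    training updates so that related tokens end up close together.
    """

    def __init__(self, vocab_size, dim, seed=0):
        rng = random.Random(seed)  # fixed seed for reproducibility
        self.weights = [[rng.uniform(-1.0, 1.0) for _ in range(dim)]
                        for _ in range(vocab_size)]

    def __call__(self, token_ids):
        """Look up the stored vector for each token id."""
        return [self.weights[i] for i in token_ids]

# Hypothetical vocabulary for the sentence "the cat sat on the mat".
vocab = {"the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4}
table = EmbeddingTable(vocab_size=len(vocab), dim=4)
ids = [vocab[t] for t in "the cat sat on the mat".split()]
dense = table(ids)

print(len(dense), len(dense[0]))  # 6 tokens, 4 numbers each
```

Unlike one-hot vectors, the vector size here (`dim`) is chosen independently of the vocabulary size, which keeps representations compact as the vocabulary grows.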

Textual…




Note: Content contains the views of the contributing authors and not Towards AI.