Titanic Challenge — Machine Learning for Disaster Recovery — Part 2

Last Updated on July 20, 2023 by Editorial Team

Author(s): Bindhu Balu

Originally published on Towards AI.

Part-2 — Predictive Model Building

Code Location: https://github.com/BindhuVinodh/Titanic—Predictive-Model-Building

II — Feature engineering

In the previous part, we flirted with the data and spotted some interesting correlations.

In this part, we’ll see how to process and transform these variables in such a way that the data becomes manageable by a machine learning algorithm.

We’ll also create, or “engineer” additional features that will be useful in building the model.

We’ll see along the way how to process text variables like the passenger names and integrate this information into our model.

We will break our code into separate functions for more clarity.

Loading the data

One trick when starting a machine learning problem is to append the test set to the training set, so that every transformation is applied to both at once.

We’ll engineer new features using the train set to prevent information leakage. Then we’ll add these variables to the test set.

Let’s load the train and test sets and append them together.

import numpy as np
import pandas as pd

def get_combined_data():
    # reading train data
    train = pd.read_csv('./data/train.csv')

    # reading test data
    test = pd.read_csv('./data/test.csv')

    # removing the targets from the training data
    # (they are read again from train.csv when the model is trained)
    train = train.drop(columns=['Survived'])

    # merging train data and test data for future feature engineering
    # we'll also remove the PassengerId since this is not an informative feature
    combined = pd.concat([train, test], ignore_index=True)
    combined = combined.drop(columns=['PassengerId'])

    return combined

combined = get_combined_data()

Let’s have a look at the shape:

print(combined.shape)

# (1309, 10)

The train and test sets are now combined.

Note that the total number of rows (1309) is exactly the sum of the number of rows in the train set (891) and the test set (418).

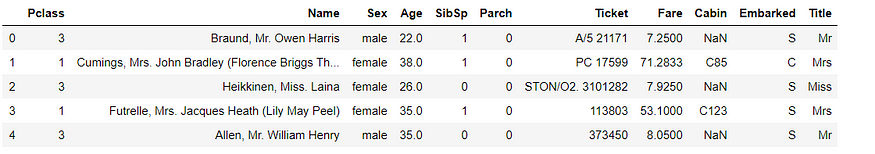

combined.head()

When looking at the passenger names one could wonder how to process them to extract useful information.

If you look closely at these first examples:

- Braund, Mr. Owen Harris

- Heikkinen, Miss. Laina

- Oliva y Ocana, Dona. Fermina

- Peter, Master. Michael J

You will notice that each name has a title in it! This can be a simple Miss. or Mrs., but it can sometimes be something more sophisticated like Master, Sir or Dona. We can therefore introduce additional information about social status by parsing the name, extracting the title, and converting it to a set of binary variables.

Let’s see how we’ll do that in the function below.

Let’s first see what the different titles are in the train set.
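The `titles` set printed next is not built anywhere in this part. A minimal sketch of how it could be computed, assuming the same "Surname, Title. Given names" parsing used later in get_titles; the `sample` frame and the `extract_title` helper are hypothetical stand-ins for illustration:

```python
import pandas as pd

# Toy stand-in for the training set; the real names come from train.csv.
sample = pd.DataFrame({
    "Name": [
        "Braund, Mr. Owen Harris",
        "Heikkinen, Miss. Laina",
        "Peter, Master. Michael J",
        "Rothes, the Countess. of (Lucy Noel Martha Dyer-Edwards)",
    ]
})

def extract_title(name):
    # "Surname, Title. Given names" -> "Title"
    return name.split(",")[1].split(".")[0].strip()

# Collect the distinct titles found in the names.
titles = set(sample["Name"].map(extract_title))
print(sorted(titles))
```

On the real data, the same idea would presumably be applied to the first 891 rows of `combined`, for example `set(combined.iloc[:891]["Name"].map(extract_title))`.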

print(titles)

# set(['Sir', 'Major', 'the Countess', 'Don', 'Mlle', 'Capt', 'Dr', 'Lady', 'Rev', 'Mrs', 'Jonkheer', 'Master', 'Ms', 'Mr', 'Mme', 'Miss', 'Col'])

Title_Dictionary = {
    "Capt": "Officer",
    "Col": "Officer",
    "Major": "Officer",
    "Jonkheer": "Royalty",
    "Don": "Royalty",
    "Sir": "Royalty",
    "Dr": "Officer",
    "Rev": "Officer",
    "the Countess": "Royalty",
    "Mme": "Mrs",
    "Mlle": "Miss",
    "Ms": "Mrs",
    "Mr": "Mr",
    "Mrs": "Mrs",
    "Miss": "Miss",
    "Master": "Master",
    "Lady": "Royalty"
}

def get_titles():
    # we extract the title from each name
    combined['Title'] = combined['Name'].map(lambda name: name.split(',')[1].split('.')[0].strip())

    # we map each title to a more aggregated category
    combined['Title'] = combined.Title.map(Title_Dictionary)
    status('Title')
    return combined

This function parses the names and extracts the titles. Then, it maps the titles to categories of titles. We selected :

- Officer

- Royalty

- Mr

- Mrs

- Miss

- Master
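The processing functions in this part call a `status` helper that is not defined here. A minimal sketch, assuming it only reports that a step has finished; the message format and the return value are assumptions, not the author's confirmed implementation:

```python
def status(feature):
    # Hypothetical logging helper: reports that a processing step finished.
    message = "Processing {0}: ok".format(feature)
    print(message)
    return message

status("Title")
```

Any function with this name that accepts one string argument would let the code in this part run.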

Let’s run it!

combined = get_titles()

combined.head()

Let’s check if the titles have been filled correctly.

combined[combined['Title'].isnull()]

There is indeed a NaN value in row 1305. The corresponding name is Oliva y Ocana, Dona. Fermina.

This title was not encountered in the training dataset, so it has no entry in Title_Dictionary.

Perfect. Now we have an additional column called Title that contains the information.
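The NaN for "Dona" comes from how `Series.map` treats keys that are missing from a dictionary. The sketch below reproduces this on a shortened, hypothetical copy of the mapping and shows one possible fix, which is an assumption rather than something the article does: treating "Dona" like "Don".

```python
import pandas as pd

# Shortened copy of the title mapping, for illustration only.
title_map = {"Mr": "Mr", "Miss": "Miss", "Don": "Royalty"}
raw = pd.Series(["Mr", "Dona", "Miss"])

# "Dona" has no key in the dictionary, so map() yields NaN for it.
mapped = raw.map(title_map)
print(mapped.isnull().sum())

# One possible fix (an assumption): add "Dona" as Royalty before mapping.
title_map_fixed = dict(title_map, Dona="Royalty")
mapped_fixed = raw.map(title_map_fixed)
print(mapped_fixed.tolist())
```

Left as is, the row with the missing title simply gets zeros in every Title_X dummy column created later.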

Processing the ages

We have seen in the first part that the Age variable was missing 177 values in the train set. This is a large number (about 20% of the 891 training rows). Simply replacing them with the mean or the median age might not be the best solution, since the age may differ by groups and categories of passengers.

To understand why, let’s group our dataset by Sex, Pclass, and Title, and compute the median age for each subset.

To avoid data leakage from the test set, we fill in the missing ages in both the train set and the test set using medians computed from the train set only.

Number of missing ages in the train set:

print(combined.iloc[:891].Age.isnull().sum())
# 177

Number of missing ages in the test set:

print(combined.iloc[891:].Age.isnull().sum())
# 86

grouped_train = combined.iloc[:891].groupby(['Sex', 'Pclass', 'Title'])
# numeric_only=True skips text columns such as Name and Ticket
grouped_median_train = grouped_train.median(numeric_only=True)
grouped_median_train = grouped_median_train.reset_index()[['Sex', 'Pclass', 'Title', 'Age']]

grouped_median_train.head()

This data frame will help us impute missing age values based on different criteria.

Look at the median age column and see how this value can be different based on the Sex, Pclass and Title put together.

For example:

- If the passenger is female, from Pclass 1, and from royalty the median age is 40.5.

- If the passenger is male, from Pclass 3, with a Mr title, the median age is 26.
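To see why group medians can be more informative than one global median, here is a small sketch on made-up ages (the `toy` frame is hypothetical and not taken from the Titanic data):

```python
import pandas as pd

# Made-up passengers: two groups with clearly different age profiles.
toy = pd.DataFrame({
    "Sex":    ["male", "male", "male", "female", "female", "female"],
    "Pclass": [3, 3, 3, 1, 1, 1],
    "Title":  ["Mr", "Mr", "Mr", "Mrs", "Mrs", "Mrs"],
    "Age":    [22.0, 26.0, 30.0, 35.0, 41.0, 58.0],
})

# A single median over everyone.
overall_median = toy["Age"].median()

# One median per (Sex, Pclass, Title) group.
group_medians = (
    toy.groupby(["Sex", "Pclass", "Title"])["Age"]
       .median()
       .reset_index()
)
print(overall_median)
print(group_medians)
```

In this toy case the global median sits between the two groups and matches neither, which is the situation the grouped imputation is meant to avoid.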

Let’s create a function that fills in the missing ages in combined based on these different attributes.

def fill_age(row):
    condition = (
        (grouped_median_train['Sex'] == row['Sex']) &
        (grouped_median_train['Title'] == row['Title']) &
        (grouped_median_train['Pclass'] == row['Pclass'])
    )
    return grouped_median_train[condition]['Age'].values[0]

def process_age():
    global combined
    # a function that fills the missing values of the Age variable
    combined['Age'] = combined.apply(lambda row: fill_age(row) if np.isnan(row['Age']) else row['Age'], axis=1)
    status('age')
    return combined

combined = process_age()

Perfect. The missing ages have been replaced.
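The row-wise `apply` used for the ages is easy to read but looks up the median once per row. A possible vectorized alternative, sketched here on a hypothetical toy frame, merges the train-only medians back onto the data and fills the gaps in one step. The `n_train` split and the `AgeMedian` column name are assumptions for illustration:

```python
import numpy as np
import pandas as pd

keys = ["Sex", "Pclass", "Title"]
toy = pd.DataFrame({
    "Sex":    ["male", "male", "male", "female", "female", "male"],
    "Pclass": [3, 3, 3, 1, 1, 3],
    "Title":  ["Mr", "Mr", "Mr", "Mrs", "Mrs", "Mr"],
    "Age":    [22.0, 30.0, np.nan, 40.0, np.nan, np.nan],
})
n_train = 4  # hypothetical split: the first 4 rows play the role of the train set

# Medians come from the "train" rows only, mirroring the leakage rule.
medians = (
    toy.iloc[:n_train]
       .groupby(keys)["Age"].median()
       .rename("AgeMedian")
       .reset_index()
)

# A left merge keeps the row order of `toy`.
filled = toy.merge(medians, on=keys, how="left")
filled["Age"] = filled["Age"].fillna(filled["AgeMedian"])
filled = filled.drop(columns="AgeMedian")
print(filled["Age"].tolist())
```

A group that never appears in the train rows would stay NaN after the merge, so a fallback such as the overall train median may still be needed.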

However, we notice a missing value in Fare, two missing values in Embarked and a lot of missing values in Cabin. We’ll come back to these variables later.

Let’s now process the names.

def process_names():
    global combined
    # we clean the Name variable
    combined.drop('Name', axis=1, inplace=True)

    # encoding in dummy variable
    titles_dummies = pd.get_dummies(combined['Title'], prefix='Title')
    combined = pd.concat([combined, titles_dummies], axis=1)

    # removing the title variable
    combined.drop('Title', axis=1, inplace=True)

    status('names')
    return combined

This function drops the Name column since we won’t be using it anymore because we created a Title column.

Then we encode the title values using a dummy encoding.

combined = process_names()

combined.head()

As you can see :

- there is no longer a name feature.

- new variables (Title_X) appeared. These features are binary.

- For example, if Title_Mr = 1, the corresponding Title is Mr.
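The dummy encoding described in this list can be seen on a tiny example. This is a sketch on a hypothetical `toy` frame; `dtype=int` is passed so the columns hold 0/1, since recent pandas versions return booleans by default:

```python
import pandas as pd

toy = pd.DataFrame({"Title": ["Mr", "Miss", "Mr", "Master"]})

# One 0/1 column per distinct title, named Title_<value>.
dummies = pd.get_dummies(toy["Title"], prefix="Title", dtype=int)
print(dummies.columns.tolist())
print(dummies)
```

Each row has exactly one column set to 1, so the original Title value can always be recovered from the dummies.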

Processing Fare

Let’s impute the missing fare value with the average fare computed on the train set.

def process_fares():
    global combined
    # there's one missing fare value - replacing it with the mean of the train set
    combined['Fare'] = combined['Fare'].fillna(combined.iloc[:891]['Fare'].mean())
    status('fare')
    return combined

This function simply replaces the one missing Fare value with the mean fare of the train set.

combined = process_fares()

Processing Embarked

def process_embarked():
    global combined
    # two missing embarked values - filling them with the most frequent one in the train set (S)
    combined['Embarked'] = combined['Embarked'].fillna('S')

    # dummy encoding
    embarked_dummies = pd.get_dummies(combined['Embarked'], prefix='Embarked')
    combined = pd.concat([combined, embarked_dummies], axis=1)
    combined.drop('Embarked', axis=1, inplace=True)

    status('embarked')
    return combined

This function replaces the two missing values of Embarked with the most frequent Embarked value.
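The function hard-codes 'S' as the most frequent port. A small sketch of how that value could be computed from the train rows instead, using a hypothetical `toy` frame and split point:

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({"Embarked": ["S", "C", "S", np.nan, "Q", "S", np.nan]})
n_train = 5  # hypothetical: the first 5 rows stand in for the train set

# mode() ignores NaN and returns the most frequent value(s); take the first.
most_frequent = toy.iloc[:n_train]["Embarked"].mode()[0]

toy["Embarked"] = toy["Embarked"].fillna(most_frequent)
print(most_frequent, toy["Embarked"].isnull().sum())
```

Computing the value this way keeps the code correct if the data changes, at the cost of one extra line.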

combined = process_embarked()

combined.head()

Processing Cabin

train_cabin, test_cabin = set(), set()

for c in combined.iloc[:891]['Cabin']:
    try:
        train_cabin.add(c[0])
    except TypeError:
        # missing cabins are NaN (a float), which cannot be indexed
        train_cabin.add('U')

for c in combined.iloc[891:]['Cabin']:
    try:
        test_cabin.add(c[0])
    except TypeError:
        test_cabin.add('U')

print(train_cabin)
# set(['A', 'C', 'B', 'E', 'D', 'G', 'F', 'U', 'T'])

print(test_cabin)
# set(['A', 'C', 'B', 'E', 'D', 'G', 'F', 'U'])

We don’t have any cabin letter in the test set that is not present in the train set.
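This claim can be checked directly with a set difference instead of comparing the two printed sets by eye. The sketch below copies the two sets from the output above; the variable names `unseen_in_train` and `only_in_train` are hypothetical:

```python
# Cabin letters as printed above for the train and test sets.
train_cabin = {"A", "B", "C", "D", "E", "F", "G", "T", "U"}
test_cabin = {"A", "B", "C", "D", "E", "F", "G", "U"}

# Letters that appear only in the test set (would be unseen during training).
unseen_in_train = test_cabin - train_cabin

# Letters that appear only in the train set.
only_in_train = train_cabin - test_cabin

print(unseen_in_train, only_in_train)
```

The letter T appears only in the train set, so its dummy column will be all zeros for the test rows.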

def process_cabin():
    global combined
    # replacing missing cabins with U (for Unknown)
    combined['Cabin'] = combined['Cabin'].fillna('U')

    # mapping each Cabin value with the cabin letter
    combined['Cabin'] = combined['Cabin'].map(lambda c: c[0])

    # dummy encoding ...
    cabin_dummies = pd.get_dummies(combined['Cabin'], prefix='Cabin')
    combined = pd.concat([combined, cabin_dummies], axis=1)

    combined.drop('Cabin', axis=1, inplace=True)
    status('cabin')
    return combined

This function replaces NaN values with U (for Unknown). It then maps each Cabin value to its first letter. Finally, it encodes the cabin values using dummy encoding again.

combined = process_cabin()

Ok, no missing values now.

combined.head()

Processing Sex

def process_sex():
    global combined
    # mapping string values to numerical one
    combined['Sex'] = combined['Sex'].map({'male': 1, 'female': 0})
    status('Sex')
    return combined

This function maps the string values male and female to 1 and 0 respectively.

combined = process_sex()

Processing Pclass

def process_pclass():
    global combined
    # encoding into 3 categories:
    pclass_dummies = pd.get_dummies(combined['Pclass'], prefix="Pclass")

    # adding dummy variable
    combined = pd.concat([combined, pclass_dummies], axis=1)

    # removing "Pclass"
    combined.drop('Pclass', axis=1, inplace=True)

    status('Pclass')
    return combined

This function encodes the values of Pclass (1,2,3) using a dummy encoding.

combined = process_pclass()

Processing Ticket

Let's first see how many different ticket prefixes we have in our dataset.

def cleanTicket(ticket):
    ticket = ticket.replace('.', '')
    ticket = ticket.replace('/', '')
    ticket = ticket.split()
    ticket = map(lambda t : t.strip(), ticket)
    ticket = list(filter(lambda t : not t.isdigit(), ticket))
    if len(ticket) > 0:
        return ticket[0]
    else:
        return 'XXX'

tickets = set()
for t in combined['Ticket']:
    tickets.add(cleanTicket(t))

print(len(tickets))
# 37
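To make the prefix extraction concrete, here is a compact sketch that follows the same steps as cleanTicket and runs it on a few ticket strings. The `ticket_prefix` name is hypothetical and the function is a restatement for illustration, not the author's code:

```python
def ticket_prefix(ticket):
    # Same steps as cleanTicket: drop '.' and '/', split on whitespace,
    # then keep the first token that is not purely digits.
    tokens = ticket.replace(".", "").replace("/", "").split()
    non_numeric = [t for t in tokens if not t.isdigit()]
    return non_numeric[0] if non_numeric else "XXX"

examples = ["A/5 21171", "PC 17599", "STON/O2. 3101282", "113803"]
prefixes = [ticket_prefix(t) for t in examples]
print(prefixes)  # ['A5', 'PC', 'STONO2', 'XXX']
```

Tickets made only of digits fall into the catch-all 'XXX' category.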

def process_ticket():
    global combined

    # a function that extracts each prefix of the ticket, returns 'XXX' if no prefix (i.e. the ticket is a digit)
    def cleanTicket(ticket):
        ticket = ticket.replace('.', '')
        ticket = ticket.replace('/', '')
        ticket = ticket.split()
        ticket = map(lambda t : t.strip(), ticket)
        # filter() returns an iterator in Python 3, so wrap it in list() before calling len()
        ticket = list(filter(lambda t : not t.isdigit(), ticket))
        if len(ticket) > 0:
            return ticket[0]
        else:
            return 'XXX'

    # Extracting dummy variables from tickets:
    combined['Ticket'] = combined['Ticket'].map(cleanTicket)
    tickets_dummies = pd.get_dummies(combined['Ticket'], prefix='Ticket')
    combined = pd.concat([combined, tickets_dummies], axis=1)
    combined.drop('Ticket', inplace=True, axis=1)

    status('Ticket')
    return combined

combined = process_ticket()

Processing Family

This part includes creating new variables based on the size of the family (the size is, by the way, another variable we create).

This creation of new variables is done under a realistic assumption: Large families are grouped together, hence they are more likely to get rescued than people traveling alone.

def process_family():
    global combined
    # introducing a new feature : the size of families (including the passenger)
    combined['FamilySize'] = combined['Parch'] + combined['SibSp'] + 1

    # introducing other features based on the family size
    combined['Singleton'] = combined['FamilySize'].map(lambda s: 1 if s == 1 else 0)
    combined['SmallFamily'] = combined['FamilySize'].map(lambda s: 1 if 2 <= s <= 4 else 0)
    combined['LargeFamily'] = combined['FamilySize'].map(lambda s: 1 if 5 <= s else 0)

    status('family')
    return combined

This function introduces 4 new features:

- FamilySize : the total number of relatives, including the passenger themselves.

- Singleton : a boolean variable that describes families of size = 1

- SmallFamily : a boolean variable that describes families of 2 <= size <= 4

- LargeFamily : a boolean variable that describes families of size >= 5
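The three size buckets can also be written with vectorized comparisons. A sketch on a hypothetical `toy` frame, using the same thresholds as process_family:

```python
import pandas as pd

toy = pd.DataFrame({"SibSp": [0, 1, 1, 4], "Parch": [0, 0, 2, 2]})

# Family size counts siblings/spouses, parents/children, and the passenger.
toy["FamilySize"] = toy["SibSp"] + toy["Parch"] + 1

# Each comparison yields booleans, converted to 0/1 with astype(int).
toy["Singleton"] = (toy["FamilySize"] == 1).astype(int)
toy["SmallFamily"] = toy["FamilySize"].between(2, 4).astype(int)  # inclusive bounds
toy["LargeFamily"] = (toy["FamilySize"] >= 5).astype(int)
print(toy)
```

Every passenger falls into exactly one of the three buckets, so the flags sum to 1 on each row.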

combined = process_family()

print(combined.shape)
# (1309, 67)

We end up with a total of 67 features.

combined.head()

III — Modeling

In this part, we use our knowledge of the passengers, based on the features we created, to build a statistical model. You can think of this model as a box that crunches the information of any new passenger and decides whether or not they survive.

There is a wide variety of models to use, from logistic regression to decision trees and more sophisticated ones such as random forests and gradient boosted trees.

We’ll be using Random Forests. Random Forests have proven very effective in Kaggle competitions.

Back to our problem, we now have to:

- Break the combined dataset in the train set and test set.

- Use the train set to build a predictive model.

- Evaluate the model using the train set.

- Test the model using the test set and generate an output file for the submission.

Keep in mind that we’ll have to iterate on the second and third steps until an acceptable evaluation score is achieved.

Let’s start by importing useful libraries.

from sklearn.pipeline import make_pipeline
from sklearn.ensemble import RandomForestClassifier
# GradientBoostingClassifier is imported from the public sklearn.ensemble module
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.feature_selection import SelectKBest
from sklearn.model_selection import StratifiedKFold
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import cross_val_score
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression, LogisticRegressionCV

To evaluate our model we’ll be using a 5-fold cross-validation with the accuracy since it’s the metric that the competition uses in the leaderboard.

To do that, we’ll define a small scoring function.

def compute_score(clf, X, y, scoring='accuracy'):
    xval = cross_val_score(clf, X, y, cv=5, scoring=scoring)
    return np.mean(xval)
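To show what the scoring function averages, here is a sketch that runs 5-fold cross-validation on synthetic data and keeps the per-fold scores. The dataset from `make_classification`, the explicit shuffled `StratifiedKFold`, and `max_iter=1000` are assumptions for illustration, not settings from the article:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic binary classification data standing in for the Titanic features.
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# Stratified folds keep the class balance similar in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# One accuracy value per fold; the mean is what compute_score returns.
fold_scores = cross_val_score(
    LogisticRegression(max_iter=1000), X, y, cv=cv, scoring="accuracy"
)
mean_score = np.mean(fold_scores)
print(fold_scores, mean_score)
```

Looking at the spread of the five fold scores, and not only their mean, gives a rough idea of how stable the estimate is.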

Recovering the train set and the test set from the combined dataset is an easy task.

def recover_train_test_target():
    global combined

    targets = pd.read_csv('./data/train.csv', usecols=['Survived'])['Survived'].values
    train = combined.iloc[:891]
    test = combined.iloc[891:]

    return train, test, targets

train, test, targets = recover_train_test_target()

Feature selection

We've ended up with 67 features so far. This number is quite large.

When feature engineering is done, we usually try to decrease the dimensionality by selecting the "right" number of features that capture the essential information.

In fact, feature selection comes with many benefits:

- It decreases redundancy among the data

- It speeds up the training process

- It reduces overfitting

Tree-based estimators can be used to compute feature importance, which in turn can be used to discard irrelevant features.

clf = RandomForestClassifier(n_estimators=50, max_features='sqrt')

clf = clf.fit(train, targets)

Let’s have a look at the importance of each feature.

features = pd.DataFrame()
features['feature'] = train.columns
features['importance'] = clf.feature_importances_
features.sort_values(by=['importance'], ascending=True, inplace=True)
features.set_index('feature', inplace=True)

features.plot(kind='barh', figsize=(25, 25))

As you may notice, Title_Mr, Age, Fare, and Sex carry the most importance.

PassengerId does not appear in this ranking, because it was dropped when the train and test sets were combined.

Let’s now transform our train set and test set into more compact datasets.

model = SelectFromModel(clf, prefit=True)

train_reduced = model.transform(train)
print(train_reduced.shape)
# (891, 14)

test_reduced = model.transform(test)
print(test_reduced.shape)
# (418, 14)

Yay! Now we’re down to a lot fewer features.

We’ll see if we’ll use the reduced or the full version of the train set.
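One caveat when scoring on a reduced set: if the features are selected once on the whole train set and the result is then cross-validated, each validation fold has already influenced the selection. A possible way around this, sketched here on synthetic data, is to put the selector inside a pipeline so it is refit on every training fold. The dataset and the hyperparameters below are assumptions for illustration:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Synthetic data: 30 features, only a few of them informative.
X, y = make_classification(
    n_samples=300, n_features=30, n_informative=5, random_state=0
)

# The selector is not prefit: it is fitted inside each cross-validation fold.
selector = SelectFromModel(RandomForestClassifier(n_estimators=50, random_state=0))
pipeline = make_pipeline(selector, LogisticRegression(max_iter=1000))

scores = cross_val_score(pipeline, X, y, cv=5, scoring="accuracy")
print(scores.mean())
```

The same pipeline object can be passed to a scoring helper that accepts any scikit-learn estimator.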

Let’s try different base models:

logreg = LogisticRegression()
logreg_cv = LogisticRegressionCV()
rf = RandomForestClassifier()
gboost = GradientBoostingClassifier()

models = [logreg, logreg_cv, rf, gboost]

for model in models:
    print('Cross-validation of : {0}'.format(model.__class__))
    score = compute_score(clf=model, X=train_reduced, y=targets, scoring='accuracy')
    print('CV score = {0}'.format(score))
    print('****')

Output:

Cross-validation of : <class 'sklearn.linear_model.logistic.LogisticRegression'>
CV score = 0.817071431282
****
Cross-validation of : <class 'sklearn.linear_model.logistic.LogisticRegressionCV'>
CV score = 0.819318764148
****
Cross-validation of : <class 'sklearn.ensemble.forest.RandomForestClassifier'>
CV score = 0.805891969854
****
Cross-validation of : <class 'sklearn.ensemble.gradient_boosting.GradientBoostingClassifier'>
CV score = 0.830560996274
****

Hyperparameters tuning

As mentioned at the beginning of the Modeling part, we will be using a Random Forest model. It may not be the best model for this task, but we’ll show how to tune it. The same approach can be applied to other models.

Random Forests are quite handy. They do, however, come with some parameters to tweak in order to get an optimal model for the prediction task.

To learn more about Random Forests, you can refer to this link :

Additionally, we’ll use the full train set.

# set run_gs to True if you want to run the grid search again.
run_gs = False

if run_gs:
    parameter_grid = {
        'max_depth': [4, 6, 8],
        'n_estimators': [50, 10],
        # 'auto' is no longer accepted by recent scikit-learn; for classifiers it meant 'sqrt'
        'max_features': ['sqrt', 'log2'],
        'min_samples_split': [2, 3, 10],
        'min_samples_leaf': [1, 3, 10],
        'bootstrap': [True, False],
    }
    forest = RandomForestClassifier()
    cross_validation = StratifiedKFold(n_splits=5)

    grid_search = GridSearchCV(forest,
                               scoring='accuracy',
                               param_grid=parameter_grid,
                               cv=cross_validation,
                               verbose=1)

    grid_search.fit(train, targets)
    model = grid_search
    parameters = grid_search.best_params_

    print('Best score: {}'.format(grid_search.best_score_))
    print('Best parameters: {}'.format(grid_search.best_params_))

else:
    parameters = {'bootstrap': False, 'min_samples_leaf': 3, 'n_estimators': 50,
                  'min_samples_split': 10, 'max_features': 'sqrt', 'max_depth': 6}

    model = RandomForestClassifier(**parameters)
    model.fit(train, targets)
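Grid search cost grows multiplicatively with every parameter list. The sketch below counts the candidates for a grid shaped like the one above (with 'sqrt' and 'log2' as the max_features options, which is an assumption) and then runs a much smaller search on synthetic data to show the fitted attributes. The `small_grid` values and the dataset are hypothetical:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, ParameterGrid, StratifiedKFold

grid = {
    "max_depth": [4, 6, 8],
    "n_estimators": [50, 10],
    "max_features": ["sqrt", "log2"],
    "min_samples_split": [2, 3, 10],
    "min_samples_leaf": [1, 3, 10],
    "bootstrap": [True, False],
}
n_candidates = len(ParameterGrid(grid))  # 3 * 2 * 2 * 3 * 3 * 2
n_fits = n_candidates * 5                # one fit per candidate per fold
print(n_candidates, n_fits)

# A much smaller grid on synthetic data, just to show the fitted attributes.
X, y = make_classification(n_samples=200, n_features=10, random_state=0)
small_grid = {"max_depth": [2, 4], "n_estimators": [10, 20]}
search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid=small_grid,
    scoring="accuracy",
    cv=StratifiedKFold(n_splits=5),
)
search.fit(X, y)

# With the default refit=True, the best candidate is retrained on all of X, y.
best_model = search.best_estimator_
print(search.best_params_, search.best_score_)
```

With 216 candidates and 5 folds, the full grid means 1080 model fits, which is why the article keeps the search behind a flag.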

Now that the model is built by scanning several combinations of the hyperparameters, we can generate an output file to submit on Kaggle.

output = model.predict(test).astype(int)

df_output = pd.DataFrame()

aux = pd.read_csv('./data/test.csv')

df_output['PassengerId'] = aux['PassengerId']

df_output['Survived'] = output

df_output[['PassengerId','Survived']].to_csv('./predictions/gridsearch_rf.csv', index=False)
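Before uploading, it can help to sanity-check the submission frame. The sketch below uses a hypothetical `check_submission` helper on a toy frame; the specific checks are assumptions about what a valid file looks like (two columns, one row per test passenger, binary predictions), not an official validator:

```python
import pandas as pd

# Toy stand-in for df_output; the real frame has one row per test passenger.
df_output = pd.DataFrame({"PassengerId": [892, 893, 894], "Survived": [0, 1, 0]})

def check_submission(df, expected_rows):
    # Hypothetical helper: returns a list of problems, empty when the frame looks valid.
    if list(df.columns) != ["PassengerId", "Survived"]:
        return ["unexpected columns"]
    problems = []
    if len(df) != expected_rows:
        problems.append("unexpected row count")
    if not df["Survived"].isin([0, 1]).all():
        problems.append("Survived must be 0 or 1")
    if df["PassengerId"].duplicated().any():
        problems.append("duplicate PassengerId")
    return problems

print(check_submission(df_output, expected_rows=3))  # []
```

For the real output, `expected_rows` would be 418, the number of rows in the test set.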

IV — Conclusion

In this article, we explored an interesting dataset brought to us by Kaggle.

We went through the basic bricks of a data science pipeline:

- Data exploration and visualization: an initial step to formulating hypotheses

- Data cleaning

- Feature engineering

- Feature selection

- Hyperparameters tuning

- Submission

- Blending

Lots of articles have been written about this challenge, so obviously there is room for improvement.

Here is what I suggest for the next steps:

- Dig more in the data and eventually build new features.

- Try different models: logistic regression, gradient boosted trees, XGBoost, …

- Try ensemble learning techniques (stacking)

- Run auto-ML frameworks

I would be more than happy if you could find a way to improve my solution. I would then update the article and give you credit for it. So feel free to post a comment.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.