This AI newsletter is all you need #46

Last Updated on July 25, 2023 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

This week we were interested to see some of the latest advancements in NLP and LLMs being applied to biology and medicine. OpenAI was also in the news again with the gradual rollout of its Code Interpreter plugin (offering a personal data analyst assistant) and rumors suggesting an upcoming release of the 32k token context length GPT-4 variant. We also saw the flow of new Open Source models continue including 3B and 7B RedPajama-INCITE from Together and MPT-7B from MosaicML.

Building upon parallels between NLP and single-cell biology, a new preprint introduced scGPT, a large language model tailored specifically for single-cell biology. Trained on a vast dataset of 10 million cells, scGPT demonstrates its ability to learn representations of cells and genes simultaneously. By conditioning existing cells, the model gradually learns to generate gene expressions based on varying conditions. scGPT incorporates a specialized attention masking procedure that determines the order of gene predictions using attention scores. This innovative approach captures gene interactions and establishes an order among genes, mimicking the auto-regressive nature of text generation.

We also saw AI applied to vaccine development with scientists at the California division of Baidu Research introducing a novel software called LinearDesign. Drawing inspiration from computational linguistics, LinearDesign aims to design mRNA sequences with more intricate shapes and structures compared to current vaccines, enabling genetic material to persist for longer.

We expect to see rapid application of the latest AI models to biology and medicine, as well as to AI assistants to doctors and health professionals. We see the huge positive potential for these products once optimized and approved for use, but as AI continues to advance, particularly in the fields of medicine and biology, the need for robust AI regulation becomes increasingly apparent. And on this note, we saw more movement on the regulatory front this week with a WhiteHouse AI meeting focussed on mitigating AI risks and prioritizing the safety of AI products. The government also released the Blueprint for an AI Bill of Rights and we expect to see more firm AI regulation over the coming months.

The HackAPrompt competition by Learn Prompting

HackAPrompt, organized by Learn Prompting and advised by Towards AI, is aimed at enhancing AI safety. In this competition, participants will try to hack as many prompts as possible by injecting, leaking, and defeating the sandwich defense. The competition is designed to be beginner-friendly, welcoming even non-technical people to participate.

The competition runs from May 5th, 6:00 pm to May 26th, 11:59 pm EST

Participate here to win prizes worth over $35,000!

Check the HackAPrompt submission tutorial here.

Hottest News

MPT-7B is a transformer model trained from scratch on a dataset containing 1 trillion tokens of text and code. It is an open-source model that can be used for commercial purposes and delivers comparable quality to LLaMA-7B. The training of MPT-7B took place on the MosaicML platform, requiring only 9.5 days and costing approximately $200,000, without any human intervention.

RedPajama releases a model trained on its base dataset. It is a 3 billion and a 7B parameter base model that aims to replicate the LLaMA recipe as closely as possible. The 3B model is the strongest in its class, and the small size makes it extremely fast and accessible.

3. Google Plans to Make Search More ‘Personal’ with AI Chat and Video Clips

Google is undergoing a shift in the way it displays search results by integrating conversations with artificial intelligence, as well as incorporating more short videos and social media posts. This marks a departure from its traditional format of displaying website results, which has been the foundation of its dominance as a search engine for several decades.

4. Microsoft’s Bing Chat AI is now open to everyone, with plug-ins coming soon

Microsoft is now offering its Bing GPT-4 chatbot to the public, eliminating the need for a waitlist. To access the open preview version powered by GPT-4, simply sign in to the new Bing or Edge using your Microsoft account.

5. The amazing AI super tutor for students and teachers, Khan Academy

Sal Khan, the founder, and CEO of Khan Academy, believes that artificial intelligence has the potential to ignite the most significant positive transformation in education. He showcases some exciting new features of their educational chatbot, Khanmigo.

5-minute reads/videos to keep you learning

With the advancing capabilities of GPT-4 and the imminent widespread availability of Microsoft Copilot, work dynamics are expected to undergo significant transformations within months, rather than years.

2. This Company Adopted AI. Here’s What Happened To Its Human Workers

The article explores the adoption of AI by a company and its impact on human workers. The implementation resulted in improved efficiency and the transformation of certain job roles, but it did not lead to job losses. Instead, employees were provided with upskilling opportunities to collaborate with AI in their work.

3. A list of open LLMs available for commercial use

The list comprises open LLMs that are commercially available, featuring models like T5, Dolly, and specialized LLMs for Code such as Replit Code and Santa Coder.

4. Text-to-Video: The Task, Challenges and the Current State

This blog post delves into the past, present, and future of text-to-video models. It begins by examining the distinctions between text-to-video and text-to-image tasks and explores the specific challenges associated with unconditional and text-conditioned video generation.

5. Google “We Have No Moat, And Neither Does OpenAI”

This document recently leaked and shared by an anonymous individual on a public Discord server, originates from a researcher within Google. The anonymous individual has granted permission for its republication.

Papers & Repositories

The paper introduces Distilling step-by-step, a new mechanism that trains smaller models that outperform LLMs, and achieves so by leveraging less training data needed by finetuning or distillation. The method extracts LLM rationales as additional supervision for small models within a multi-task training framework.

MLC LLM is a universal solution that enables the deployment of language models on various hardware backends and native applications. It also provides a productive framework for optimizing model performance according to specific use cases. The system operates locally without the need for server support and utilizes local GPUs on mobile devices and laptops for accelerated processing.

3. Unlimiformer: Long-Range Transformers with Unlimited Length Input

This work introduces Unlimiformer, a general approach that can enhance any existing pre-trained encoder-decoder transformer. It achieves this by efficiently distributing the attention computation across all layers using a single nearest-neighbor index. The index can be stored in either GPU or CPU memory and allows for sub-linear time queries.

4. Significant-Gravitas/Auto-GPT-Plugins: Plugins for Auto-GPT

This repository contains Auto-GPT plugins, including both first-party (included by default) and third-party plugins (added individually). First-party plugins are recommended for general use and wide adoption, while third-party plugins are more suited for specific personal needs.

5. Are Emergent Abilities of Large Language Models a Mirage?

The paper introduces an alternative explanation for emergent abilities, suggesting that when examining fixed model outputs for a specific task and model family, the choice of metric can influence the inference of emergent abilities. The analysis finds strong supporting evidence that emergent abilities may not be a fundamental property of scaling AI models.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

The Learn AI Together Community section!

Upcoming Community Events

The Learn AI Together Discord community hosts weekly AI seminars to help the community learn from industry experts, ask questions, and get a deeper insight into the latest research in AI. Join us for free, interactive video sessions hosted live on Discord weekly by attending our upcoming events.

@george.balatinski is hosting a workshop to harness the power of ChatGPT to create real-world projects for the browser using JavaScript, CSS, and HTML. The interactive sessions are focused on building a portfolio, guiding you to create a compelling showcase of your talents, projects, and achievements. Engage in pair programming exercises, work side-by-side with fellow developers, and foster hands-on learning and knowledge sharing. In addition, we offer valuable networking opportunities with our and other communities in Web and AI. Discover how to seamlessly convert code to popular frameworks like Angular and React and tap into the limitless potential of AI-driven development. Don’t miss this chance to elevate your web development expertise and stay ahead of the curve. Join us at our next meetup here and experience the future of coding with ChatGPT! You can get familiar with some of the additional content here.

Date & Time: 2nd June, 12:00 pm EST

Add our Google calendar to see all our free AI events!

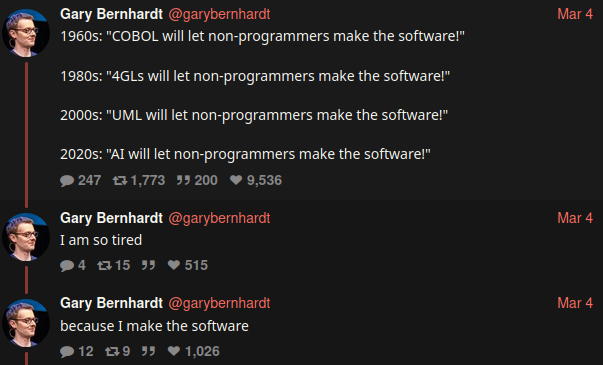

Meme of the week!

Meme shared by Recruiter6061#8864

Featured Community post from the Discord

rmarquet#2048 has developed a Streamlit component that offers a text annotation tool. This tool enables users to highlight text, add annotations, and categorize annotations under various references. Take a look at it here and support a fellow community member! Feel free to share your feedback and questions in the discussion thread here.

AI poll of the week!

Join the discussion on Discord.

TAI Curated section

Article of the week

The Multilayer Perceptron: Built and Implemented from Scratch by David Cullen

This article provides a comprehensive overview of the multilayer perceptron, addressing its key aspects through mathematical, visual, and programmatic explanations. The approach taken allows readers to grasp the intuition behind the multilayer perceptron through a step-by-step method presented in bite-sized portions.

Our must-read articles

MLCoPilot: Empowering Large Language Models with Human Intelligence for ML Problem Solving by Sriram Parthasarathy

Generative AI: A Game Changer by Patrick Meyer

Beyond Accuracy: How to Enable Responsible AI Development using Amazon SageMaker by John Leung

If you are interested in publishing with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.

Job offers

Senior Software Engineer (Python) @Reserv (Remote)

Lead Software Engineer @Charger Logistics Inc (Remote)

Intern — Software Engineer @Displayr (Sydney, Australia/Temporary)

Software Engineering Manager @Lucidya (Remote)

Data Processing Specialist @Hyperscience (Remote)

Data Analyst intern @Dott (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.