The Rise of Vector Databases: Understanding Vector Search and RAG Pipeline

Last Updated on June 11, 2024 by Editorial Team

Author(s): Shwetha Acharya

Originally published on Towards AI.

What is a Vector?

A vector is an object that has both magnitude and direction. It is represented as an array of numbers, and the length of that array is its dimensionality. Here is an example of how two vectors, [3, -1, 4] and [-2, 3, 1], are depicted in 3D (in red and blue). The plot shows how far apart or close together these 2 entities are.

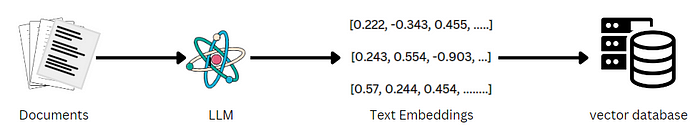

This concept is applied in ML to interpret and match data of any kind: text, images, audio, or video. Embedding models (such as those from OpenAI and Hugging Face) are used to convert text data such as sentences, paragraphs, and documents into numeric vectors. Such representations are called text embeddings. For example, “It is sunny today.” can be encoded as [-0.001798793, 0.978899863, …, -0.0036557987, -0.256577990] by applying a text embedding model, and can then be represented as a point in a multi-dimensional space. Similarly, images can be represented as vectors (image embeddings), which encode the semantics of their contents.

Vector Databases

Vector databases are databases that store vector representations. These numeric representations are stored in “collections” in vector DBs, just as we store records in tables in an RDBMS. Each record is identified by a unique id, and we can perform insert, read, update, and delete operations on records. Some of the popular vector databases are Chroma, Pinecone, Milvus, and Qdrant.
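To make the idea of a collection concrete, here is a minimal in-memory sketch. `ToyCollection` and its method names are hypothetical and written only for illustration; they are not the API of Chroma, Pinecone, Milvus, or Qdrant, which add indexing, persistence, and much more.

```python
import numpy as np

class ToyCollection:
    """In-memory stand-in for a vector DB collection (hypothetical, for illustration only)."""

    def __init__(self, dim):
        self.dim = dim
        self.records = {}  # record id -> (vector, payload)

    def upsert(self, record_id, vector, payload=None):
        # Insert a new record, or overwrite the record that already has this id.
        vec = np.asarray(vector, dtype=float)
        if vec.shape != (self.dim,):
            raise ValueError(f"expected a vector of length {self.dim}")
        self.records[record_id] = (vec, payload or {})

    def get(self, record_id):
        # Read a record by id; returns None if the id is unknown.
        return self.records.get(record_id)

    def delete(self, record_id):
        # Remove a record; returns True if something was deleted.
        return self.records.pop(record_id, None) is not None

collection = ToyCollection(dim=3)
collection.upsert(1, [3, -1, 4], {"label": "red"})    # insert
collection.upsert(2, [-2, 3, 1], {"label": "blue"})   # insert
collection.upsert(2, [-2, 3, 2], {"label": "blue"})   # update record 2
collection.delete(1)                                  # delete record 1
print(collection.get(2))
```

The unique id is what lets an update replace a record in place rather than add a duplicate.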

Vector search, i.e. searching a vector database for the records closest to a given query, is used for semantic search and for finding similar data. The nearest records are usually found with an Approximate Nearest Neighbor (ANN) algorithm. ANN finds points in a data set that are approximately, not necessarily exactly, the closest to the given query point, trading a little accuracy for speed. The distance between vectors is measured using one of these metrics: Euclidean, cosine, or inner product (IP). The closer two vectors are in this space, the more similar the meaning of their corresponding texts. Here is an example of a cosine similarity calculation between the vectors of 2 pieces of text.

%%capture

!pip install openai

import numpy as np

from openai import OpenAI

text1="chilly day"

text2="freezing cold"

# get open ai model

key = 'sk-T.......'

llm = OpenAI(api_key=key)

# function to generate text embeddings
def get_embeddings(text):
    emb = llm.embeddings.create(input=text, model="text-embedding-3-small")
    return emb.data[0].embedding

vectorA=get_embeddings(text1)

vectorB=get_embeddings(text2)

# function to calculate the cosine similarity between the 2 vectors
def cos_similarity(a, b):
    cos_sim = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return cos_sim

print("Similarity between the 2 vectors : ", cos_similarity(vectorA,vectorB))

Similarity between the 2 vectors : 0.574156855905206

The cosine similarity between these 2 texts is around 0.57, so they are related to a certain extent. Now, let’s plot 2 vectors against a query vector and see the similarity on a graph.

import matplotlib.pyplot as plt

#sample dataset

vector_data=[

[1.1, 2.3, 3.2],

[4.5, 6.9, 4.4]]

query=[1.0, 2.4, 3.9]

# function to plot 2 vector records against the query vector
def plot_vectors(a, b, query):
    fig = plt.figure()
    ax = plt.axes(projection="3d")
    ax.set_xlim([-2, 8])
    ax.set_ylim([-2, 8])
    ax.set_zlim([-2, 8])
    origin = [0, 0, 0]  # starting point for all the 3 vectors
    ax.quiver(origin[0], origin[1], origin[2], a[0], a[1], a[2], color="b")
    ax.quiver(origin[0], origin[1], origin[2], b[0], b[1], b[2], color="g")
    ax.quiver(origin[0], origin[1], origin[2], query[0], query[1], query[2], color="r")
    plt.show()

plot_vectors(vector_data[0], vector_data[1], query)
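The plot gives a visual impression of closeness. The same comparison can be made numerically with the three metrics mentioned earlier. The sketch below reuses the sample vectors from the plot; the helper names `euclidean`, `cosine`, and `inner_product` are my own and not part of any library.

```python
import numpy as np

# Same sample vectors as in the plot
a = np.array([1.1, 2.3, 3.2])
b = np.array([4.5, 6.9, 4.4])
query = np.array([1.0, 2.4, 3.9])

def euclidean(u, v):
    # Straight-line distance: smaller means closer
    return float(np.linalg.norm(u - v))

def cosine(u, v):
    # Cosine of the angle between the vectors: larger means more similar
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def inner_product(u, v):
    # Dot product: larger means more similar, but it also grows with vector length
    return float(np.dot(u, v))

for name, vec in [("a", a), ("b", b)]:
    print(name,
          "euclidean:", round(euclidean(query, vec), 3),
          "cosine:", round(cosine(query, vec), 3),
          "inner product:", round(inner_product(query, vec), 2))
```

Euclidean distance and cosine similarity both rank the first vector as closer to the query, while the raw inner product favors the second vector because it is longer. This is one reason the choice of metric matters, and why inner product is often used with normalized vectors.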

Why are Vector Databases so popular?

Vector databases retrieve similar items efficiently, which keeps similarity queries fast even over large collections. Hence, they are used extensively in NLP, recommendation engines, and image/video search. We will see how vector search can be performed in 3 easy steps using the Qdrant database. I have used a movies JSON file for this example.

1. Extract the required fields from the data: the summary and title of every movie/record.

!pip install jq

from langchain_community.document_loaders import JSONLoader

from langchain_community.vectorstores import Qdrant

from langchain_openai import OpenAIEmbeddings

import pandas as pd

#Load external dataset

# function to add the title / identifier of every record to its metadata
def get_title(record: dict, metadata: dict) -> dict:
    metadata["display_title"] = record.get("display_title")
    return metadata

loader = JSONLoader(file_path='movies.json', jq_schema='.[]',
                    content_key="summary_short", text_content=False,
                    metadata_func=get_title)

movies = loader.load()

movies

"""

[Document(page_content='Agnès Varda, photographer, installation artist....',

metadata={'source':'/content/movies.json','seq_num': 1,'display_title':'Varda by Agnès'}),

Document(page_content='The writer-director Trey Edward Shults ....',

metadata={'source':'/content/movies.json','seq_num': 2,'display_title':'Waves'}),

....]

"""

2. Create vector embeddings for “movies” using the OpenAI text embedding model and upload to the collection in vector DB.

#create vector embeddings for external dataset and upload to vector DB

key = 'sk-Z.........'

embeddings = OpenAIEmbeddings(api_key=key, model='text-embedding-3-small')

vectorstore = Qdrant.from_documents(movies, embeddings, location=":memory:",

collection_name="my_movies")

3. Run a search in the collection for a query by measuring the “similarity” (i.e. distance).

query = "movie about cars"

qdrant_docs = vectorstore.similarity_search_with_score(query, k=2)

#Format output in a table format

data=[{"Title": doc.metadata['display_title'], "Story" : doc.page_content, "Match" : score} for doc, score in qdrant_docs]

df=pd.DataFrame(data)

df

My query was about cars and, interestingly, the search picked “Ford vs Ferrari”, which is 87% related to the query!!
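Conceptually, the search step ranks the stored vectors by their similarity to the query vector and returns the top k. Here is a brute-force sketch of that idea with NumPy. The function `top_k_cosine` and the 3D vectors are made up for illustration. A real vector database such as Qdrant typically uses an ANN index instead of comparing the query against every record.

```python
import numpy as np

def top_k_cosine(query_vec, doc_vecs, k=2):
    """Return (index, score) pairs for the k rows of doc_vecs most similar to query_vec."""
    docs = np.asarray(doc_vecs, dtype=float)
    q = np.asarray(query_vec, dtype=float)
    # Normalize to unit length so that a dot product equals cosine similarity
    docs_unit = docs / np.linalg.norm(docs, axis=1, keepdims=True)
    q_unit = q / np.linalg.norm(q)
    scores = docs_unit @ q_unit
    # Sort by descending score and keep the first k indices
    order = np.argsort(-scores)[:k]
    return [(int(i), float(scores[i])) for i in order]

# Made-up 3D "embeddings" standing in for real movie summaries
toy_vectors = [
    [0.9, 0.1, 0.0],
    [0.1, 0.9, 0.1],
    [0.8, 0.2, 0.1],
]
results = top_k_cosine([1.0, 0.0, 0.0], toy_vectors, k=2)
print(results)
```

The exact search above scans every record, so its cost grows linearly with the collection size. ANN indexes avoid that scan at the price of occasionally missing a true nearest neighbor.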

We can embed vector search in an LLM pipeline to improve its accuracy. This technique is called Retrieval-Augmented Generation or RAG. The growing popularity of vector databases can be attributed to the increased adoption of RAG. Let’s understand this a bit more. As background, there are broadly 2 ways to improve the accuracy of an LLM and reduce hallucinations:

- Re-train or fine-tune the base model on desired domain data.

- Retrieval-Augmented Generation or RAG: supplement the base LLM with an external knowledge base, such as files, database items, or text stored in vector databases (as vector embeddings), and perform vector search over it.

In RAG, the model uses not only its training data but also additional data (stored in vector databases) to provide more contextual answers. RAG is a more resource-efficient and cost-effective way to introduce new data to an LLM than fine-tuning or retraining, which incorporate the additional knowledge into the model itself. Major applications of RAG include customer support bots and recommendation engines.
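Before the full implementation, the retrieve-then-generate idea can be sketched without any API calls. In the toy example below, a simple word-overlap score stands in for vector search, and the final LLM call is left out: the sketch stops at the prompt that would be sent to the model. The function names and the tiny knowledge base are invented for illustration.

```python
def retrieve(question, documents, k=2):
    """Rank documents by shared words with the question (toy stand-in for vector search)."""
    q_words = set(question.lower().split())
    scored = [(len(q_words & set(doc.lower().split())), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep the k best documents that share at least one word with the question
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(question, context_docs):
    """Combine the retrieved text and the question into a single prompt."""
    context = "\n\n".join(context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Invented knowledge base; in a real pipeline these would be chunks of your own documents
knowledge_base = [
    "Employees accrue 20 days of paid leave per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth of each month.",
]
question = "How many days of paid leave do employees get?"
prompt = build_prompt(question, retrieve(question, knowledge_base, k=1))
print(prompt)
```

The model never needs to be retrained on the leave policy. The relevant text is simply fetched at query time and placed in the prompt, which is the core of the RAG approach.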

Let’s dive into a simple RAG implementation: we will create a Q&A RAG flow with a text file as our external data source.

First, we will create vector embeddings of the text file and load them into our database.

from langchain_community.document_loaders import TextLoader

from langchain_community.vectorstores import Qdrant

from langchain_openai import OpenAIEmbeddings

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

# load text doc

!wget "https://raw.githubusercontent.com/sacharya225/data-expts/master/RAG/state_of_the_union.txt"

loader = TextLoader("state_of_the_union.txt")

data = loader.load()

#split doc into chunks of size 1000

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

docs = text_splitter.split_documents(data)

# get OpenAI Embedding model

key="sk-t......."

embeddings = OpenAIEmbeddings(api_key=key)

# upload vector embeddings into Qdrant

vectorstore = Qdrant.from_documents(docs, embeddings, location=":memory:",

collection_name="sotu")

Once all the data is available in the vector database, we’ll create a retrieval module. This returns the top entries from our database that match our query. The output will be used to set the context/background for our LLM chat model.

#Retrieval module

query = 'What did the president say about Ketanji Brown Jackson'

# retrieve data that match query to form context .

docs = vectorstore.similarity_search_with_score(query)

#above returns a list of tuples of matched Document and scores

"""

[(Document(page_content='Tonight. I call........',

metadata={'source': 'state_of_the_union.txt', '_id': '6e0bfd4dfe5844a3b366194168d1d768', '_collection_name': 'sotu'}),

0.8153497264571492),

(Document(page_content='A former top........',

metadata={'source': 'state_of_the_union.txt', '_id': 'ed214a222fdd4f118a5be2cf94a06493', '_collection_name': 'sotu'}),

0.7824609310325081),.....]

"""

# join all matched documents and generate a context/answer

context_text = "\n\n".join([doc.page_content for doc, score in docs])

Next, we will create a prompt template and use the matched & retrieved data to form a context. We’ll then feed the prompt to the model for Q&A.

#create/use a prompt template

template = """

You are an AI Assistant that follows instructions extremely well.

Assist with answers for questions based on the context: {context}

Use this context and answer the question : {question} """

# get prompt template and load context and query

prompt_template = ChatPromptTemplate.from_template(template)

prompt = prompt_template.format(context=context_text, question=query)

# Invoke LLM chat model to generate answer based on the context and query

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", api_key=key)

response=llm.invoke(prompt)

print(response.content)

"""

The President praised Ketanji Brown Jackson as one of our nation's top legal

minds who will continue Justice Breyer's legacy of excellence.

He mentioned her background as a former top litigator in private practice and

federal public defender, as well as her bipartisan support and commitment

to consensus building.

"""

Woohoo!!! We have a more appropriate/contextual response to our query.

You can extend the above example to a more real-world scenario: use an enterprise-specific knowledge base, say company HR policies, company documentation, or ticketing system notes, to create more effective enterprise RAG pipelines, or explore image/audio search using vector embeddings.

You can find all the datasets and code here. I would encourage you to try it out with other LLMs and use cases, and share your feedback and learnings with me :)
