Statistics for Data Science

Last Updated on July 26, 2023 by Editorial Team

Author(s): sonia jessica

Originally published on Towards AI.

In this hyper-connected world, data is being consumed and generated at an unparalleled pace. While we benefit from this abundance of data, it also opens the door to misuse. Data professionals need to be trained in statistical methods not just to interpret numbers but to expose such misuse and keep us from being misled. Few data scientists have formal training in statistics, and only a handful of good books and courses present statistical methods from the perspective of data science. The British mathematician Karl Pearson once described statistics as the grammar of science, and this is especially true for Computer and Information Sciences, Biological Science, and Physical Science. When you are beginning your career in Data Science or Data Analytics, acquiring statistical knowledge will help you make better use of data insights.

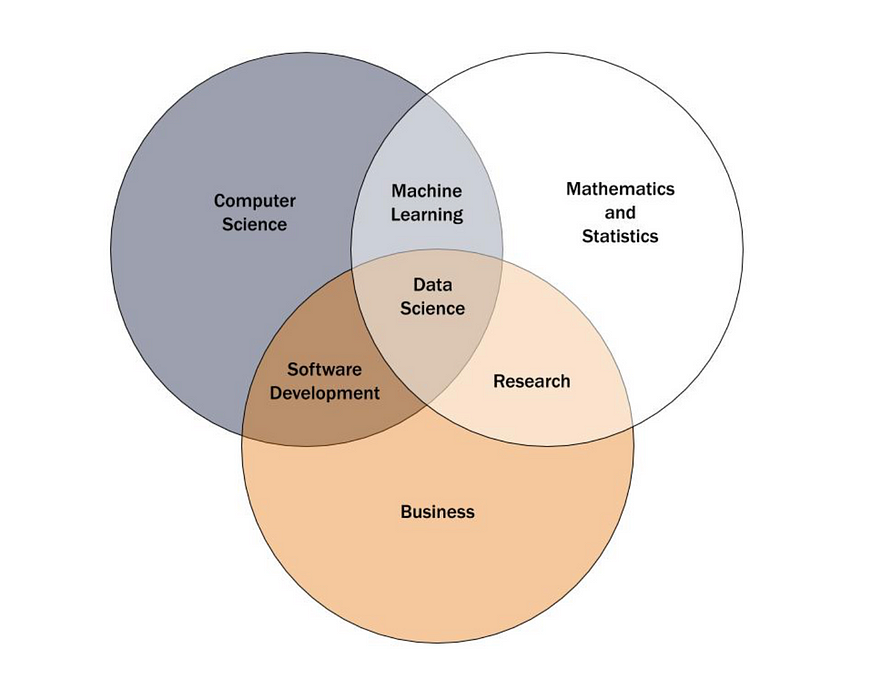

We should not underestimate the importance of statistics in data science and data analytics. Statistics offers tools and methods to discover structure in data and provide deeper insights. Both mathematics and statistics love facts and hate guesses. Learning the basics of these two vital subjects will let you think critically and be creative while using data to resolve business problems and make data-driven decisions.

In this article, we will learn about the importance and basics of Statistics in Data Science. Data science is an interdisciplinary, multifaceted field of study. It not only dominates the digital world but is integral to some of the most basic functions, such as social media feeds, internet searches, grocery store stocking, political campaigns, airline routes, hospital appointments, and more. It’s everywhere. Statistics is one of the most important disciplines for data scientists. You can also learn more about data science interview questions to prepare for interviews.

Statistics for Data Science

Statistics forms the foundation for handling and analyzing data in data science. There are core theories and basics that must be thoroughly understood before moving on to advanced algorithms. Visual representations of the data, and of an algorithm’s performance on the data, give a layperson a good way to understand both. Visual representation also helps in identifying patterns and outliers, alongside summary metrics such as the mean, median, and variance, which describe the central value, the spread, and how outliers influence the rest of the data. Statistical data comes in many types. We discuss some of them below.

- Categorical data describes attributes of people or things, such as marital status, gender, or food preference. It is also called ‘qualitative data’, or ‘yes/no data’ when there are only two categories. Categories may be coded with numerical values such as ‘1’ and ‘2’, where each number stands for a type of attribute. These numbers have no mathematical meaning, so they cannot be added, averaged, or otherwise combined arithmetically.

- Continuous data consists of values that are measured rather than counted and that can take any value within a range. Predictions from linear regression are continuous, and a continuous distribution is described by a probability density function.

With statistics, you can build a thorough understanding of data through summarization and inference. Along these lines, statistics is split into two branches:

- Descriptive Statistics

- Inferential Statistics

1. Descriptive Statistics

Descriptive statistics, or summary statistics, is used to describe the data. It deals with the quantitative summarization of data, carried out through numerical or graphical representations. To understand descriptive statistics fully, learn the following key concepts:

- Normal Distribution: In a normal distribution, the values of a variable cluster symmetrically around the mean, and plotting a large number of data samples produces a bell-shaped curve, called the Gaussian curve.

- Variability: Variability measures how far the data points lie from the center (mean) of the distribution. Measures of variability include the standard deviation, range, variance, and interquartile range.
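
The measures of variability listed above can be computed with Python's standard `statistics` module. This is a minimal sketch: the `data` list is a hypothetical sample chosen for illustration, and the population versions of variance and standard deviation are used.

```python
import statistics

# Hypothetical sample, chosen only for illustration.
data = [2, 4, 4, 4, 5, 5, 7, 9]

mean = statistics.mean(data)                    # center of the data
data_range = max(data) - min(data)              # largest value minus smallest value
variance = statistics.pvariance(data)           # population variance
std_dev = statistics.pstdev(data)               # population standard deviation
q1, _, q3 = statistics.quantiles(data, n=4)     # quartiles (default "exclusive" method)
iqr = q3 - q1                                   # interquartile range

print("mean:", mean)
print("range:", data_range)
print("variance:", variance)
print("standard deviation:", std_dev)
print("IQR:", iqr)
```

If the data is a sample rather than the whole population, `statistics.variance` and `statistics.stdev` (which divide by n - 1) are the usual choice.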

2. Inferential Statistics

Inferential statistics is the process of drawing conclusions from data. With inferential statistics, we reach a conclusion about a larger population by running tests on a smaller sample. For instance, in an election survey, if you want to know how many people support a political party, one option is to ask everyone about their views. This approach is impractical: India has a very large population, and questioning every single person is a tedious task. Instead, we pick a smaller sample, draw conclusions from that sample, and generalize our observations to the larger population. Several inferential techniques are useful in data science. Let us look at some of them:

- Central Limit Theorem: According to the central limit theorem, the means of sufficiently large random samples are approximately normally distributed around the population mean, whatever the shape of the population distribution. The standard deviation of these sample means, called the standard error of the mean, equals the population standard deviation divided by the square root of the sample size. A crucial application of the Central Limit Theorem is the estimation of the population mean. By multiplying the standard error of the mean by the z-score for the chosen confidence level, we obtain the margin of error.

- Hypothesis Testing: Hypothesis testing is a method for checking an assumption about a population using a sample. With hypothesis testing, we generalize the outcome from a smaller sample to a bigger group. Two hypotheses are tested against each other: the null hypothesis and the alternate hypothesis. The null hypothesis represents the default scenario (no effect or no difference), while the alternate hypothesis is usually its opposite; we look for enough evidence in the sample to reject the null hypothesis in favor of the alternate.

- Quantitative Data Analysis: Quantitative data analysis comprises two essential techniques, i.e., regression and correlation. In regression, we model the relationship between variables. There is simple regression (one independent variable) and multivariable regression (several independent variables). If the function is non-linear, we have non-linear regression. Correlation measures the strength and direction of the relationship between two random variables (bivariate data).
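
The central limit theorem can be checked with a small simulation. The sketch below assumes a hypothetical population drawn from a uniform distribution (deliberately not bell-shaped), draws many samples of size `n`, and compares the spread of the sample means with the standard error σ/√n. The seed, population size, and sample counts are arbitrary choices for illustration.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

# Hypothetical, deliberately non-normal population: uniform on [0, 1).
population = [random.random() for _ in range(20_000)]
pop_mean = statistics.fmean(population)
pop_sd = statistics.pstdev(population)

n = 50               # size of each sample
num_samples = 2_000  # how many samples to draw

# Draw many samples and record the mean of each one.
sample_means = [
    statistics.fmean(random.sample(population, n))
    for _ in range(num_samples)
]

# The sample means center on the population mean, and their spread
# is close to the standard error sigma / sqrt(n).
standard_error = pop_sd / n ** 0.5
observed_spread = statistics.stdev(sample_means)

# Margin of error at 95% confidence: z-score times the standard error.
z_95 = statistics.NormalDist().inv_cdf(0.975)
margin_of_error = z_95 * standard_error

print("population mean:", pop_mean)
print("mean of sample means:", statistics.fmean(sample_means))
print("standard error:", standard_error)
print("observed spread of sample means:", observed_spread)
print("95% margin of error:", margin_of_error)
```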

Statistical topics for Data Scientists

There are several statistical techniques that data scientists have to grasp. When beginning your career, it is crucial to have a thorough understanding of these principles, as gaps in knowledge can lead to compromised data or false conclusions.

Understand the Type of Analytics

- Descriptive Analytics tells us what took place in the past and helps a business learn how it is performing by offering context that helps stakeholders understand information.

- Diagnostic Analytics takes descriptive data a step further and helps you learn why something happened in the past.

- Predictive Analytics anticipates what is most likely to happen in the future and offers businesses actionable insights based on the data.

- Prescriptive Analytics offers recommendations on actions that take advantage of the predictions and guides the necessary steps toward a solution.

A data scientist makes a number of decisions every day. They range from small ones, such as how to tune a model, all the way up to big ones, such as the team’s R&D strategy. Many of these decisions need a firm foundation in statistics and probability theory.

Central Tendency

- Mean: The average of the dataset.

- Median: The middle value of an ordered dataset.

- Mode: The most frequent value in the dataset. If several values tie for the highest frequency, we have a multimodal distribution.

- Skewness: A measure of the asymmetry of a distribution.

- Kurtosis: A measure of whether the data are light-tailed or heavy-tailed relative to a normal distribution.
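
The measures above can be sketched in a few lines of Python. The `data` list is hypothetical, and skewness and kurtosis are computed here with the simple moment-based (population) formulas; statistical packages may apply slightly different bias corrections.

```python
import statistics

# Hypothetical sample with one large value on the right.
data = [1, 2, 2, 3, 3, 3, 4, 4, 10]

mean = statistics.mean(data)
median = statistics.median(data)
modes = statistics.multimode(data)  # list of all most frequent values

n = len(data)
sd = statistics.pstdev(data)

# Moment-based skewness: positive when the right tail is longer.
skewness = sum((x - mean) ** 3 for x in data) / (n * sd ** 3)

# Excess kurtosis: above 0 means heavier tails than a normal distribution.
excess_kurtosis = sum((x - mean) ** 4 for x in data) / (n * sd ** 4) - 3

print("mean:", mean, "median:", median, "mode(s):", modes)
print("skewness:", skewness, "excess kurtosis:", excess_kurtosis)
```

In this sample the single large value pulls the mean above the median, which is the typical signature of a right-skewed dataset.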

Probability distributions

We define probability as the chance that something will happen, often expressed as a percentage. For example, when a weather report shows a 30 percent chance of rain, it also implies a 70 percent chance that it will not rain. A probability distribution gives the probability of every possible value in the study. For instance, working out the probabilities of zero, one, or two rainy days over the coming two days, given a 30 percent chance of rain on each day, is an example of a probability distribution.

Dimension reduction

Data scientists reduce the number of random variables under consideration via feature extraction (developing new features from functions of the original features) and feature selection (selecting a subset of relevant features). This streamlines data models and simplifies feeding data into algorithms.
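
As one simple illustration of feature selection, the sketch below drops features whose variance does not exceed a threshold, since a constant column carries no information. This variance-threshold rule is just one possible selection criterion, and the `features` dictionary and the helper `select_by_variance` are hypothetical names used for illustration.

```python
import statistics

# Hypothetical dataset: each key is a feature, each list holds its values.
features = {
    "age": [23, 35, 47, 51, 62],
    "country_code": [1, 1, 1, 1, 1],  # constant column, no information
    "income": [30.0, 42.5, 58.0, 61.0, 75.5],
}

def select_by_variance(columns, threshold=0.0):
    """Keep only the features whose population variance exceeds the threshold."""
    return {
        name: values
        for name, values in columns.items()
        if statistics.pvariance(values) > threshold
    }

selected = select_by_variance(features)
print(sorted(selected))
```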

Over and undersampling

Sampling techniques are used when the classes in a classification dataset are imbalanced, so that one class has too many samples and another has too few. Depending on the balance between the two groups, data scientists either limit the selection from the majority class (undersampling) or create copies of minority-class samples (oversampling) to bring the class distribution closer to equal.
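
Both ideas can be sketched with the standard library. The example assumes a hypothetical dataset of (feature, label) pairs with 90 majority rows and 10 minority rows, and uses plain random resampling; more elaborate resampling schemes exist but are not shown here.

```python
import random
from collections import Counter

random.seed(42)  # fixed seed so the sketch is reproducible

# Hypothetical imbalanced dataset of (feature, label) pairs.
majority = [(i, "no") for i in range(90)]
minority = [(i, "yes") for i in range(10)]

# Random oversampling: draw minority rows with replacement
# until the minority class matches the majority class in size.
oversampled = majority + random.choices(minority, k=len(majority))

# Random undersampling: keep only a random subset of the majority class.
undersampled = random.sample(majority, k=len(minority)) + minority

over_counts = Counter(label for _, label in oversampled)
under_counts = Counter(label for _, label in undersampled)
print("oversampled:", over_counts)
print("undersampled:", under_counts)
```

Oversampling keeps every original row but repeats minority rows, while undersampling discards majority rows, so the choice depends on how much data can be spared.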

Bayesian statistics

Frequentist statistics uses observed data to estimate the probability of a future event. Bayesian statistics takes this concept a step further by also accounting for prior knowledge about what we expect to be true in the future. For instance, think about predicting whether at least 100 users will visit your coffee shop every Saturday over the next year. Frequentist statistics will estimate the probability from data on past Saturdays’ visits. But Bayesian statistics will estimate the probability by also taking into account a nearby art show that will begin in the summer and take place each Saturday afternoon. This can let the Bayesian statistical model offer a more accurate figure.

Regression

- Linear Regression

- Assumptions of Linear Regression

  - Linear Relationship

  - Multivariate Normality

  - No or Little Multicollinearity

  - No or Little Autocorrelation

  - Homoscedasticity

Linear Regression is a linear approach to modeling the relationship between one independent variable and a dependent variable. A dependent variable is the variable being measured in a scientific experiment. An independent variable is the one manipulated in a scientific experiment to assess its effect on the dependent variable.

Another linear approach, Multiple Linear Regression models the relationship between two or more independent variables and a dependent variable.
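
For the single-variable case, the line can be fitted by ordinary least squares in a few lines. This is a minimal sketch: the helper `fit_line` and the `x` and `y` values are hypothetical, and the slope is computed as the sum of cross-deviations divided by the sum of squared deviations of x.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of x.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical data: x is the independent variable, y the dependent one.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(x, y)
print("slope:", slope, "intercept:", intercept)
```

Multiple linear regression follows the same least-squares idea but solves for several slopes at once, which is usually done with a numerical library rather than by hand.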

Steps for Executing the Linear Regression

Step 1: Understand the model description, directionality, and causality.

Step 2: Check the data for missing values, categorical data, and outliers

- An outlier is a data point that differs considerably from other observations. We can detect outliers with the interquartile range (IQR) method or the standard deviation method.

- A dummy variable takes only the value 0 or 1 to indicate the absence or presence of a category of a categorical variable.

Step 3: Simple Analysis- Examine the relationship between the dependent variable and each independent variable, and between the independent variables themselves

- Make use of scatter plots to check the correlation.

- Multicollinearity arises when two or more independent variables are highly correlated. We can check it with the Variance Inflation Factor (VIF): as a rule of thumb, VIF > 5 indicates high correlation, and VIF > 10 indicates serious multicollinearity among the variables.

- An interaction term implies that the slope for one variable changes depending on the value of another variable.
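
The VIF check can be sketched for the simplest case of two predictors, where the VIF of each predictor reduces to 1 / (1 - r²), with r the Pearson correlation between them. The helper names and the data below are hypothetical; with more than two predictors, each VIF comes from regressing one predictor on all the others, which is not shown here.

```python
def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    a_bar = sum(a) / n
    b_bar = sum(b) / n
    s_ab = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    s_aa = sum((ai - a_bar) ** 2 for ai in a)
    s_bb = sum((bi - b_bar) ** 2 for bi in b)
    return s_ab / (s_aa * s_bb) ** 0.5

def vif_two_predictors(a, b):
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(a, b)
    return 1 / (1 - r ** 2)

# Hypothetical predictors: x2 is almost exactly 2 * x1, x3 is unrelated to x1.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2]
x3 = [5, 1, 4, 2, 6, 3]

vif_high = vif_two_predictors(x1, x2)
vif_low = vif_two_predictors(x1, x3)
print("VIF for x1 with x2:", vif_high)  # far above 10: multicollinearity
print("VIF for x1 with x3:", vif_low)   # close to 1: no concern
```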

Step 4: Multiple Linear Regression- Fit the model and select the appropriate variables

Step 5: Residual Analysis

- Check that the residuals are normally distributed.

- Homoscedasticity is the situation where the variance of the error term is constant across all values of the independent variables, so the residuals have the same spread all along the regression line.

Step 6: Interpretation of Regression Output

- R-Squared is a statistical measure of fit that shows how much of the variation of a dependent variable is explained by the independent variables.

- A higher R-Squared value means smaller differences between the fitted values and the observed data.

- P-value

- Regression Equation
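
R-Squared can be computed directly from its definition, 1 - SS_res / SS_tot, where SS_res is the sum of squared residuals and SS_tot is the total sum of squares around the mean. The sketch below fits a simple least-squares line first; the function name `r_squared` and the data are hypothetical.

```python
def r_squared(x, y):
    """R-squared of a simple least-squares line fitted to (x, y)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    slope = (
        sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
        / sum((xi - x_bar) ** 2 for xi in x)
    )
    intercept = y_bar - slope * x_bar
    fitted = [intercept + slope * xi for xi in x]
    # Residual sum of squares: variation the line does not explain.
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    # Total sum of squares: variation of y around its mean.
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

# Hypothetical data: hours studied (x) and exam score (y).
hours = [1, 2, 3, 4, 5, 6]
score = [52, 55, 61, 64, 70, 71]

r2 = r_squared(hours, score)
print("R-squared:", r2)
```

A value close to 1 means the fitted line accounts for most of the variation in the dependent variable.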

Terminologies in Statistics — Statistics for Data Science

You should know some statistical terminology before sitting for any Data Science interview. In most Data Scientist interview processes, the most commonly asked questions come from machine learning, probability and statistics, coding, and algorithms. We discuss these statistical terms below:

- Variable: A characteristic, number, or quantity that can be measured or counted. It is also known as a data item.

- Population: The complete collection of items or individuals from which data is collected.

- Sample: A subset of the population, used to collect data and to predict results about the population in inferential statistics.

- Statistical Parameter: A quantity that indexes a family of probability distributions, or that summarizes a population, such as its mean, median, or mode. It is also called a population parameter.

- Probability Distribution: A mathematical function that gives the probabilities of occurrence of the various possible outcomes of an experiment.

Data Science Interview Example

Data Science interview preparation is a big deal for everyone. Most candidates find it difficult to get through the recruitment process. Even if you’ve appeared in many interviews, each interview is a new learning experience. It can be a stressful situation, as you will have to answer tricky questions reasonably and satisfactorily.

In a data science interview, there are fundamental questions revolving around coding, behavioral questions, machine learning, modeling, statistics, and product sense, which the candidate must be prepared for. Let’s talk about statistics in general. Questions about statistical concepts in a data science interview can be tricky to handle because, unlike a product question, statistics questions have a definite right or wrong answer. This implies that your understanding of certain statistics and probability concepts will be fully tested during the interview. Thus, it is imperative to brush up on your statistics knowledge and be fully prepared before the data science interview. There are at least three big topics in statistics that are commonly asked about in data science interviews, which are:

- The measure of center and spreads (mean, variance, standard deviation)

- Inferential statistics (p-Value, Confidence Interval, Sample Size, and Margin of Error, Hypothesis Testing)

- Bayes’ theorem

The questions that the interviewer asks generally fall into one of two buckets: the theory part and the implementation part. So, how can you improve your theory and implementation knowledge? What we can recommend from experience is that you have a few personal project stories. You should have two to three stories where you can talk in depth about a data science project you’ve done in the past. You should be able to answer questions like:

- Why did you choose this model?

- What assumptions do you need to validate in order to use this model correctly?

- What are the trade-offs with that model?

If you can answer these questions, you are basically convincing the interviewer that you know the theory and have also implemented a model in a project. Some of the modeling techniques that you may need to know are:

- Regressions

- Random Forest

- K-Nearest Neighbour

- Gradient Boosting and more

In the next section, we will learn about the commonly asked statistical questions in Data Science interviews.

10 Frequently asked Statistics interview questions

Q.1: What is the relationship between the median and the mean in a normal distribution?

Ans: In the normal distribution, the mean is equal to the median.

Q.2: What is the proportion of confidence intervals that will not contain the population parameter?

Ans: Alpha (α) is the proportion of confidence intervals that will not contain the population parameter.

α = 1 - CL

We usually express alpha as a proportion. For example, if the confidence level (CL) is 95%, then alpha is equal to 1 - 0.95, or 0.05.

Q.3: How do you calculate the range and the interquartile range?

Ans: Range = maximum value - minimum value

IQR = Q3 - Q1

Here, Q3 is the third quartile (75th percentile)

Here, Q1 is the first quartile (25th percentile)

Q.4: What are the consequences of the width of the confidence interval?

Ans: Confidence intervals are used for decision making. As the confidence level rises, the width of the confidence interval also rises. A very wide interval carries little useful information, while a narrow interval obtained by lowering the confidence level carries a higher risk of missing the true value. In short: wide CI, less useful information; narrow CI, higher risk.

Q.5: What is the advantage of using box plots?

Ans: A box plot is a visually effective summary of two or more data sets, and it allows a quicker comparison between groups than a set of histograms.

Q.6: What is one method to find outliers?

Ans: A common way to find outliers is to use the interquartile range (IQR). The IQR contains the middle bulk of your data, so we can easily find the outliers once we know the IQR.

Widely used rule: any data point that lies more than 1.5 * IQR below Q1 or above Q3 is an outlier.

Lower bound = Q1 - (1.5 * IQR)

Upper bound = Q3 + (1.5 * IQR)
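
The lower and upper bounds above translate directly into code. This is a minimal sketch using `statistics.quantiles` from the standard library; the helper name `iqr_outliers` and the sample data are hypothetical, and other quartile conventions can give slightly different bounds.

```python
import statistics

def iqr_outliers(values):
    """Return the outliers and the (lower, upper) bounds of the 1.5 * IQR rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower = q1 - 1.5 * iqr
    upper = q3 + 1.5 * iqr
    outliers = [v for v in values if v < lower or v > upper]
    return outliers, (lower, upper)

# Hypothetical measurements with one suspicious value.
data = [10, 12, 12, 13, 12, 11, 14, 13, 15, 102]

outliers, (lower, upper) = iqr_outliers(data)
print("bounds:", lower, upper)
print("outliers:", outliers)
```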

Q.7: What do you understand by the degree of freedom?

Ans: Degrees of freedom (DF) is the number of values in a calculation that are free to vary. We use DF with the t-distribution and not with the Z-distribution.

For a series, DF = n-1 (where n is the number of observations in the series)

Q.8: When should you use the t-distribution and when the Z-distribution?

Ans: We must satisfy the following conditions to use the Z-distribution: Do we know the population standard deviation (σ)? Is the sample size > 30? If so:

CI = x̄ - Z*σ/√n to x̄ + Z*σ/√n

Otherwise, we should use the t-distribution, with the sample standard deviation s in place of σ:

CI = x̄ - t*s/√n to x̄ + t*s/√n
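
The Z-based interval can be sketched with the standard library's `NormalDist`. The function name `z_confidence_interval`, the sample, and the assumed known population standard deviation `sigma = 2` are all hypothetical values chosen for illustration.

```python
from statistics import NormalDist, fmean

def z_confidence_interval(sample, sigma, confidence=0.95):
    """Confidence interval for the mean when the population sigma is known."""
    n = len(sample)
    x_bar = fmean(sample)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / n ** 0.5
    return x_bar - margin, x_bar + margin

# Hypothetical sample of 35 measurements (n > 30) centered on 50.
sample = [50 + (i % 7) - 3 for i in range(35)]

low, high = z_confidence_interval(sample, sigma=2)
print("95% CI:", low, "to", high)
```

For the t-based interval, the critical value t depends on the degrees of freedom (n - 1) and is usually taken from a t-table or from a statistics library such as SciPy (`scipy.stats.t.ppf`).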

Q.9: You have one fair coin and one unfair coin that always lands on tails. You pick one at random, flip it 5 times, and see 5 tails in a row. What is the probability that you picked the unfair coin?

Ans: We can apply Bayes’ Theorem here. Let U stand for the case where we are flipping the unfair coin and F stand for the case where we are flipping the fair coin. As the coin is chosen randomly, we know that P(U) = P(F) = 0.5. Let 5T denote the event where we flip 5 tails in a row. Then we are interested in solving for P(U|5T), i.e., the probability that we are flipping the unfair coin, given that we saw 5 tails in a row.

We know P(5T|U) = 1, since by definition the unfair coin will always result in tails. We know that P(5T|F) = 1/2⁵ = 1/32 by definition of a fair coin. As per Bayes’ Theorem we have:

P(U|5T) = P(5T|U) * P(U) / (P(5T|U) * P(U) + P(5T|F) * P(F)) = 0.5 / (0.5 + 0.5/32) = 32/33 ≈ 0.97

Therefore, the probability we picked the unfair coin is about 97%.
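
The Bayes' theorem calculation for the two-coin problem can be verified with exact fractions. The variable names below are illustrative; the probabilities are the ones stated in the answer.

```python
from fractions import Fraction

# Prior: each coin is equally likely to be picked.
p_unfair = Fraction(1, 2)
p_fair = Fraction(1, 2)

# Likelihood of seeing 5 tails in a row with each coin.
p_5t_given_unfair = Fraction(1)         # the unfair coin always lands on tails
p_5t_given_fair = Fraction(1, 2) ** 5   # 1/32 for a fair coin

# Bayes' theorem: posterior = likelihood * prior / total probability of the evidence.
evidence = p_5t_given_unfair * p_unfair + p_5t_given_fair * p_fair
posterior = p_5t_given_unfair * p_unfair / evidence

print(posterior, float(posterior))
```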

Q.10: What are H0 and H1 for the two-tail test?

Ans: H0 is regarded as the null hypothesis. It is the normal case or default case. For a one-tail test: x <= µ. For a two-tail test: x = µ.

H1 is regarded as the alternate hypothesis. It is the other case. For a one-tail test: x > µ. For a two-tail test: x ≠ µ.
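
A two-tail test of H0: x = µ against H1: x ≠ µ can be sketched as a z-test when the population standard deviation is known. The function name `two_tailed_z_test` and the numbers in the usage example are hypothetical.

```python
from statistics import NormalDist

def two_tailed_z_test(sample_mean, mu0, sigma, n):
    """Return the z statistic and two-tailed p-value for H0: mean == mu0."""
    z = (sample_mean - mu0) / (sigma / n ** 0.5)
    # Probability of a result at least this extreme in either tail.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical example: 36 observations with mean 52, testing mu0 = 50, sigma = 5.
z, p_value = two_tailed_z_test(sample_mean=52, mu0=50, sigma=5, n=36)

alpha = 0.05
print("z:", z, "p-value:", p_value)
print("reject H0" if p_value < alpha else "fail to reject H0")
```

With these numbers the p-value falls below α = 0.05, so H0 would be rejected in favor of H1 at the 95% confidence level.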

How is Statistics Important for Data Science?

Below are a few points that show the importance of statistics for data science.

Enables classification and organization

Classification is a statistical approach that goes by the same name in the data science and data mining fields. It is used to sort data into well-defined, observable categories. Such organization is key for companies that use these insights to make predictions and create business plans. It is also the first step toward making a large volume of data usable.

Helps to measure probability distribution and estimation

These statistical approaches are key to understanding the fundamentals of machine learning and algorithms such as logistic regression. Cross-validation techniques, including leave-one-out cross-validation (LOOCV), are also intrinsically statistical tools that have been brought into the world of Data Analytics and Machine Learning for A/B testing, inference-based research, and hypothesis testing.

Finds structure in data

Businesses often have to handle tons of data from a panoply of sources, each more complex than the last. Statistics can come to your aid in spotting anomalies and trends in this data, which lets the researchers dispose of irrelevant data at a very early stage rather than sifting through data and wasting effort, resources and time.

Promotes statistical modeling

Data comprises a series of complicated interactions between variables and factors. To frame these or display them coherently, statistical modeling using networks and graphs is the key. This also helps to determine and account for the effect of hierarchies on global structures and escalate local models to a global scene.

Aids data visualization

Visualization in data is the portrayal and analysis of found structures, insights, and models in interactive, effective, and logical formats. It’s also essential that these formats be simple to update. In this way, nothing has to go through a massive overhaul every time there’s a fluctuation in data. Apart from this, data analytics representations also utilize the same display formats as statistics — histograms, graphs, and pie charts. Not only does this make data more readable, but it also makes it much simpler to identify trends or errors and offset or enhance them as needed.

Promotes awareness of distributions in model-based data analytics

Statistics can help to find clusters in data or other structures that depend on time, space, and other variable factors. Reporting on networks and values without statistical distribution methods can produce measures that ignore variability, which can make or break your results.

Conclusion

Every company is aiming to become data-driven. Thus, we are seeing a rising demand for data scientists and analysts. To deal with issues, answer questions, and chalk out a strategy, we need to make sense of the data. Fortunately, statistics provides a set of tools for generating those insights. We learned about various statistical requirements for Data Science. We studied how data science depends on statistics and how descriptive and inferential statistics form its core. In a nutshell, statistics is a mandatory requirement for mastering data science. We hope you are now clear on the theory related to statistics for data science. It’s time to see how these concepts will help you ace your first job in data science.

