Should One Skip Linear Algebra to Become a Data Scientist?

Last Updated on July 17, 2023 by Editorial Team

Author(s): Ivan Reznikov

Originally published on Towards AI.

This article is not about why linear algebra is essential in machine learning. This is an article on why you will need linear algebra.

As a data science university lecturer, I recently had a student ask me how they would use their matrix multiplication knowledge in an actual data science project. I understand their skepticism — why would you need to perform matrix operations manually when you have so many powerful libraries and tools available in Python and other programming languages?

I was about to explain how the principles behind matrix multiplication are valuable in data science, especially in deep learning, how it all works, and so on. But I caught myself wondering: most of the data scientists I know haven’t performed math operations by hand since graduation. Coincidentally, the same day another student mentioned how he’d seen data science done with several clicks in an AutoML interface: load-select-click-click-click-done.

So let’s look at how a deep understanding of linear algebra principles can help you build more effective algorithms and models.

ML Domains

Image processing and convolution

A key component of image processing is image convolution, the process of extracting features from images and enhancing them. Image convolution multiplies the pixel values under a small matrix called a kernel by the kernel’s weights and sums the results to produce a new pixel value. For efficiency, it is usually implemented through matrix multiplication and other linear algebra operations. Yes, you most likely won’t have to do the math yourself, but knowing how the computational cost grows with kernel and image size makes it easier to choose those sizes sensibly. Other image processing techniques, such as edge detection and image segmentation, also rely heavily on linear algebra concepts such as eigenvalues and eigenvectors, matrix factorization, and singular value decomposition.
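To make the link between convolution and matrix multiplication concrete, here is a minimal NumPy sketch. It assumes a single-channel image, no padding, stride 1, and no kernel flip (the cross-correlation convention used in most deep learning libraries). The function names and the toy image are illustrative, not taken from any particular library.

```python
import numpy as np

def conv2d_naive(image, kernel):
    """Slide the kernel over the image and sum the elementwise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def conv2d_matmul(image, kernel):
    """Same result, written as a single matrix-vector product."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    # Each row of `patches` is one flattened kh x kw window of the image.
    patches = np.array([
        image[i:i + kh, j:j + kw].ravel()
        for i in range(out_h)
        for j in range(out_w)
    ])
    return (patches @ kernel.ravel()).reshape(out_h, out_w)

# Toy 5x5 image whose values increase by 1 per column and by 5 per row.
image = np.arange(25, dtype=float).reshape(5, 5)
# Sobel kernel that responds to horizontal intensity changes.
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)

result_naive = conv2d_naive(image, sobel_x)
result_matmul = conv2d_matmul(image, sobel_x)
```

Both versions perform roughly `out_h * out_w * kh * kw` multiplications, which is why doubling the kernel side length roughly quadruples the work.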

NLP and Word Embeddings

Word embeddings, one of the most widely used NLP methods besides transformers, represent words as vectors in a high-dimensional space, and they rely heavily on linear algebra. Embeddings capture the meaning of words and the relationships between them, which improves accuracy on NLP tasks such as sentiment analysis, language translation, and text classification. They are produced and manipulated using linear algebra ideas, including vector operations, matrix factorization, eigenvalues, and eigenvectors, which lets data scientists capture intricate semantic links between words. Moreover, methods like singular value decomposition (SVD) are used to reduce the dimensionality of these vectors, making them cheaper to compute with and easier to use.
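As a rough illustration of the SVD step, the sketch below builds two-dimensional word vectors from a tiny co-occurrence matrix and compares them with cosine similarity. The words, context words, and counts are all made up for the example. Real embeddings are trained on large corpora and usually with different methods.

```python
import numpy as np

words = ["king", "queen", "apple", "orange"]
# Hypothetical co-occurrence counts with the context words
# [royal, crown, fruit, juice]; the numbers are invented for illustration.
cooccurrence = np.array([
    [8.0, 6.0, 0.0, 0.0],  # king
    [7.0, 7.0, 0.0, 0.0],  # queen
    [0.0, 0.0, 5.0, 3.0],  # apple
    [0.0, 0.0, 4.0, 4.0],  # orange
])

# Truncated SVD: keep only the k strongest directions.
U, S, Vt = np.linalg.svd(cooccurrence, full_matrices=False)
k = 2
embeddings = U[:, :k] * S[:k]  # one k-dimensional vector per word

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

sim_king_queen = cosine_similarity(embeddings[0], embeddings[1])
sim_king_apple = cosine_similarity(embeddings[0], embeddings[2])
```

In this toy setup "king" and "queen" end up pointing in the same direction, while "king" and "apple" are orthogonal, because the two groups of words share no context words.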

Recommendation systems

Recommendation systems are used to predict user preferences and provide personalized recommendations for products, movies, music, and more. These systems often work with large matrices that represent user-item interactions or ratings. Linear algebra techniques such as matrix factorization can be used to reduce the dimensionality of these matrices, allowing data scientists to extract meaningful information about user preferences and item characteristics. Additionally, eigenvectors and eigenvalues are used to capture the latent features of the data, such as user interests or item attributes, which can then be used to make more accurate recommendations. With a strong understanding of linear algebra, data scientists can develop advanced recommendation systems that provide concrete and personalized recommendations to users, improving user engagement and satisfaction.
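A minimal sketch of the matrix factorization idea, assuming a small, fully observed ratings matrix with invented values. Production recommenders have to cope with missing ratings and typically use methods such as alternating least squares, so treat this only as an illustration of the low-rank structure.

```python
import numpy as np

# Hypothetical ratings: rows are users, columns are items.
ratings = np.array([
    [5.0, 4.0, 1.0, 1.0],
    [4.0, 5.0, 1.0, 2.0],
    [1.0, 1.0, 5.0, 4.0],
    [2.0, 1.0, 4.0, 5.0],
])

# Factorize into k latent features per user and per item.
k = 2
U, S, Vt = np.linalg.svd(ratings, full_matrices=False)
user_factors = U[:, :k] * np.sqrt(S[:k])     # shape (n_users, k)
item_factors = Vt[:k, :].T * np.sqrt(S[:k])  # shape (n_items, k)

# A predicted rating is the dot product of a user vector and an item vector.
approx = user_factors @ item_factors.T
predicted_user0_item2 = float(user_factors[0] @ item_factors[2])

# Relative error of the rank-k approximation (Frobenius norm).
rel_error = np.linalg.norm(ratings - approx) / np.linalg.norm(ratings)
```

Here 16 ratings are summarized by two numbers per user and two per item, and the discarded singular values determine exactly how much information is lost.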

ML Algorithms

Matrix operations such as matrix multiplication and eigendecomposition are crucial elements of many machine learning models, including logistic regression, support vector machines, and linear regression. These models require the manipulation of data matrices, and linear algebra lets data scientists carry out these operations efficiently.
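Linear regression is probably the simplest case where the model is nothing more than a matrix equation. The sketch below uses synthetic, noise-free data (an assumption made so the result is easy to check) and fits it in two ways: by solving the normal equations and with NumPy's least-squares solver.

```python
import numpy as np

# Synthetic data generated from y = 2x + 1 (noise-free for clarity).
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

# Design matrix: a column of ones for the intercept, then the feature.
X = np.column_stack([np.ones_like(x), x])

# Normal equations: solve (X^T X) w = X^T y for the weights w.
w_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Least-squares solver; generally the more numerically stable choice.
w_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
```

Both weight vectors hold the intercept first and the slope second. Libraries hide this step, but knowing it exists explains why nearly collinear features (an almost singular `X.T @ X`) make the fit unstable.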

Moreover, more advanced operations, including gradient (derivative) calculations on matrices, convolutions, matrix factorization, and singular value decomposition, are frequently used in more sophisticated machine learning models, such as neural networks and other deep learning models.

Principal Component Analysis (PCA) and other dimensionality reduction techniques involve reducing the number of features in a dataset while retaining as much information as possible.

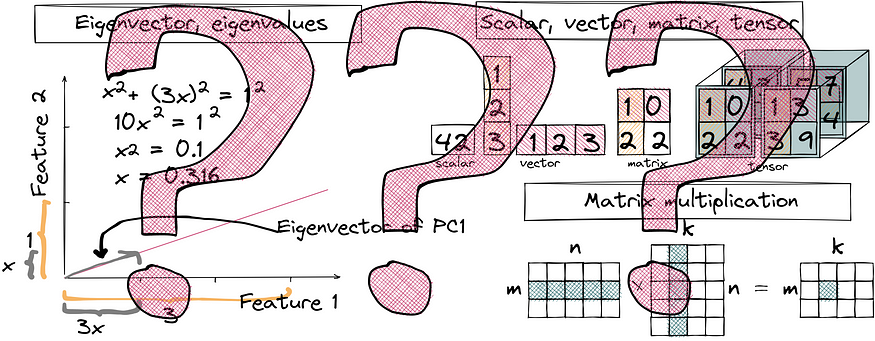

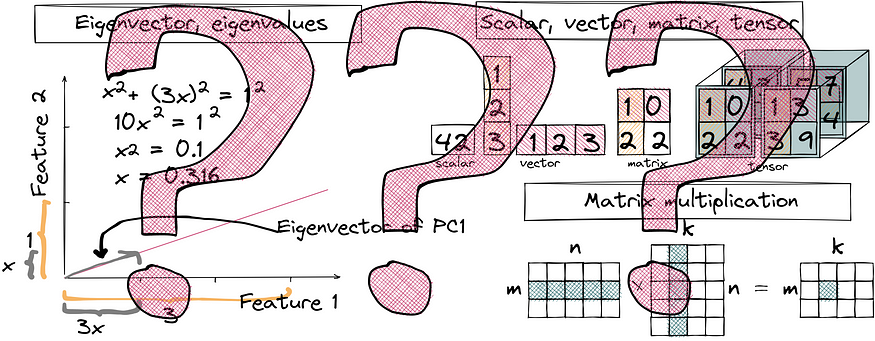

In PCA, the covariance matrix of the data is decomposed into its eigenvectors and eigenvalues. The eigenvectors are the principal components (the directions of greatest variance), and each eigenvalue is the amount of variance along its component. These principal components can then be used to reduce the dimensionality of the data while preserving as much variance as possible. Knowing how they are calculated will give you a better understanding when analyzing the importance of PCs and the way they represent features. Singular value decomposition (SVD) is a common way to compute PCA in practice, and related matrix factorizations underpin other dimensionality reduction techniques such as Non-negative Matrix Factorization (NMF).
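The PCA recipe described above fits in a few lines of NumPy. This is a sketch on synthetic data (three features, two of them strongly correlated by construction), not a replacement for a library implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: feature 1 is roughly twice feature 0, feature 2 is independent.
base = rng.normal(size=(200, 1))
data = np.hstack([
    base,
    2.0 * base + 0.1 * rng.normal(size=(200, 1)),
    rng.normal(size=(200, 1)),
])

# 1. Center the data and compute the covariance matrix.
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)

# 2. Eigendecomposition; eigh is meant for symmetric matrices
#    and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]  # sort by variance, largest first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# 3. Share of the total variance carried by each principal component.
explained_ratio = eigvals / eigvals.sum()

# 4. Project onto the top two components.
projected = centered @ eigvecs[:, :2]
```

Because two of the three features are almost redundant, the first two components keep nearly all of the variance, which is exactly what `explained_ratio` lets you check before dropping dimensions.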

ML Utilities

Vectorized Code (array programming)

Vectorized code is a programming technique that uses linear algebra concepts to perform operations on whole arrays and matrices rather than iterating through each element individually. The resulting code is shorter and typically much faster, so it can process larger amounts of data. Replacing row-by-row loops in pandas or Spark with column-wise operations is an excellent example of vectorized coding.
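A small sketch of the difference, using made-up price and quantity arrays. Both functions compute the same total; the vectorized one expresses it as a single dot product that NumPy evaluates in compiled code.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical order data: unit prices and quantities for 10,000 order lines.
prices = rng.uniform(1.0, 100.0, size=10_000)
quantities = rng.integers(1, 10, size=10_000)

def total_loop(prices, quantities):
    """Element-by-element Python loop."""
    total = 0.0
    for price, quantity in zip(prices, quantities):
        total += price * quantity
    return total

def total_vectorized(prices, quantities):
    """The same sum of products, written as a dot product."""
    return float(prices @ quantities)

loop_total = total_loop(prices, quantities)
vec_total = total_vectorized(prices, quantities)
```

Recognizing that "multiply pairwise and add up" is a dot product is the linear algebra part; the speedup follows from letting the library do the iteration.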

Loss functions and metrics

Loss functions quantify the difference between predicted and actual values during model training, while metrics evaluate the performance of a model afterward. Both boil down to math operations on vectors and matrices, such as calculating the mean squared error. Linear algebra concepts such as matrix multiplication, matrix inversion, and eigenvalues are used to compute and optimize them. For example, gradient descent, a common method for minimizing loss functions, calculates the gradient of the loss with respect to the model parameters, which is a vector (or a matrix, when the parameters themselves form a matrix).
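For mean squared error with a linear model, the loss is $\frac{1}{n}\lVert Xw - y\rVert^2$ and its gradient is $\frac{2}{n}X^\top(Xw - y)$. The sketch below runs plain gradient descent on synthetic, noise-free data; the learning rate and step count are values chosen for this toy problem, not general recommendations.

```python
import numpy as np

# Synthetic data generated from y = 3x - 2 (noise-free for clarity).
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 3.0 * x - 2.0
X = np.column_stack([np.ones_like(x), x])  # intercept column + feature

def mse(w):
    """Mean squared error of the linear model X @ w."""
    residual = X @ w - y
    return float(residual @ residual / len(y))

def mse_gradient(w):
    """Gradient of the MSE with respect to w: (2/n) X^T (Xw - y)."""
    return 2.0 / len(y) * X.T @ (X @ w - y)

w = np.zeros(2)       # start from all-zero weights
learning_rate = 0.05  # small enough for this problem to converge
for _ in range(2000):
    w = w - learning_rate * mse_gradient(w)
```

After the loop, `w` holds the intercept and slope. The largest eigenvalue of `X.T @ X` bounds how large the learning rate can be before the updates diverge, which is one place eigenvalues show up in practice.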

Regularization

Regularization is a technique used to prevent overfitting in machine learning models by adding a penalty term to the loss function. This penalty term is usually a norm of the model parameters, and analyzing its effect involves linear algebra concepts such as matrix multiplication and eigenvalues. Ridge regression, a common regularization technique, adds the squared L2 norm of the model parameters to the loss function. Lasso regression, another popular regularization technique, adds the L1 norm of the model parameters to the loss function. Selecting and calculating these norms relies on linear algebra.
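The two norms, and the shrinking effect of a ridge penalty, can be sketched as follows. The closed-form ridge solution used here assumes no intercept term and a penalty strength `alpha` picked arbitrarily for the demonstration; the data is synthetic.

```python
import numpy as np

# The two norms used as penalties, on an example weight vector.
weights = np.array([3.0, -4.0, 0.0])
l1_norm = np.linalg.norm(weights, ord=1)  # |3| + |-4| + |0| = 7
l2_norm = np.linalg.norm(weights, ord=2)  # sqrt(9 + 16 + 0) = 5

def ridge_fit(X, y, alpha):
    """Closed-form ridge weights: solve (X^T X + alpha * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

# Synthetic regression problem with known weights plus a little noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + 0.1 * rng.normal(size=50)

w_unregularized = ridge_fit(X, y, alpha=0.0)  # ordinary least squares
w_ridge = ridge_fit(X, y, alpha=10.0)         # penalized fit
```

The ridge weights always have a smaller L2 norm than the unregularized ones, since the penalty shrinks the solution along every eigen-direction of `X.T @ X`.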

Conclusions

If you’ve just scrolled the article to the end — that’s all right.

To sum up, you need to know a couple of techniques — matrix operations (multiplication, factorization, inversion, etc.), eigenvectors, and eigenvalues.

Chances are you won’t need to perform the math yourself in most cases, though. But knowing linear algebra isn’t only about the formulas and the knowledge!

Understanding linear algebra can help data scientists to think more critically and creatively about solving complex problems and approach new challenges with a more mathematical and analytical perspective.

What are your thoughts? Should data scientists continue to study linear algebra? When was the last time you used it in a data science project?

Share your experience in the comments below!

Published via Towards AI