Over The Rainbow

Last Updated on July 24, 2023 by Editorial Team

Author(s): Abby Seneor

Originally published on Towards AI.

Artificial Intelligence, Fairness

People expected AI to be unbiased; that’s just wrong. If the underlying data reflects stereotypes, you will find these things.

This is a story about Equality, Equity, Justice and the dark side of Artificial Intelligence.

“Somewhere over the rainbow,

skies are blue,

and the dreams that you dare to dream

really do come true.” (Harold Arlen & Yip Harburg for The Wizard of Oz)

Part 1: The Truth Of Justice

“The opposite of poverty is justice” — (Bryan Stevenson)

It is no secret that I am an avid fan of children’s books. Their simplicity and naivety border on genius, and as we all know, simplicity is the trademark of genius. ‘A Fence, Some Sheep, and a Little Guy with a Big Problem’ by Yael Biran definitely ranks high among the best children’s stories about problem-solving.

For those of you not familiar with this story, it is about a little guy who has trouble sleeping, and, as the old trick suggests, he starts to count sheep. But instead of boring him to sleep, the sheep find interesting and unique ways to cross the fence, each in its own way.

Solving a Fence:

Originally, the book was meant to teach children about the different ways we can look at the world, but its simple and straightforward illustrations became very popular in management workshops for visualising creative problem-solving and encouraging out-of-the-box thinking.

The sheep found various ways to get over the fence: jumping over it, trying to break through it, ignoring it, overthinking the optimal way, or climbing over a pile of dead sheep who had failed the task. The story ends with one sheep who took a step back and realised there is a very simple way past the fence: she found an open path beside it (you can see the illustration in the recorded reading of the book, at minute 2:23).

For me, as you have probably guessed, this is not a story about creative problem-solving for some C-suite class, but an essential lesson about how we deal with Equality and Equity, and about what real justice is. And, of course, there will be a little bit of Artificial Intelligence.

Equality or Equity:

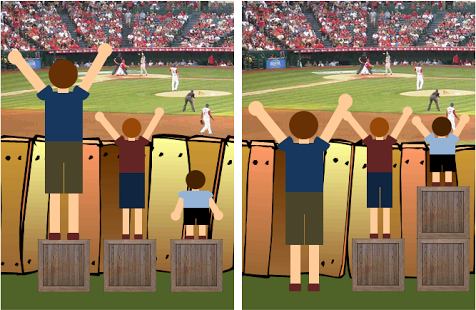

A while ago, I came across an image by Craig Froehle, showing the difference between Equality and Equity.

There are many versions of this graphic, so you have probably come across one of them. Basically, it shows three people watching a baseball game over the top of a fence. The people are different heights, so the shorter ones have a harder time seeing.

In the first image, all three people have one crate to stand on. In other words, there is ‘Equality’, because everyone has the same number of crates. Whilst this helps the middle-height person, it is not enough for the shortest person and superfluous for the tallest. In contrast, in the second image, there is ‘Equity’: each person has exactly the number of crates they need to enjoy the game to the fullest.

Deficit Thinking:

My biggest problem with this graphic has to do with where it locates the initial inequity. It suggests that some people need more support to see over the fence because they are not as tall, an issue inherent to the people themselves. In reality, the inequity is rooted in a history of oppression, from colonisation and slavery to “Separate but Equal” and redlining, and it is sustained by systemic racism and the country’s ever-growing economic inequality.

‘Deficit Thinking’ refers to the notion that students, particularly those from low-income or ethnic minority backgrounds, fail in school because of their own internal defects. This endogenous theory blames the victim rather than examining how schools are structured in ways that prevent certain students from learning.

This image and some others, like the one with animals having to climb a tree or the one with people picking fruit, suffer from the same problem. Deficit Thinking makes systemic forms of racism and oppression invisible.

Making Justice:

This is not about Equity vs Equality. It is about making justice.

First of all, it is crucial to acknowledge that height is not the reason some people have more difficulty seeing than others; the reason is the context around them, with the fence as a metaphor. Real justice, in this particular case, would be removing the fence.

There is a famous saying by Robert Frost: “Don’t ever take a fence down until you know the reason why it was put up”. Sorry, Mr Frost, but IF fences have been put up without reason, THEN surely this is the perfect time to take them down.

If we want to address the real problem, we need to look at why the fence was put there in the first place and at what alternatives could make the situation more harmonious. This is justice.

Equality is treating everyone the same, whereas Equity is giving everyone what they need to be successful or achieve something. Although Equality aims to promote fairness, it only works if everyone starts from the same place and needs the same help, and, as we know, not everyone starts at the same place or has the same needs. Equity may appear unfair, but it actively moves everyone closer to success by levelling the playing field.

Children Aren’t Born Racists:

“Children are innocent and love justice, while most of us are wicked and naturally prefer mercy”. (G.K. Chesterton)

A new study offers some hope for humanity. It reports that American children aged five and six largely reject the belief that skin colour determines a person’s personality and abilities. Marjorie Rhodes, a New York University psychologist, said that “beliefs about race that contribute to prejudice take a long time to develop, when they do, and that their development depends to some extent on the neighbourhoods in which the children grow up”.

Our Differences are not Obstacles:

“That’s not fair” is something we hear from children more often than we think. The playground mentality of fairness is “I get two cookies, and you get two cookies”; in their pure sense, children understand fairness as Equality, not Equity. But here is the thing: treating everyone exactly the same is not fair. What equal treatment does is erase our differences and promote privilege.

Since everyone is different, and we embrace those differences as unique, we must also base our expectations of fairness on them, rather than reaching for a single definition and equal treatment. When we lift the equality blanket, we can see that not everyone’s needs are being met.

We actively erase our differences or try to ‘fix’ them by bringing everyone to where we think they should be. But in a fair society, everyone matters and everyone contributes to its growth, and this is how society as a whole wins.

Privilege is Invisible to Those Who Have It:

Usually, we are unaware of our own privileges because the system generally works in our favour. But what does the other side of privilege feel like? I once heard a good explanation: ‘it is like riding a bike on roads built for cars’.

Cars and bikes are different, but the whole transportation infrastructure privileges the automobile. For a cyclist, the roads are dangerous (take it from a road cyclist who has been hit by cars a few times). Not intentionally, of course. Most drivers aren’t trying to be jerks (or at least, I want to believe so). It is just that the traffic rules and road system simply weren’t designed for cars and bikes to coexist peacefully.

Everyone is privileged in some respects: a man will never fully understand a woman’s struggle to climb a hierarchy, just as a healthy person with functioning legs will never fully understand disability. But that doesn’t mean the difference makes one superior or better, or that our differences are obstacles. We are all unique and different, and a one-size-fits-all solution is wrong and damaging.

But sadly, we can rarely change the whole flawed system. What we can do is promote fairness and put extra work into seeking justice, by helping to remove the antiquated boundaries.

Part 2: The Dark Side Of Artificial Intelligence

“People expected AI to be unbiased; that’s just wrong. If the underlying data reflects stereotypes, or if you train AI from human culture, you will find these things.” (Joanna Bryson, AI Researcher)

So maybe a world powered by Artificial Intelligence (AI) isn’t such a bad solution. Machines are not biased, let alone racist, right? We tend to think their appeal is immense: machines can use cold, dry data to make decisions that are sometimes more accurate than a human’s. Well, this is not necessarily true.

I recently wrote that AI is only as intelligent as the person who created it, and that explains the danger of AI if it is not used properly. It can make decisions that perpetuate the racial biases that exist in society. This is not because computers are racist; it is because human beings create them, and humans are biased. AI systems are trained on data from the world as it is, not as the world ought to be.

“If you’re not careful, you risk automating the exact same biases these programs are supposed to eliminate,” says Kristian Lum, Lead Statistician at the Human Rights Data Analysis Group.

Garbage In — Garbage Out:

In 2016, a ProPublica investigation into criminal risk assessment software, which courts use to predict the likelihood that someone will commit a crime after being booked, found the program to be biased against black people.

The irony sets off alarm bells: we use these systems because we think they are free of human biases and can therefore make impartial decisions. As ProPublica wrote: “If computers could accurately predict which defendants were likely to commit new crimes, the criminal justice system could be fairer and more selective about who is incarcerated and for how long”.

And this is not limited to criminal risk assessment. The majority of commercial facial-recognition systems exhibit bias, as a recent US study found: the systems falsely identified African-American and Asian faces about ten times more often than Caucasian faces.

The same goes for identifying women and older adults. Research at the MIT Media Lab found that some facial recognition software could identify a white man with near-perfect precision but failed spectacularly at identifying a darker-skinned woman. And the problem extends to well-established companies. In 2015, Google Photos labelled black people as ‘gorillas’. The formal apology was: “We’re appalled and genuinely sorry that this happened”.

The debate was renewed recently when a US university claimed to have software that could predict criminality from a face “with no racial bias”. Krittika D’Silva, a computer science researcher at Cambridge University, warned of the serious harm caused by using such software. She said it is irresponsible to think a machine can predict criminality based on a person’s face.

“Numerous studies have shown that machine-learning algorithms, in particular face-recognition software, have racial, gendered, and age biases”. Although the technology is still widely used in law enforcement, awareness of its dangers is rising, and San Francisco became the first major American city to ban police and other law enforcement agencies from using facial recognition.

And San Francisco is not alone in this fight. A few months ago, Microsoft and IBM joined forces with the Vatican to create a doctrine for facial recognition and Ethical AI. In a joint document, the “Rome Call for AI Ethics”, released on February 28, 2020, they stated their mission: “New forms of regulation must be encouraged to promote transparency and compliance with ethical principles, especially for advanced technologies that have a higher risk of impacting human rights, such as facial recognition”. Furthermore, “AI systems must be conceived, designed and implemented to serve and protect human beings and the environment in which they live”.

Various companies claim to have AI with sophisticated facial recognition algorithms that can detect terrorists or predict who is a criminal. This is terrifying, because however robust an algorithm is, what it learns depends on the data you feed it. If you feed it crap, you will get crap: “Garbage In, Garbage Out”, as we say.

Pattern Behaviour:

The challenge, of course, is to make sure the computer gets it right. If it goes wrong in one direction, a dangerous criminal could go free (a false negative). If it goes wrong in the other direction, someone could unfairly receive a harsher sentence or wait longer for parole than is appropriate (a false positive).
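To make the two kinds of error concrete, here is a minimal Python sketch that counts false positives and false negatives separately for each group of people, in the spirit of comparing a risk tool’s mistakes across groups. Everything in it is hypothetical: the function name error_rates_by_group, the group labels ‘A’ and ‘B’, and the toy records are made up for illustration and are not ProPublica’s data or methodology.

```python
from collections import Counter


def error_rates_by_group(records):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Each record is a hypothetical (group, predicted_high_risk, reoffended) tuple.
    """
    counts = {}
    for group, predicted, actual in records:
        c = counts.setdefault(group, Counter())  # missing keys count as 0
        if predicted and not actual:
            c["fp"] += 1  # flagged high risk, but did not reoffend
        elif not predicted and actual:
            c["fn"] += 1  # rated low risk, but did reoffend
        elif predicted and actual:
            c["tp"] += 1  # correctly flagged
        else:
            c["tn"] += 1  # correctly rated low risk
    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # people who did not reoffend
        positives = c["fn"] + c["tp"]  # people who did reoffend
        fpr = c["fp"] / negatives if negatives else 0.0
        fnr = c["fn"] / positives if positives else 0.0
        rates[group] = (fpr, fnr)
    return rates


# Made-up toy data: (group, predicted_high_risk, reoffended)
toy_records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True), ("B", False, True),
]

print(error_rates_by_group(toy_records))  # {'A': (0.5, 0.0), 'B': (0.25, 0.5)}
```

In this toy data, both groups get four out of six predictions right, yet group A bears the false positives (harsher treatment for people who would not reoffend) while group B bears the false negatives. A single overall accuracy figure would hide that difference, which is why the errors are counted per group.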

There is also ‘Sexist AI’, and a good example is Amazon’s machine-learning programs for screening the resumes of potential candidates, which turned out to be gender-biased. But the real danger seems to lie in the rise of AI applications in healthcare. Health data is filled with historical bias, and the price could be paid in human lives.

The solution might be to teach machines about human behaviour, including prejudices and how to spot them, just as we humans do when fighting racism. “If you change the definition of racism to a pattern of behaviour — like an algorithm itself — that’s a whole different story. You can see what is recurring, the patterns then pop up. Suddenly, it’s not just me that’s racist; it’s everything. And that’s the way it needs to be addressed — on a wider scale”, AI specialist Mike Bugembe told Metro.

Double Challenge:

The challenge we are facing goes both ways:

The people who use these programs are listening to and accepting the machines’ decisions. They are listening to something that perpetuates, or even accentuates, the biases that already exist in society. They should be aware of the problem and take the machine’s decision with a grain of salt.

Meanwhile, data scientists and AI researchers are facing a new, unfamiliar challenge that goes beyond statistics and complex maths: shaping the future while carrying the age-old baggage of our past.

Part 3: The Underdog

“Peace will not come out of a clash of arms but out of justice lived and done by unarmed nations in the face of odds” — (Mahatma Gandhi)

Now that we have established that even AI will not save us, we have a duty to keep the future of humanity as free from prejudice as possible and to ensure our biases are not embedded in machines. We think that what we are doing is pursuing justice, but it is not. All we are doing is seeking fairness, or Equity, by giving the shorter people extra crates.

It is easy, almost effortless, to do something from the comfort of our own living room, or to donate to a charity from our savings account. These gestures are self-gratifying and make us feel saintly; they don’t cost us much, and they let us carry on with our own lives. But do we honestly care? And if we really do, where were we until now?

Special days, movements, protests and hashtags have their place, but at best they amount to Equity. It’s like giving extra crates to the shorter person, which emphasises their supposed flaw. This is anything but justice. It is easy to post a picture, wave a colourful flag or wear a shirt of a specific colour and feel like we are changing something, when what we are really doing is going through the motions.

It is like hanging a sign on the door stating that the house is vegan, while behind that closed door there is a meat festival. Or like taking a magic diet pill, hoping to lose some excess fat with no more effort than swallowing it, without changing the bad eating habits or adding the exercise the pill needs to be effective. Guess what? I have some breaking news for you: it does not and will not work!

Please do not take this the wrong way; I am sure there are people who genuinely care, and some very honest houses out there where the words on the door go hand in hand with the actions behind it. I am only referring to the growing number of hypocrites and sanctimonious people who ride the wave to gain fame or popularity, but then reveal their true colours (how ironic is this phrase in this context) when reality hits them in the face.

The Underdog:

Joker, the psychological thriller released last year, was surrounded by huge hype. Google rated it as one of the “best films of all time”, and you will find Joker alongside other all-time classics like The Godfather, Pulp Fiction, The Lord of the Rings and a few other exceptional movies.

So, feeling a little peer pressure, I went to watch it. I hated it for several reasons, but I will mention only one here, the one relevant to what I am trying to say.

In my opinion, this film perfectly reflects the world we are living in. We all feel compassion towards the underdog, despite their actions (in the Joker’s case, murder). Violence does not solve problems; it creates them. If killing were the only viable solution, that would be a great failure. Non-violence requires not only physical courage but great intelligence. Anything else is a failure in both moral and practical terms.

It is very easy to empathise and sympathise with the underdog; people do not hesitate to align themselves with underdogs, a phenomenon called “The Underdog Effect”. But sympathy is easily mistaken for an imperative of justice. The Underdog Effect also has an element of fairness: if we perceive that one side has been given an unfair advantage, we tend to root for the other side, possibly to offset the unfairness.

Social Shaming:

I am not here to talk about Joker, but to use it as another example of how we naturally respond to unfairness. We root for the underdog to seek fairness, but this is the wrong approach. The real question we should be asking ourselves is: “Why do we call them underdogs in the first place?” In my opinion, this is ‘Social Shaming’: it embeds the differences and puts the blame on the victim rather than on society itself. We give them a nickname because we think they don’t align with our view of normality, so we try to bring them up to our level, but this is Equity, not justice.

There is another aspect to it, one we could face in the future. As Fred Durst put it: “Everybody loves the underdog, then they take the underdog and make him into a hero but then hate him”. Once society achieves ‘fairness’ by rooting for the underdog, we tend to turn them into the victor. The Joker then ends up with a more important role than the one he came to play. But it is human nature to hate and criticise success and heroism (an element of nouveau riche, perhaps?).

I have a dream:

I wish I had answers on how we can achieve justice, but sadly I don’t. I am only here to raise some awareness and hold a mirror up to our faces; hopefully, this is the first step towards changing things.

The Colour Theory:

The question “Are black and white colours?” is one of the most debated issues about colour. Ask a scientist, and you’ll get a lecture about light frequencies and Additive vs Subtractive colour theory, with the conclusive reply: “black is not a colour, white is a colour.” However, if you ask an artist or a child with crayons, the tables turn (subtractive colour theory): “black is a colour, white is not a colour.”

As a scientist, when I hear the term “black lives matter”, I wonder.

Because in an ideal world of justice, where everybody is colourblind, it wouldn’t be about ‘black lives matter’, but about ‘lives matter’.

Black people are asking for justice, not charity. They are not asking companies to hire black people just because they are black. They are asking companies to stop not hiring people just because they are black. Even with exactly the same qualifications and resume, what blocks people is a black-sounding name. The way we treat others defines us, and the way others treat us defines them. Let us treat every human on this planet (well, until we colonise Mars) with common sense, human values, respect, dignity and compassion.

“Someday I’ll wish upon a star

And wake up where the clouds are far behind me

Where troubles melt like lemon drops

Away above the chimney tops, that’s where you’ll find me.” (Harold Arlen & Yip Harburg for The Wizard of Oz)




Note: Content contains the views of the contributing authors and not Towards AI.