Keras for Multi-label Text Classification

Last Updated on July 24, 2023 by Editorial Team

Author(s): Aman Sawarn

Originally published on Towards AI.

Machine Learning

CNNs and LSTMs architectures for Multi-Label Text Classification using Keras

Multi-label classification can become tricky, and to make it work using pre-built libraries in Keras becomes even more tricky. This blog contributes to working architectures for multi-label classification using CNNs and LSTMs.

Multi-label classification has been conventionally used to predict tags from movies synopsis, predict tags on YouTube videos, etc.

Let’s define what a Multi-Label classification is?

Multi-label classification is a generalization of multi-class classification which is the single-label problem of categorizing instances into precisely one of more than two classes, in the multi-label problem there is no constraint on how many of the classes the instance can be assigned to i.e there could be one, two or many labels in the output data used for training.

Metric Used:

F1 Score: F1 score is calculated using the harmonic mean of precision and recall.

F1 Score = 2 * (precision * recall) / (precision + recall)

This F1 score is micro averaged to use it as a metric for multi-class classification. It is calculated by counting the value of true positives, false positives, true negatives, and false negatives. All the predicted outputs, in this case, are column indices and are used in sorted order by default.

def f1micro(y_true, y_pred):

return tf.py_func(f1_score(y_true, y_pred,average='micro'),tf.double)

Data and its understanding:

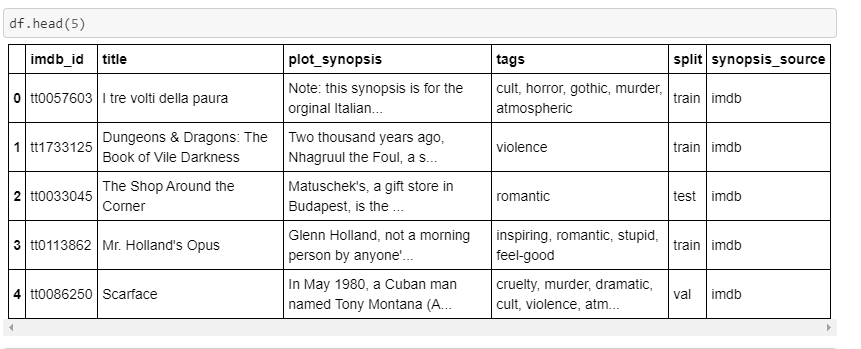

The data used for this illustration has been taken from Kaggle MPST- Movie Plot Synopsis Data.

df = pd.read_csv(r'F:\mpst_full_data.csv', delimiter=',')

nRow, nCol = df.shape

df.head(5)

Data Cleaning

import re

def decontracted(phrase):

# specific

phrase = re.sub(r"won't", "will not", phrase)

phrase = re.sub(r"can\'t", "can not", phrase)

# general

phrase = re.sub(r"n\'t", " not", phrase)

phrase = re.sub(r"\'re", " are", phrase)

phrase = re.sub(r"\'s", " is", phrase)

phrase = re.sub(r"\'d", " would", phrase)

phrase = re.sub(r"\'ll", " will", phrase)

phrase = re.sub(r"\'t", " not", phrase)

phrase = re.sub(r"\'ve", " have", phrase)

phrase = re.sub(r"\'m", " am", phrase)

return phrasestopwords= set(['br', 'the', 'i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', "you're", "you've",\

"you'll", "you'd", 'your', 'yours', 'yourself', 'yourselves', 'he', 'him', 'his', 'himself', \

'she', "she's", 'her', 'hers', 'herself', 'it', "it's", 'its', 'itself', 'they', 'them', 'their',\

'theirs', 'themselves', 'what', 'which', 'who', 'whom', 'this', 'that', "that'll", 'these', 'those', \

'am', 'is', 'are', 'was', 'were', 'be', 'been', 'being', 'have', 'has', 'had', 'having', 'do', 'does', \

'did', 'doing', 'a', 'an', 'the', 'and', 'but', 'if', 'or', 'because', 'as', 'until', 'while', 'of', \

'at', 'by', 'for', 'with', 'about', 'against', 'between', 'into', 'through', 'during', 'before', 'after',\

'above', 'below', 'to', 'from', 'up', 'down', 'in', 'out', 'on', 'off', 'over', 'under', 'again', 'further',\

'then', 'once', 'here', 'there', 'when', 'where', 'why', 'how', 'all', 'any', 'both', 'each', 'few', 'more',\

'most', 'other', 'some', 'such', 'only', 'own', 'same', 'so', 'than', 'too', 'very', \

's', 't', 'can', 'will', 'just', 'don', "don't", 'should', "should've", 'now', 'd', 'll', 'm', 'o', 're', \

've', 'y', 'ain', 'aren', "aren't", 'couldn', "couldn't", 'didn', "didn't", 'doesn', "doesn't", 'hadn',\

"hadn't", 'hasn', "hasn't", 'haven', "haven't", 'isn', "isn't", 'ma', 'mightn', "mightn't", 'mustn',\

"mustn't", 'needn', "needn't", 'shan', "shan't", 'shouldn', "shouldn't", 'wasn', "wasn't", 'weren', "weren't", \

'won', "won't", 'wouldn', "wouldn't"])

This function “decontracted” defined above takes a text column from a data frame and removes all HTML tags and special characters.

In the snippet given below, the plot synopsis provided in the dataset has been cleaned.

from tqdm import tqdm

preprocessed_synopsis = []

# tqdm is for printing the status bar

for sentance in df['plot_synopsis'].values:

sentance = re.sub(r"http\S+", "", sentance)

sentance = BeautifulSoup(sentance, 'lxml').get_text()

sentance = decontracted(sentance)

sentance = re.sub("\S*\d\S*", "", sentance).strip()

sentance = re.sub('[^A-Za-z]+', ' ', sentance)

# https://gist.github.com/sebleier/554280

sentance = ' '.join(e.lower() for e in sentance.split() if e.lower() not in stopwords)

preprocessed_synopsis.append(sentance.strip())df['preprocessed_plots']=preprocessed_synopsis

Training and Test Split

In the output labels for the dataset- movie genres have been separated using “,” , it has been cleaned before One-hot encoding. So, after removing spaces from output tags, the data has been split into train and test datasets.

def remove_spaces(x):

x=x.split(",")

nospace=[]

for item in x:

item=item.lstrip()

nospace.append(item)

return (",").join(nospace)df['tags']=df['tags'].apply(remove_spacetrain=df.loc[df.split=='train']

# cv=df.loc[df.split=="val"]

# cv=cv.reset_index()

train=train.reset_index()

test=df.loc[df.split=='test']

test=test.reset_index()

Preparing labels for training and testing

Since it is a multi-label classification, so the output labels need to be one-hot encoded. We have used Bag of words technique using the sci-kit learn method for this.

vectorizer = CountVectorizer(tokenizer = lambda x: x.split(","), binary='true')

y_train = vectorizer.fit_transform(train['tags']).toarray()

y_test=vectorizer.transform(test['tags']).toarray()

Maximum Length of Input Sequence

def max_len(x):

a=x.split()

return len(a)

In [23]:max(df['plot_synopsis'].apply(max_len))

Size of Vocabulary

vect=Tokenizer()

vect.fit_on_texts(train['plot_synopsis'])

vocab_size = len(vect.word_index) + 1

print(vocab_size)

Modeling Using LSTMs

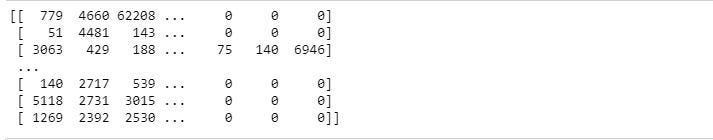

- Padding and making all input sequences of the same length and preparing input sequences

encoded_docs_train = vect.texts_to_sequences(train['preprocessed_plots'])

max_length = vocab_size

padded_docs_train = pad_sequences(encoded_docs_train, maxlen=1200, padding='post')

print(padded_docs_train)

encoded_docs_test = vect.texts_to_sequences(test['preprocessed_plots'])

padded_docs_test = pad_sequences(encoded_docs_test, maxlen=1200, padding='post')

encoded_docs_cv = vect.texts_to_sequences(cv['preprocessed_plots'])

padded_docs_cv = pad_sequences(encoded_docs_cv, maxlen=1200, padding='post')

2. Defining Model: For this problem, we are using the embedding layer as the first layer and a 71(Total number of unique tags) dimension dense layer as the output layer.

model = Sequential()

# Configuring the parameters

model.add(Embedding(vocab_size, output_dim=50, input_length=1200))

model.add(LSTM(128, return_sequences=True))

# Adding a dropout layer

model.add(Dropout(0.5))

model.add(LSTM(64))

model.add(Dropout(0.5))

# Adding a dense output layer with sigmoid activation

model.add(Dense(n_classes, activation='sigmoid'))

model.summary()out[]:_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_6 (Embedding) (None, 1200, 50) 4939100

_________________________________________________________________

lstm_4 (LSTM) (None, 1200, 128) 91648

_________________________________________________________________

dropout_6 (Dropout) (None, 1200, 128) 0

_________________________________________________________________

lstm_5 (LSTM) (None, 64) 49408

_________________________________________________________________

dropout_7 (Dropout) (None, 64) 0

_________________________________________________________________

dense_5 (Dense) (None, 71) 4615

=================================================================

Total params: 5,084,771

Trainable params: 5,084,771

Non-trainable params: 0

Why Sigmoid and not Softmax in the final dense layer?

In the final layer of the above architecture, sigmoid function as been used instead of softmax. The advantage of using sigmoid over Softmax lies in the fact that one synopsis may have many possible genres. Using the Softmax function would imply that the probability of occurrence of one genre depends on the occurrence of other genres. But for this application, we need a function that would give scores for the occurrence of genres, which would be independent of occurrences of any other movie genre.

Guide to multi-class multi-label classification with neural networks in python

Often in machine learning tasks, you have multiple possible labels for one sample that are not mutually exclusive. This…

www.depends-on-the-definition.com

3. Training using ‘adam’ as an optimizer and binary cross-entropy as the loss function.

model.compile(optimizer='adam', loss='binary_crossentropy')

history = model.fit(padded_docs_train, y_train,

class_weight='balanced',

epochs=5,

batch_size=32,

validation_split=0.1,

callbacks=[])

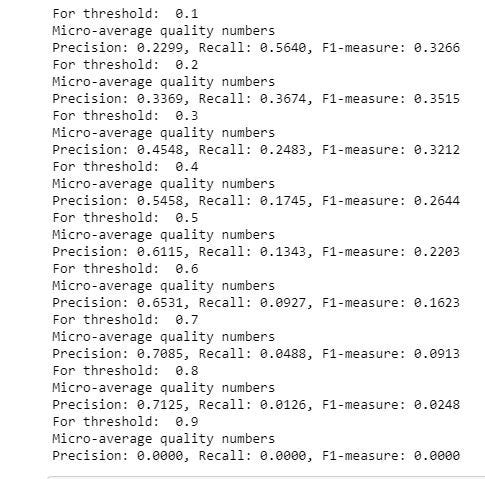

4. Analysis of Model and calculating the f1 micro score: The final dense layer in the model has 71(Total number of unique movie genres) dimensions. Each dimension in the output has a score between 0 and 1, 0 being the least probable score for any genre and 1 being the best score.

A threshold matrix has been defined, with values in range 0.1 to 0.9. then, we run a loop over the predicted output and compare it with the threshold value and choose tags only if the corresponding value of a tag is more than the threshold value.

This helps in two ways:

- Choosing the best threshold value, and use it to predict tags.

- Calculating the micro averaged F1 score, by comparing the tags predicted in each iteration and the original tags in the test dataset.

predictions=model.predict([padded_docs_test])

thresholds=[0.1,0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9]for val in thresholds:

pred=predictions.copy()

pred[pred>=val]=1

pred[pred<val]=0

precision = precision_score(y_test, pred, average='micro')

recall = recall_score(y_test, pred, average='micro')

f1 = f1_score(y_test, pred, average='micro')

print("Micro-average quality numbers")

print("Precision: {:.4f}, Recall: {:.4f}, F1-measure: {:.4f}".format(precision, recall, f1))

The F1 score for different threshold signifies how the F1 metric score changes with different threshold values. It goes as per what could have been expected out of it- A very large or a very small value of threshold gives a lower value of F1 metric score because when tags are chosen based on a lower threshold value, too many tags get chosen which reduce the F1 metric score, while when the threshold value gets very large, almost no tags get chosen and thus reducing the performance metric.

Modeling using CNNs:

- The first step remains the same as that of what we did in the above model for LSTMs. The first layer is also the same here and we have used an embedding layer followed by fully connected layers. One can use other variations and depth of layers and also try out different values of Dropouts.

model = Sequential()

model.add(Embedding(vocab_size, 71, input_length=1200))

model.add(Conv1D(64, 3, activation='sigmoid'))

model.add(Conv1D(100, 3, activation='sigmoid'))

model.add(Conv1D(100, 3, activation='sigmoid'))

# model.add(Dropout(0.70))

model.add(Conv1D(48, 3, activation='sigmoid'))

model.add(Flatten())

model.add(Dense(71))

model.summary()out[]:_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding_5 (Embedding) (None, 1200, 71) 8675845

_________________________________________________________________

conv1d_5 (Conv1D) (None, 1198, 64) 13696

_________________________________________________________________

conv1d_6 (Conv1D) (None, 1196, 100) 19300

_________________________________________________________________

conv1d_7 (Conv1D) (None, 1194, 100) 30100

_________________________________________________________________

conv1d_8 (Conv1D) (None, 1192, 48) 14448

_________________________________________________________________

flatten_2 (Flatten) (None, 57216) 0

_________________________________________________________________

dense_9 (Dense) (None, 71) 4062407

=================================================================

Total params: 12,815,796

Trainable params: 12,815,796

Non-trainable params: 0

_________________________________________________________________

Training using adam optimizer and binary cross-entropy.

model.compile(optimizer='adam', loss='binary_crossentropy')

model.fit(padded_docs_train, y_train,

epochs=10,

verbose=False,

validation_data=(padded_docs_test, y_test),

batch_size=16)predictions=model.predict([padded_docs_test])

for val in thresholds:

print("For threshold: ", val)

pred=predictions.copy()

pred[pred>=val]=1

pred[pred<val]=0

precision = precision_score(y_test, pred, average='micro')

recall = recall_score(y_test, pred, average='micro')

f1 = f1_score(y_test, pred, average='micro')

print("Micro-average quality numbers")

print("Precision: {:.4f}, Recall: {:.4f}, F1-measure: {:.4f}".format(precision, recall, f1))

The same trend of a lower metric score for the very higher or very low value of a threshold.

Conclusion:

In this blog, we have tried out two architectures namely LSTMs and CNNs respectively, and then make it work for multi-label classification problems. We started with data exploration, followed by defining the models using the size of the vocabulary. Once the model has been trained, we used different thresholds and then choose tags based on the threshold score which gave the best F1 micro score on the test dataset.

Connect to Aman: https://www.linkedin.com/in/aman-s-32494b80

References:

Refer the full notebook here: https://github.com/sawarn69/MPST-Movie-Plot-Synopsis/blob/master/LSTMs%20Tag%20from%20Synopsis.ipynb

www.appliedaicourse.com

https://towardsdatascience.com/multi-class-text-classification-with-lstm-1590bee1bd17

Performing Multi-label Text Classification with Keras

Text classification is a common task where machine learning is applied. Be it questions on a Q&A platform, a support…

blog.mimacom.com

https://stackabuse.com/python-for-nlp-multi-label-text-classification-with-keras

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.