Keras EarlyStopping Callback to train the Neural Networks Perfectly

Last Updated on July 25, 2023 by Editorial Team

Author(s): Muttineni Sai Rohith

Originally published on Towards AI.

In the Arrowverse series, Arrow tells Flash, “Take your own advice, wear a mask” and “You can be better.” That made me think: what if neural networks had a similar feature, where the model could take its own advice during training and adjust the number of epochs accordingly? It would make choosing the number of epochs much easier. Keras already provides exactly that, and it is what this article is all about: EarlyStopping.

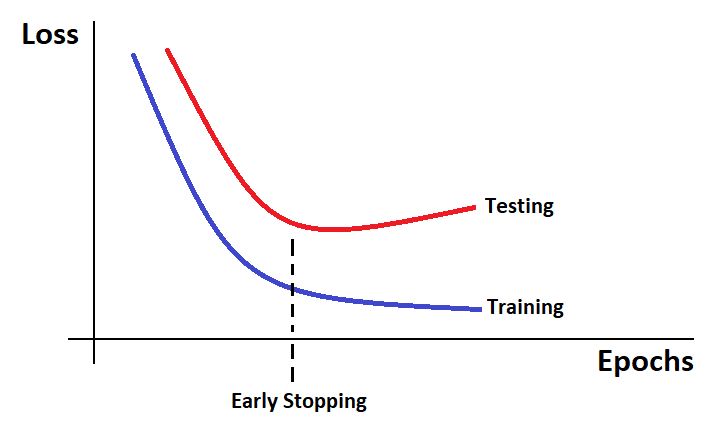

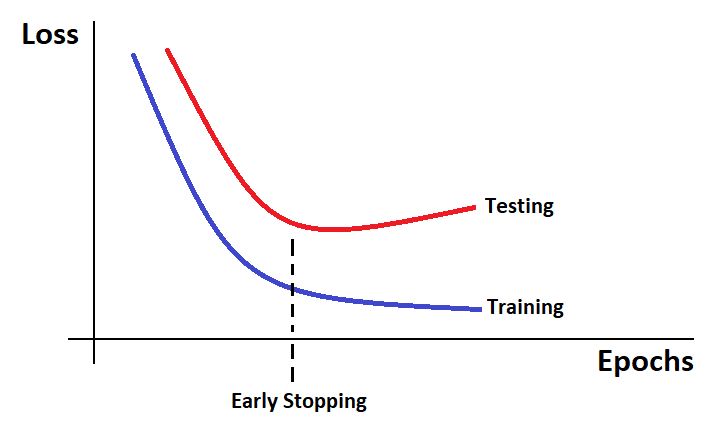

A common problem when training neural networks is choosing the number of epochs: too many epochs can overfit the model, while too few may cause underfitting.

EarlyStopping is a callback used while training neural networks. It lets us set a large number of training epochs and stops the training once the model’s performance stops improving on the validation dataset.

How is EarlyStopping useful?

To understand this, let’s start with an example without EarlyStopping:

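import numpy as np
import tensorflow as tf

# A tiny model trained on arbitrary data, without EarlyStopping
model = tf.keras.models.Sequential([tf.keras.layers.Dense(10)])
model.compile(tf.keras.optimizers.SGD(), loss='mse')
history = model.fit(np.arange(100).reshape(5, 20), np.zeros(5), epochs=10, batch_size=1, verbose=1)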

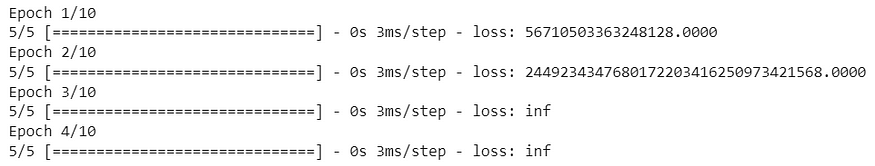

In the above example, we use a small neural network with arbitrary data. The data makes no sense, so the model’s performance will most likely not improve, no matter how many epochs we run.

We can see that in the output.

Using the EarlyStopping callback, we can set up training so that it stops when the model’s performance is no longer improving:

# Stop when the training loss has not improved for 3 consecutive epochs
callback = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=3)
model = tf.keras.models.Sequential([tf.keras.layers.Dense(10)])
model.compile(tf.keras.optimizers.SGD(), loss='mse')
history = model.fit(np.arange(100).reshape(5, 20), np.zeros(5), epochs=10, batch_size=1, callbacks=[callback], verbose=1)

We can see that training stopped after 4 epochs:

len(history.history['loss']) #Number of Epochs
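One way to see where the 4 comes from is to replay the patience rule by hand. The sketch below is a simplified, pure-Python stand-in for the callback's bookkeeping, not Keras's actual implementation. The function name `epochs_run` and the loss values are made up for illustration, and it assumes the loss never drops below its first-epoch value, which is presumably what happens when the model fails to learn.

```python
def epochs_run(losses, patience):
    """Return how many epochs run before a patience-based stop.

    Simplified rule: stop once `patience` epochs in a row have
    failed to beat the best loss seen so far.
    """
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch  # training stops after this epoch
    return len(losses)  # patience never ran out


# Hypothetical losses: epoch 1 sets the best value, then nothing improves.
stuck_losses = [2.0, 2.5, 3.1, 3.0, 2.9, 3.3, 3.2, 3.4, 3.6, 3.5]
print(epochs_run(stuck_losses, patience=3))  # 4
```

The first epoch always counts as the best so far, and the three non-improving epochs that follow use up the patience, giving 1 + 3 = 4.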

So we have seen how EarlyStopping stops training by monitoring the model’s performance. Now imagine a case where we don’t know how many epochs are needed to reach good accuracy on a particular dataset. In that case, EarlyStopping lets us set the number of epochs to a large value and set patience to, say, 5 or 10, so that training stops on its own once performance stops improving.

Important Note:

Even though we can monitor the training loss and accuracy, EarlyStopping makes the most sense when we have validation data that can be evaluated during training. We then stop the training based on the performance on this validation data.
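To illustrate why the validation metric is the more useful signal, here is a small pure-Python sketch. The loss curves are hypothetical numbers chosen to look like an overfitting model, and `stop_epoch` is a made-up helper that applies a simplified patience rule, not the Keras callback itself.

```python
def stop_epoch(values, patience):
    """Epoch (1-based) at which a simple patience rule stops, or None."""
    best = float("inf")
    wait = 0
    for epoch, value in enumerate(values, start=1):
        if value < best:
            best, wait = value, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Hypothetical curves for an overfitting model: training loss keeps
# falling, validation loss bottoms out at epoch 4 and then rises.
train_loss = [0.90, 0.70, 0.55, 0.45, 0.38, 0.32, 0.27, 0.23, 0.20, 0.18]
val_loss = [0.95, 0.80, 0.70, 0.66, 0.68, 0.71, 0.75, 0.80, 0.86, 0.93]

print(stop_epoch(train_loss, patience=3))  # None: training loss never stalls
print(stop_epoch(val_loss, patience=3))    # 7: three epochs after the best one
```

With these numbers, a rule that watches the training loss would let the model keep overfitting for all ten epochs, while the same rule on the validation loss stops shortly after generalization starts to degrade.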

Syntax:

model.fit(train_X, train_y, validation_split=0.3, callbacks=[EarlyStopping(monitor='val_loss', patience=3)])

In the above example, if the validation loss does not improve for 3 consecutive epochs, the training will be stopped.

Parameters for EarlyStopping:

tf.keras.callbacks.EarlyStopping(
    monitor="val_loss",
    min_delta=0,
    patience=0,
    verbose=0,
    mode="auto",
    baseline=None,
    restore_best_weights=False,
)

Above is the signature of EarlyStopping, with its parameters and their default values. Let’s go through them one by one:

monitor: The metric to be monitored, for example “val_loss” or “val_accuracy”.

min_delta: The minimum change in the monitored metric that counts as an improvement.

patience: The number of epochs with no improvement after which training will be stopped.

verbose: (0 or 1) Verbosity mode. With 1, messages are displayed when the callback takes action; with 0, it is silent.

mode: (“auto”, “min”, “max”) In “min” mode, training will stop when the monitored metric has stopped decreasing. In “max” mode, training will stop when the monitored metric has stopped increasing. In “auto” mode, the direction is automatically inferred from the name of the metric.

baseline: The baseline value for the monitored metric. Training will stop if the monitored metric doesn’t show improvement over the baseline value. For example, if the monitored metric is val_accuracy, patience=10, and baseline=0.5 (Keras reports accuracy as a fraction between 0 and 1), then training will stop if val_accuracy does not exceed 0.5 within the first 10 epochs.

restore_best_weights: Whether to restore model weights from the epoch with the best value of the monitored metric. If False, the model weights obtained at the last step of training are used.

So by setting restore_best_weights=True, we can restore the model weights from the epoch with the best performance and use them from then on.
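To make the interplay of these parameters more concrete, here is a framework-free sketch of such a stopping rule. It is a simplified model, not the Keras source: the class `EarlyStopper`, the helper `run`, and the accuracy values are all hypothetical. It assumes that "auto" mode treats metric names ending in "acc", "accuracy", or "auc" as ones that should increase, and that a baseline simply acts as the starting "best" value. The best epoch it records stands in for the epoch whose weights restore_best_weights would bring back.

```python
class EarlyStopper:
    """Simplified, framework-free model of an early-stopping rule."""

    def __init__(self, monitor="val_loss", min_delta=0.0, patience=0,
                 mode="auto", baseline=None):
        if mode == "auto":
            # Assumption: these name suffixes mean "higher is better".
            is_max = monitor.endswith(("acc", "accuracy", "auc"))
            mode = "max" if is_max else "min"
        self.sign = 1.0 if mode == "max" else -1.0
        self.min_delta = abs(min_delta)
        self.patience = patience
        # Assumption: a baseline is used as the initial value to beat.
        self.best = baseline
        self.best_epoch = None
        self.wait = 0

    def update(self, epoch, value):
        """Record one epoch's metric; return True if training should stop."""
        improved = (
            self.best is None
            or self.sign * (value - self.best) > self.min_delta
        )
        if improved:
            self.best, self.best_epoch, self.wait = value, epoch, 0
            return False
        self.wait += 1
        return self.wait >= self.patience


def run(stopper, values):
    """Feed metric values epoch by epoch; return the last epoch that ran."""
    for epoch, value in enumerate(values, start=1):
        if stopper.update(epoch, value):
            return epoch
    return len(values)


# Hypothetical validation accuracies, one per epoch.
accuracies = [0.40, 0.45, 0.48, 0.47, 0.55, 0.54, 0.53, 0.52, 0.60]

no_baseline = EarlyStopper(monitor="val_accuracy", patience=3)
stopped_at = run(no_baseline, accuracies)
print(stopped_at, no_baseline.best_epoch)  # 8 5

with_baseline = EarlyStopper(monitor="val_accuracy", patience=3, baseline=0.5)
print(run(with_baseline, accuracies), with_baseline.best_epoch)  # 3 None
```

In the first run, training stops at epoch 8 but the best accuracy was at epoch 5, which is the gap that restore_best_weights=True closes. In the second run, the accuracy never clears the 0.5 baseline within the 3-epoch patience window, so training stops before the later, better epochs are ever reached.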

Before ending this article, here is another example demonstrating the syntax of EarlyStopping:

from tensorflow.keras.callbacks import EarlyStopping

batch_size = 64  # defined once so that fit() and steps_per_epoch agree

early_stopping = EarlyStopping(
    patience=10,
    min_delta=0.001,
    monitor="val_loss",
    restore_best_weights=True
)

model_history = model.fit(X_train, y_train, batch_size=batch_size, epochs=100, validation_data=(X_test, y_test), steps_per_epoch=X_train.shape[0] // batch_size, callbacks=[early_stopping])

In the above example, we use training data and validation data. Our EarlyStopping callback will stop the training if val_loss fails to improve on its best value by at least 0.001 for 10 consecutive epochs. Because restore_best_weights=True, the model then goes back to the weights from its best epoch.
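The effect of min_delta=0.001 together with patience=10 can be checked with a little arithmetic. The sketch below is a simplified pure-Python version of the rule, with a made-up helper name and two hypothetical val_loss curves; it is not the Keras implementation.

```python
def epochs_before_stop(val_losses, patience=10, min_delta=0.001):
    """Count epochs run under a simplified min_delta + patience rule."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        # An epoch only counts if it beats the best by more than min_delta.
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)


# Hypothetical curve 1: val_loss drops by 0.01 every epoch.
steady = [0.5 - 0.01 * k for k in range(30)]
# Hypothetical curve 2: val_loss creeps down by only 0.00005 per epoch.
plateau = [0.5 - 0.00005 * k for k in range(30)]

print(epochs_before_stop(steady))   # 30: every epoch is a real improvement
print(epochs_before_stop(plateau))  # 11: ten tiny gains in a row exhaust patience
```

On the plateau curve the loss is still technically decreasing, but ten epochs together gain only 0.0005, which is below the 0.001 threshold, so the rule treats it as no improvement and stops.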

So that’s what today's article is about. I hope the next time you train your Neural Network Model, you use the EarlyStopping callback.

Happy Coding….

