IoT Real Time Analytics — WAGO PLC with Databricks Auto Loader

Last Updated on September 8, 2022 by Editorial Team

Author(s): Rory McManus

Originally published on Towards AI.

Modern businesses have an overwhelming amount of data available to them from a huge number of IoT devices and applications in wide and varied file formats. This data can be extremely time-consuming and complex to ingest and transform into a readable, useful format. Auto Loader is an amazing tool from Databricks which simplifies the solution, ensures full automation of the process, and enables real-time decision making.

Databricks is a scalable big data analytics platform designed for data science and data engineering. It integrates with the major cloud platforms: Amazon Web Services, Microsoft Azure, and Google Cloud Platform.

Auto Loader is a Databricks feature that streams or incrementally ingests and transforms millions of files per hour from a data lake, reading files as they arrive. The Databricks documentation covers Auto Loader in detail.

Data Mastery recently deployed an Auto Loader solution with an OEM in the mining industry to ingest and transform telemetry data sent from WAGO PLC devices to IoT Hub. Previously, the OEM manually downloaded the data files on site, then transformed them in Excel to produce a readable report. Auto Loader fully automates this process and delivers the report automatically, eliminating one man-hour of manual data processing per shift, which saves more than 21 man-hours per week per site (24-hour operation). Needless to say, our client was delighted with the result!

How to ingest and transform WAGO PLC device data

In this article, I will explain what we did to create a streaming solution to ingest and transform WAGO PLC data being sent to the IoT hub from multiple sites and devices.

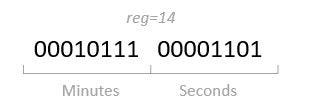

The data starts out as 1,000 16-bit Unicode registers. These are used for calculations in the ARM assembly language used by the MRA and in the PLC.

In this case, the encoding within the binary registers is subdivided to carry more than one piece of information. An example is date and time encoding, where seconds and minutes are encoded within the same 16-bit register.
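
To make the idea of a subdivided register concrete, here is a minimal sketch of unpacking minutes and seconds from a single 16-bit value. The bit layout is an assumption for illustration only (high byte holds minutes, low byte holds seconds); the real layout is defined by the PLC program, and the function name is hypothetical.

```python
from typing import Tuple


def unpack_minutes_seconds(register: int) -> Tuple[int, int]:
    """Split one 16-bit register into (minutes, seconds).

    ASSUMPTION: high byte = minutes, low byte = seconds.
    The actual WAGO register layout may differ.
    """
    if not 0 <= register <= 0xFFFF:
        raise ValueError("register must fit in 16 bits")
    minutes = (register >> 8) & 0xFF  # upper 8 bits
    seconds = register & 0xFF         # lower 8 bits
    return minutes, seconds
```

Under that assumed layout, `unpack_minutes_seconds(0x1E2D)` returns `(30, 45)`, since `0x1E` is 30 and `0x2D` is 45.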

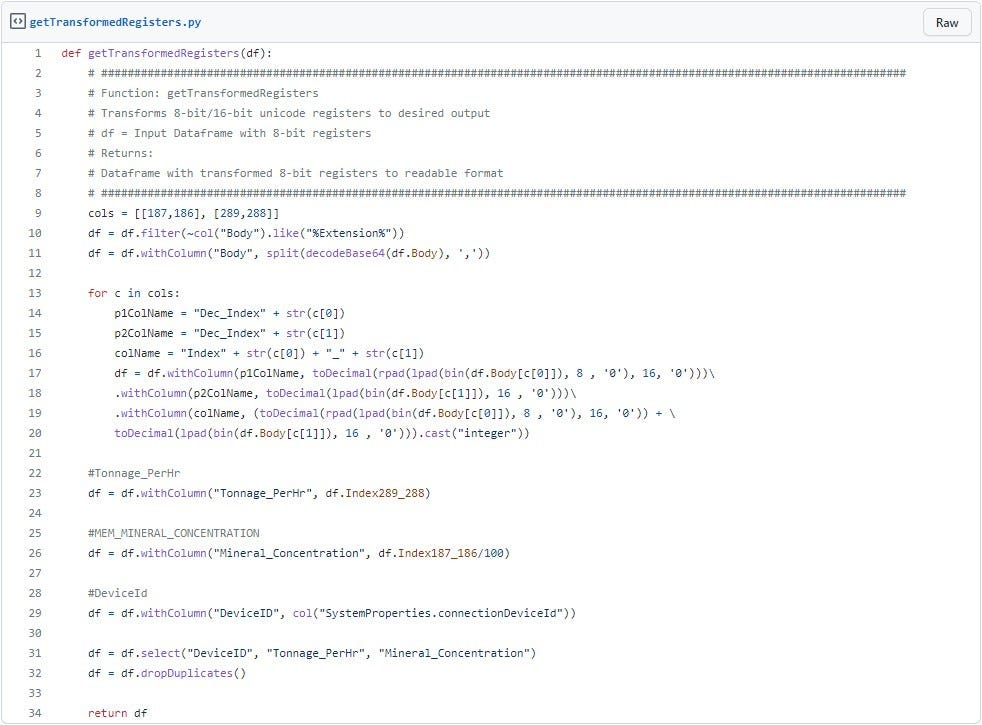

While the data is stored on the WAGO PLC device as 16-bit registers, it is sent to the IoT hub as 2000x 8-bit Unicode registers to reduce the packet sizes being sent. Therefore it needs to be transformed back into 16-bit registers from 8-bit as part of the transformation into readable format.
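
The recombination step can be sketched in plain Python as follows. This is an illustration rather than the production code: the byte order (high byte first) is an assumption that would need to be checked against the device, and `bytes_to_registers` is a hypothetical helper name.

```python
from typing import List, Sequence


def bytes_to_registers(values8: Sequence[int],
                       high_byte_first: bool = True) -> List[int]:
    """Pair consecutive 8-bit values into 16-bit registers.

    ASSUMPTION: each register is sent as two adjacent 8-bit values,
    high byte first unless high_byte_first is False.
    """
    if len(values8) % 2 != 0:
        raise ValueError("expected an even number of 8-bit values")
    registers = []
    for i in range(0, len(values8), 2):
        first, second = values8[i], values8[i + 1]
        if not (0 <= first <= 0xFF and 0 <= second <= 0xFF):
            raise ValueError("every value must fit in 8 bits")
        hi, lo = (first, second) if high_byte_first else (second, first)
        registers.append((hi << 8) | lo)
    return registers
```

A message of 2,000 8-bit values therefore yields 1,000 16-bit registers, for example `bytes_to_registers([0x1E, 0x2D, 0x00, 0x01])` returns `[7725, 1]`.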

Solution

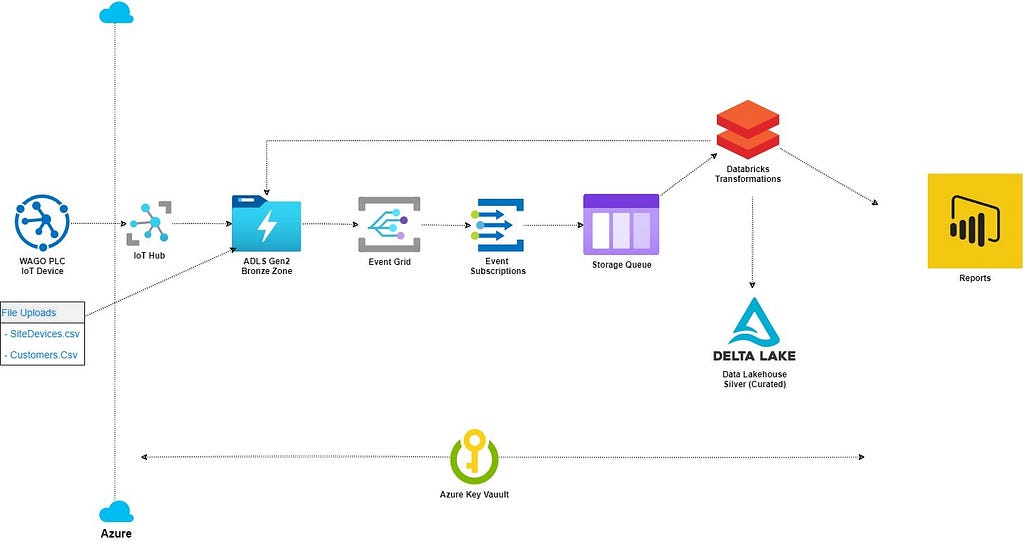

The solution used follows the high-level steps below:

- WAGO PLC messages are sent to Azure IoT Hub, where these messages are routed to specific Azure Storage containers in JSON format based on their DeviceId.

- As the file lands in the Storage Container, an Event Grid-created subscriber creates a message on an Azure Storage Queue with the location and name of the new file.

- Databricks Auto Loader checks the Storage Queue for new messages and reads the file stream into a dataframe as they arrive.

- The input dataframe is then transformed from 2000x 8-bit Unicode registers back to 1000x 16-bit Unicode registers and subsequently to the desired formats, e.g., Tonnage Per Hour, Day, Month, Year, etc.

- The transformed dataframe is then appended to a Delta Table for Power BI consumption.

Prerequisites

- Configure WAGO PLC device(s) to send messages to Azure IoT Hub

- Register an Azure service principal — used for the auto-creation of Event Grid/Subscription and Storage Queue. Alternatively, you can create the services manually if you want

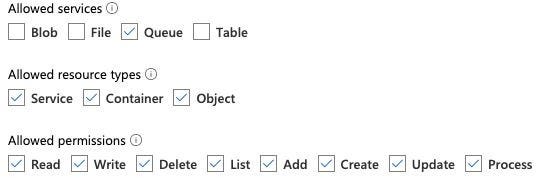

- Generate a Storage Queue Shared access signature with the following permissions

- Create the following Azure Key Vault secrets for SubscriptionId, TenantId, Bronze-Queue-ConnectionString (created above), Service Principal ClientId & ClientSecret (created above)
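
Once the secrets exist, a notebook can collect them in one place. The sketch below assumes a Key Vault-backed Databricks secret scope; the scope name and the exact secret names for the client ID and client secret are hypothetical, and the getter is passed in so the helper can be exercised outside Databricks.

```python
from typing import Callable, Dict

# Secret names as listed above. "ClientId" and "ClientSecret" are
# assumed names; use whatever names were created in Key Vault.
SECRET_NAMES = {
    "subscription_id": "SubscriptionId",
    "tenant_id": "TenantId",
    "queue_connection_string": "Bronze-Queue-ConnectionString",
    "client_id": "ClientId",
    "client_secret": "ClientSecret",
}


def load_autoloader_secrets(get_secret: Callable[[str, str], str],
                            scope: str) -> Dict[str, str]:
    """Fetch every secret Auto Loader needs from one secret scope."""
    return {field: get_secret(scope, name)
            for field, name in SECRET_NAMES.items()}


# In a Databricks notebook (scope name is hypothetical):
# secrets = load_autoloader_secrets(
#     lambda scope, key: dbutils.secrets.get(scope=scope, key=key),
#     "my-keyvault-scope",
# )
```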

To create the Auto Loader streaming solution, the following steps must be completed.

- Configure WAGO PLC devices to send messages to Azure IoT Hub

- Route incoming IoT Hub messages to specific storage containers by filtering on DeviceId

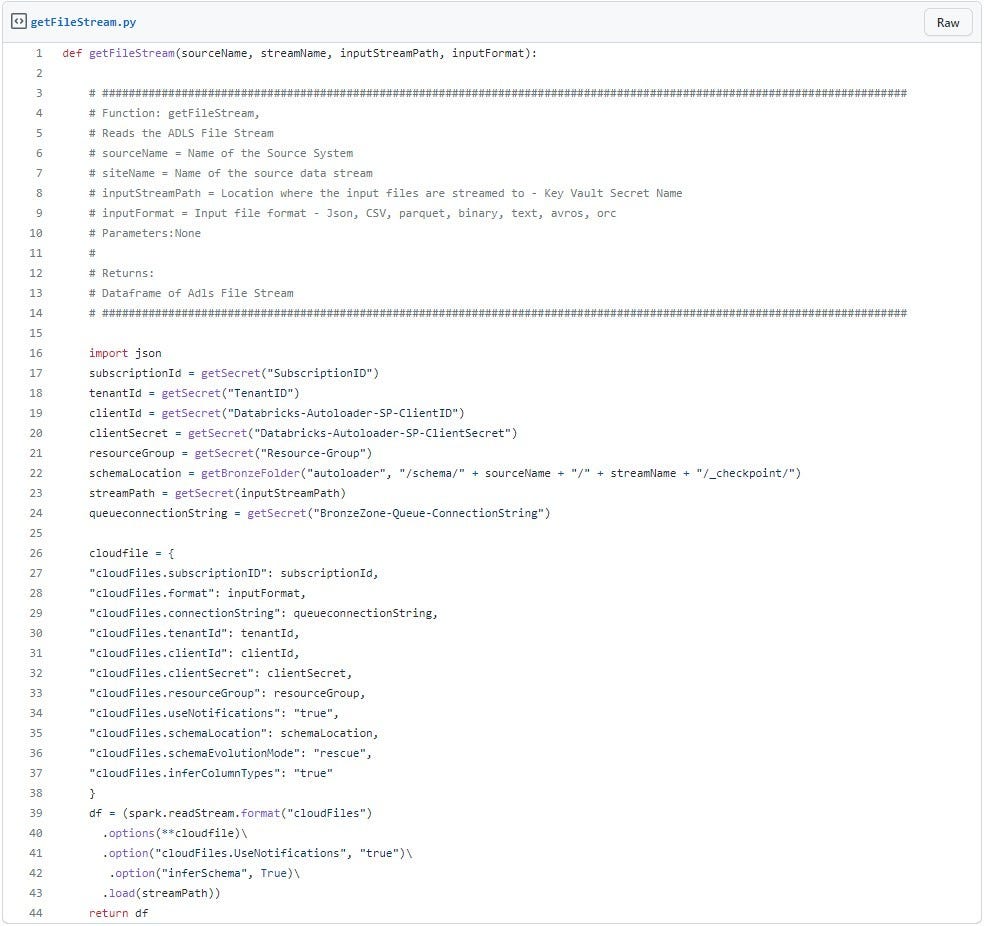

- Create a PySpark function to use Spark structured stream source cloudFiles to read from the input ADLS Gen2 storage container

- Create a PySpark function to transform the input file stream to the desired output columns

- Write the stream or batch dataframe to a Databricks Delta table

- Test 🙂

Detailed step-through:

1. Configure the WAGO PLC to send messages to the Azure IoT hub

2. Route incoming IoT Hub messages to specific storage containers depending on DeviceId

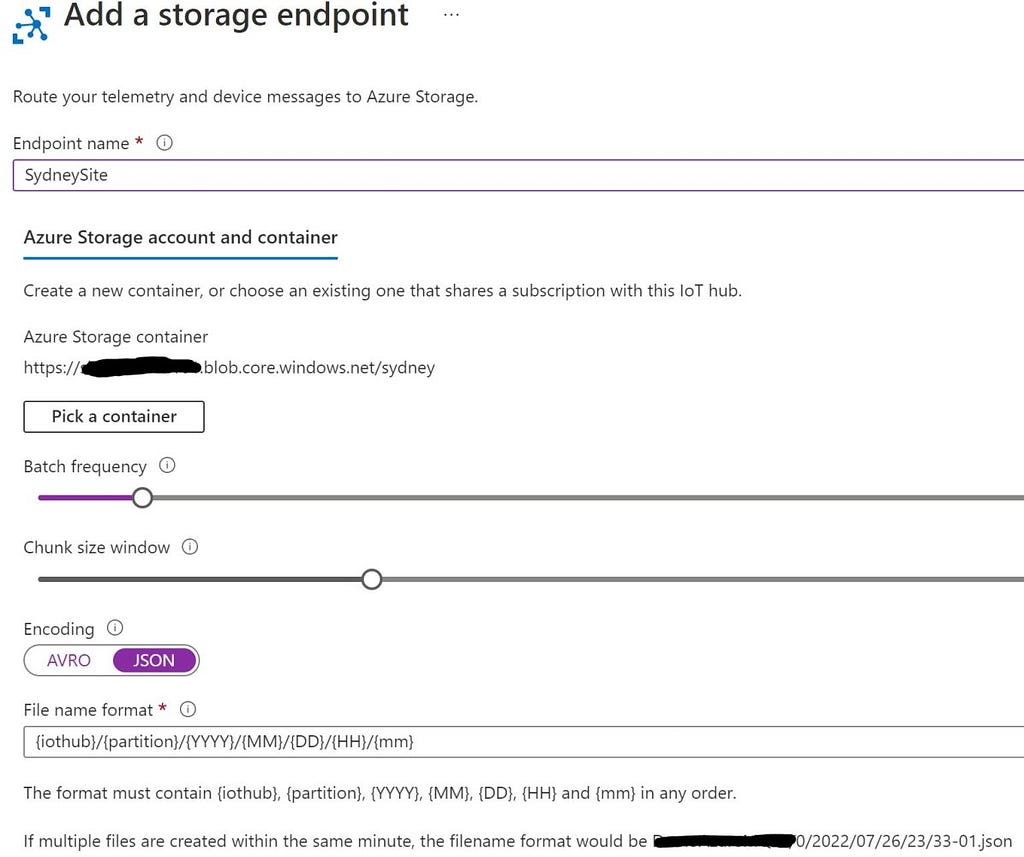

2.1. Create a custom Endpoint to an ADLS Gen2 storage container

IoT Hub → Message routing → Custom endpoints → Add

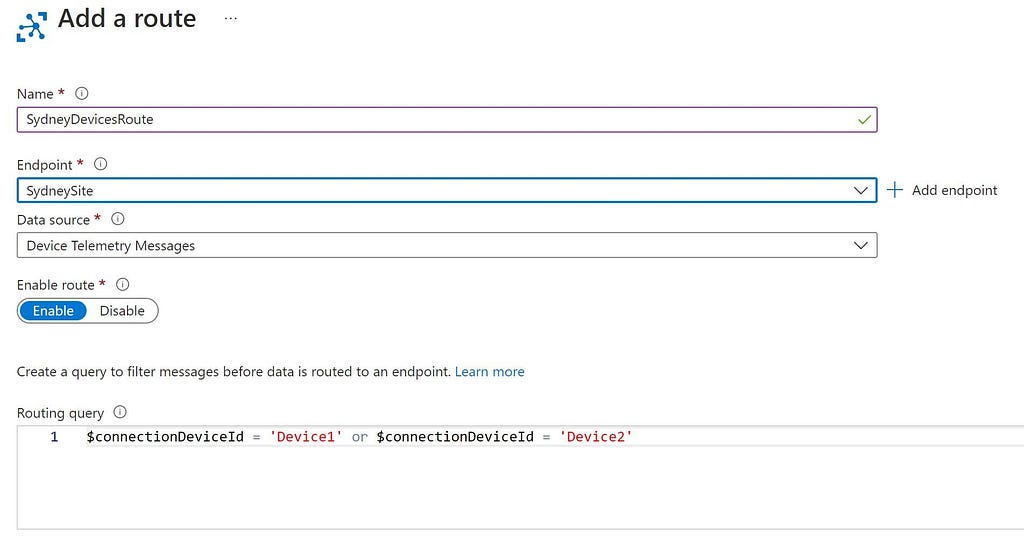

2.2. Create a route and add a query to filter by DeviceId

IoT Hub → Message routing → Routes → Add
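
As a rough illustration of the route filter, the helper below builds a routing query string that matches a single device, using the IoT Hub `$connectionDeviceId` system property. The device ID shown is hypothetical, and the helper simply rejects IDs containing quotes rather than attempting to escape them.

```python
def device_route_query(device_id: str) -> str:
    """Build an IoT Hub routing query that matches one device."""
    if not device_id or "'" in device_id or '"' in device_id:
        raise ValueError("device_id must be non-empty and contain no quotes")
    return f"$connectionDeviceId = '{device_id}'"


# Hypothetical device name, pasted into the route's query box:
print(device_route_query("wago-plc-site-01"))
```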

3. Create a PySpark function to read newly arriving files on the storage queue
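
A minimal sketch of such a function is shown below; it is an illustration under stated assumptions, not the deployed code. The `cloudFiles.*` option keys are Auto Loader options for Azure file notification mode, while the function names, the `secrets` dictionary keys, the path, and the schema are hypothetical. If an existing queue name is supplied, Auto Loader reads from it directly; otherwise the service principal details are passed so Auto Loader can create the Event Grid subscription and queue itself.

```python
from typing import Dict, Optional


def autoloader_options(secrets: Dict[str, str],
                       queue_name: Optional[str] = None,
                       resource_group: Optional[str] = None) -> Dict[str, str]:
    """Build cloudFiles options for file notification mode on Azure."""
    options = {
        "cloudFiles.format": "json",
        "cloudFiles.useNotifications": "true",
        "cloudFiles.connectionString": secrets["queue_connection_string"],
    }
    if queue_name is not None:
        # Consume events from a queue that already exists.
        options["cloudFiles.queueName"] = queue_name
    else:
        # Let Auto Loader create Event Grid and the queue itself.
        if resource_group is None:
            raise ValueError("resource_group is required for automatic setup")
        options.update({
            "cloudFiles.subscriptionId": secrets["subscription_id"],
            "cloudFiles.tenantId": secrets["tenant_id"],
            "cloudFiles.clientId": secrets["client_id"],
            "cloudFiles.clientSecret": secrets["client_secret"],
            "cloudFiles.resourceGroup": resource_group,
        })
    return options


def read_plc_stream(spark, source_path: str, schema_ddl: str,
                    options: Dict[str, str]):
    """Return a streaming dataframe over newly arriving JSON files.

    `spark` is the active SparkSession; `schema_ddl` is a DDL string
    describing the JSON layout (hypothetical, depends on the messages).
    """
    return (spark.readStream
            .format("cloudFiles")
            .options(**options)
            .schema(schema_ddl)
            .load(source_path))
```

Keeping the option building separate from the read makes it easy to inspect or log the configuration (minus secrets) before the stream starts.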

4. Create a PySpark function to transform the input file stream dataframe to the desired output columns.

NOTE: I have only added a sample transformation because of the large number of transformations involved.
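
As an illustration of the kind of transformation involved (not the production code), the sketch below pairs the 8-bit values back into 16-bit registers with a Spark SQL expression and then picks named columns out of the result. The column name `registers8`, the high-byte-first order, and the register index used in the usage note are all hypothetical.

```python
from typing import Dict, Optional


def registers16_expr(src_col: str = "registers8") -> str:
    """Spark SQL expression pairing 8-bit values into 16-bit registers.

    ASSUMPTIONS: src_col is a non-empty, even-length array of
    integers, sent high byte first.
    """
    return (f"transform(sequence(0, size({src_col}) - 2, 2), "
            f"i -> {src_col}[i] * 256 + {src_col}[i + 1])")


def add_registers16(df, src_col: str = "registers8",
                    dst_col: str = "registers16",
                    named_registers: Optional[Dict[str, int]] = None):
    """Add the 16-bit register array, then optional named columns.

    named_registers maps an output column name to a 0-based register
    index; the indices depend entirely on the PLC program.
    """
    out = df.selectExpr("*", f"{registers16_expr(src_col)} AS {dst_col}")
    if named_registers:
        picks = [f"{dst_col}[{idx}] AS {name}"
                 for name, idx in named_registers.items()]
        out = out.selectExpr("*", *picks)
    return out
```

For example, `add_registers16(df, named_registers={"tonnage_per_hour": 10})` would expose register 10 as a `tonnage_per_hour` column, assuming that is where the value lives. Because only built-in SQL functions are used, the same function works on both streaming and batch dataframes.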

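The step list earlier also includes writing the stream or batch dataframe to a Databricks Delta table. A minimal sketch of that write is shown here, assuming a table name and checkpoint path of your choosing (both hypothetical); the function name is also an assumption.

```python
def append_to_delta(df, table_name: str, checkpoint_path: str):
    """Append a streaming or batch dataframe to a Delta table.

    Returns the StreamingQuery for a streaming dataframe,
    or None after a batch write.
    """
    if df.isStreaming:
        # The checkpoint lets the stream resume where it left off.
        return (df.writeStream
                .format("delta")
                .outputMode("append")
                .option("checkpointLocation", checkpoint_path)
                .toTable(table_name))
    df.write.format("delta").mode("append").saveAsTable(table_name)
    return None
```

Branching on `df.isStreaming` keeps one entry point for both modes, which is convenient when the same transformation is tested in batch before being run as a stream.
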
6. Test 🙂

Run the process end-to-end to check the data has been inserted into the table.

Conclusion

Auto Loader is an excellent tool that can be applied across any business in any sector to get the benefits of a simple, time-efficient solution and real-time data.

I hope you have found this helpful, that getting started with Databricks Auto Loader saves your company time and money, and that it helps drive insights that add value to your business.

If you would like a copy of my code, please drop me a message on LinkedIn. If you enjoyed reading this as much as I enjoyed writing it, please share it with your friends.

This article was originally published in Towards AI on Medium.
