Nuclei Detection and Fluorescence Quantification in Python: A Step-by-Step Guide (Part 2)

Author(s): MicroBioscopicData (by Alexandros Athanasopoulos)

Originally published on Towards AI.

Welcome back to the second tutorial in our series, “Nuclei Detection and Fluorescence Quantification in Python.” In this tutorial, we will focus on measuring the fluorescence intensity from the GFP channel, extracting relevant data, and performing a detailed analysis to derive meaningful biological insights.

To fully benefit from this tutorial, it’s helpful to have a basic understanding of Python programming as well as some familiarity with fluorescence microscopy, including the principles behind using fluorescent proteins like GFP (Green Fluorescent Protein).

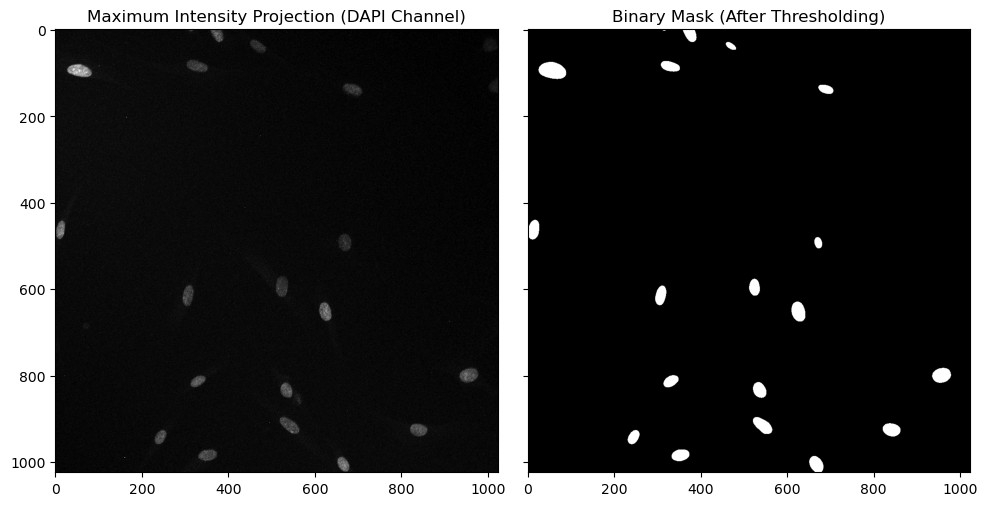

In the previous tutorial, we used images of fibroblast cells where the nuclei are labeled with DAPI, a fluorescent dye (blue channel) that binds to DNA, and a protein of interest that is present in both the cytoplasm and nucleus, detected in the green channel. We began by preprocessing the images to enhance data quality. We applied Gaussian smoothing with varying sigma values to reduce noise and used thresholding methods to effectively distinguish the nuclei from the background. Additionally, we discussed post-processing techniques, such as removing small artifacts, to further refine the segmentation results.

The code below (from our first tutorial) loads the multi-channel Z-stack, computes maximum intensity projections (MIPs) of the GFP and DAPI channels, and segments the nuclei by Gaussian smoothing and Otsu thresholding of the DAPI MIP. The next step in fluorescence quantification is to label the segmented nuclei.

```python
from skimage import io, filters, morphology, measure, segmentation, color
from skimage.measure import regionprops
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Set option to display all columns and rows in Pandas DataFrames
pd.set_option('display.max_columns', None)
pd.set_option('display.max_rows', None)

# Load the multi-channel TIFF image
image = io.imread('fibro_nuclei.tif')

# Separate the GFP channel (assuming channel 0 is GFP)
channel1 = image[:, 0, :, :]  # GFP channel

# Perform Maximum Intensity Projection (MIP) on GFP channel
channel1_max_projection = np.max(channel1, axis=0)

# Separate the DAPI channel (assuming channel 1 is DAPI)
channel2 = image[:, 1, :, :]  # DAPI channel

# Perform Maximum Intensity Projection (MIP) on DAPI channel
channel2_max_projection = np.max(channel2, axis=0)

# Apply Gaussian smoothing to the DAPI MIP
smoothed_image = filters.gaussian(channel2_max_projection, sigma=5)

# Apply Otsu's method to find the optimal threshold and create a binary mask
threshold_value = filters.threshold_otsu(smoothed_image)
binary_mask = smoothed_image > threshold_value

# Create subplots with shared x-axis and y-axis
fig, (ax1, ax2) = plt.subplots(1, 2, sharex=True, sharey=True, figsize=(10, 10))

# Visualize the Maximum Intensity Projection (MIP) for the DAPI channel
ax1.imshow(channel2_max_projection, cmap='gray')
ax1.set_title('Maximum Intensity Projection (DAPI Channel)')

# Visualize the binary mask obtained after thresholding the smoothed DAPI MIP
ax2.imshow(binary_mask, cmap='gray')
ax2.set_title('Binary Mask (After Thresholding)')

# Adjust layout to prevent overlap
plt.tight_layout()

# Display the plots
plt.show()
```

Labeling the Segmented Nuclei

Labeling the binary mask is a crucial step in image analysis. When we perform thresholding on an image, the result is a binary mask (see also our previous tutorial) where pixels are classified as either foreground/True (e.g., nuclei) or background/False. However, this binary mask alone doesn’t distinguish between different individual nuclei — it simply shows which pixels belong to the foreground and to the background.

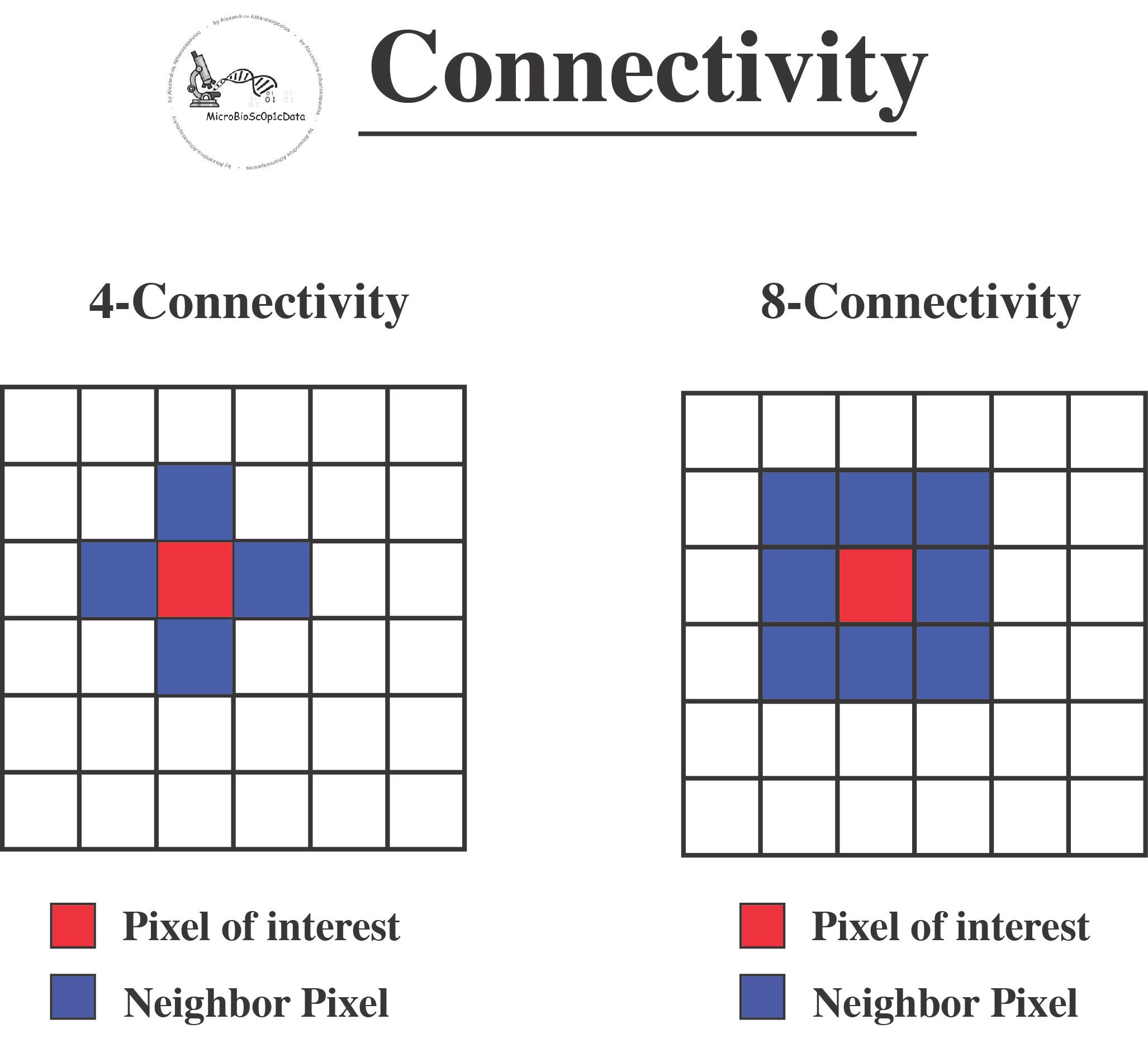

Labeling is the process of assigning a unique identifier (label) to each nucleus in the binary mask. In the context of connected components, labeling means identifying and marking groups of connected pixels (components) that represent individual objects, such as nuclei, in the image. Once the binary mask is created, a connected-components algorithm scans it to detect groups of connected pixels, using either the 4-connectivity or the 8-connectivity criterion (described below), and assigns a unique label to each connected component. Each label corresponds to a distinct nucleus in the image [1].
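To make the scanning step concrete, here is a minimal from-scratch sketch of connected-component labeling using a breadth-first flood fill. `label_components` is a hypothetical helper written only for illustration (it is not part of scikit-image): it walks the mask in raster order, gives each unvisited foreground pixel a new label, and spreads that label to every connected neighbour. The toy mask is made up, and the count is cross-checked against `skimage.measure.label`.

```python
from collections import deque

import numpy as np
from skimage import measure

# Neighbour offsets (row, col) for the two connectivity rules
OFFSETS_4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
OFFSETS_8 = OFFSETS_4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]


def label_components(mask, connectivity=8):
    """Hypothetical helper: label connected True pixels with a BFS flood fill."""
    offsets = OFFSETS_8 if connectivity == 8 else OFFSETS_4
    labels = np.zeros(mask.shape, dtype=np.int32)  # 0 = background / not yet visited
    n_rows, n_cols = mask.shape
    current = 0
    for r in range(n_rows):
        for c in range(n_cols):
            if mask[r, c] and labels[r, c] == 0:
                current += 1  # found the first pixel of a new component
                labels[r, c] = current
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in offsets:
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < n_rows and 0 <= nx < n_cols
                                and mask[ny, nx] and labels[ny, nx] == 0):
                            labels[ny, nx] = current
                            queue.append((ny, nx))
    return labels, current


# Toy binary mask (made up for illustration)
mask = np.array([
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 1, 0, 1],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 1, 1],
], dtype=bool)

labels, n = label_components(mask)
_, n_skimage = measure.label(mask, return_num=True)  # default: 8-connectivity in 2D

print(labels)
print(n, n_skimage)  # 4 4
```

The pixel at row 2, column 2 touches the top-left block only at a corner, so it joins that component under 8-connectivity; with `connectivity=4` the same mask yields five components.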

There are different types of connectivity, primarily 4-connectivity and 8-connectivity:

4-Connectivity:

- Definition: In 4-connectivity, a pixel (of interest) is considered connected to another pixel if they share an edge. In a 2D grid, each pixel has four possible neighbors: left, right, above, and below.

- Applications: 4-connectivity is often used in algorithms where diagonal connections are not considered, thus providing a more restrictive form of connectivity.

8-Connectivity:

- Definition: In 8-connectivity, a pixel (of interest) is connected to all of its neighbors, including those that share a vertex. This means that, in addition to the four edge-connected neighbors (as in 4-connectivity), the pixel is also connected to the four diagonal neighbors.

- Applications: 8-connectivity is used in applications where diagonal connections are significant, providing a more inclusive form of connectivity.
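In scikit-image the choice between the two rules is made with the `connectivity` argument of `measure.label`: for a 2D image, `connectivity=1` means 4-connectivity and `connectivity=2` means 8-connectivity. A small sketch on a made-up mask, in which two pixels touch only at a corner:

```python
import numpy as np
from skimage import measure

# Made-up mask: a diagonal pair of pixels plus an L-shaped group
mask = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
], dtype=bool)

# connectivity=1: only edge-sharing neighbours (4-connectivity in 2D)
labels_4, n_4 = measure.label(mask, connectivity=1, return_num=True)

# connectivity=2: edge- and corner-sharing neighbours (8-connectivity in 2D)
labels_8, n_8 = measure.label(mask, connectivity=2, return_num=True)

print(n_4, n_8)  # 3 2
```

Under 4-connectivity the diagonal pair counts as two separate objects; under 8-connectivity it becomes one.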

Why Labeling is Important

- Identification: Labeling allows us to identify and differentiate between individual nuclei within the binary mask. Each nucleus has a unique label, which makes it possible to treat and analyze each nucleus separately.

- Analysis: Once the nuclei are labeled, we can measure various properties of each nucleus individually, such as area, perimeter, and fluorescence intensity… This is essential for quantitative analysis in biological research.

- Visualization: Labeling also facilitates the visualization of segmented nuclei. By assigning different colors or intensities to each label, we can easily see and distinguish the segmented nuclei in a labeled image.
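As a quick illustration of per-nucleus measurements, the sketch below runs `skimage.measure.regionprops_table` on a made-up labeled image with two rectangular objects and collects one row per label in a pandas DataFrame. The property names used (`label`, `area`, `centroid`, `perimeter`) are standard scikit-image region properties; the image itself is only a toy example.

```python
import numpy as np
import pandas as pd
from skimage import measure

# Toy labeled image (made up): label 1 is a 3x3 square, label 2 a 4x5 rectangle
labels = np.zeros((10, 10), dtype=np.int32)
labels[1:4, 1:4] = 1
labels[5:9, 4:9] = 2

# One row per label; 'centroid' expands into 'centroid-0' (row) and 'centroid-1' (column)
props = measure.regionprops_table(labels, properties=('label', 'area', 'centroid', 'perimeter'))
df_props = pd.DataFrame(props)
print(df_props)
```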

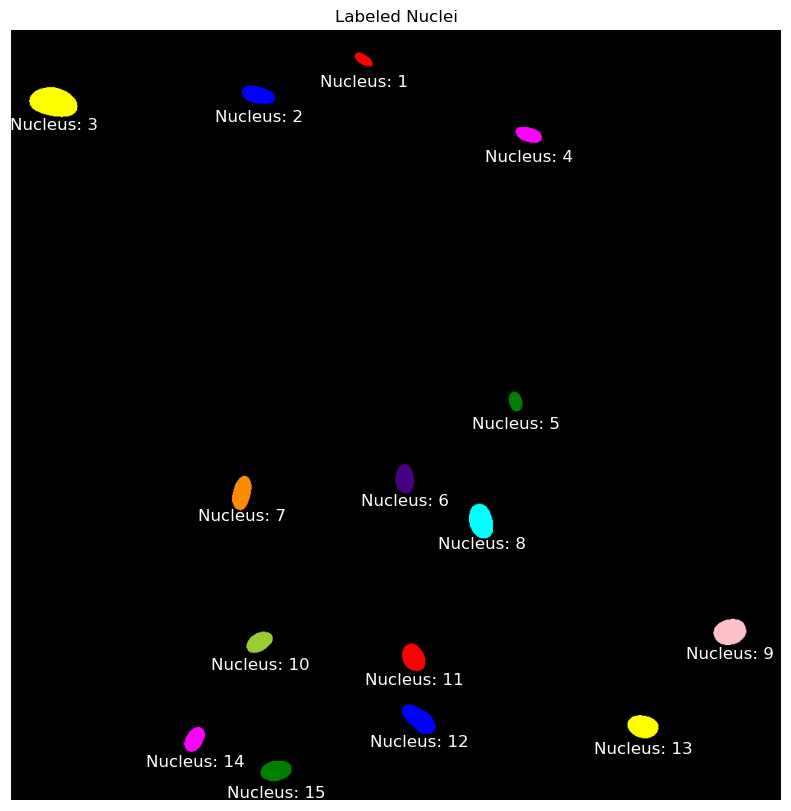

The code below labels the connected regions (components) in our binary image. The function skimage.measure.label scans the binary mask and returns a labeled image (a 2D NumPy array) in which each connected component is assigned a unique integer label (1, 2, 3, etc.) and the background keeps the value 0. Pixels that belong to the same component (e.g., a single nucleus) share the same label. By default, the function uses full connectivity, which for a 2D image means 8-connectivity.

The function color.label2rgb(labeled_nuclei, bg_label=0) from the skimage.color module converts a labeled image into an RGB (color) image.

- labeled_nuclei: the labeled image to convert.

- bg_label=0: specifies that the background label is 0, so the background is not colored (it is drawn black by default) and only the labeled regions (nuclei) receive distinct colors in the output RGB image.
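A minimal sketch of what `color.label2rgb` returns, using a made-up 4x4 labeled image: the output gains a third axis holding one RGB triplet per pixel, background pixels stay black (the default `bg_color`), and each label gets its own color. Because the result is already RGB, a colormap passed to `plt.imshow` together with it has no effect.

```python
import numpy as np
from skimage import color

# Toy labeled image (made up): 0 = background, 1 and 2 = two objects
labels = np.array([
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [2, 2, 0, 0],
])

rgb = color.label2rgb(labels, bg_label=0)

print(rgb.shape)              # (4, 4, 3): one RGB triplet per pixel
print(rgb[0, 0])              # a background pixel, left black
print(rgb[0, 1], rgb[3, 0])   # pixels of the two objects, in different colors
```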

Next, segmentation.clear_border() removes any nuclei that touch the edges of the image, so that only fully contained nuclei are considered. It sets the pixels of those nuclei to 0 but leaves the remaining label values unchanged, which leaves gaps in the numbering; the image is therefore relabeled, and the updated count is printed. Finally, the labeled nuclei are visualized in color, with each nucleus annotated near its centroid with its label number.
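The effect of `clear_border` on label numbering can be seen on a made-up 7x7 labeled image in which objects 2 and 4 touch the border. The sketch shows the tutorial's approach (relabeling the foreground with `measure.label`) next to `segmentation.relabel_sequential`, an alternative scikit-image function that renumbers existing labels consecutively without re-running connected-component labeling.

```python
import numpy as np
from skimage import measure, segmentation

# Toy 7x7 labeled image (made up): labels 2 and 4 touch the image border
labels = np.zeros((7, 7), dtype=np.int32)
labels[1:3, 1:3] = 1  # interior
labels[0:2, 4:6] = 2  # touches the top edge
labels[4:6, 3:5] = 3  # interior
labels[5:7, 0:2] = 4  # touches the bottom and left edges

cleared = segmentation.clear_border(labels)
print(np.unique(cleared))  # [0 1 3]: surviving objects keep their old IDs

# Approach used in this tutorial: relabel the foreground of the cleared image
relabeled, n = measure.label(cleared > 0, return_num=True)

# Alternative: renumber the existing labels consecutively (returns image, forward map, inverse map)
sequential, _, _ = segmentation.relabel_sequential(cleared)

print(n, np.unique(sequential))  # 2 [0 1 2]
```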

```python
# Label the nuclei and return the number of labeled components
labeled_nuclei, num_nuclei = measure.label(binary_mask, return_num=True)
print(f"Initial number of labeled nuclei: {num_nuclei}")

# Remove nuclei that touch the borders
cleared_labels = segmentation.clear_border(labeled_nuclei)

# Recalculate the number of labeled nuclei after clearing the borders
# Note: We need to exclude the background (label 0)
final_labels, final_num_nuclei = measure.label(cleared_labels > 0, return_num=True)
print(f"Number of labeled nuclei after clearing borders: {final_num_nuclei}")

# Visualize the labeled nuclei (label2rgb already returns RGB, so no colormap is needed)
plt.figure(figsize=(10, 10))
plt.imshow(color.label2rgb(final_labels, bg_label=0))
plt.title('Labeled Nuclei')
plt.axis('off')

# Annotate each nucleus with its label
for region in measure.regionprops(final_labels):
    # Take the centroid (row, col) of the region and place the label 30 pixels below it
    y, x = region.centroid
    plt.text(x, y + 30, f"Nucleus: {region.label}", color='white', fontsize=12, ha='center', va='center')

plt.show()
```

Output:

```
Initial number of labeled nuclei: 19
Number of labeled nuclei after clearing borders: 15
```

Measure fluorescence

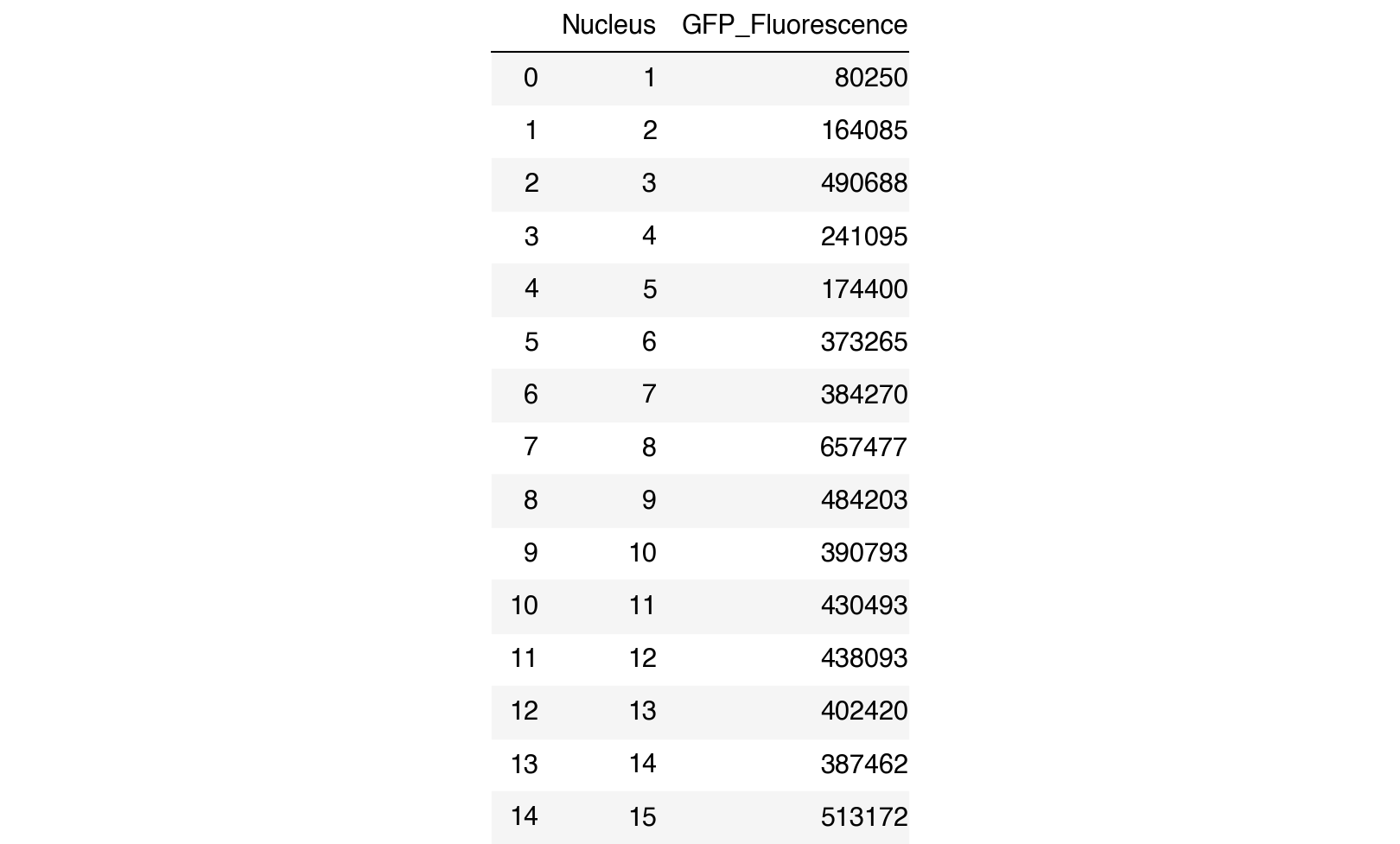

To measure the fluorescence in the green channel (GFP) of our multi-channel Z-stack image, we sum the pixel values of the GFP channel across all Z-slices within each labeled nucleus, instead of relying solely on the maximum intensity projection.

Summing the pixel values gives a better representation of the total fluorescence signal within each labeled region (nucleus) because it accounts for the entire intensity distribution along Z, whereas the maximum intensity projection keeps only the brightest value at each (x, y) position.
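The difference between the two projections can be seen on a made-up three-slice stack of a 2x2 image, with axes ordered (Z, Y, X) as in the tutorial's per-channel arrays:

```python
import numpy as np

# Made-up 3-slice Z-stack of a 2x2 image, shape (Z, Y, X)
stack = np.array([
    [[10, 0], [5, 5]],
    [[10, 0], [5, 5]],
    [[10, 40], [5, 5]],
], dtype=np.uint16)

mip = stack.max(axis=0)     # brightest value along Z for each (y, x)
summed = stack.sum(axis=0)  # total signal along Z for each (y, x)

print(mip.tolist())     # [[10, 40], [5, 5]]
print(summed.tolist())  # [[30, 40], [15, 15]]
```

In the MIP, pixel (0, 1) looks four times brighter than pixel (0, 0). Summed over Z, the two carry comparable total signal (40 vs 30), because pixel (0, 0) is moderately bright in every slice.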

The code below calculates the total GFP fluorescence for each labeled nucleus in the image by summing the pixel intensities in the GFP channel. The resulting values are stored in a list for further analysis, such as comparing fluorescence across different nuclei or assessing the distribution of GFP within the sample. The operation channel1.sum(axis=0) sums the pixel intensities across all Z-slices for each (x, y) position in the image. This results in a 2D image where each pixel value represents the total fluorescence intensity at that (x, y) coordinate across the entire depth of the sample.

```python
# Sum fluorescence in GFP channel within each labeled nucleus
gfp_fluorescence = []

# channel1.sum(axis=0) collapses the Z-stack into a 2D image of summed intensities
# (channel1.sum(axis=0) has a data type of 64-bit unsigned integer)
for region in measure.regionprops(final_labels, intensity_image=channel1.sum(axis=0)):
    # intensity_image (named image_intensity in newer scikit-image releases) is the region's
    # bounding box, with pixels outside the nucleus set to 0
    gfp_sum = region.intensity_image.sum()
    gfp_fluorescence.append(gfp_sum)

# Print the total fluorescence for each nucleus
for i, fluorescence in enumerate(gfp_fluorescence, start=1):
    print(f"Nucleus {i}: Total GFP Fluorescence = {fluorescence}")
```

Output:

```
Nucleus 1: Total GFP Fluorescence = 80250
Nucleus 2: Total GFP Fluorescence = 164085
Nucleus 3: Total GFP Fluorescence = 490688
Nucleus 4: Total GFP Fluorescence = 241095
Nucleus 5: Total GFP Fluorescence = 174400
Nucleus 6: Total GFP Fluorescence = 373265
Nucleus 7: Total GFP Fluorescence = 384270
Nucleus 8: Total GFP Fluorescence = 657477
Nucleus 9: Total GFP Fluorescence = 484203
Nucleus 10: Total GFP Fluorescence = 390793
Nucleus 11: Total GFP Fluorescence = 430493
Nucleus 12: Total GFP Fluorescence = 438093
Nucleus 13: Total GFP Fluorescence = 402420
Nucleus 14: Total GFP Fluorescence = 387462
Nucleus 15: Total GFP Fluorescence = 513172
```

Data Analysis

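The code above effectively calculates the integrated density, a measure used in image analysis to quantify the amount of signal (e.g., fluorescence) within a region of interest (such as a nucleus). For a given nucleus, it is the sum of its pixel intensities, or equivalently its mean intensity multiplied by its area in pixels.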

In fluorescence microscopy, integrated density can be used to estimate the total amount of fluorescence in a given nucleus or cellular compartment. This can be useful for comparing the expression levels of a fluorescently labeled protein between different cells or experimental conditions.
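Integrated density can also be computed for all labels at once, without a Python loop over regions. The sketch below is an alternative to the tutorial's `regionprops` loop (not the author's method), using `np.bincount` with weights on a made-up label image and intensity image, and it checks that the sum equals mean intensity times area:

```python
import numpy as np

# Made-up label image (0 = background) and matching intensity image
labels = np.array([
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 2, 0, 0],
    [2, 2, 0, 3],
])
intensity = np.array([
    [9, 4, 6, 9],
    [9, 5, 5, 9],
    [1, 2, 9, 9],
    [3, 4, 9, 7],
], dtype=np.uint16)

# Integrated density: bincount adds up the weights (intensities) for each label value.
# Index 0 is the background, so it is dropped.
integrated = np.bincount(labels.ravel(), weights=intensity.ravel())[1:]

# Area in pixels and mean intensity per label
areas = np.bincount(labels.ravel())[1:]
means = integrated / areas

print(integrated.tolist())  # [20.0, 10.0, 7.0]
print(areas.tolist())       # [4, 4, 1]
print(np.allclose(means * areas, integrated))  # True
```

Applied to the tutorial's arrays, `np.bincount(final_labels.ravel(), weights=channel1.sum(axis=0).ravel())[1:]` should reproduce the values in `gfp_fluorescence` (as floats), because `final_labels` is numbered consecutively from 1.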

The code below converts the gfp_fluorescence list into a pandas DataFrame for further statistical analysis, such as comparing fluorescence across different nuclei or conditions, calculating mean and standard deviation, or performing more advanced analyses like clustering or correlation studies.

```python
# Convert the fluorescence data into a DataFrame
df = pd.DataFrame({'Nucleus': range(1, len(gfp_fluorescence) + 1), 'GFP_Fluorescence': gfp_fluorescence})

# Display the DataFrame
df
```

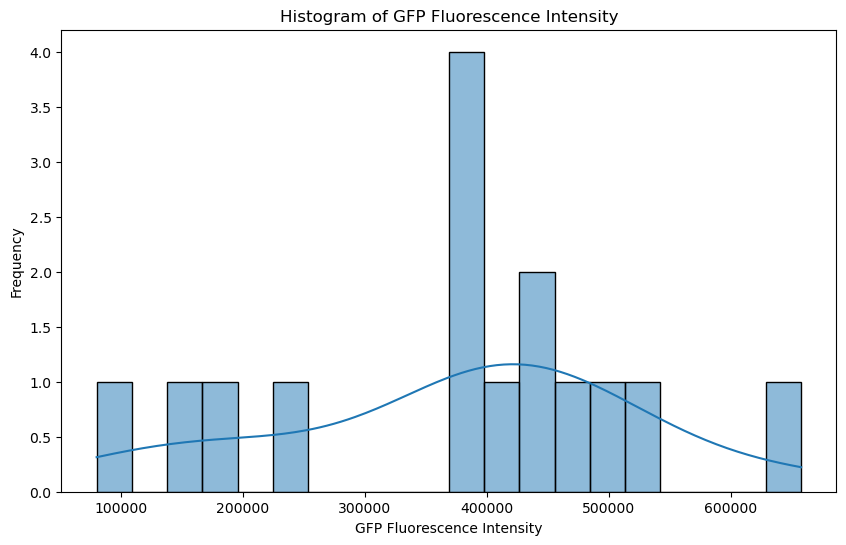
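For the mean, standard deviation and other summary statistics mentioned above, pandas' `Series.describe()` is a convenient starting point. The sketch rebuilds the DataFrame from the fifteen values printed earlier so that it runs on its own, and also looks up the brightest and dimmest nuclei:

```python
import pandas as pd

# Integrated GFP fluorescence per nucleus, copied from the output above
gfp_fluorescence = [80250, 164085, 490688, 241095, 174400, 373265, 384270, 657477,
                    484203, 390793, 430493, 438093, 402420, 387462, 513172]
df = pd.DataFrame({'Nucleus': range(1, len(gfp_fluorescence) + 1),
                   'GFP_Fluorescence': gfp_fluorescence})

# count, mean, std (sample), min, quartiles and max in one call
summary = df['GFP_Fluorescence'].describe()
print(summary)

# Nucleus numbers with the highest and lowest integrated fluorescence
brightest = df.loc[df['GFP_Fluorescence'].idxmax(), 'Nucleus']  # 8
dimmest = df.loc[df['GFP_Fluorescence'].idxmin(), 'Nucleus']    # 1
print(brightest, dimmest)
```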

By analyzing the distribution of fluorescence intensity across the nuclei, we can potentially reveal the presence of different populations or subgroups within the sample. This analysis could provide valuable insights, such as identifying distinct expression patterns or responses to treatment. Techniques like clustering can help in categorizing the nuclei based on their fluorescence profiles, enabling deeper biological interpretations.

```python
# Plot histogram
plt.figure(figsize=(10, 6))
sns.histplot(df['GFP_Fluorescence'], bins=20, kde=True)
plt.title('Histogram of GFP Fluorescence Intensity')
plt.xlabel('GFP Fluorescence Intensity')
plt.ylabel('Frequency')
plt.show()
```

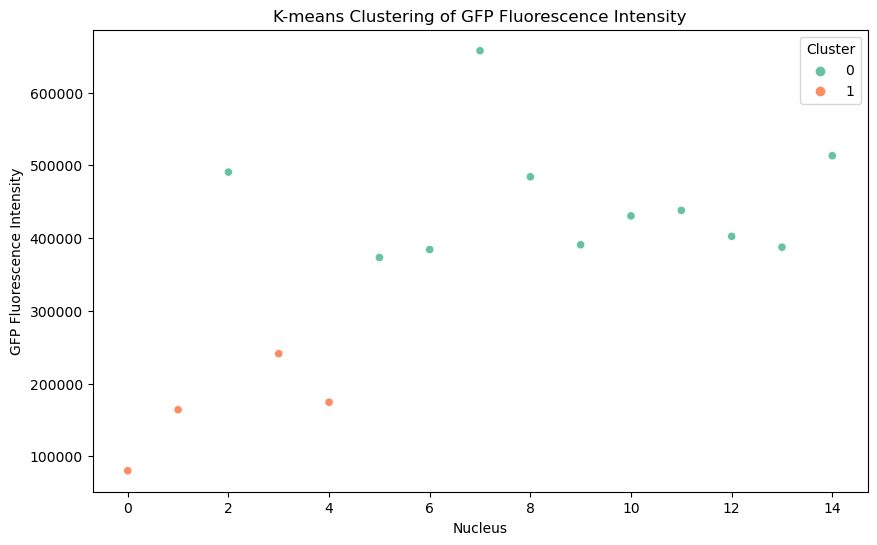

Clustering Analysis

We can apply K-means clustering to group the nuclei based on their fluorescence intensity, which can help identify distinct populations that differ in their expression levels. Here, K-means is run with two clusters. In the resulting scatter plot, each point represents a nucleus: the x-axis identifies the nucleus and the y-axis shows its total GFP fluorescence intensity. The points are color-coded by cluster, with cluster 0 in green and cluster 1 in orange, showing how the nuclei can be grouped by their GFP fluorescence intensity.

```python
from sklearn.cluster import KMeans

# Reshape data for clustering (scikit-learn expects a 2D array: samples x features)
fluorescence_data = df['GFP_Fluorescence'].values.reshape(-1, 1)

# Apply K-means clustering (let's assume 2 clusters for simplicity)
# n_init is set explicitly because its default differs between scikit-learn versions
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(fluorescence_data)
df['Cluster'] = kmeans.labels_

# Visualize clusters, using the 1-based nucleus numbers on the x-axis
plt.figure(figsize=(10, 6))
sns.scatterplot(data=df, x='Nucleus', y='GFP_Fluorescence', hue='Cluster', palette='Set2')
plt.title('K-means Clustering of GFP Fluorescence Intensity')
plt.xlabel('Nucleus')
plt.ylabel('GFP Fluorescence Intensity')
plt.show()
```
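K-means cluster IDs are arbitrary: depending on initialization, "cluster 0" may be either the low- or the high-fluorescence group. One way to make the labels interpretable, sketched here on made-up values, is to renumber clusters by the order of their centres so that 0 is always the lowest-intensity group:

```python
import numpy as np
from sklearn.cluster import KMeans

# Made-up 1D intensities with two obvious groups
values = np.array([100, 120, 110, 900, 950, 880], dtype=float).reshape(-1, 1)

kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(values)

# Sort cluster IDs by their centre, then map each old ID to its rank
order = np.argsort(kmeans.cluster_centers_.ravel())
rank = np.empty_like(order)
rank[order] = np.arange(len(order))  # rank[old_id] -> new_id (0 = lowest centre)
ordered_labels = rank[kmeans.labels_]

print(ordered_labels)  # [0 0 0 1 1 1]
```

With the tutorial's DataFrame, the same mapping could be applied as `df['Cluster'] = rank[kmeans.labels_]` after fitting.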

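Together, these plots (histogram and scatter plot) suggest that the nuclei may fall into at least two subpopulations based on their GFP fluorescence, potentially reflecting biological variability or different conditions affecting fluorescence expression. Because K-means always returns the number of clusters it is asked for, and only 15 nuclei were measured here, this grouping is best treated as a hypothesis to confirm on a larger sample.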

Conclusion

In this tutorial, we explored advanced image processing techniques for segmenting nuclei and quantifying fluorescent signals using Python. By employing methods like Gaussian smoothing, thresholding, and connected component labeling, we were able to accurately identify and separate individual nuclei in the DAPI channel. We also demonstrated how to measure fluorescence intensity in the GFP channel by summing pixel values across Z-slices to capture the full distribution of fluorescence in each nucleus. Through data analysis, we were able to quantify and interpret the fluorescence signals, enabling deeper insights into biological variations.

References:

[1] P. Bankhead, “Introduction to Bioimage Analysis.” https://bioimagebook.github.io/index.html (accessed Jun. 29, 2023).
