How To Speed Up Your Grid Search 60x

Last Updated on July 26, 2023 by Editorial Team

Author(s): Optumi

Originally published on Towards AI.

Includes an example notebook and dataset

Example notebook

For this article, we will use a notebook called GridSearchCV, created using code from a post on the website GeeksforGeeks. We will use a grid search to tune the hyperparameters of a Keras classifier.

Here are the files needed to download and run the notebook yourself:

Grid search refresher

A grid search is a technique that helps data scientists find the best settings for a model by systematically testing different combinations of hyperparameters.

For example, suppose you are training a neural network to classify images of handwritten digits. You may want to experiment with different numbers of hidden layers and different numbers of neurons in each layer. Rather than randomly guessing different values for these hyperparameters, a grid search allows data scientists to systematically try out different combinations of values and see which one gives the best results.
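To make the idea concrete, here is a minimal sketch of an exhaustive grid search in plain Python. The hyperparameter values and the `toy_score` function are hypothetical stand-ins: in the notebook, each combination would instead be scored by training and cross-validating the Keras model.

```python
from itertools import product

# Hypothetical search space: every combination of these values gets tried.
param_grid = {
    "hidden_layers": [1, 2, 3],
    "neurons_per_layer": [32, 64, 128],
}

def toy_score(hidden_layers, neurons_per_layer):
    # Hypothetical stand-in for "train the model and measure validation accuracy".
    # It simply peaks at 2 hidden layers with 64 neurons.
    return -abs(hidden_layers - 2) - abs(neurons_per_layer - 64) / 64

def grid_search(grid, score_fn):
    names = list(grid)
    best_params, best_score = None, float("-inf")
    # product() walks the full Cartesian product of the value lists.
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_params, best_score = grid_search(param_grid, toy_score)
print(best_params)  # {'hidden_layers': 2, 'neurons_per_layer': 64}
```

With 3 × 3 values this evaluates 9 combinations, and no evaluation depends on any other, which is what makes grid searches easy to parallelize.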

Baseline performance

Since the grid search is by far the most computationally intense part of this example, we will only focus on the cell containing the grid search (cell #5).

To establish a baseline for the cell’s run time, we used a laptop with 8 CPU cores and 32 GB of RAM. The cell completed in 26 minutes and 43 seconds.

Our enhancements

Let’s look more closely at this cell and walk through ways to speed it up.

1. Parallelize the grid search

By default, the grid search uses only 1 CPU core, leaving the laptop’s other 7 cores idle. Grid searches lend themselves well to parallelization, so this is straightforward to improve.

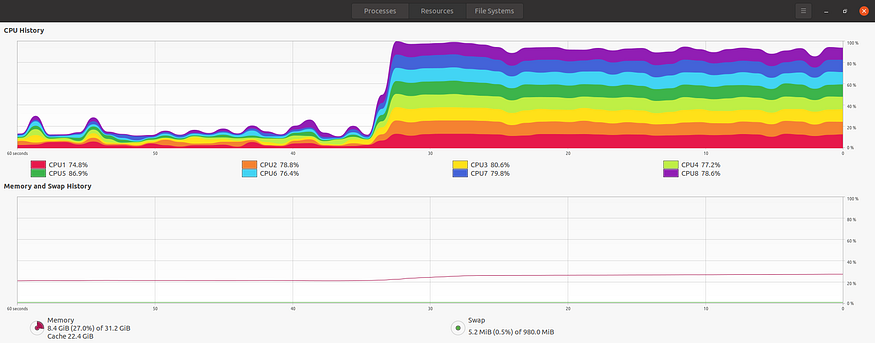

The screenshot above shows the laptop’s resource utilization: fewer than half of the cores are in use.

The first enhancement is minimally invasive and requires one small tweak to the code: we set the n_jobs parameter in the GridSearchCV call to -2 so that it uses all cores but one. Leaving one core free gives other processes on the computer some wiggle room, which is often good practice.

Our line of code should look something like the following:

```python
from sklearn.model_selection import GridSearchCV
grid = GridSearchCV(estimator=model, param_grid=params, cv=10, n_jobs=-2)  # -2: all cores but one
```
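The `-2` value follows joblib’s convention for negative `n_jobs`, which scikit-learn relies on: below -1, `n_cpus + 1 + n_jobs` workers are used, so `-2` means all cores but one, and `None` is treated as a single job outside a joblib `parallel_backend` context. The helper below is a hypothetical sketch (not part of scikit-learn’s API) that reproduces that rule with the standard library, so you can check what a given setting means on your own machine.

```python
import os

def effective_workers(n_jobs, n_cpus=None):
    """Hypothetical sketch of joblib's rule for turning n_jobs into a worker count."""
    if n_cpus is None:
        # os.cpu_count() counts logical CPUs and can return None; joblib's own
        # detection may also respect CPU affinity and container limits.
        n_cpus = os.cpu_count() or 1
    if n_jobs is None:
        return 1  # unset: a single job outside a parallel_backend context
    if n_jobs == 0:
        raise ValueError("n_jobs == 0 has no meaning")
    if n_jobs < 0:
        # -1 -> all CPUs, -2 -> all but one, and so on (never fewer than 1)
        return max(n_cpus + 1 + n_jobs, 1)
    return n_jobs

print(effective_workers(-2, n_cpus=8))    # 7, the laptop run
print(effective_workers(-2, n_cpus=120))  # 119, the large-machine run
print(effective_workers(-2))              # depends on this machine
```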

We ran it on the same laptop as the previous experiment, and the cell completed in 7 minutes and 34 seconds, roughly 3.5x faster than the baseline.

2. Leverage a bigger machine

The second enhancement is non-invasive and requires no code changes. Because the grid search can now run in parallel across as many cores as it is given, we simply ran it on a much larger machine with 120 cores.

The cell used 119 cores, as expected with n_jobs=-2, and completed in 27 seconds: a 60x improvement over the first run!

🏎 After: 27 seconds
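The headline figure follows directly from the measured times. A small sketch of the arithmetic, using only the three run times reported in this article:

```python
def to_seconds(minutes, seconds):
    return 60 * minutes + seconds

# Run times reported in this article
baseline = to_seconds(26, 43)         # laptop, default single-job grid search
laptop_parallel = to_seconds(7, 34)   # laptop, n_jobs=-2 (7 workers)
big_machine = to_seconds(0, 27)       # 120-core machine, n_jobs=-2 (119 workers)

print(f"Baseline: {baseline} s")                                       # Baseline: 1603 s
print(f"Laptop, parallel: {baseline / laptop_parallel:.1f}x faster")   # 3.5x faster
print(f"120-core machine: {baseline / big_machine:.1f}x faster")       # 59.4x faster
```

So the "60x" in the title is a rounded 59.4x, and parallelizing on the laptop alone gave about 3.5x.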

Key Takeaways

So, what should be remembered going forward?

- Grid searches are highly parallelizable, since none of the training runs depend on each other, but our first run did not take advantage of this. Some packages default to single-core processing, so make sure to check your notebook’s resource utilization.

- Performance improved at every step we tried, from 1 core all the way up to 119. Because grid searches parallelize so naturally, similar scaling can often be expected in other grid searches. However, the size of your dataset can have an impact (e.g., a huge dataset brings more RAM overhead), and there will likely be a point of diminishing returns (e.g., 300 cores might actually slow the grid search down).
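One reason for diminishing returns is that a grid search contains a fixed number of independent fits: the number of parameter combinations times the number of cross-validation folds (the `cv=10` above), plus one final refit of the best model when GridSearchCV’s default `refit=True` is used. Once there are more workers than fits, extra cores sit idle. The sketch below uses an idealized model that assumes every fit takes the same time and ignores the refit and all scheduling, memory, and data-copying overhead; the 3 × 4 grid is hypothetical.

```python
import math

def total_fits(n_candidates, cv_folds):
    # Each (parameter combination, fold) pair is one independent training run.
    return n_candidates * cv_folds

def ideal_rounds(n_fits, n_workers):
    # Idealized: equal-length fits, no overhead; workers process fits in "waves".
    return math.ceil(n_fits / n_workers)

fits = total_fits(n_candidates=3 * 4, cv_folds=10)  # hypothetical 3 x 4 grid, cv=10
for workers in (1, 7, 119, 300):
    print(f"{workers:>3} workers: {ideal_rounds(fits, workers):>3} rounds of fits")
```

In this model, 120 fits need 120 rounds on 1 worker, 18 on 7, 2 on 119, and 1 on 300: everything past 120 workers adds nothing, and in practice the start-up and memory cost of extra workers can make things slower.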

Solution options

Finally, what tools or resources are needed to accomplish the goal in this article?

To get results similar to the above, you will need a machine with 120 CPU cores. Unless you or your company has one lying around, your options are likely:

- Provision a cloud instance yourself.

- Ask DevOps or IT to provision a cloud instance.

- Use an ML service that provisions cloud instances. Make sure the service you are evaluating has access to the type of instance you need.

Happy experimenting 🧪

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.