How do GPUs Improve Neural Network Training?

Last Updated on June 19, 2021 by Editorial Team

Author(s): Daksh Trehan

Deep Learning

What do GPUs have to offer in comparison to CPUs?

I bet most of us have heard about “GPUs”. It is often said that a GPU is the best investment you can make for gaming.

But the technology that once catered mainly to the gaming industry is now a core element of various other realms, including Artificial Intelligence, video rendering, and healthcare.

The two main GPU manufacturers are NVIDIA and AMD. These electronics giants once designed their GPUs almost solely for the gaming industry, but as the field of AI expands, the demand for GPUs and their specialization are growing rapidly.

The growing attention on Deep Learning has pushed manufacturers to invest in software as well as hardware. NVIDIA’s CUDA platform, for example, lets developers program GPUs for general-purpose computation rather than only for graphics.

What is a GPU and how does it differ from a CPU?

GPU stands for Graphics Processing Unit. It usually sits on the graphics card of your machine and, unlike the CPU, is not strictly required for the machine to work.

GPUs are silicon-based microprocessors that help speed up processes involving heavy mathematical computation. In simple words, a GPU can be thought of as extra muscle for the CPU: it cannot work on its own.

Though both the GPU and the CPU are silicon-based and fast, the difference lies in their architectures and modes of operation. A CPU usually has a handful of cores (2, 4, 8, or 16), whereas a GPU comprises thousands of smaller cores. Additionally, the CPU works largely serially on general-purpose data, while the GPU works in parallel on specific types of data.
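The difference between the two modes of operation can be sketched in a few lines of Python. This is only an illustration of how the work is divided, not of real GPU speed: the function names and the choice of four workers are assumptions made for this example, and Python threads on a CPU will not show the speed-up a real GPU gives.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_serial(values, factor):
    """Process one element after another, the way a single core would."""
    return [v * factor for v in values]


def scale_chunk(chunk, factor):
    """Work assigned to one worker: scale only its own slice of the data."""
    return [v * factor for v in chunk]


def scale_parallel(values, factor, workers=4):
    """Split the data into slices and hand each slice to a separate worker."""
    size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns the results in the same order as the input chunks
        results = pool.map(scale_chunk, chunks, [factor] * len(chunks))
        return [v for chunk in results for v in chunk]


data = list(range(10))
# Both approaches give the same answer; only the division of work differs.
assert scale_serial(data, 2) == scale_parallel(data, 2)
```

The serial version touches every element in turn, while the parallel version gives each worker its own slice. A GPU applies the same idea with thousands of cores instead of four workers.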

A single CPU core is typically faster than a single GPU core, but due to the above-mentioned differences, a GPU can outperform a CPU when the process is computationally expensive and can be split across many cores.

While playing heavy-duty games, our machines need to render a lot of graphics, and graphics are drawn using mathematical computations. When the CPU is put in charge of this work, it operates serially, and thus we usually suffer lag in our game: the plants are loaded first, then the roads, and then the player. But when the same scenario is processed on a GPU, its parallel nature means these things are handled concurrently, and we get smooth, lag-free output.

GPUs also differ from CPUs in terms of memory access. A GPU is built to move much more data to and from its memory at once, so while a CPU core can match or beat the speed of a GPU core, the CPU can’t match the memory bandwidth of the GPU (again, a consequence of feeding a high number of cores).

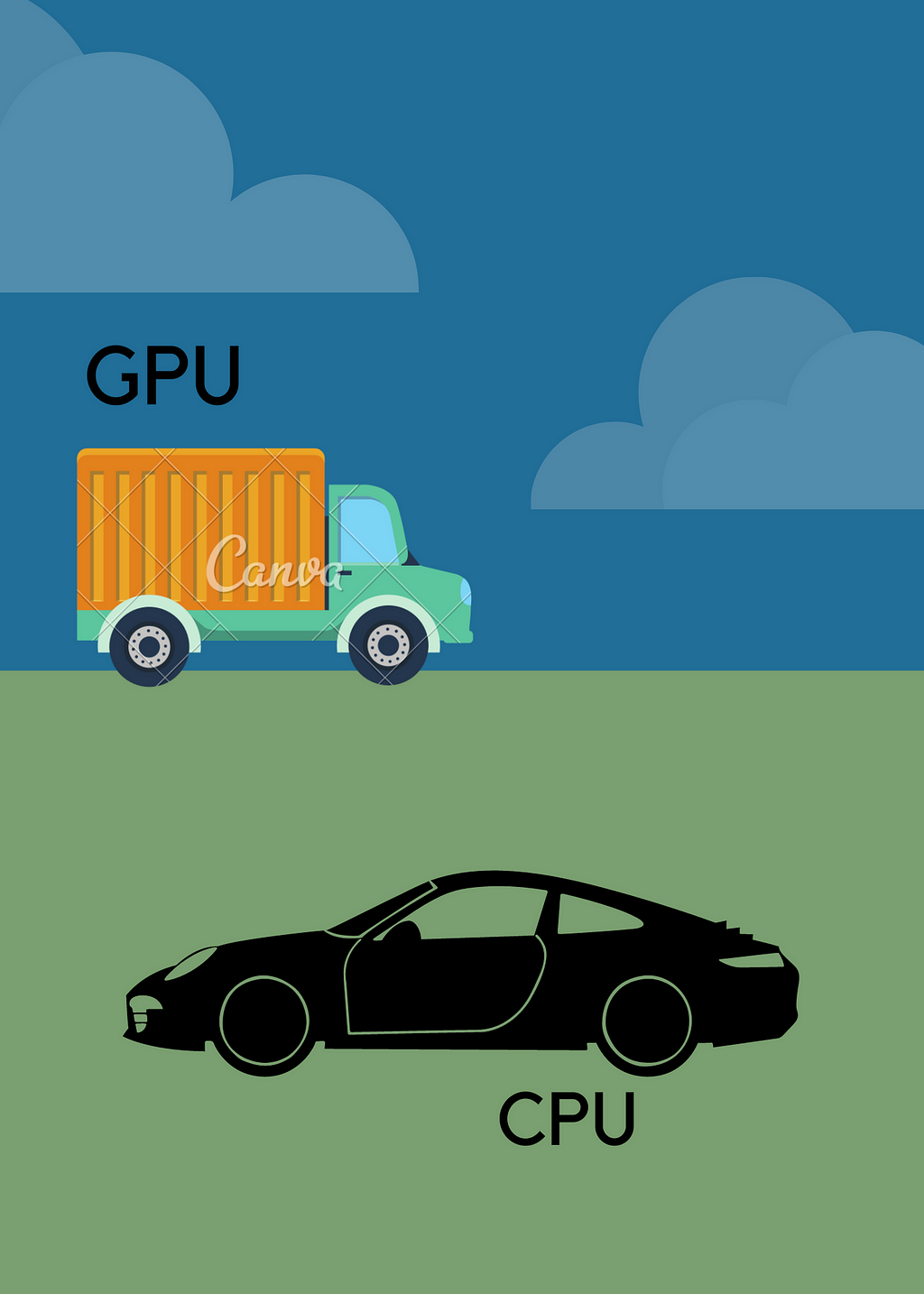

Think of GPUs as trucks and CPUs as Porsches. The Porsche is indeed faster, but it is only a two-seater, whereas a truck can carry many people at once. In addition, GPUs have more cores, i.e. more trucks, and thus move more data. This higher bandwidth helps GPUs outperform CPUs, which is why they are used in modern high-tech computers.

As the world grows more complex each day, the use cases for GPUs keep increasing, and they are now used even in domains where no visualization is required. GPUs are extensively used in Bitcoin mining, Neural Network training, etc.
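The Porsche and truck analogy comes down to simple arithmetic: throughput is what you carry per trip multiplied by how many trips you make. The sketch below puts numbers on it. All the figures (seats, trips per hour, fleet size) are made up for illustration and do not describe any real CPU or GPU.

```python
def people_per_hour(seats, trips_per_hour, vehicles=1):
    """Throughput = capacity per trip x trips per hour x number of vehicles."""
    return seats * trips_per_hour * vehicles


# Hypothetical numbers: the Porsche is fast but small, the truck slow but large.
porsche = people_per_hour(seats=2, trips_per_hour=10)
truck = people_per_hour(seats=40, trips_per_hour=4)
truck_fleet = people_per_hour(seats=40, trips_per_hour=4, vehicles=8)

print(porsche)      # 20
print(truck)        # 160
print(truck_fleet)  # 1280
```

Even though each truck makes fewer trips, it moves far more people per hour, and a fleet of trucks widens the gap further. That is the sense in which bandwidth, rather than raw speed, decides the outcome.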

Three things at which GPUs excel compared to CPUs are:

- Parallelization

- Larger memory bandwidth

- Faster memory access

An individual GPU core might be slower, but a GPU takes in more data at once, holds it in its own fast memory, and, thanks to its parallel mode of operation, processes that data much faster than a CPU.

How do GPUs assist in Neural Network training?

GPUs are gradually becoming a must for Neural Network training because the data is extensive and highly complex, and the models have many parameters. A deep Neural Network can consist of millions of parameters stored in the form of arrays.
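To get a feel for how quickly parameters add up, here is a small sketch that counts the weights and biases of a fully connected network. The layer sizes are hypothetical, chosen only for illustration (784 inputs, two hidden layers of 1024 units, 10 outputs).

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each pair of consecutive layers contributes a weight matrix of
    n_in * n_out values plus one bias per output unit.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical layer sizes, not taken from any particular model.
layer_sizes = [784, 1024, 1024, 10]
print(count_parameters(layer_sizes))  # 1863690
```

Even this modest network has close to two million parameters, and every one of them is updated at each training step.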

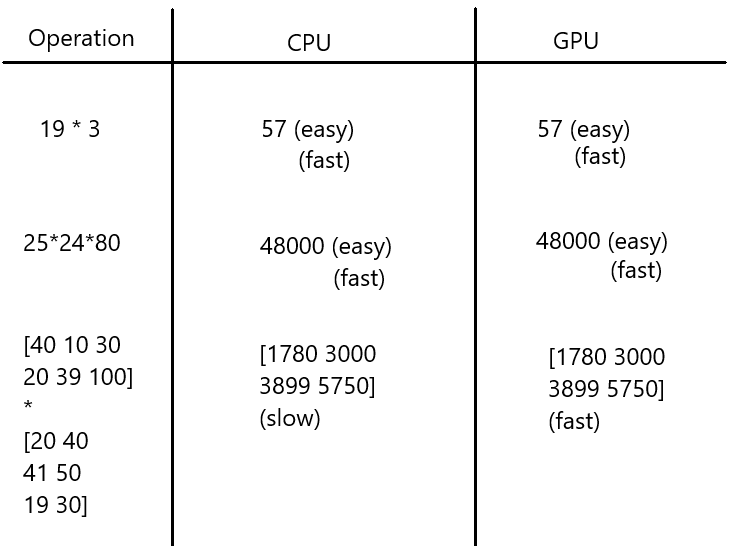

Now, for illustration:

It can be concluded that CPUs are great with scalar values but struggle with matrices, because matrix operations need many values at the same time. CPUs are Porsches: they can take only one or two values at a time, whereas GPUs are trucks and can carry many values together. By the time the CPU has finished fetching all the values from RAM, the GPU would have already fetched them all, performed the multiplication, put the answer back, and still have some time left.

Working in favor of GPUs, many Neural Network computations are embarrassingly parallel, i.e. they can easily be broken down into smaller, independent subproblems, following a divide-and-conquer approach.

The nature of Neural Networks, combined with the parallel mode of operation of GPUs, delivers blazing-fast training.
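A plain-Python matrix multiplication makes it clear why so many values are needed at once. This is a minimal sketch written for readability, not the way any deep learning library actually implements it.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output cell needs a whole row of a and a whole column of b.
            out[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return out


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))  # [[19, 22], [43, 50]]
```

Every output cell takes `inner` multiply-adds, so the whole product takes `rows * cols * inner` of them. A processor that handles one or two values at a time has to walk through these one by one, while no cell depends on any other cell.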
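As a rough sketch of what “embarrassingly parallel” means here, the example below treats each output row of a matrix product as its own subproblem and hands the rows to separate workers. The function names and the worker count are assumptions for this illustration, and a thread pool on a CPU is only a stand-in for the thousands of cores on a GPU.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, b):
    """One independent subproblem: compute a single output row."""
    cols = len(b[0])
    return [sum(x * b[k][j] for k, x in enumerate(row)) for j in range(cols)]


def matmul_by_rows(a, b, workers=4):
    """Divide: one task per row of a. Conquer: collect the rows in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_by_rows(a, b))  # [[19, 22], [43, 50]]
```

No row needs the result of any other row, so the tasks never have to wait on each other. That independence is what lets the work spread across as many cores as are available.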

Don’t have a GPU?

Even if you don’t have a GPU and are an emerging Data Scientist or Machine Learning Engineer, there is nothing to worry about.

GPU power can be shared through the cloud and used from any machine. Believe me, even your dreadfully slow machine can perform millions of operations in the snap of a finger.

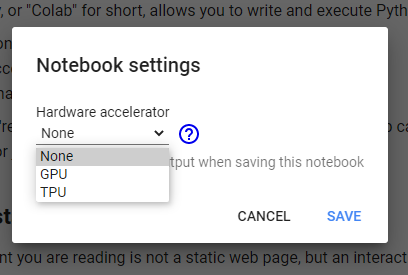

One of the most common cloud GPU environments is Google Colab.

To start with GPU computation, simply open a new notebook and change the “runtime type” to GPU.
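Once the runtime is changed, it is worth checking that a GPU is actually visible. The sketch below is one hedged way to do that using only the Python standard library: it assumes an NVIDIA GPU whose driver ships the `nvidia-smi` command-line tool, and the helper name `gpu_visible` is made up for this example. Deep learning frameworks offer their own checks as well, for instance `torch.cuda.is_available()` in PyTorch.

```python
import shutil
import subprocess


def gpu_visible():
    """Return True if `nvidia-smi -L` runs and lists at least one GPU."""
    if shutil.which("nvidia-smi") is None:
        # The tool is not installed, so no NVIDIA driver is available here.
        return False
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU visible:", gpu_visible())
```

If this prints `False` in a notebook, the runtime is most likely still set to CPU.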

Conclusion

In this article, we tried to shed light on how GPUs outperform CPUs and how Neural Network training can benefit from the use of GPUs rather than CPUs.

If you like this article, please consider subscribing to my newsletter: Daksh Trehan’s Weekly Newsletter.

Find me on the Web: www.dakshtrehan.com

Follow me on LinkedIn: www.linkedin.com/in/dakshtrehan

Read my Tech blogs: www.dakshtrehan.medium.com

Connect with me on Instagram: www.instagram.com/_daksh_trehan_

Want to learn more?

How is YouTube using AI to recommend videos?

Detecting COVID-19 Using Deep Learning

The Inescapable AI Algorithm: TikTok

GPT-3 Explained to a 5-year old.

Tinder+AI: A perfect Matchmaking?

An insider’s guide to Cartoonization using Machine Learning

How Google made “Hum to Search?”

One-line Magical code to perform EDA!

Give me 5-minutes, I’ll give you a DeepFake!

Cheers

How do GPUs Improve Neural Network Training? was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.


Note: Content contains the views of the contributing authors and not Towards AI.