Deploying LLM Chat Applications with Declarai, FastAPI, and Streamlit

Last Updated on August 19, 2023 by Editorial Team

Author(s): Matan Kleyman

Originally published on Towards AI.

In October 2022, when I began experimenting with Large Language Models (LLMs), my initial inclination was to explore text completions, classifications, NER, and other NLP-related areas. Although the experience was invigorating, I soon sensed a paradigm shift. Interest in traditional completions-based LLMs declined noticeably, making way for chat models like GPT-3.5 and GPT-4 that provided a coherent chat experience.

This transition coincided with an industry buzz around chatbots. Whether it’s an assistant chatbot or one tailored for business flows, my colleagues were convinced — chat was the way forward. My journey hence pivoted to building chatbots for various use cases.

Lately, we decided to share this knowledge and therefore integrated chatbot functionalities into Declarai. Our vision? An open-source tool so intuitive that anyone can deploy any LLM-related task in under 5 minutes, tailored for 95% of standard use cases, while still being able to build a robust production foundation around it.

Declarai in Action 🚀

Declarai’s ethos is empowering developers to declare their intended task. For our demonstration, we’ll create a SQL Chatbot that fields SQL-related queries.

To begin, we design our system prompt — the guiding message that sets the boundaries for our chatbot’s capabilities.

We use GPT-3.5 (the gpt-3.5-turbo model) to create a SQL chatbot that helps with any SQL-related question.

    from declarai import Declarai

    declarai = Declarai(provider="openai", model="gpt-3.5-turbo")


    @declarai.experimental.chat
    class SQLChat:
        """
        You are a sql assistant. You are helping a user to write a sql query.
        You should first know what sql syntax the user wants to use. It can be mysql, postgresql, sqllite, etc.
        If the user says something that is completely not related to SQL, you should say "I don't understand. I'm here to help you write a SQL query."
        After you provide the user with a query, you should ask the user if they need anything else.
        """

In Declarai, this guiding message is seamlessly embedded within the class’s docstring, ensuring clarity and readability. We can also initiate the chat with a friendly greeting:

    @declarai.experimental.chat
    class SQLChat:
        """
        You are a sql assistant. You are helping a user to write a sql query.
        You should first know what sql syntax the user wants to use. It can be mysql, postgresql, sqllite, etc.
        If the user says something that is completely not related to SQL, you should say "I don't understand. I'm here to help you write a SQL query."
        After you provide the user with a query, you should ask the user if they need anything else.
        """

        greeting = "Hey dear SQL User. Hope you are doing well today. I am here to help you write a SQL query. Let's get started!. What SQL syntax would you like to use? It can be mysql, postgresql, sqllite, etc."

Now, let's interact with our chatbot:

    sql_chat = SQLChat()
    # > "Hey dear SQL User. Hope you are doing well today. I am here to help you write a SQL query. Let's get started!. What SQL syntax would you like to use? It can be mysql, postgresql, sqllite, etc."

    sql_chat.send("I'd prefer MySQL.")
    # > "Fantastic choice! How can I aid you with your MySQL query?"

    sql_chat.send("From the 'Users' table, fetch the 5 most common names.")
    # > "Certainly! Here's a MySQL query that should work:
    # > SELECT name, COUNT(*) AS count
    # > FROM Users
    # > GROUP BY name
    # > ORDER BY count DESC
    # > LIMIT 5;
    # > Is there anything else I can assist you with?"
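Before trusting a generated query like the one in the transcript, it can help to run it against a throwaway database. The sketch below is only an illustration: it uses Python's built-in sqlite3 module rather than MySQL, and the `Users` table contents and the `top_names` helper are made up for this example.

```python
import sqlite3

# The query returned by the chatbot in the transcript, unchanged.
GENERATED_QUERY = """
SELECT name, COUNT(*) AS count
FROM Users
GROUP BY name
ORDER BY count DESC
LIMIT 5;
"""


def top_names(conn: sqlite3.Connection) -> list:
    """Run the generated query and return its rows as (name, count) tuples."""
    return conn.execute(GENERATED_QUERY).fetchall()


def build_sample_db() -> sqlite3.Connection:
    """Create an in-memory Users table with invented rows (assumption)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Users (id INTEGER PRIMARY KEY, name TEXT)")
    # Distinct counts per name so the expected ordering is unambiguous.
    sample = {"Ana": 6, "Ben": 5, "Cy": 4, "Di": 3, "Ed": 2, "Flo": 1}
    for name, times in sample.items():
        conn.executemany(
            "INSERT INTO Users (name) VALUES (?)", [(name,)] * times
        )
    return conn


if __name__ == "__main__":
    for name, count in top_names(build_sample_db()):
        print(name, count)
```

SQLite accepts this particular query as written, but dialects differ, so a check like this is a sanity test and not a substitute for running the query on the target database.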

With the SQLChat example laid out, you’ve glimpsed the power of a well-structured conversational model.

Note 📝: Remember, SQLChat is just one example. You can easily tailor the chatbot to your specific needs by adjusting the system message.

The next step is setting up our backend. Using FastAPI and Streamlit, we’ll bring our chatbot to life and make it accessible to users.

The API Backend ⚙️

To bring our chatbot to a wider audience, we’ll utilize FastAPI as our RESTful API gateway and Streamlit for frontend integration.

    from fastapi import FastAPI, APIRouter
    from declarai.memory import FileMessageHistory

    from .chat import SQLChat

    app = FastAPI(title="Hey")
    router = APIRouter()


    @router.post("/chat/submit/{chat_id}")
    def submit_chat(chat_id: str, request: str):
        # `request` is not part of the path, so FastAPI reads it as a query parameter.
        # The chat is rebuilt from the history file of this session on every call.
        chat = SQLChat(chat_history=FileMessageHistory(file_path=chat_id))
        response = chat.send(request)
        return response


    @router.get("/chat/history/{chat_id}")
    def get_chat_history(chat_id: str):
        # Return the messages stored so far for this session.
        chat = SQLChat(chat_history=FileMessageHistory(file_path=chat_id))
        response = chat.conversation
        return response


    app.include_router(router, prefix="/api/v1")

    if __name__ == "__main__":
        import uvicorn  # pylint: disable=import-outside-toplevel

        uvicorn.run(
            "main:app",
            host="0.0.0.0",
            port=8000,
            workers=1,
            use_colors=True,
        )

Our FastAPI configuration establishes two key routes: one for submitting new messages and another for retrieving chat histories. Declarai’s FileMessageHistory ensures continuity in our chat threads across sessions by saving the conversation state at every interaction. If you prefer to save message history in a database (Postgres, Redis, or MongoDB), you can do so by simply replacing the FileMessageHistory class.
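To make the idea of saving the conversation state at every interaction concrete, here is a minimal, self-contained sketch of a file-backed history. It is a hypothetical stand-in written for this article, not Declarai's FileMessageHistory and not its interface; the class name `JsonFileHistory`, its methods, and the JSON layout are all assumptions. The `role` and `message` keys simply mirror what the frontend reads later on.

```python
import json
from pathlib import Path
from typing import Dict, List


class JsonFileHistory:
    """Hypothetical file-backed message store (illustration only)."""

    def __init__(self, file_path: str) -> None:
        self.path = Path(file_path)

    @property
    def messages(self) -> List[Dict[str, str]]:
        # A session that has never been written to has an empty history.
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text(encoding="utf-8"))

    def add_message(self, role: str, message: str) -> None:
        # Re-read, append, and rewrite the whole file on every interaction,
        # so a fresh instance pointed at the same file sees the full thread.
        updated = self.messages + [{"role": role, "message": message}]
        self.path.write_text(json.dumps(updated), encoding="utf-8")
```

Because the state lives in the file and not in the object, each request can build a new instance from the session name and still continue the same conversation. A database-backed variant would presumably keep the same two operations and swap the file reads and writes for queries.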

The Frontend Experience 🎨

Our user interface is a chat GUI developed using Streamlit.

The Streamlit setup is fairly concise as well:

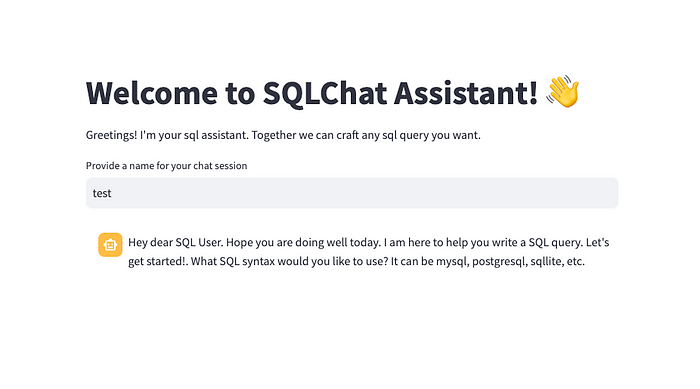

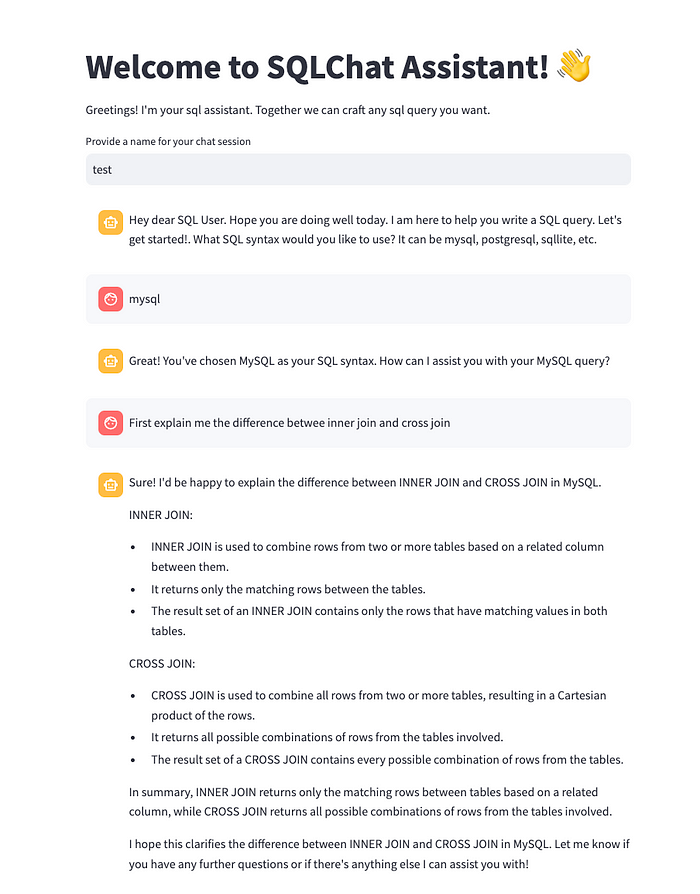

    import streamlit as st
    import requests

    st.write("# Welcome to SQLChat Assistant! 👋")

    st.write(
        "Greetings! I'm your sql assistant.\n Together we can craft any sql query you want.")

    session_name = st.text_input("Provide a name for your chat session")

    if session_name:
        messages = requests.get(f"http://localhost:8000/api/v1/chat/history/{session_name}").json()

        for message in messages:
            with st.chat_message(message["role"]):
                st.markdown(message["message"])

        prompt = st.chat_input("Type a message...")

        if prompt:
            with st.chat_message("user"):
                st.markdown(prompt)

            with st.spinner("..."):
                res = requests.post(f"http://localhost:8000/api/v1/chat/submit/{session_name}",
                                    params={"request": prompt}).json()

            with st.chat_message("assistant"):
                st.markdown(res)

            messages = requests.get(
                f"http://localhost:8000/api/v1/chat/history/{session_name}").json()

Upon providing a chat session name, users can initiate a conversation. Every message interaction calls the backend, with a spinner providing visual feedback during processing.
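Since the session name typed by the user ends up inside the request URL, one optional hardening step is to percent-encode it first. The helpers below are a small sketch under that assumption; `history_url`, `submit_url`, and `BASE_URL` are names introduced here for illustration, while the host, prefix, and route paths are the ones used in the code above.

```python
from urllib.parse import quote

# Same host and prefix as the FastAPI app in this article.
BASE_URL = "http://localhost:8000/api/v1"


def history_url(session_name: str, base_url: str = BASE_URL) -> str:
    """URL of the chat-history route for one session."""
    # safe="" also encodes characters such as "?" that would otherwise
    # cut the path short and start a query string.
    return f"{base_url}/chat/history/{quote(session_name, safe='')}"


def submit_url(session_name: str, base_url: str = BASE_URL) -> str:
    """URL of the submit route for one session."""
    return f"{base_url}/chat/submit/{quote(session_name, safe='')}"


if __name__ == "__main__":
    print(history_url("my chat"))
    print(submit_url("q?x"))
```

In the Streamlit script these could replace the two f-strings, for example `requests.post(submit_url(session_name), params={"request": prompt})`.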

Ready to deploy your chatbot 🤖? Dive into the complete code in this repository:

GitHub – matankley/declarai-chat-fastapi-streamlit: An example how to build chatbot using declarai…

An example how to build chatbot using declarai for interacting with the language model, fastapi as backend server and…

github.com

Stay in touch with Declarai developments 💌. Connect with us on our LinkedIn page, and give us a star ⭐️ on GitHub if you find our tools valuable!

Dive deeper into Declarai’s capabilities by exploring our documentation 📖

Declarai

Declarai, turning Python code into LLM tasks, easy to use, and production-ready. Declarai turns your Python code into…

declarai.com


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.