DeepMind’s AlphaTensor: The AI That Is Reinventing Math

Last Updated on October 10, 2022 by Editorial Team

Author(s): Salvatore Raieli

Originally published on Towards AI.

How DeepMind’s latest model could revolutionize math

Almost everything we do on a computer involves matrix multiplication in one way or another. Computing as a whole relies on it, so improving its efficiency is fundamental. DeepMind (a year after revolutionizing biology with AlphaFold2) published a paper in which it uses reinforcement learning to find more efficient matrix multiplication algorithms. In this article, we discuss how it works and why it matters.

How we still struggle with matrices

Algorithms have been fundamental since the beginning of history. Both the Greeks and the Egyptians invented algorithms that enabled them to succeed in great works. Algorithms are also the basis of modern civilization, and without realizing it, they underpin almost every field of knowledge and its application.

On the other hand, discovering new algorithms is by no means easy (we have all suffered in studying algorithms and data structures, but discovering new ones is even more difficult). One of the most important algorithms today is the multiplication of two matrices. Why?

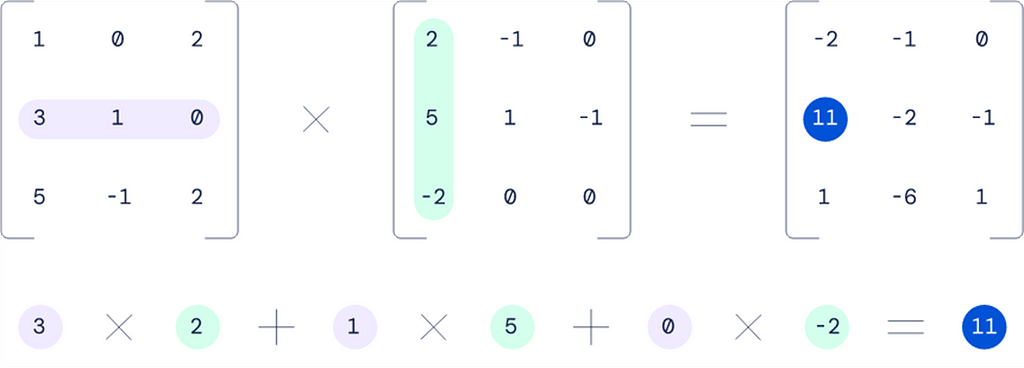

Because practically all types of data can be represented as matrices. Images, for example, can be represented as matrices, and matrices are used to solve systems of linear equations, render video game graphics, run weather simulations, and so on. Furthermore, most artificial intelligence algorithms can be reduced to matrix multiplications (which are then efficiently processed by a GPU).

Matrix multiplication seems like a very simple concept, but given its importance, improving its efficiency even slightly would save an enormous amount of computation. For centuries, mathematicians believed that the standard method was the most efficient one. In 1969, Volker Strassen shocked the community by showing that it is actually suboptimal: two 2×2 matrices can be multiplied with 7 scalar multiplications instead of the usual 8.
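To make the cost of the standard method concrete, here is a minimal sketch of the schoolbook algorithm that also counts scalar multiplications. The function name `schoolbook_matmul` is only illustrative; for two 2×2 matrices the count comes out to 8.

```python
def schoolbook_matmul(a, b):
    """Multiply two matrices (lists of rows) the standard way.

    Returns the product together with the number of scalar
    multiplications that were performed.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")

    c = [[0] * cols for _ in range(rows)]
    mults = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                c[i][j] += a[i][k] * b[k][j]
                mults += 1  # one scalar multiplication per (i, j, k)
    return c, mults


if __name__ == "__main__":
    product, count = schoolbook_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, count)
```

For square n×n inputs the triple loop performs n·n·n multiplications, which is the baseline any faster algorithm has to beat.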

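Now, one fewer scalar multiplication may not seem like a big deal. But if we multiply 1 billion pairs of 2×2 matrices, we save 1 billion scalar multiplications. The limitation was that Strassen’s scheme was found for 2×2 matrices. It can be applied recursively to larger matrices split into blocks, but for most other sizes the minimum number of multiplications remained unknown.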
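As a sketch of where the saved multiplication comes from, the block below writes out Strassen's classic 2×2 scheme in plain Python. The function name `strassen_2x2` is illustrative; the seven products `m1` to `m7` are the textbook ones, and each uses exactly one scalar multiplication.

```python
def strassen_2x2(a, b):
    """Multiply two 2x2 matrices with 7 scalar multiplications
    (Strassen's scheme) instead of the usual 8."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b

    # Seven products, each a single scalar multiplication.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # The entries of the product are recovered using only additions
    # and subtractions of the seven products.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


if __name__ == "__main__":
    print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

The trade-off is more additions and subtractions in exchange for one fewer multiplication.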

How DeepMind solves the problem

DeepMind on Twitter: "ICYMI: On the cover of @Nature – #AlphaTensor, an AI system for discovering novel, efficient, and exact algorithms for matrix multiplication. Learn more ⬇️ https://t.co/E18DezAevb https://t.co/SvHgsaitFt https://t.co/ia9OQYuwZg pic.twitter.com/2eQsBCC9H5"

Strassen’s work showed that matrix-multiplication algorithms can be discovered by finding new ways to decompose a 3D array of numbers called a matrix multiplication tensor into a sum of elementary building blocks. — Nature comments on the article
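The decomposition idea in this quote can be checked directly for the 2×2 case. The sketch below builds the 4×4×4 matrix multiplication tensor and verifies that seven "elementary building blocks" (outer products of three vectors) taken from Strassen's scheme add up to it. The index convention (entries of A, B, and C flattened row by row) and all names are assumptions made for this illustration, not the notation of the paper.

```python
from itertools import product


def matmul_tensor(n):
    """3D tensor T with T[i][j][k] = 1 when entry i of A times entry j
    of B contributes to entry k of C = A @ B (entries flattened row-wise)."""
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for p, q, r in product(range(n), repeat=3):
        t[p * n + q][q * n + r][p * n + r] = 1
    return t


def sum_of_rank_one(factors, size):
    """Add up the outer products u (x) v (x) w of the given factor triples."""
    total = [[[0] * size for _ in range(size)] for _ in range(size)]
    for u, v, w in factors:
        for i, j, k in product(range(size), repeat=3):
            total[i][j][k] += u[i] * v[j] * w[k]
    return total


# One (u, v, w) triple per Strassen product:
# u weights (a11, a12, a21, a22), v weights (b11, b12, b21, b22),
# w says how the product enters (c11, c12, c21, c22).
STRASSEN_FACTORS = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),
]

if __name__ == "__main__":
    print(sum_of_rank_one(STRASSEN_FACTORS, 4) == matmul_tensor(2))
```

The number of triples in the decomposition equals the number of scalar multiplications in the corresponding algorithm, so a shorter decomposition means a faster algorithm.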

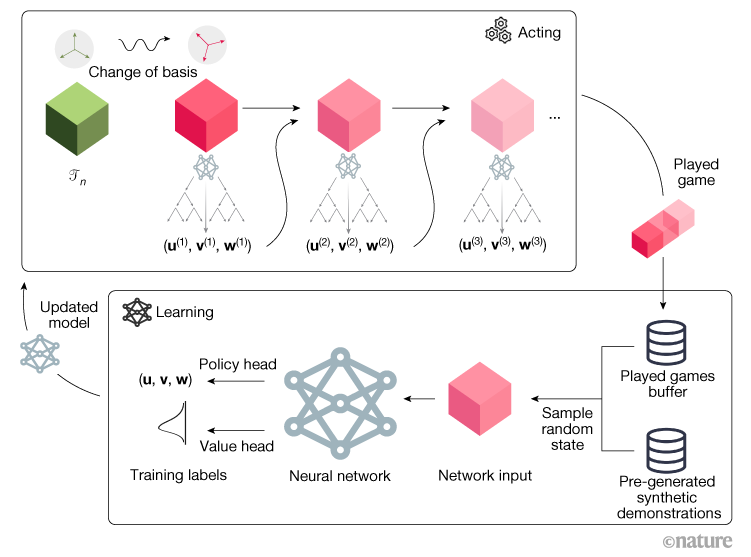

The researchers at DeepMind have turned the problem of matrix multiplication into a kind of single-player game (after all, they are particularly experienced in the field after AlphaZero and AlphaGo). In this case, the board is a three-dimensional tensor (a tensor generalizes a matrix to more dimensions, so a 3D tensor is a three-dimensional array of numbers), and at each step the player makes a move that modifies the tensor, with the goal of zeroing out all its entries. If the player succeeds, the sequence of moves is a correct matrix multiplication algorithm, and its efficiency is given by the number of steps taken to zero out the tensor. So the aim is to zero out the tensor in as few moves as possible. Pretty clever, right?
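The rules of the game described above can be mimicked in a few lines. This is only a toy sketch, not DeepMind's code: there is no learning agent, the moves are supplied by hand, and the names (`apply_move`, `play`, `naive_moves`) and the row-wise flattening of matrix entries are assumptions made for the example. A move is a triple of vectors (u, v, w) whose outer product is subtracted from the current tensor.

```python
from itertools import product


def matmul_tensor(n):
    """Starting board: the n x n matrix multiplication tensor."""
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for p, q, r in product(range(n), repeat=3):
        t[p * n + q][q * n + r][p * n + r] = 1
    return t


def apply_move(state, move):
    """Subtract the outer product of (u, v, w) from the board."""
    u, v, w = move
    size = len(state)
    return [[[state[i][j][k] - u[i] * v[j] * w[k] for k in range(size)]
             for j in range(size)] for i in range(size)]


def is_solved(state):
    return all(x == 0 for plane in state for row in plane for x in row)


def play(state, moves):
    """Return how many moves it took to zero out the board, or None."""
    for step, move in enumerate(moves, start=1):
        state = apply_move(state, move)
        if is_solved(state):
            return step
    return None


def naive_moves(n):
    """One move per scalar product of the schoolbook algorithm."""
    def unit(index):
        return [1 if i == index else 0 for i in range(n * n)]
    return [(unit(p * n + q), unit(q * n + r), unit(p * n + r))
            for p, q, r in product(range(n), repeat=3)]


# Strassen's seven products written as moves.
STRASSEN_MOVES = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),
]

if __name__ == "__main__":
    print(play(matmul_tensor(2), naive_moves(2)))
    print(play(matmul_tensor(2), STRASSEN_MOVES))
```

Playing the schoolbook moves clears the 2×2 board in 8 steps, while Strassen's moves clear it in 7, which is exactly the sense in which fewer moves means a faster algorithm.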

The researchers used reinforcement learning to ‘play’ this game. As described in the paper, the system can be seen as an adapted version of AlphaZero (whose agent learned to win at Go, chess, and other games). For this reason, the model was called AlphaTensor.

The problem described in these terms sounds simple, but as the DeepMind researchers explain, the number of potential combinations is enormous:

This game is incredibly challenging – the number of possible algorithms to consider is much greater than the number of atoms in the universe, even for small cases of matrix multiplication. Compared to the game of Go, which remained a challenge for AI for decades, the number of possible moves at each step of our game is 30 orders of magnitude larger (above 10^33 for one of the settings we consider). – DeepMind Blogpost
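To get a feel for how a number of this size can arise, here is a back-of-the-envelope count. It assumes, purely for illustration, that a move consists of three vectors, that each vector has one entry per matrix element (16 for 4×4 matrices), and that each entry is chosen from the five values {-2, -1, 0, 1, 2}. These settings are assumptions for the example and are not taken from this article.

```python
def count_moves(entries_per_vector, coefficient_choices):
    """Number of distinct (u, v, w) triples when every entry of the three
    vectors is picked independently from a fixed set of coefficients."""
    return coefficient_choices ** (3 * entries_per_vector)


if __name__ == "__main__":
    # Hypothetical setting: 4x4 matrices (16 entries per vector),
    # coefficients drawn from {-2, -1, 0, 1, 2} (5 choices).
    moves = count_moves(entries_per_vector=16, coefficient_choices=5)
    print(f"{moves:.2e}")
    print(moves > 10**33)
```

Under these assumptions there are 5^48 possible moves per step, which is indeed above 10^33.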

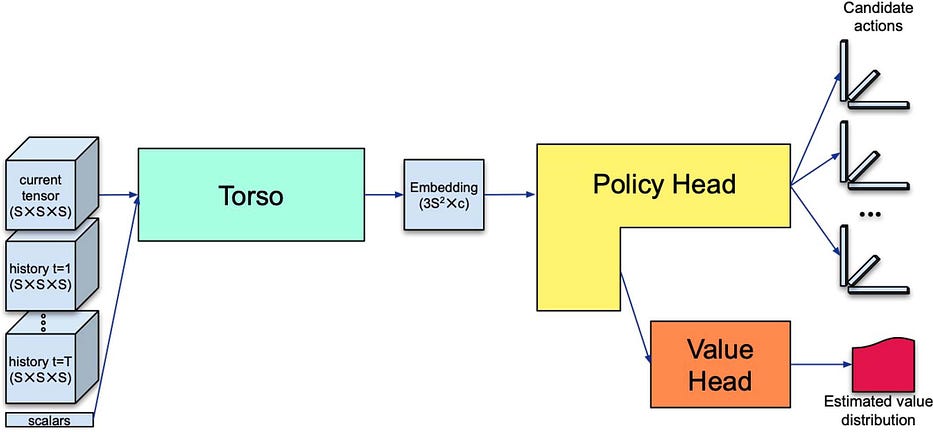

To succeed, the authors used a new type of architecture incorporating problem-specific inductive biases; they also used synthetic data and known properties of the problem (its symmetries). More specifically, the researchers used a transformer-based architecture (with cross-attention, causal self-attention, and so on; detailed images of the structure are available in the paper). The model was then trained using reinforcement learning; its input is the current state of the 3D tensor together with the previous actions.

At the beginning of training, the model has no knowledge of existing matrix multiplication algorithms, but it improves as training progresses. Interestingly, AlphaTensor first rediscovers algorithms that are already known and then finds previously unknown ones (in practice surpassing human intuition).

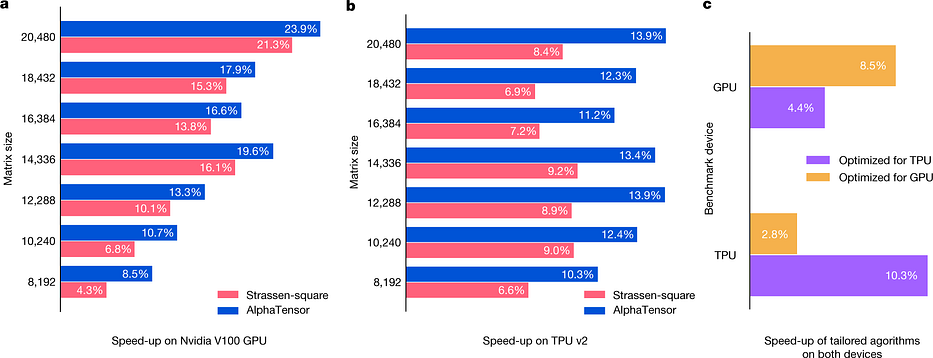

This resulted in the discovery of algorithms that multiply large matrices 10–20% faster than those commonly used on that piece of hardware. — source

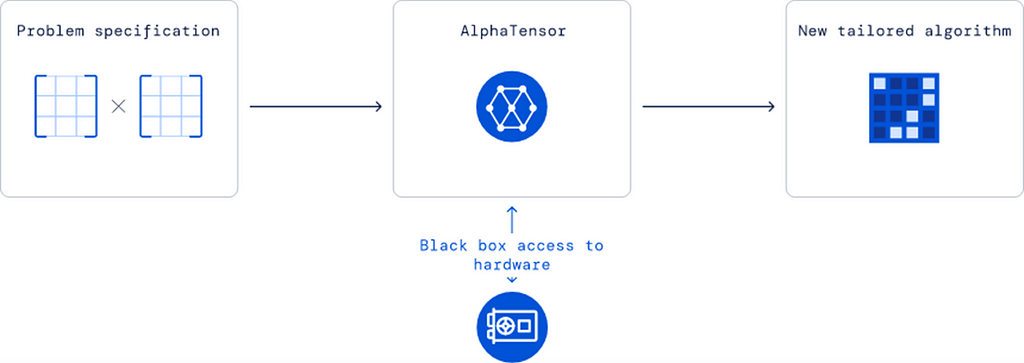

Another interesting result is that the space of matrix multiplication algorithms is richer than previously thought. This sounds like mathematical jargon, but in practice it means that the authors were able to adapt AlphaTensor to search for algorithms that are more efficient for a specific use case, for example depending on whether the multiplication runs on a GPU or a TPU.

Parting thoughts

DeepMind announced the publication of the article with a tweet and immediately there was hype in the scientific community.

DeepMind on Twitter: "Today in @Nature: #AlphaTensor, an AI system for discovering novel, efficient, and exact algorithms for matrix multiplication – a building block of modern computations. AlphaTensor finds faster algorithms for many matrix sizes: https://t.co/E18DezRPTL & https://t.co/SvHgsa0SNV 1/ pic.twitter.com/bsVEAljvSQ / Twitter"

Certainly, the result is remarkable in itself because one of the most basic and fundamental algorithms of computing has been made more efficient, something researchers have kept working on since Strassen’s 1969 result. Moreover, this discovery was not due to human intuition but to an algorithm (the authors have made the code and the discovered algorithms publicly available).

The authors claim that even faster algorithms can be found. So this is only the beginning: the authors want to extend the research to other related problems, such as non-negative matrix factorization.

In any case, this also seems to be the beginning of a new era in which researchers in mathematics will be assisted by algorithms. In addition, more efficient algorithms make computation cheaper, which allows for larger models, which can in turn help discover better algorithms, in a kind of positive feedback loop. Reducing the computational cost of models also allows those who do not have state-of-the-art infrastructure to use models with many parameters.

