Create your Mini-Word-Embedding from Scratch using Pytorch

Last Updated on July 19, 2023 by Editorial Team

Author(s): Balakrishnakumar V

Originally published on Towards AI.

1. CBOW:

Illustration by Author

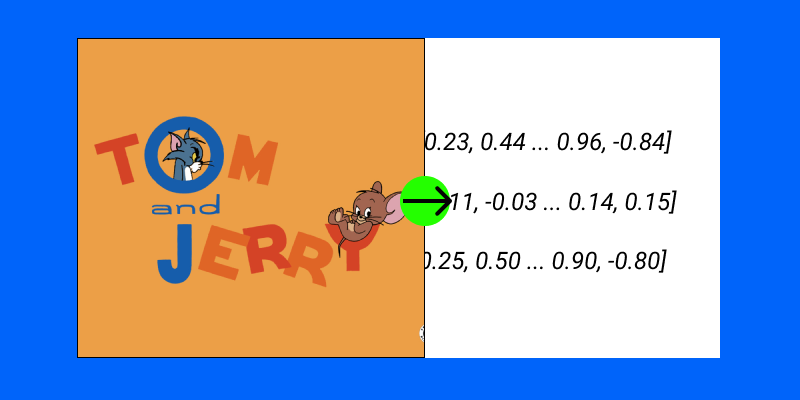

Put simply, the embedding of a word is a vector representation of that word: the word starts out in a higher-dimensional space (for example, a one-hot vector as long as the vocabulary) and is projected into a lower-dimensional one. Words with similar meanings, e.g. “Joyful” and “Cheerful”, and other closely related words, e.g. “Money” and “Bank”, get nearby vector representations when projected into the lower dimension.

The transformation from words to vectors is called word embedding.
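To make the word-to-vector mapping concrete, here is a minimal sketch using PyTorch's `nn.Embedding`. The four-word vocabulary, the dimension of 3, and the helper `embed` are assumptions chosen for illustration. The vectors are randomly initialized, so the similarity printed here carries no meaning until the layer has been trained.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical toy vocabulary; words and sizes are illustrative only.
vocab = ["joyful", "cheerful", "money", "bank"]
word_to_ix = {word: ix for ix, word in enumerate(vocab)}

embedding_dim = 3  # assumed size of the lower-dimensional space
embedding = nn.Embedding(num_embeddings=len(vocab), embedding_dim=embedding_dim)


def embed(word):
    """Look up the (currently untrained) vector for a word."""
    ix = torch.tensor([word_to_ix[word]], dtype=torch.long)
    return embedding(ix).squeeze(0)


joyful_vec = embed("joyful")
cheerful_vec = embed("cheerful")

# Cosine similarity is one common way to measure how close two vectors are.
similarity = F.cosine_similarity(joyful_vec, cheerful_vec, dim=0)
print(joyful_vec.shape, similarity.item())
```

After training, related words such as "joyful" and "cheerful" would be expected to score a higher cosine similarity than unrelated pairs.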

So the underlying concept in creating a mini word embedding boils down to training a simple Auto-Encoder on some text data.
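The idea can be sketched as a small CBOW-style network: an embedding layer encodes the context words, and a linear layer decodes their average back into a prediction of the center word. This is not the author's code from the full article. The toy corpus, the window size, the embedding dimension, and the training settings below are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Made-up toy corpus; a real embedding needs far more text.
corpus = "the bank keeps money safe and the bank lends money".split()
vocab = sorted(set(corpus))
word_to_ix = {word: ix for ix, word in enumerate(vocab)}

WINDOW = 2         # assumed: context words taken on each side of the target
EMBEDDING_DIM = 8  # assumed size of the learned vectors


def build_pairs(tokens, window):
    """Return (context indices, target index) pairs for CBOW training."""
    pairs = []
    for i in range(window, len(tokens) - window):
        context = tokens[i - window:i] + tokens[i + 1:i + window + 1]
        pairs.append(([word_to_ix[w] for w in context], word_to_ix[tokens[i]]))
    return pairs


pairs = build_pairs(corpus, WINDOW)
contexts = torch.tensor([c for c, _ in pairs], dtype=torch.long)
targets = torch.tensor([t for _, t in pairs], dtype=torch.long)


class CBOW(nn.Module):
    def __init__(self, vocab_size, embedding_dim):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, embedding_dim)  # encoder
        self.decoder = nn.Linear(embedding_dim, vocab_size)        # decoder

    def forward(self, context_ids):
        # Average the context word vectors, then score every vocabulary word.
        hidden = self.embeddings(context_ids).mean(dim=1)
        return self.decoder(hidden)


model = CBOW(len(vocab), EMBEDDING_DIM)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)

losses = []
for epoch in range(100):
    optimizer.zero_grad()
    loss = loss_fn(model(contexts), targets)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

# The trained embedding matrix is the "mini word embedding": one row per word.
word_vectors = model.embeddings.weight.detach()
print(word_vectors.shape, losses[0], losses[-1])
```

Once training finishes, the decoder can be discarded; the rows of `word_vectors` are the learned representations.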

Before we proceed to our creation of mini word embedding, it’s good to brush up our… Read the full blog for free on Medium.

