Code Reproducibility Crisis in Science And AI

Last Updated on July 25, 2023 by Editorial Team

Author(s): Salvatore Raieli

Originally published on Towards AI.

Saving AI and scientific research requires we share more

Modern science suffers from a reproducibility problem. Typically, the output of a scientific project is to publish research in a prestigious journal. After that is finished, one moves on to a new project. From microbiology to astrophysics, more and more papers present results that are not reproducible. It is said that the difference between superstition and science is that the latter is reproducible, but this difference is becoming more and more subtle.

A great many articles have addressed this issue, and there are many discussions going on. In this article, the focus is not on the difficulty of reproducing results, but on the reproducibility and reusability of code and datasets. If you import scikit-learn, NumPy, or Matplotlib at the beginning of your notebook, this article is also about you.

Do not dare to ask

The use of software and code in medicine, biology, and even psychology has exploded in recent years. Almost every scientific article now features code or an amassed dataset. Biology and computer science have even merged into a new discipline called bioinformatics. In addition, many scientific articles use machine learning models (from simple regression to sophisticated convolutional neural networks).

The first problem is that often, even when authors have developed a new algorithm, it is not published in any repository. Not to mention that often the details of the algorithm are deliberately described in a vague way that hinders anyone who wants to reproduce it. In general, a very small percentage of journals require that both the data and the code be published (research showed that only 7 percent of surgical journals required this).

“We want a world where data are routinely being used for discovery, to advance science, for evidence-based and data-driven policy. There are data sets that drive entire fields, and the field of research would not be where it is without these open data sets that are driving it.” — Daniella Lowenberg (source)

So often, the result is to read in the methods: data not available. On the other hand, journals that require in their guidelines that data be shared make do with a vague statement such as “available upon request” or, my favorite, “data and code available upon reasonable request.” Apparently, many of the requests are not reasonable because very often no response is obtained.

Victoria Stodden tested this system by writing to the authors of 200 articles published in Science to obtain raw data and code. Her team received responses only to a minimal extent and could replicate the results in only 26% of the cases.

The methods are also uninformative: a Mayo Clinic study that analyzed 225 articles found that only 12 contained enough detail to reproduce how the statistical tests were conducted. Not to mention the articles where more sophisticated algorithms are used and the model is described in succinct terms (often something like “we used a convolutional neural network to…”).

“if you retyped or cut and pasted the code from the PDF, it wouldn’t do what it was supposed to do. What they published was almost the right code, but it obviously wasn’t the code that they had used” — Lumley (source)

In addition, another reason why researchers are reluctant to publish datasets is that they do not get citations. In a world where their career depends on the number of citations, better to keep it to themselves and use it for another paper.

The library of forgotten codes

Scientific research is based on open-source software. In 2019, an image of a black hole was published that surprised and fascinated the world. Few know that among the libraries used for that image is Matplotlib.

Data science and other research fields rely on open-source libraries in Python or R. Underlying many of these libraries are students, Ph.D. students, and postdocs. Often, the code is developed by students who have not received adequate training and do not know the best documentation and testing practices. Researchers often spend hours and hours trying to fix bugs or compatibility issues without finding adequate documentation or answers to their questions.

“They try to find it and there’s no website, or the link is broken, or it no longer compiles, or crashes when they’ve tried to run it on their data.” — source

In addition, much of this code was written with abandoned libraries and deprecated functions, a situation known as “dependency hell.” The code is then published by a Ph.D. student or postdoc who is at the end of their contract, and it is abandoned to its fate.
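One modest way to soften dependency hell is to record the exact environment a result was produced with. The sketch below is only an illustration, not a method from any of the cited sources: the function names `snapshot_environment` and `to_requirements` are hypothetical, and it assumes that pinning package versions is enough for your case (it will not capture system libraries or hardware).

```python
import json
import platform
import sys
from importlib import metadata


def snapshot_environment(packages):
    """Return the Python version, platform, and installed version of each package.

    Packages that are not installed are recorded as None instead of raising.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "packages": versions,
    }


def to_requirements(snapshot):
    """Format the installed packages as pinned `name==version` lines."""
    return [
        f"{name}=={version}"
        for name, version in sorted(snapshot["packages"].items())
        if version is not None
    ]


if __name__ == "__main__":
    # The package list is an example; replace it with what your analysis imports.
    snap = snapshot_environment(["numpy", "scikit-learn", "matplotlib"])
    print(json.dumps(snap, indent=2))
    print("\n".join(to_requirements(snap)))
```

Saving this output next to the published code gives a future reader at least a starting point for rebuilding the environment.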

As Nelle Varoquaux, co-developer of scikit-learn, acknowledges, the field is evolving rapidly. The needs of a library are evolving too: datasets are getting larger and larger and, for example, may no longer fit in RAM.

As mentioned, the code is often poorly documented. Many researchers prefer to publish code on their personal GitHub pages rather than in repositories such as CRAN and Bioconductor for R, or PyPI for Python.

In addition, research often lacks funding. Agencies often undervalue code maintenance, and the funds that are available are few. In grant applications, research groups omit the request for a software engineer, knowing that it is unlikely to be approved. For example, soon after the black hole image was published, funds were requested to support the SciPy ecosystem that made it possible, and the grant was rejected.

The artificial intelligent being who could not reproduce itself

Does data science fare any better? One would think that those who make code their business should know the importance of reproducibility and best practices for documentation.

In fact, the situation is not rosy in data science either. Not all articles publish the code or the details of their algorithm. Sometimes, when developing a new algorithm, it is difficult to compare it with a benchmark or the state of the art. As one Ph.D. student at the University of Montreal recounts, they tried to reproduce the code of another algorithm to compare it with their own: “We tried for 2 months and we couldn’t get anywhere close.”

“I think people outside the field might assume that because we have code, reproducibility is kind of guaranteed. Far from it” — Nicolas Rougier (source)

Forty-three percent of articles accepted at major conferences cannot be reproduced. This is the result of a survey conducted by Open Review, and technically these articles have passed a double-blind review. Moreover, State-of-AI reported that only 15% of scientific articles share their code.

Sometimes code is not present, methods are obfuscated on purpose, or only pseudocode is presented. Not to mention that, even with the code, it would be difficult to reproduce the model without the correct hyperparameters and the original dataset. It has also been pointed out that merely changing the random seed can at times change the performance of the model quite a bit.
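The point about random seeds can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `toy_experiment` is a hypothetical stand-in for a real training run, and its score is simulated noise rather than a real metric. The idea is only that a fixed seed makes a run repeatable, while reporting the spread over several seeds shows how much of a result is luck.

```python
import random
import statistics


def toy_experiment(seed):
    """Hypothetical stand-in for a training run.

    A private generator seeded with `seed` plays the role of random
    initialisation and data shuffling; the returned score is simulated.
    """
    rng = random.Random(seed)
    return 0.80 + rng.uniform(-0.05, 0.05)


def summarize(seeds):
    """Run the toy experiment once per seed and report mean and spread."""
    scores = [toy_experiment(seed) for seed in seeds]
    return {
        "scores": scores,
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores),
    }


if __name__ == "__main__":
    # Same seed, same score: the run is repeatable.
    print(toy_experiment(0) == toy_experiment(0))
    # Different seeds: report the spread, not a single lucky number.
    print(summarize(range(5)))
```

In a real project the same pattern would apply to every source of randomness in use, and the seeds themselves would be published with the results.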

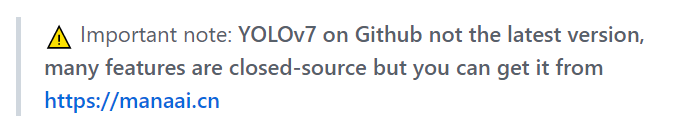

For example, one of the most widely used models is YOLO, which is now up to its seventh version. The code is on GitHub, but just scroll through the README (the main page) to find:

The excuses for not sharing models vary widely: the code is based on unpublished code, the code is protected by commercial interests, or the algorithm is too dangerous (OpenAI and Google’s favorite excuse).

In fact, Google Health published an article in 2020 where it claimed that its AI was capable of detecting breast cancer lesions in images. The article presented so little information about the code that 31 irritated scientists published a letter in Nature in which they practically branded Google’s article not as a scientific article but as an advertisement for a product. In the letter, they practically stated that artificial intelligence is facing a reproducibility crisis.

“When we saw that paper from Google, we realized that it was yet another example of a very high-profile journal publishing a very exciting study that has nothing to do with science. It’s more an advertisement for cool technology. We can’t really do anything with it.” - Haibe-Kains (source)

“Is that even research anymore? It’s not clear if you’re demonstrating the superiority of your model or your budget.” — Anna Rogers (source)

This is no small matter, as the various AI models have real impact and applications. If researchers cannot explore code and models, they cannot be assessed for robustness, bias, and safety.

In any case, being able to replicate a model is no small task. Especially for large models, the computation and expertise required to replicate a model from its methods section are considerable. In fact, Facebook itself had difficulty replicating the results of AlphaGo. The team claimed that succeeding without the available code was an exhausting task (they literally wrote in the article that the task was “very difficult, if not impossible, to reproduce, study, improve upon, and extend”). And if it is difficult for a multinational company with a huge budget, imagine independent laboratories.

The data science community does not like to share datasets either, not least because this could expose embarrassing errors. For example, several image-generation models were trained on a dataset called LAION, which is far from perfect. First, it was obtained by massively downloading from the web without asking for any permission. Moreover, most of the images are the work of artists and photographers who would not have imagined that their works would be used to train AI models. The dataset was even found to contain medical images that should not have been there, photoshopped celebrity porn, stolen nonconsensual porn, and even images of executions carried out by terrorists.

The legacy to those who come after us

In general, the idea of many researchers is that if you haven’t made the most of a dataset, you don’t release it. Without the Iris dataset, though, who among us would have learned to program? To balance these interests, NASA grants Hubble Space Telescope users a period of exclusivity before the data are published in a public repository and the whole community can benefit (for the Webb Space Telescope the window is one year).

Today, many journals also require genomics data to be published in dedicated repositories (dbGaP, GEO DataSets, and so on). Access to the data is protected by a formal procedure, since it is patient medical data and potentially privacy-threatening.

Even funding agencies are realizing that data needs to be shared and that curation is important. In 2020, the National Institutes of Health (NIH) required that grant applications with a data component specify a data management and sharing plan.

Then there are the Gordon and Betty Moore Foundation, the Alfred P. Sloan Foundation, and the Chan Zuckerberg Initiative, which fund open-source software (for example, the last one funds scikit-image, NumPy, and the ImageJ and Fiji platforms). In addition, Schmidt Futures (an organization founded by former Google chief executive Eric Schmidt) recently decided to fund a network of engineers dedicated to maintaining scientific software (including models related to global warming and ecological changes).

There are also journals that specialize in describing and sharing datasets (such as Scientific Data). Not to mention that repositories such as Zenodo allow you to associate a DOI with code and data and make them citable as well.
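When depositing a dataset in a repository, it can help to publish checksums alongside it so that anyone who downloads the files can confirm they have exactly what was analyzed. The following is a minimal sketch under that assumption; the helper names `sha256_of_file` and `build_manifest` are hypothetical, and no particular repository is claimed to require this.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(directory):
    """Map each file under `directory` (by relative path) to its SHA-256 digest."""
    root = Path(directory)
    return {
        path.relative_to(root).as_posix(): sha256_of_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }
```

Calling `build_manifest("data")` on a hypothetical `data` directory returns a dictionary that can be saved as JSON and shipped with the deposit.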

What about the code? Meanwhile, there are projects that aim to solve data illiteracy. In fact, there are organizations such as Software Carpentry and the eScience Institute (University of Washington) that organize boot camps. Or the Netherlands eScience Center, which has made available guidelines.

Universities are beginning to realize that models and code written by students and postdocs may be good enough for publication but are not maintainable. Until now, libraries were often maintained on a voluntary basis, but this is not sustainable. Therefore, English universities have created the position of research software engineer, who is responsible for working on software that is important for academic research.

Also, we recommend using containers (such as Docker) for all published code so that it can run without compatibility issues. There are also container repositories specifically for data and biological code (such as bioflow, RNASeq pipeline, and pipelines in R or Python). Journals such as Nature Machine Intelligence require that the code be available on Code Ocean or Google Colab so that reviewers can run it during peer review.

Leading scientific conferences are also paying more attention to the presence of code, to better check the methods. Many encourage authors to state which data was used alongside the description of the code. NeurIPS has also started linking to the code when it is available. Moreover, not only does the community often try to replicate published models, but there is also an initiative called ReScience that publishes replications of AI models.

Parting thoughts

The founding principle of science is the ability to replicate results. Today science is increasingly based on the use of large datasets, analysis software, and machine learning models. Both data science and science in general need data and models that are available and reusable. Also, the main libraries that you import into your code (scikit-learn, NumPy, and Matplotlib, to give a few examples) are developed and maintained by researchers and volunteers, and this is not always sustainable.

Algorithms have and will have more and more real-life applications and impacts, so it is critical that they are tested by independent labs. Not to mention that the training dataset has a huge impact on the potential bias, and this should also be discussed (and once a model is trained it is hard to make it forget what it should not have known).

When a model is open source, it can be reused. AlphaFold2 would not have had the same impact if it had not been made open source and its predictions made available to the community. For this same reason, we will see many more applications based on Stable Diffusion than on DALL-E.

The good news is that researchers, universities, and funding agencies have realized this.

