Centroid Neural Network and Vector Quantization for Image Compression

Last Updated on January 7, 2023 by Editorial Team

Author(s): LA Tran

Deep Learning

Let’s highlight a promising algorithm that gets too little attention

The centroid neural network (CentNN) has been shown to be an efficient and stable clustering algorithm and has been applied successfully to various problems. In most cases, CentNN produces more accurate clustering results than K-Means and the Self-Organizing Map (SOM). Its main limitation is that it needs more time to converge, because it starts with 2 clusters and grows gradually until it reaches the desired number of clusters.

This situation is not unusual. The theory of artificial neural networks (ANNs) was introduced in 1943, but it only became popular after backpropagation was derived in the early 1960s and implemented on computers around 1970. Similarly, the idea behind Hinton’s Capsule Network (CapsNet) had been mulled over for almost 40 years before dynamic routing was released in 2017. From my perspective, CentNN faces the same issue: its theory is already robust, and it only needs a faster way to run in order to excel. You can find my explanation of CentNN here and my tutorial on implementing the algorithm here.

In this post, I apply the CentNN algorithm to the image compression problem and show how it compares to K-Means.

Image Compression

Image compression is a form of data compression applied to digital images, with the purpose of minimizing the cost of transmission and storage. Clustering and vector quantization are two widely used methods for this purpose.

Vector quantization is a lossy data compression technique. It divides a large set of data points into a predetermined number of clusters, so that each data point is assigned to the cluster whose centroid is closest to it and is then represented by that centroid. Clustering methods are therefore used to carry out the quantization. You can find a more straightforward explanation of vector quantization with the K-Means algorithm for image compression in Mahnoor Javed’s post here.

At this point, I assume you understand the CentNN and K-Means algorithms, as well as image compression using clustering and vector quantization. Again, you can find my explanation of CentNN here and my tutorial on implementing the algorithm here. Now, let’s move on to today’s main story.
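To make the idea concrete, here is a minimal NumPy sketch of the quantization step itself: every vector is replaced by the index of its nearest centroid (codeword), and decoding is just a lookup. The function names `quantize` and `reconstruct` and the toy data are my own placeholders, not taken from any library or from the posts mentioned above.

```python
import numpy as np

def quantize(vectors, codebook):
    """Return, for each vector, the index of its nearest codeword (Euclidean)."""
    # Pairwise squared distances, shape (n_vectors, n_codewords).
    d2 = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def reconstruct(indices, codebook):
    """Decode: replace every index by its codeword (this is the lossy part)."""
    return codebook[indices]

# Toy data: four 2-D points and a codebook with two codewords (assumed values).
vectors = np.array([[0.0, 0.0], [1.0, 1.0], [9.0, 9.0], [10.0, 10.0]])
codebook = np.array([[0.5, 0.5], [9.5, 9.5]])

indices = quantize(vectors, codebook)
approx = reconstruct(indices, codebook)
```

Only `indices` and `codebook` need to be stored or transmitted, which is where the compression comes from.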

Centroid Neural Network for Image Compression

In this post, I use the classical Lena image as the sample for image compression experiments with CentNN, and I also obtain K-Means results for comparison. I compare CentNN and K-Means on 2 types of quantization: scalar quantization and block (vector) quantization. First, let’s load the sample image and apply a few pre-processing steps:

import cv2

import matplotlib.pyplot as plt

image = cv2.imread("images/lena.jpg", cv2.IMREAD_GRAYSCALE)

image = cv2.resize(image, (128, 128))

plt.imshow(image, cmap="gray")

plt.show()

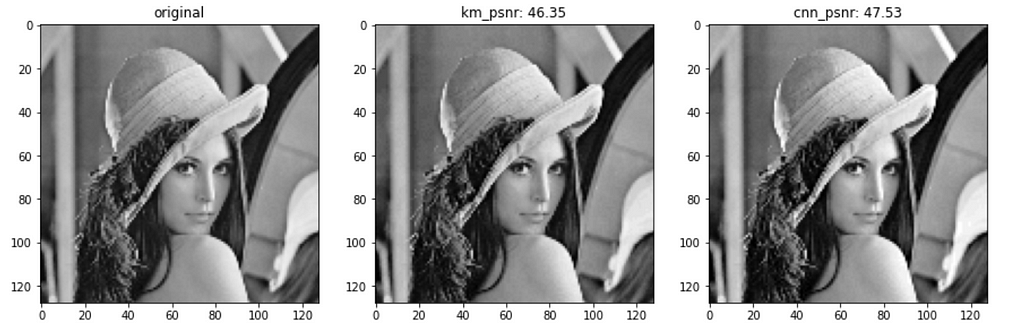

1. CentNN + Scalar Quantization

The number of clusters is set to 48 in this experiment. First, call the K-Means function from sklearn and implement a sub-routine for quantization:
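A minimal sketch of this step could look as follows, assuming scikit-learn’s `KMeans` and treating every pixel as a 1-D sample. The helper name `kmeans_scalar_quantize`, the random demo image, and the small cluster count are placeholders of mine to keep the example quick; the experiment itself uses the Lena image and 48 clusters.

```python
import numpy as np
from sklearn.cluster import KMeans

def kmeans_scalar_quantize(image, n_clusters, seed=0):
    """Cluster gray levels; return (codebook, per-pixel codeword indices)."""
    # Each pixel becomes one 1-D sample.
    pixels = image.reshape(-1, 1).astype(np.float64)
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit(pixels)
    codebook = km.cluster_centers_.ravel()      # one gray level per cluster
    indices = km.labels_.reshape(image.shape)   # same layout as the image
    return codebook, indices

# Stand-in for the real image (assumption: any 2-D uint8 array works).
rng = np.random.default_rng(0)
demo = rng.integers(0, 256, size=(32, 32)).astype(np.uint8)

codebook, indices = kmeans_scalar_quantize(demo, n_clusters=8)
# Decode: look up each index, then round back to valid 8-bit gray levels.
reconstructed = np.clip(np.rint(codebook[indices]), 0, 255).astype(np.uint8)
```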

We keep this result for the comparison later.

Now let’s implement the CentNN algorithm to quantize our sample image. Below is my full implementation with explanatory comments; you can find an easy-to-use version wrapped in a function in my GitHub repo here. If you are not interested in this much code, just skip it and jump to the results, no hard feelings.

Now we can decode the quantized data, compute the peak signal-to-noise ratio (PSNR), and display the results:

With the settings described above, the image reconstructed by CentNN (PSNR 47.53 dB) has better quality than the one reconstructed by K-Means (PSNR 46.35 dB).
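For readers who only want the gist, here is a simplified sketch of the CentNN idea as I understand it from the description earlier in this post: start with 2 centroids, let each sample move between clusters while the winner and the loser centroids are updated as running means, and grow by one cluster whenever an epoch finishes without changes. This is not the implementation from my repo; the function name `centnn`, the `eps` and `max_epochs` parameters, and the rule of splitting the cluster with the largest squared error are assumptions made for this illustration.

```python
import numpy as np

def centnn(data, n_clusters, eps=1e-3, max_epochs=100):
    """Simplified CentNN sketch. data has shape (n_samples, n_features)."""
    data = np.asarray(data, dtype=np.float64)
    mean = data.mean(axis=0)
    # Start with two centroids placed slightly on either side of the data mean.
    weights = np.stack([mean + eps, mean - eps])
    counts = np.zeros(2, dtype=int)     # number of members per cluster
    labels = np.full(len(data), -1)     # -1 means "not assigned yet"

    while True:
        for _ in range(max_epochs):
            changed = 0
            for i, x in enumerate(data):
                winner = int(((weights - x) ** 2).sum(axis=1).argmin())
                loser = labels[i]
                if winner == loser:
                    continue
                # Winner gains x: move its centroid to the new running mean.
                weights[winner] += (x - weights[winner]) / (counts[winner] + 1)
                counts[winner] += 1
                # Previous owner (if any) loses x: undo its contribution.
                if loser >= 0:
                    if counts[loser] > 1:
                        weights[loser] -= (x - weights[loser]) / (counts[loser] - 1)
                    counts[loser] -= 1
                labels[i] = winner
                changed += 1
            if changed == 0:            # no sample switched cluster
                break
        if len(weights) >= n_clusters:
            return weights, labels
        # Grow: duplicate (with a small offset) the cluster with the largest error.
        errors = np.zeros(len(weights))
        np.add.at(errors, labels, ((data - weights[labels]) ** 2).sum(axis=1))
        worst = int(errors.argmax())
        weights = np.vstack([weights, weights[worst] + eps])
        counts = np.append(counts, 0)

# Toy 1-D example with two obvious groups (assumed values).
toy = np.array([[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]])
toy_weights, toy_labels = centnn(toy, n_clusters=2)
```

For scalar quantization, `data` would be the pixels reshaped to `(n_pixels, 1)`; the returned `weights` play the role of the codebook and `labels` the role of the indices.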
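For reference, PSNR for 8-bit images is commonly computed as 10·log10(255² / MSE), in decibels, where MSE is the mean squared error between the original and the reconstructed image. A minimal sketch, with a hypothetical helper name `psnr` and toy arrays that are not the experiment’s data:

```python
import numpy as np

def psnr(original, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    # Cast first so uint8 subtraction cannot wrap around.
    original = np.asarray(original, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    mse = np.mean((original - reconstructed) ** 2)
    if mse == 0:
        return float("inf")     # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

# Toy check: one of 16 pixels is off by 16, so MSE = 16**2 / 16 = 16.
a = np.full((4, 4), 100, dtype=np.uint8)
b = a.copy()
b[0, 0] = 116
value = psnr(a, b)
```

A higher PSNR means the reconstruction is closer to the original.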

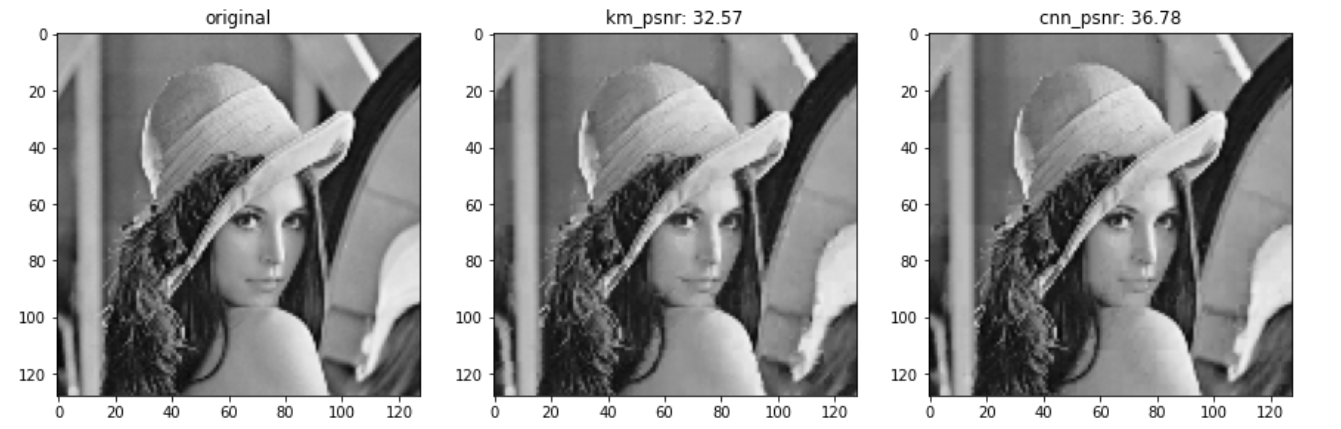

2. CentNN + Block Quantization

In the second experiment, block (vector) quantization, the number of clusters is set to 512 and the block size to 4×4. As in the previous experiment, first call the K-Means function from the sklearn library and implement a sub-routine for block quantization:

Again, we keep the K-Means result for the comparison with CentNN.

Next comes the CentNN code for block quantization. If you are not interested in the code, you can skip the following part and jump to the results.

Decode and display results:

As this result shows, CentNN outperforms K-Means by a significant margin: a PSNR of 36.78 dB versus 32.57 dB.
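A rough sketch of this step: cut the image into non-overlapping 4×4 blocks, flatten each block into a 16-dimensional vector, and cluster those vectors. For the 128×128 image this gives 1024 vectors. The helper name `image_to_blocks`, the random demo image, and the small cluster count are my own placeholders chosen to keep the example fast; the experiment uses 512 clusters.

```python
import numpy as np
from sklearn.cluster import KMeans

def image_to_blocks(image, block=4):
    """Split a 2-D image into non-overlapping blocks, one flattened block per row."""
    h, w = image.shape
    if h % block or w % block:
        raise ValueError("image size must be a multiple of the block size")
    return (image.reshape(h // block, block, w // block, block)
                 .swapaxes(1, 2)               # -> (block_row, block_col, r, c)
                 .reshape(-1, block * block))

# Stand-in for the real image (assumption: any 2-D uint8 array works).
rng = np.random.default_rng(0)
demo = rng.integers(0, 256, size=(32, 32)).astype(np.uint8)

vectors = image_to_blocks(demo).astype(np.float64)    # shape (64, 16)
km = KMeans(n_clusters=8, n_init=10, random_state=0).fit(vectors)
codebook = km.cluster_centers_    # one 16-D codeword per cluster
indices = km.labels_              # one codeword index per block
```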
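Decoding a block-quantized image means looking up the codeword of every block and putting the blocks back in their original positions. A minimal sketch, assuming blocks were flattened row by row and stored in row-major block order; `blocks_to_image`, `decode`, and the toy codebook are hypothetical names and values for illustration:

```python
import numpy as np

def blocks_to_image(vectors, shape, block=4):
    """Reassemble flattened blocks (row-major block order) into a 2-D image."""
    h, w = shape
    return (vectors.reshape(h // block, w // block, block, block)
                   .swapaxes(1, 2)             # -> (block_row, r, block_col, c)
                   .reshape(h, w))

def decode(indices, codebook, shape, block=4):
    """Look up each block's codeword and rebuild an 8-bit image."""
    approx = codebook[indices]
    image = blocks_to_image(approx, shape, block)
    return np.clip(np.rint(image), 0, 255).astype(np.uint8)

# Toy example: an 8x8 image made of four 4x4 blocks and two codewords.
codebook = np.stack([np.full(16, 10.0), np.full(16, 200.0)])
indices = np.array([0, 1, 1, 0])
image = decode(indices, codebook, (8, 8))
```

The decoded image can then be compared with the original using PSNR.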

Conclusions

In this post, I have presented an implementation of the Centroid Neural Network (CentNN) for image compression and compared CentNN with K-Means on this task. In both experiments, scalar quantization and block (vector) quantization, CentNN outperforms K-Means. To me, CentNN is a cool algorithm that somehow does not get much attention. By sharing my writing, I hope to bring such fantastic things to everyone who has fallen in love with machine learning.

You are welcome to visit my Facebook fan page, Diving Into Machine Learning, where I share things related to machine learning.

That’s enough for today. Thanks for reading!

References

[1] Centroid Neural Network: An Efficient and Stable Clustering Algorithm

[2] Centroid Neural Network for Clustering with Numpy

Centroid Neural Network and Vector Quantization for Image Compression was originally published in Towards AI on Medium.
