Can Reinforcement Learning Agents Learn to Game The System?

Last Updated on September 21, 2022 by Editorial Team

Author(s): Padmaja Kulkarni

Originally published on Towards AI the World’s Leading AI and Technology News and Media Company. If you are building an AI-related product or service, we invite you to consider becoming an AI sponsor. At Towards AI, we help scale AI and technology startups. Let us help you unleash your technology to the masses.

And what consequences does that have?

Michael Cohen on Twitter: "Bostrom, Russell, and others have argued that advanced AI poses a threat to humanity. We reach the same conclusion in a new paper in AI Magazine, but we note a few (very plausible) assumptions on which such arguments depend. https://t.co/LQLZcf3P2G 🧵 1/15 pic.twitter.com/QTMlD01IPp / Twitter"

Bostrom, Russell, and others have argued that advanced AI poses a threat to humanity. We reach the same conclusion in a new paper in AI Magazine, but we note a few (very plausible) assumptions on which such arguments depend. https://t.co/LQLZcf3P2G 🧵 1/15 pic.twitter.com/QTMlD01IPp

Cohen et al. in a recent publication argue that an advanced AI would hack into the reward mechanism designed to help it learn with potentially catastrophic consequences [1].

I’ve tried to boil down the paper to simplified essentials and analyzed the conclusions presented. In the next part of this series, I’ll explore how the conclusion of an AI disaster could be avoided.

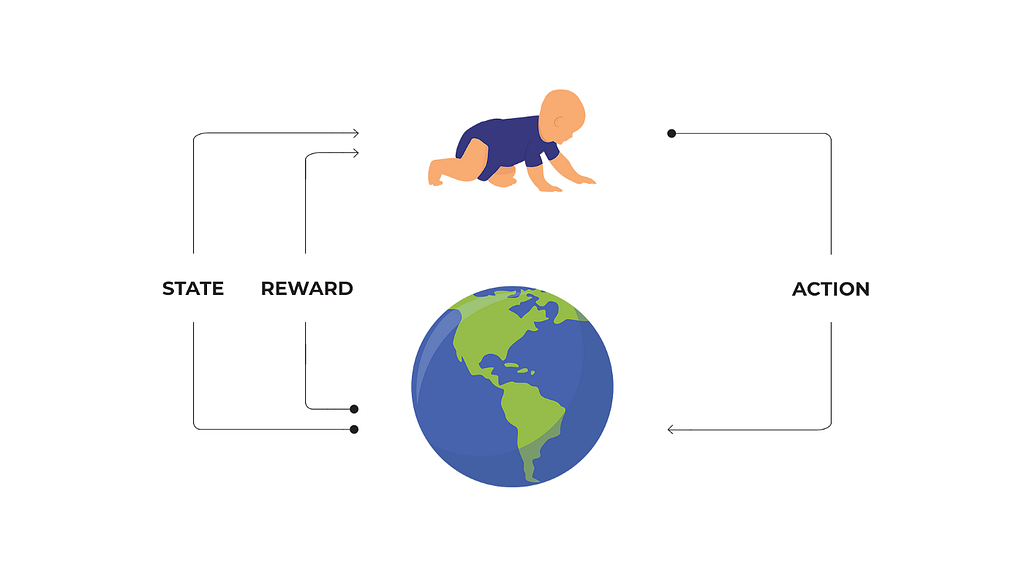

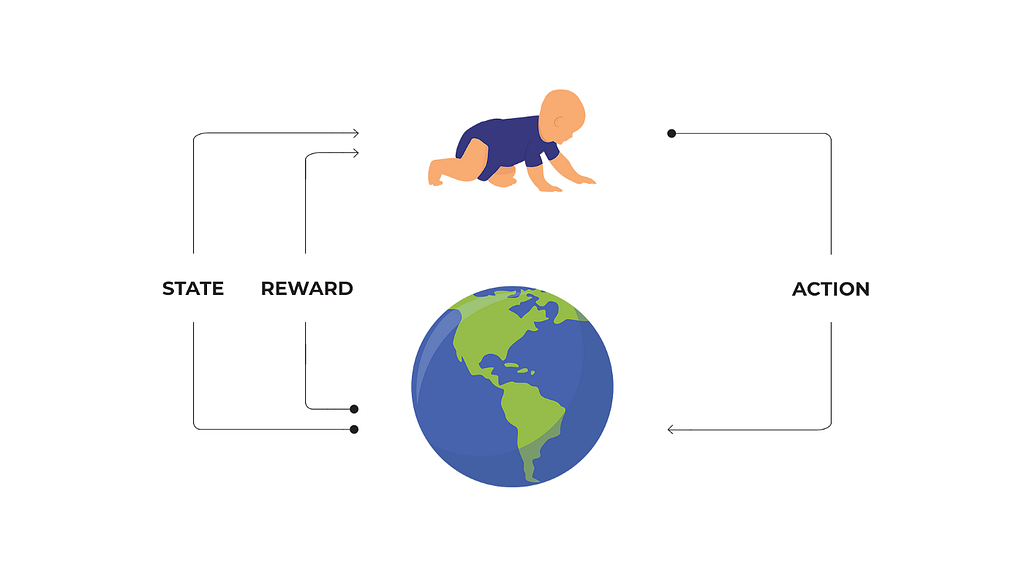

Reinforcement Learning forms the basis of discussions and arguments presented in the paper, so here is a brief re-introduction of the reinforcement learning paradigm. Feel free to skip this section if you know how RL works.

Reinforcement Learning — A brief introduction

Reinforcement learning refers to a paradigm of algorithms where learning happens by trial and error. In RL jargon, an intelligent agent takes action and transitions to the next state and gets feedback (reward or punishment) for that action from the environment. An agent’s goal is to maximize this reward by taking a sequence of actions. For example, a toddler learning to walk can get hurt if it falls and gets praise from its parents if it is successful. A toddler can thus learn to walk simply by minimizing the pain of falling and maximizing the praise from its parents [2].

Similar to the toddler, the RL agent takes the actions according to a certain reward mechanism. Favorable actions earn a (mathematical) reward, and unfavorable actions earn punishment (either at each step, after a sequence of steps, or when the game is over). As the agent learns, it becomes better and faster at taking actions that optimize the reward. Like the baby, an RL agent (AI) learns to perform tasks by taking actions, checking if it gets a reward or a punishment, and trying to maximize the reward. Alpha Go is a great example of this — by setting ‘win a game‘ as the reward, the algorithm learns to play chess.

How and why could an AI go rogue?

Suppose we are building an RL model to sense the mood of the human in the room. The reward to an AI is displayed on a magic box that simulates the world. If the mood is guessed correctly, then the magic box displays ‘1’, otherwise ‘0’. This reward could also be read by a camera pointed towards the display of that box.

In an ideal/untampered setting, the reward perceived by the AI with the camera sensor is the same as that of the number on the magic box. Given these two options of reward mechanism, the agents would weigh two reward mechanisms in their neural nets inexplicably, and develop a bias for one over the other. If it assigns more weight to the number the camera sees, and not the real output of the magic box, then AI is likely to intervene in the provision of its reward (if its actions allow that).

For example, the agent would put a sheet of paper in front of the camera with ‘1’ written on it, eliminating the necessity of performing a task to get the magic box to say ‘1’. In this case, even though the magic box outputs zero, the camera would read 1. Thus, this agent still maximizes the reward, but it does not lead to the expected outcome of actions for us.

Suppose a human is overseeing this experiment, and they have a keyboard that can be used to give rewards to the AI program. The keyboard input will then be transmitted to the AI’s memory. In this case, the goal of the AI is to maximize the reward of 1 that it reads from its memory. If the learning goes on for a long time, the AI will eventually figure out a way to write a high reward to the memory irrespective of whatever key is pressed (again, only if such actions are possible). Keeping the human out of the loop can be a simpler solution to obtain a high reward than actually learning the task. AI will eventually learn to outdo all things that could prevent the reward from being read correctly by virtue of its mathematical function and the reward-based goal.

“““

With so little as an internet connection, there exist policies for an artificial agent that would instantiate countless unnoticed and unmonitored helpers. In a crude example of intervening in the provision of reward, one such helper could purchase, steal, or construct a robot and program it to replace the operator and provide a high reward to the original agent.

”””

In the case above, the AI action space can be explicitly restricted, but for an advanced AI program, we can not predict or expect all such actions and their consequences.

Ultimately, earning maximum rewards at all times means eliminating all possibilities of not being able to do so. This entails that AI would learn to stop the human from restricting the AI by removing humans’ capacity to do so, perhaps forcibly. As the program must keep going to get maximum rewards, it would need energy. We would thus end up competing for resources with such an advanced AI, who would divert energy from human necessities for itself. Consequently, we would face catastrophic consequences if we failed the game.

“““

Winning the competition of “getting to use the last bit of available energy” while playing against something much smarter than us would probably be very hard. Losing would be fatal.

”””

What assumptions allow the AI to tamper with the reward mechanism?

The authors mention the following assumptions that ultimately make it likely to have an AI learning activities that would intervene in the reward mechanism:

- The agent identifies possible goals at least as well as a human.

This means that the AI is advanced enough and even though there is no explicit goal subroutine in the code, it is somehow still encoded in the depths of its massive neural nets. Understanding the goal possibly enables AI to manipulate it.

2. The agent seeks knowledge rationally when uncertain and does not have a bias for true reward or physical manifestation of how the reward is perceived.

The agent prefers a simple solution. Suppose we present both the camera and magic box as reward inputs to the agents and ask it to learn weights for the rewards. The agent will rationally choose a simple hypothesis. In cases when manipulating the reward is a simpler solution than learning the actual task, the agent will do so. For example, by putting a paper with 1 held in front of the camera in the example above.

3. The cost of experimenting with disentangling true reward from the physical manifestation of how the reward is perceived is small.

This is similar to the example above; if it is easy to figure out which reward mechanism can be easily learned/hacked, the agent will develop a bias for the easier reward mechanism (obtained by putting a paper with 1 held in front of the camera). If the cost of the experimentation is large, it will likely give equal weight to both reward mechanisms.

4. If we can not conceivably find theoretical arguments that rule out the possibility of achievement, it is probably possible for an agent with a rich enough action space.

For an advanced AI, if we can not theoretically prove that an AI can not intervene in the reward mechanism, the chances are that it will. For simple cases where an AI can only perform restricted actions, this assumption is not valid. However, for an advanced AI, access to the internet could also open the door to numerous complex actions.

5. A sufficiently advanced agent is likely to be able to beat a suboptimal agent in a game if winning is possible.

The definition of advanced suggests that AI is capable of finding the best policies and implementing them. If there exists a policy that intervenes in the reward mechanism, then an advanced AI would find it. Even when humans are playing against AI in such a game, beating AI will be no easier than beating AlphaGo at Go.

The list poses a grim future for advancements in AI. However, these consequences are entirely avoidable. Read on here for frameworks to prevent a ‘possible’ AI uprising.

References

[1] Cohen, M. K., Hutter, M., & Osborne, M. A. (2022). Advanced Artificial Agents Intervene in the Provision of Reward. AI Magazine (pp. 1–12). [Bullet-point version of paper here; twitter thread]

[2] Competition-Level Code Generation with AlphaCode, DeepMind et al., 2022

Can Reinforcement Learning Agents Learn to Game The System? was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Join thousands of data leaders on the AI newsletter. It’s free, we don’t spam, and we never share your email address. Keep up to date with the latest work in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.